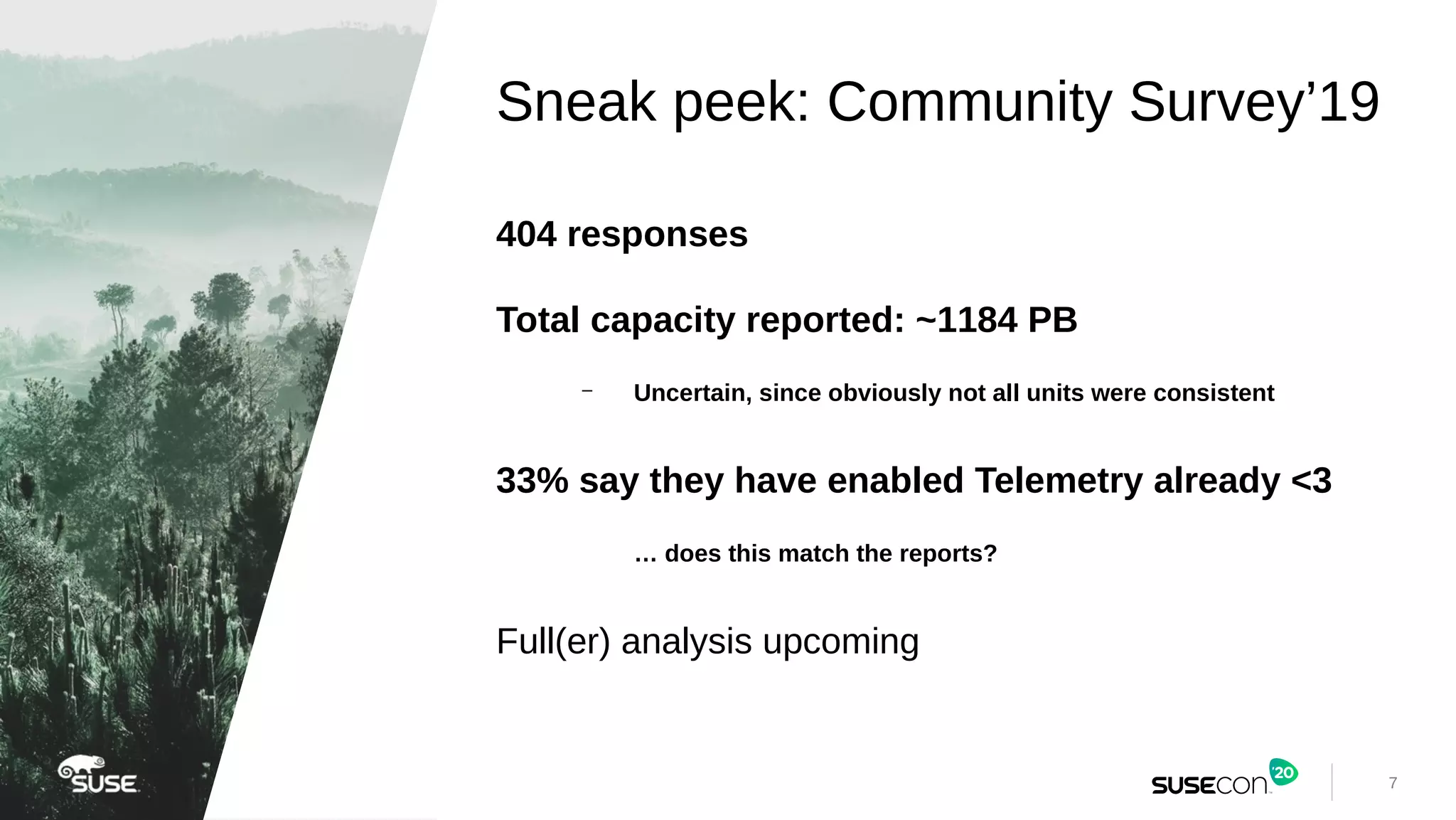

The document explains the benefits of enabling Ceph telemetry, which improves software-defined storage outcomes by making it possible to detect anomalies and trends proactively. It highlights disparities in adoption among users, the reasons users give for not enabling telemetry, and community-survey insights into cluster capacity and Ceph version usage. It also outlines the methodology for collecting and analyzing the data, along with the current limitations of telemetry reporting and planned enhancements.

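![Ceph Telemetry: Improving Software-Defined Storage Outcomes, slide 17](https://image.slidesharecdn.com/sup-312-ceph-telemetry-200326220044/75/Ceph-Telemetry-Improving-Software-Defined-Storage-Outcomes-17-2048.jpg)

Cross-checking this with the survey results:

```python
# `survey` is assumed to be the pandas DataFrame of community-survey
# responses, loaded earlier in the session (not shown on this slide).

# Keep only respondents who report telemetry as enabled.
t_on = survey[survey['Is telemetry enabled in your cluster?'] == 'Yes']

# Total raw capacity reported by those respondents, divided by 10**3.
t_on['Total raw capacity'].agg('sum') / 10**3
# Out: 280.126

# Total number of clusters reported by those respondents.
t_on['How many clusters ...'].agg('sum')
# Out: 308.0
```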
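The cross-check above is a filter-then-sum: keep the respondents who answered "Yes" to the telemetry question, then total their capacity and cluster counts so the totals can be compared with what telemetry itself reports. Below is a minimal, dependency-free sketch of the same steps, assuming each survey response is represented as a small record. The `SurveyResponse` type, its field names, and the `relative_gap` helper are hypothetical illustrations, not part of the original analysis. The toy rows are made up purely to exercise the functions and are not survey data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SurveyResponse:
    # Hypothetical record type: field names are illustrative stand-ins for
    # the survey columns used in the pandas session above.
    telemetry_enabled: str          # "Is telemetry enabled in your cluster?"
    raw_capacity: Optional[float]   # "Total raw capacity"; None if unanswered
    clusters: Optional[int]         # cluster count; None if unanswered


def telemetry_on_totals(responses):
    """Sum capacity (divided by 10**3) and clusters over 'Yes' respondents.

    Unanswered fields (None) are skipped, much as pandas' sum skips NaN.
    """
    t_on = [r for r in responses if r.telemetry_enabled == "Yes"]
    capacity = sum(r.raw_capacity for r in t_on if r.raw_capacity is not None) / 10**3
    clusters = sum(r.clusters for r in t_on if r.clusters is not None)
    return capacity, clusters


def relative_gap(survey_total, telemetry_total):
    """Hypothetical helper: relative difference of a survey total vs. a telemetry total."""
    return (survey_total - telemetry_total) / telemetry_total


# Toy, made-up rows purely to exercise the functions (not survey data).
responses = [
    SurveyResponse("Yes", 1000.0, 2),
    SurveyResponse("No", 500.0, 1),
    SurveyResponse("Yes", 250.0, None),
]
capacity, clusters = telemetry_on_totals(responses)
print(capacity, clusters)  # 1.25 2
```

Skipping unanswered fields matters for survey data: a respondent may report telemetry as enabled but leave the capacity or cluster-count question blank, and counting that as zero would silently understate nothing while still shrinking averages elsewhere, so it is cleaner to exclude the missing value explicitly.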