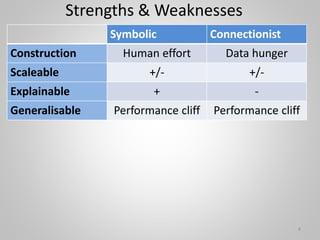

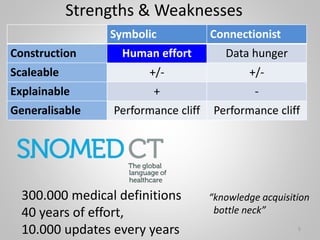

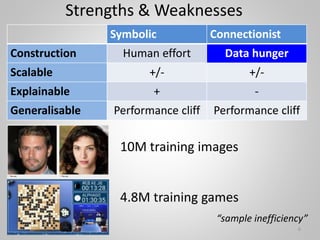

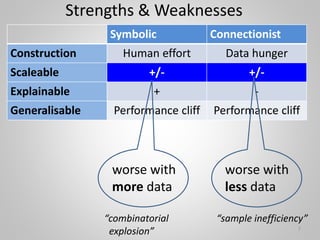

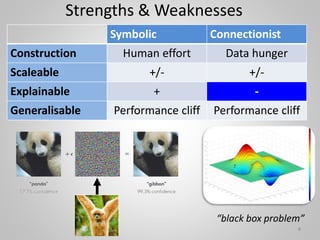

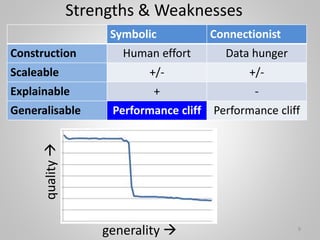

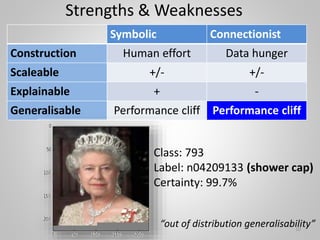

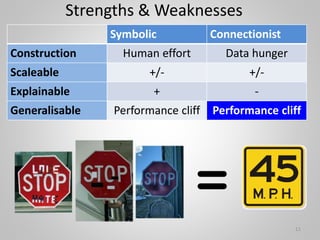

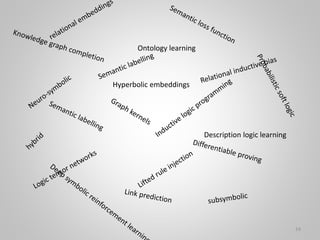

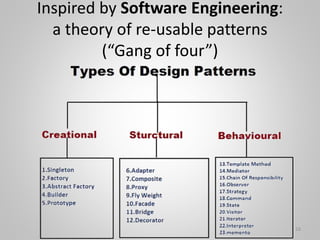

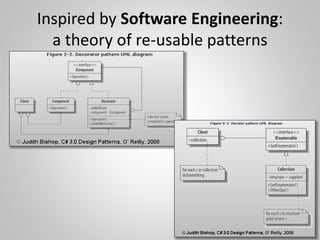

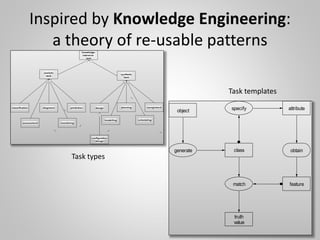

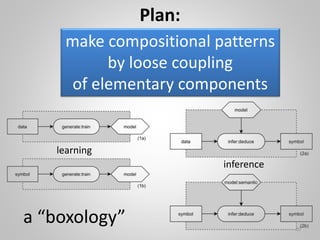

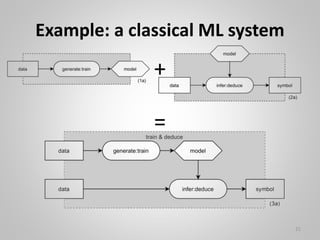

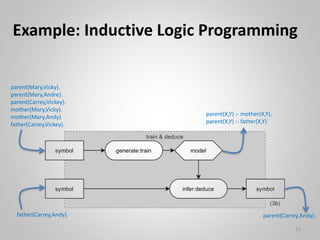

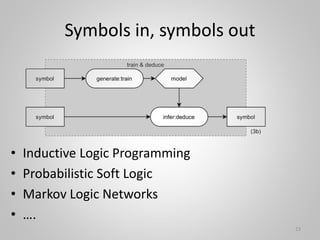

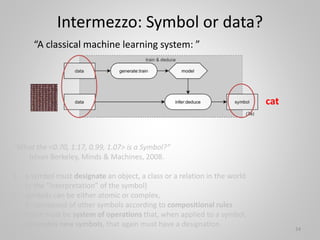

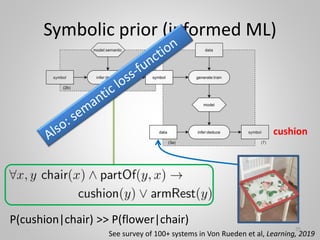

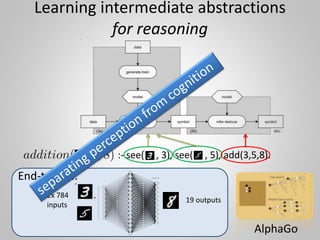

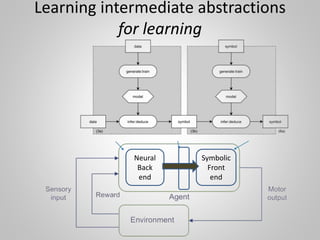

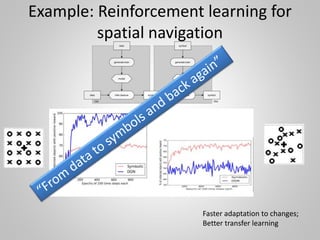

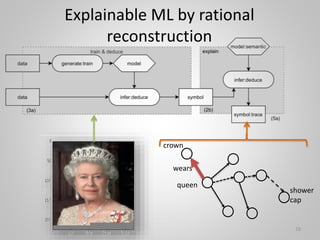

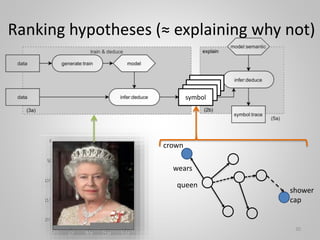

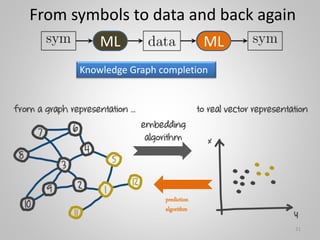

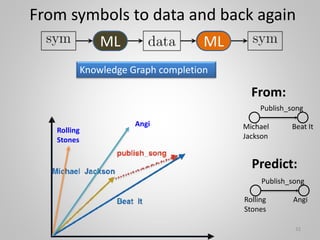

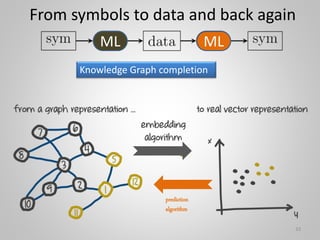

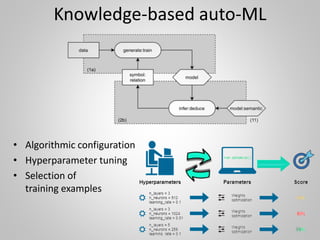

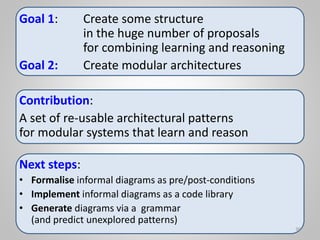

The document discusses the integration of neural and symbolic techniques in AI, highlighting the strengths and weaknesses of each approach to learning and reasoning. It presents a 'boxology' framework, inspired by modular software design, that aims to define reusable architectural patterns for AI systems. The authors emphasize the need for structured methodologies to facilitate collaboration and to make knowledge acquisition and reasoning in AI systems more efficient.