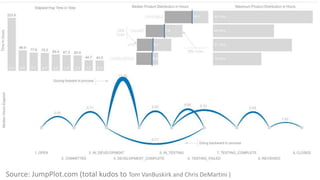

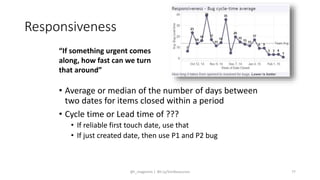

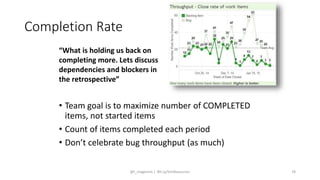

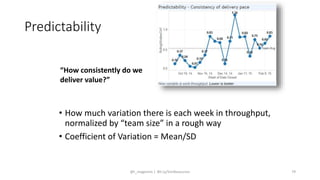

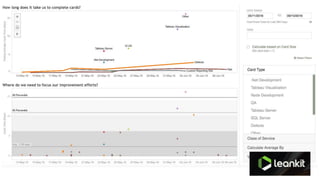

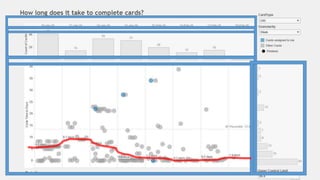

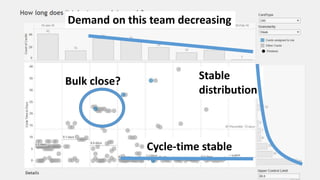

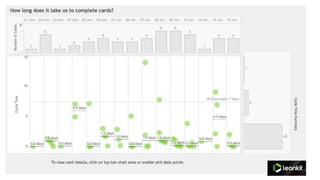

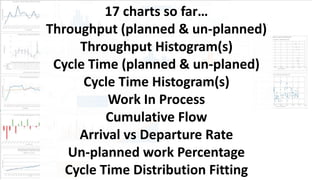

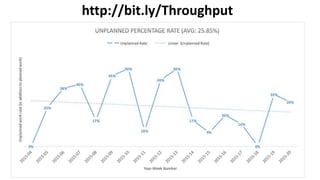

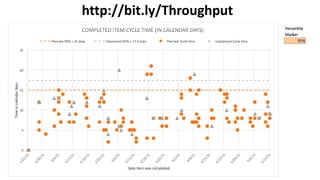

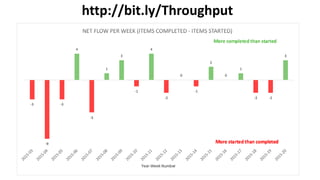

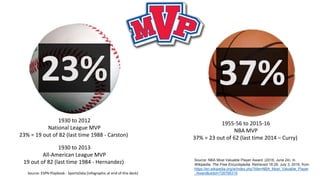

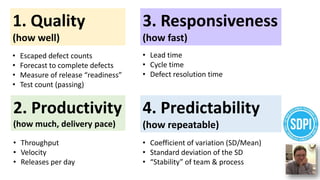

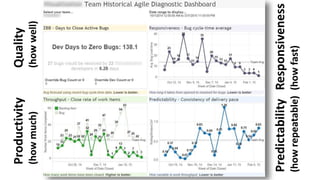

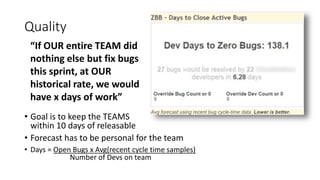

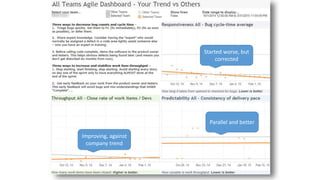

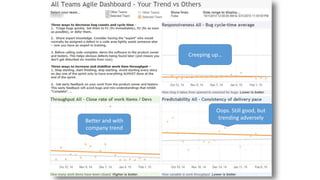

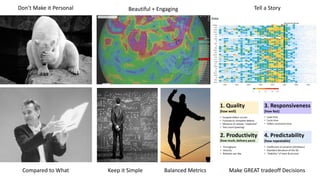

The document discusses the importance of using data-driven insights for coaching teams, emphasizing that data should tell a story and be presented in a meaningful way. It includes various metrics and frameworks, such as cycle time analysis and the Software Development Performance Index (SDPI), to measure aspects like productivity, quality, and predictability. Additionally, it provides multiple resources and tools for effective data visualization and forecasting in team performance management.

![Data Driven Coaching: Safely turning team data into coaching insights (Troy Magennis). These slides are available here: [link]. @t_magennis, troy.magennis@FocusedObjective.com](https://image.slidesharecdn.com/datadrivencoaching-agile2016troymagennis-160720220114/75/Data-driven-coaching-Agile-2016-troy-magennis-1-2048.jpg)

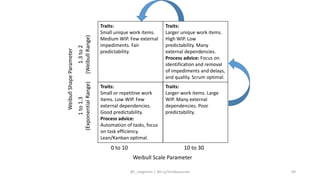

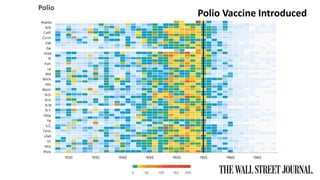

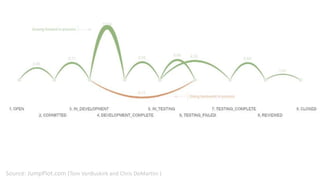

![Probability Density Function: histogram of x (axis from -10 to 130) against f(x) (axis from 0 to 0.32), overlaid with Gamma (3P), Lognormal, Rayleigh, and Weibull curves. References: 1997, Industrial Strength Software, by Lawrence H. Putnam and Ware Myers (IEEE); 2002, Metrics and Models in Software Quality Engineering (2nd Edition), by Stephen H. Kan. Paper: http://bit.ly/14eYFM2](https://image.slidesharecdn.com/datadrivencoaching-agile2016troymagennis-160720220114/85/Data-driven-coaching-Agile-2016-troy-magennis-63-320.jpg)