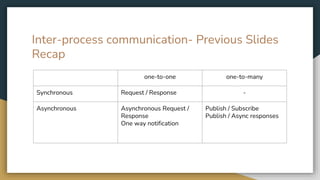

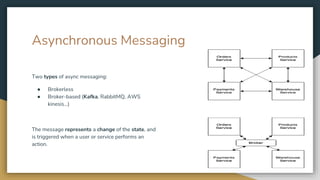

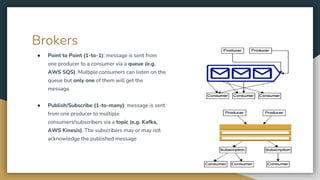

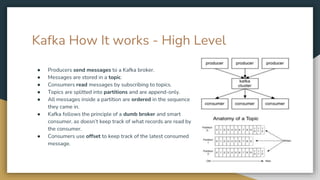

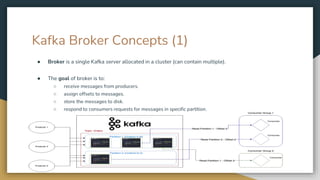

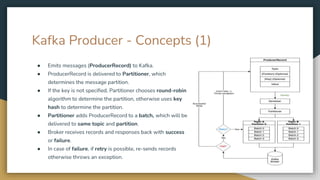

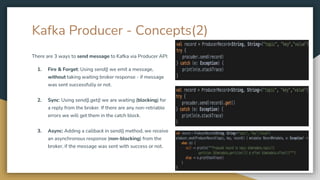

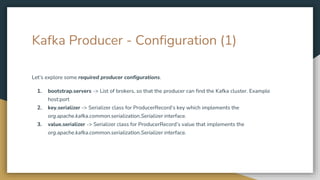

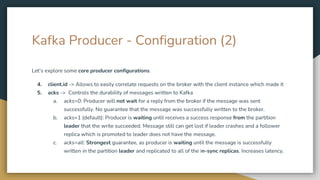

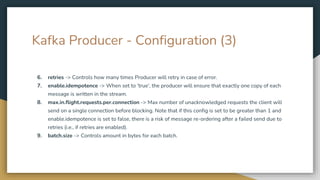

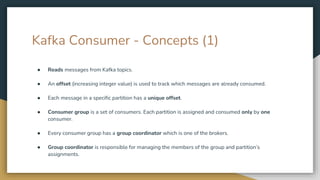

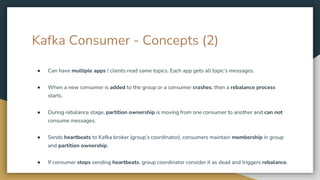

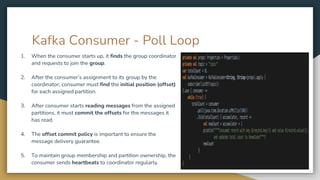

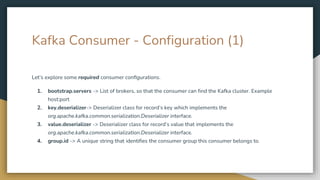

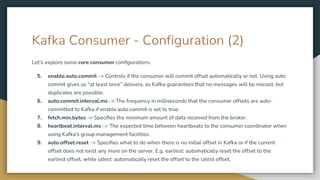

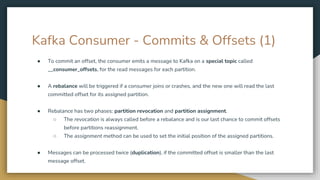

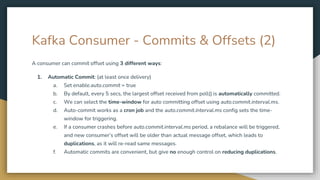

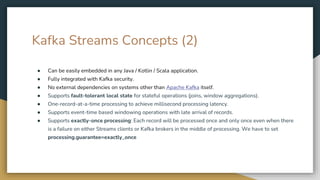

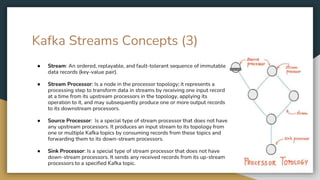

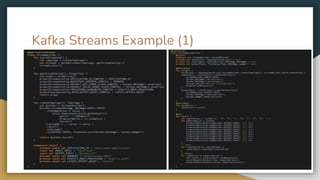

The document introduces Kafka and event-driven inter-process communication, explaining key concepts such as message brokers, producers, and consumers. Kafka is described as an event streaming platform that achieves high throughput and durability through its distributed commit log architecture. The document also details practical configurations for brokers, producers, and consumers, along with strategies for reliable data delivery and processing guarantees.
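To make the commit log idea concrete, here is a minimal in-memory sketch. It is not Kafka's API and uses no Kafka client. The names `CommitLog`, `Consumer`, and `poll_and_process` are hypothetical and chosen for illustration only. The sketch assumes a single partition and shows two properties the summary mentions: records are appended and never removed on read (durability), and a consumer that advances its offset only after processing gets at-least-once delivery.

```python
from dataclasses import dataclass, field


@dataclass
class CommitLog:
    """Toy stand-in for one partition: an append-only list of records."""
    records: list = field(default_factory=list)

    def append(self, value: str) -> int:
        """Producer side: append a record and return its offset."""
        self.records.append(value)
        return len(self.records) - 1

    def read(self, offset: int, max_records: int = 10) -> list:
        """Consumer side: read from an offset; reading removes nothing."""
        return self.records[offset:offset + max_records]


@dataclass
class Consumer:
    """Hypothetical consumer that tracks its own committed offset."""
    log: CommitLog
    committed: int = 0  # next offset to read after a restart

    def poll_and_process(self, handler, max_records: int = 10) -> int:
        batch = self.log.read(self.committed, max_records)
        for value in batch:
            handler(value)  # if this raises, the offset is not advanced
        # Commit only after the whole batch was processed: at-least-once.
        self.committed += len(batch)
        return len(batch)


log = CommitLog()
for event in ["order-created", "order-paid", "order-shipped"]:
    log.append(event)

seen = []
consumer = Consumer(log)
consumer.poll_and_process(seen.append)
```

Because the offset moves only after the handler succeeds, a crash mid-batch causes the same records to be read again on the next poll. That is why handlers in an at-least-once setup are usually written to tolerate duplicates.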