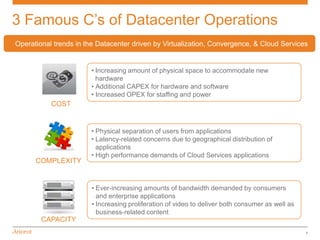

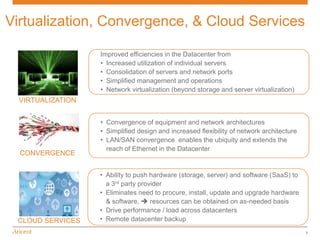

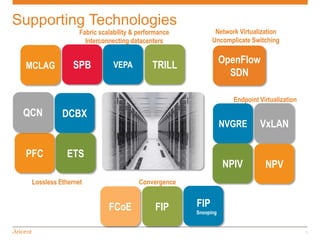

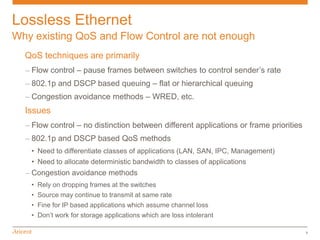

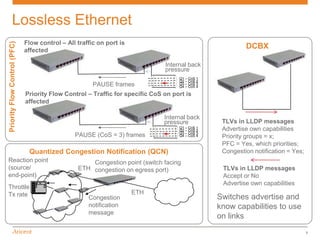

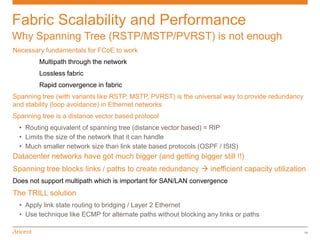

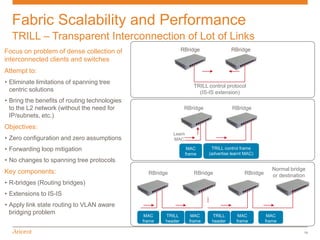

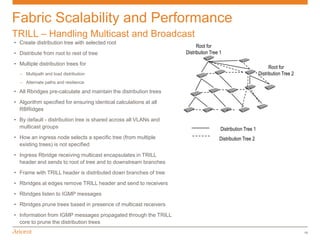

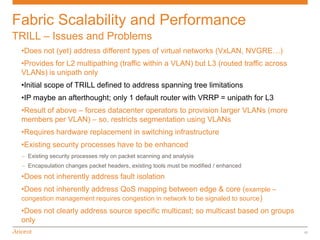

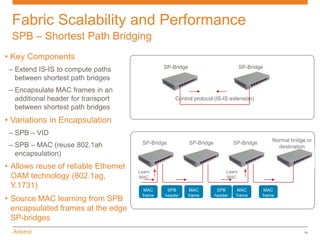

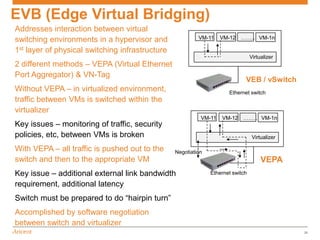

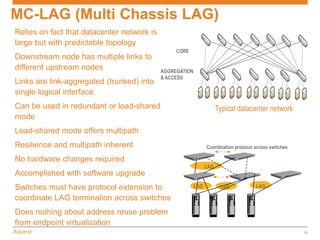

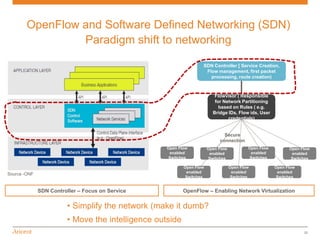

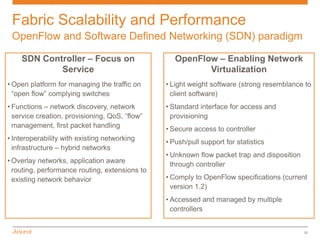

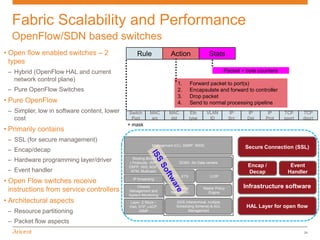

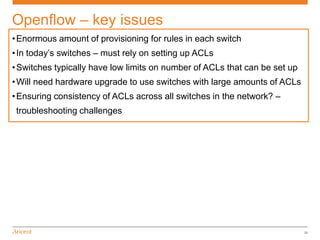

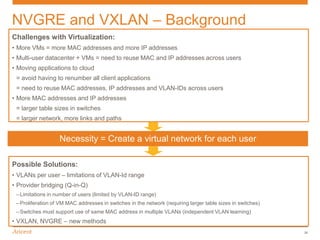

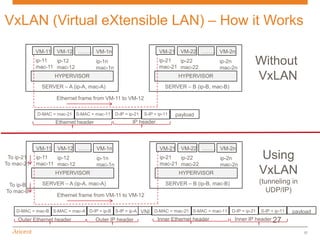

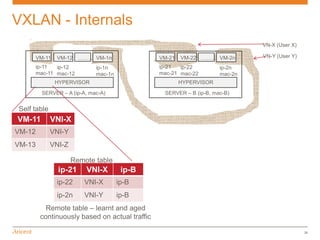

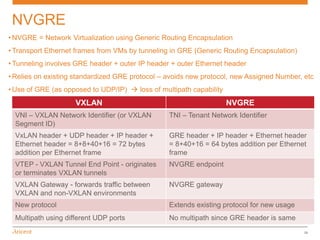

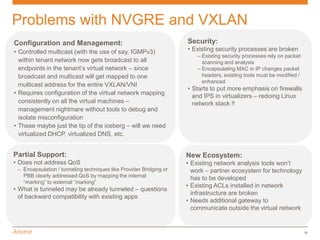

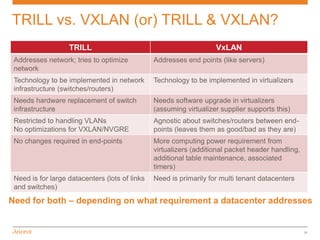

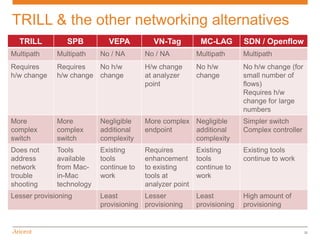

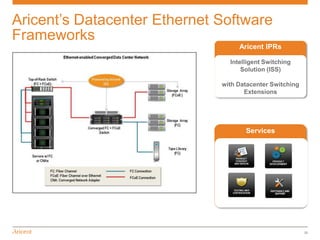

The document discusses the role of TRILL (Transparent Interconnection of Lots of Links) and related data center technologies in improving data center operations through virtualization, convergence, and cloud services. It outlines key imperatives: better price/performance, greater energy efficiency, and standardized solutions for network management. It also presents alternatives to TRILL, such as Shortest Path Bridging (SPB), OpenFlow, and software-defined networking (SDN), and addresses challenges and scalability issues in data center networks.