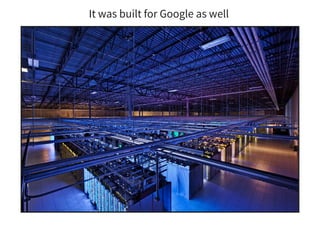

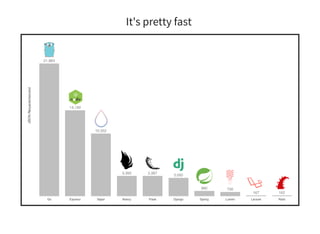

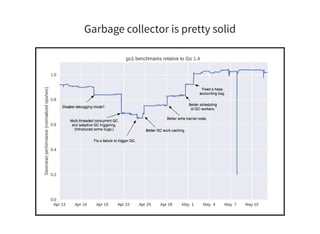

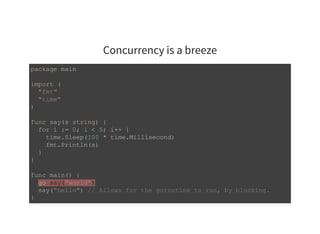

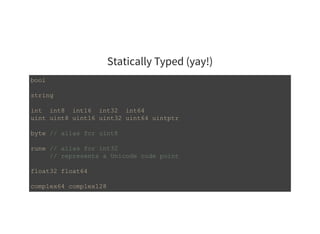

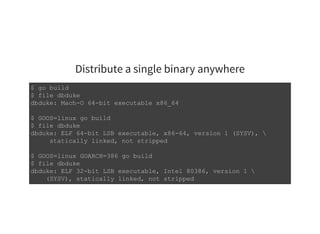

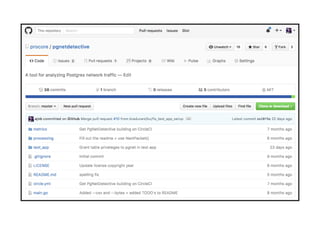

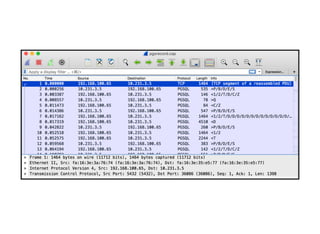

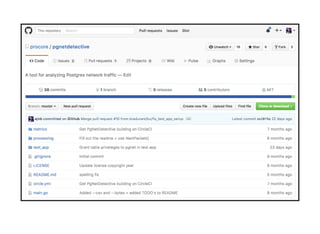

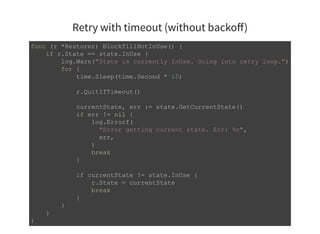

The document discusses the use of Go for building PostgreSQL tools, highlighting its performance, reliability, and ease of use. It walks through practical examples of interacting with databases from Go and presents two tools, 'pgnetdetective' and 'dbduke', built for processing queries and managing database restores. The author, AJ Bahnken, emphasizes Go's strengths in concurrency and error handling, and closes by promoting job opportunities at Procore.