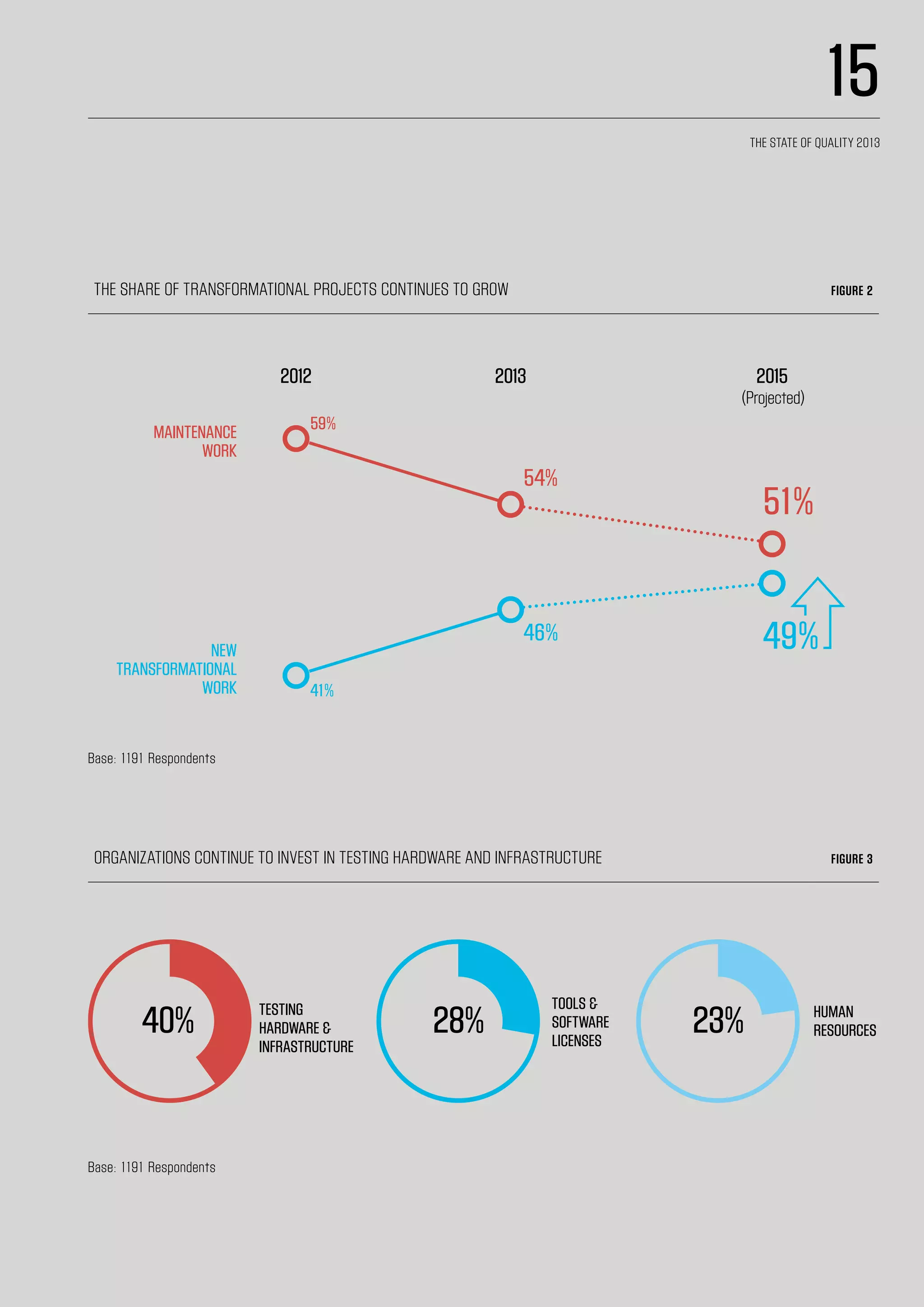

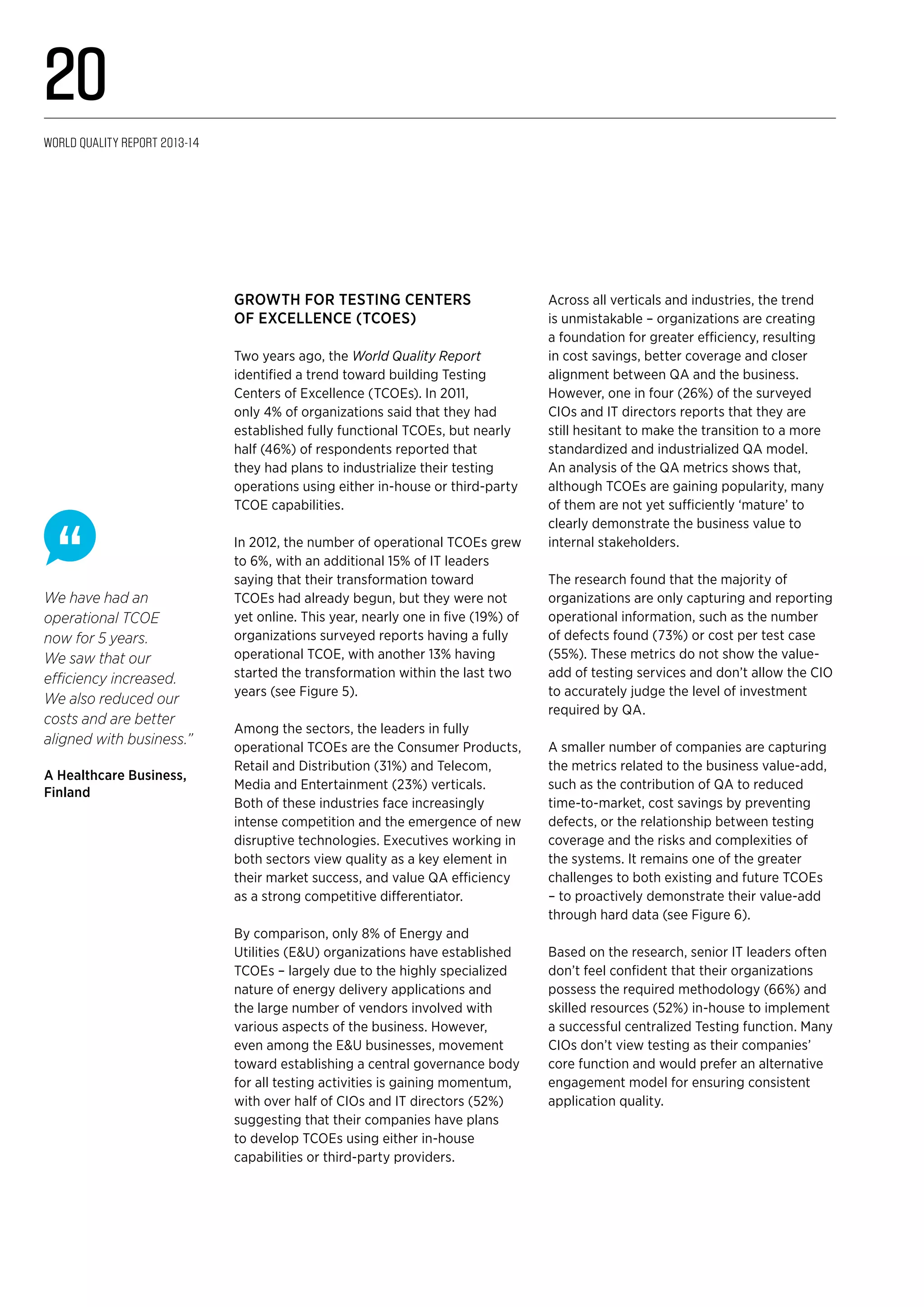

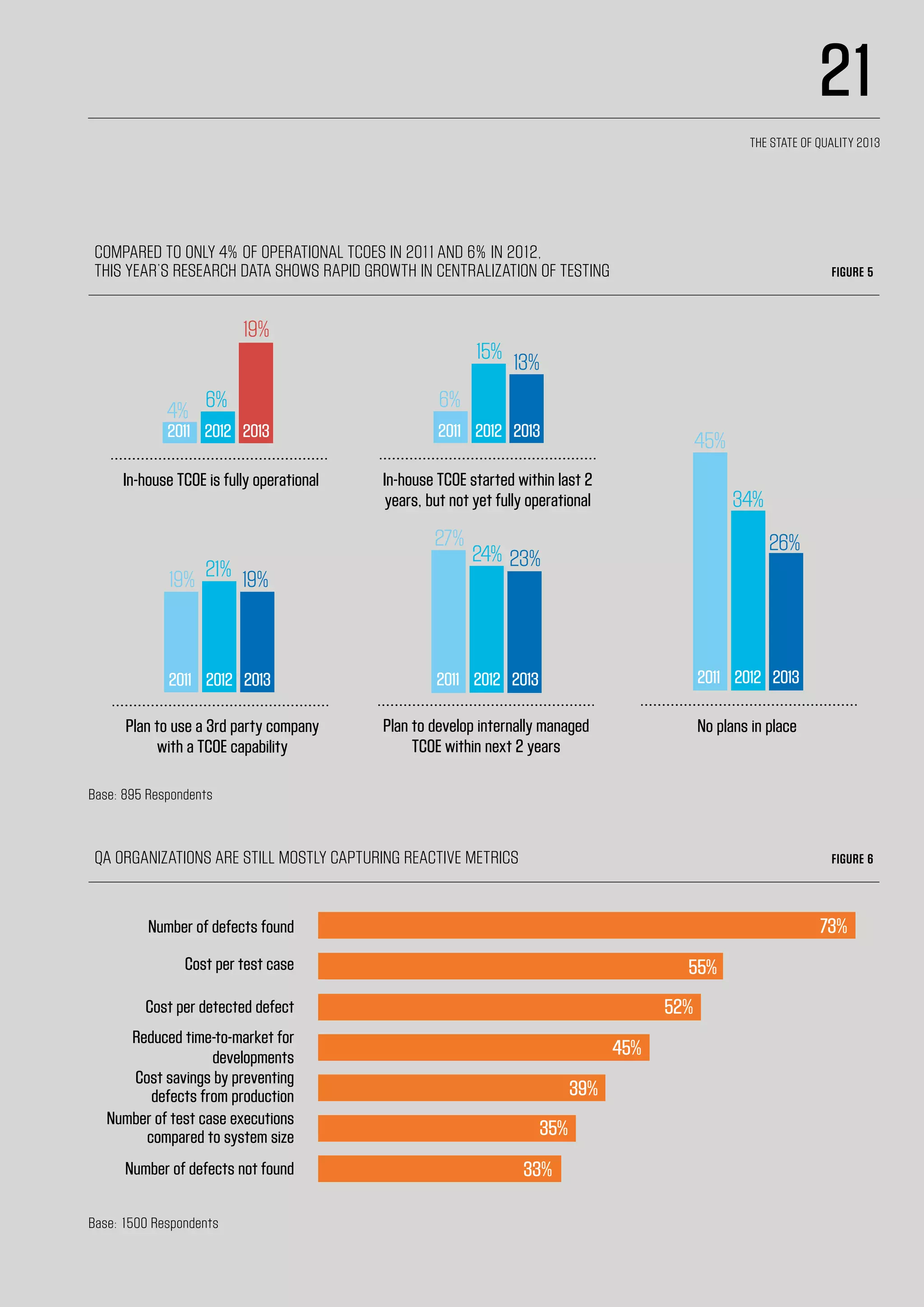

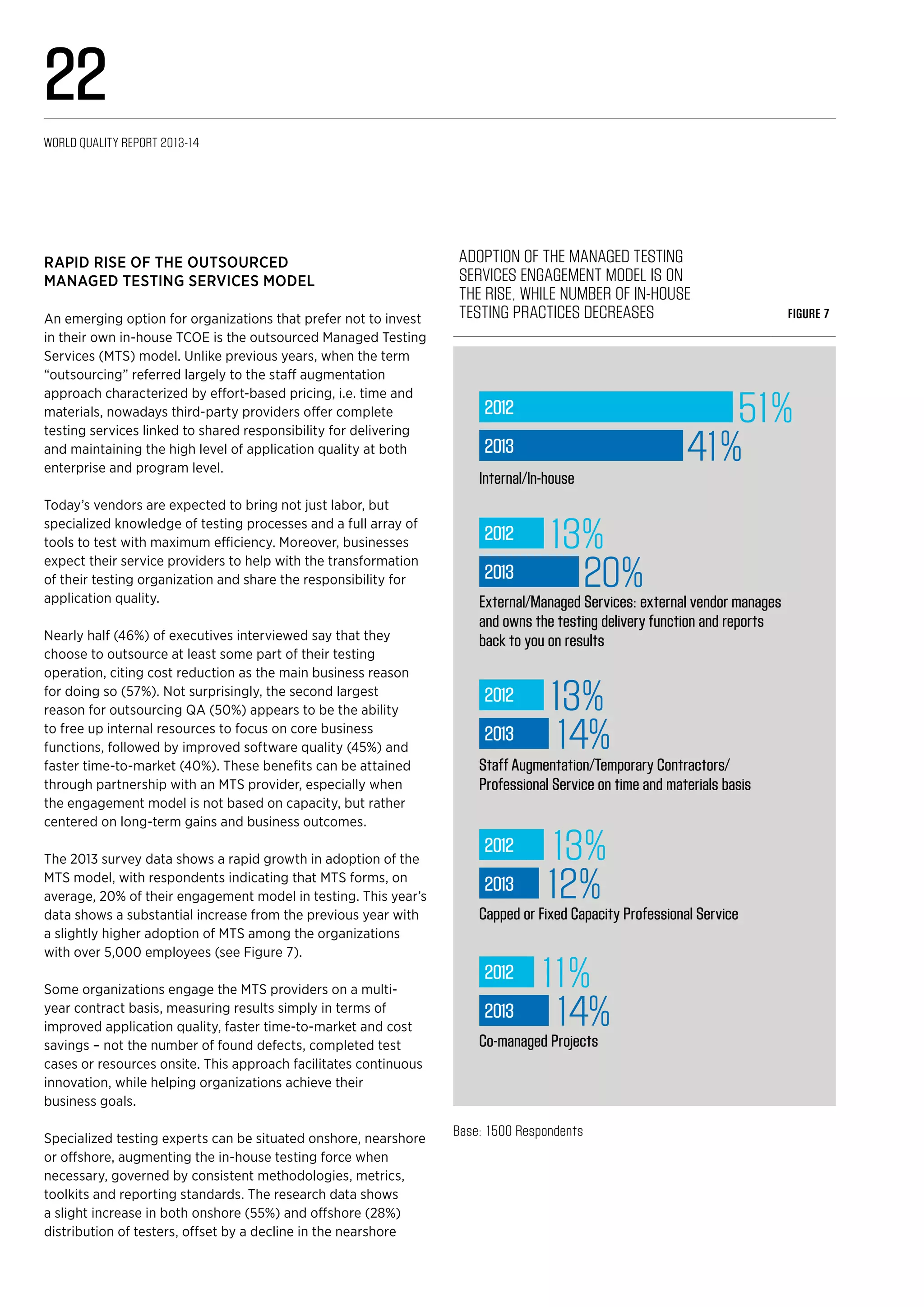

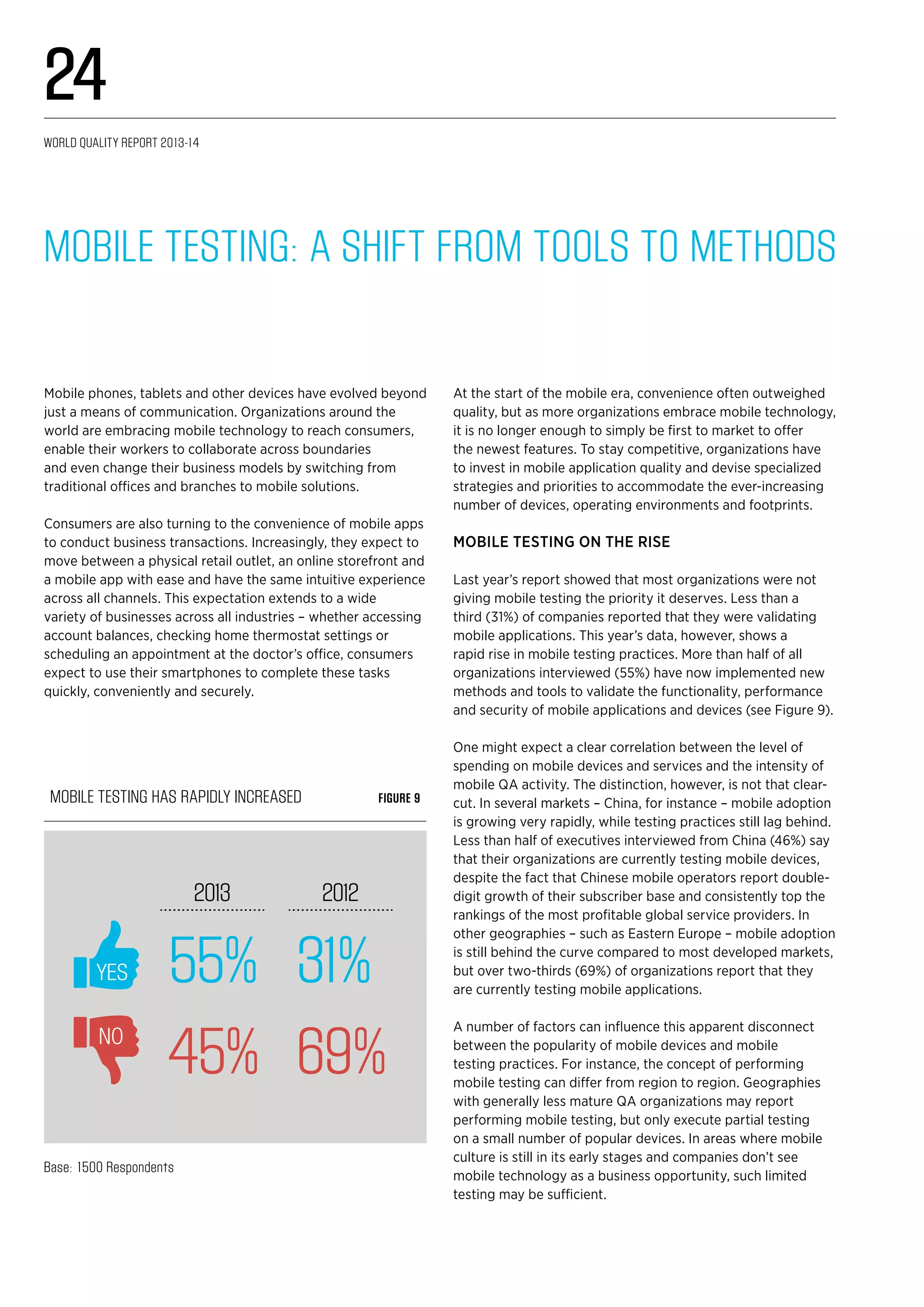

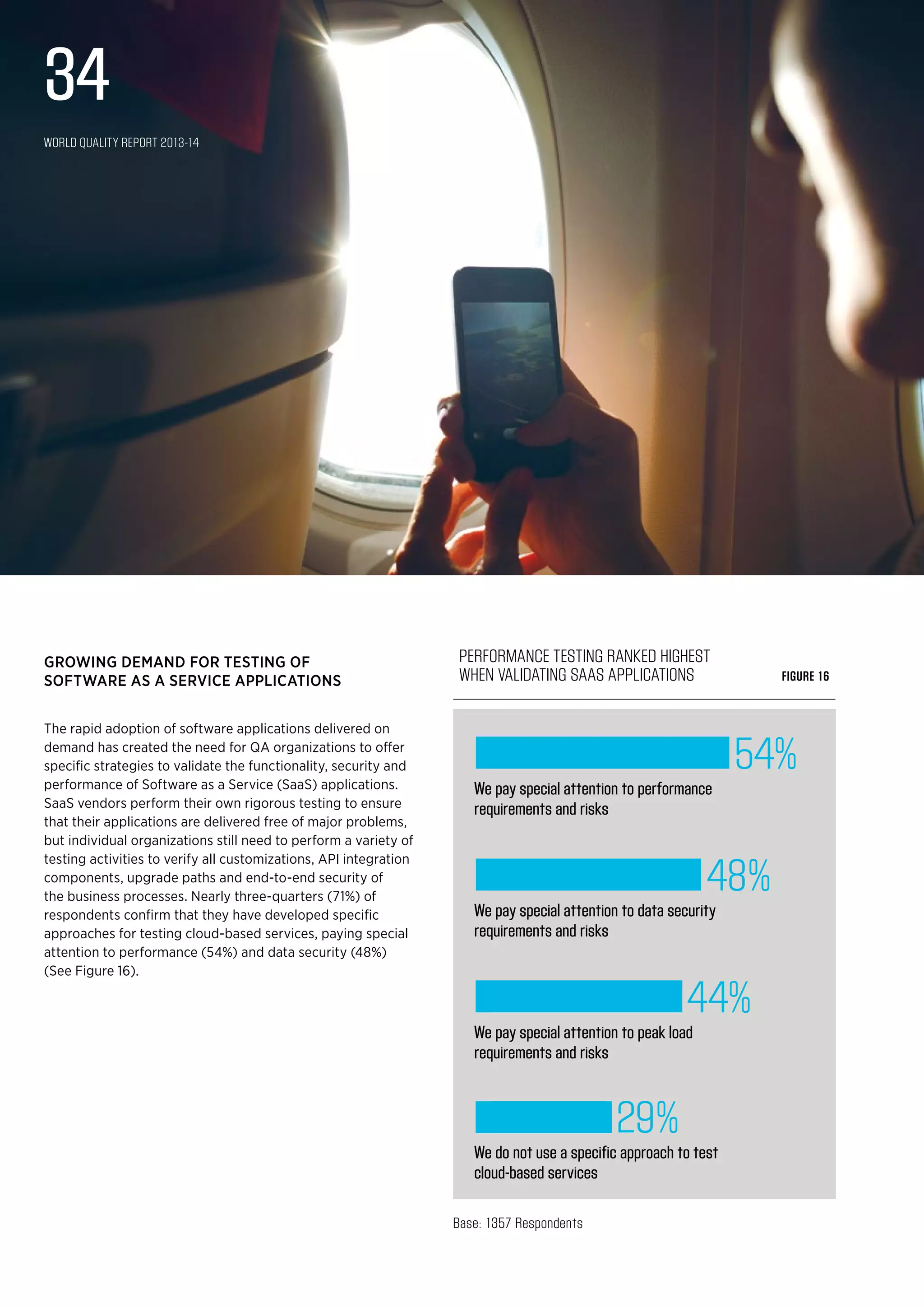

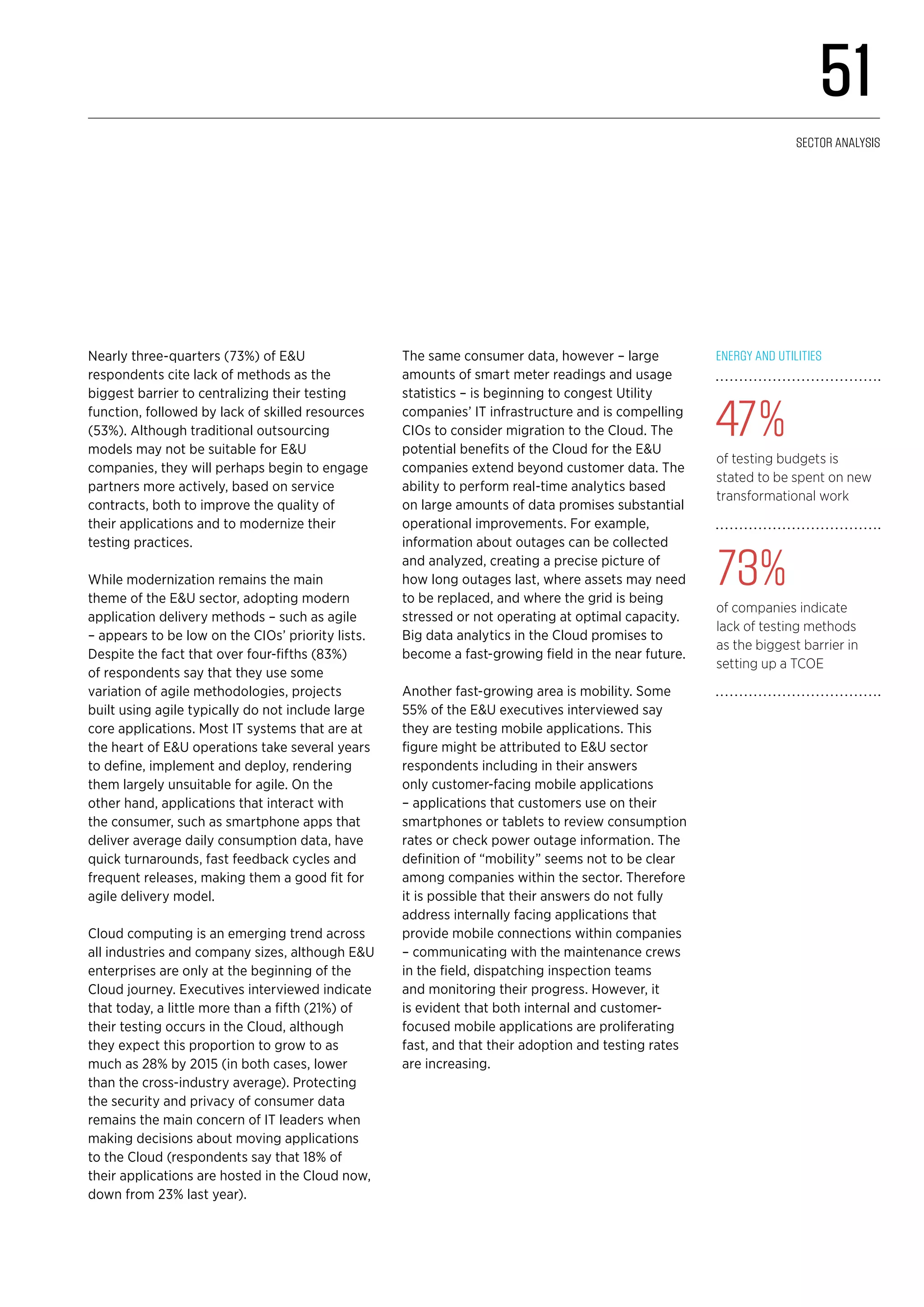

The fifth edition of the World Quality Report highlights ongoing trends in quality assurance (QA) and testing. It indicates that a growing share of budgets is being allocated to these functions, particularly for transformational projects. It also finds that organizations are working to centralize their QA processes while struggling with mobile testing and with testing in agile projects. The report emphasizes the importance of engaging testing early and the need for business-oriented metrics to demonstrate the value of QA efforts.