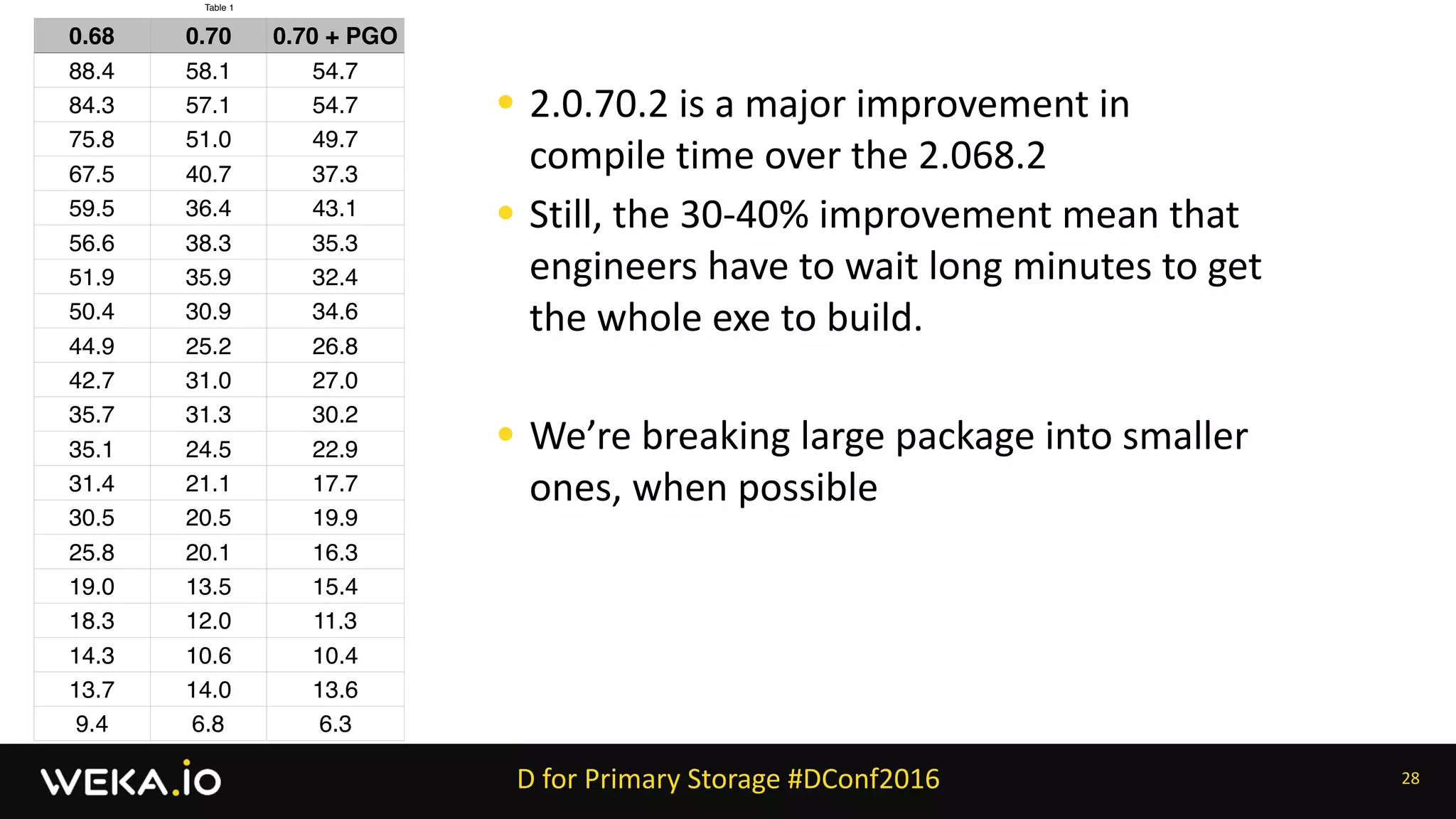

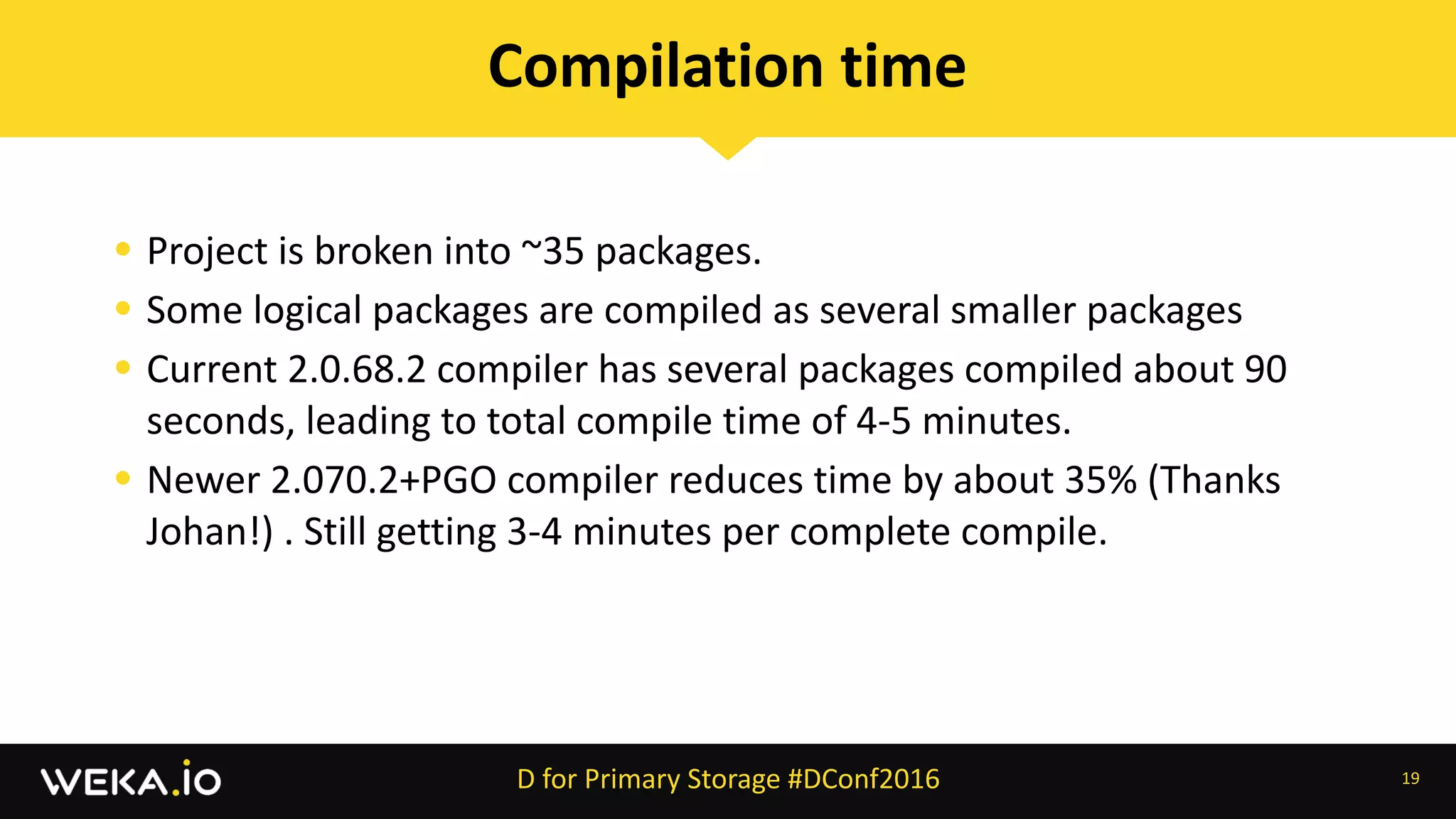

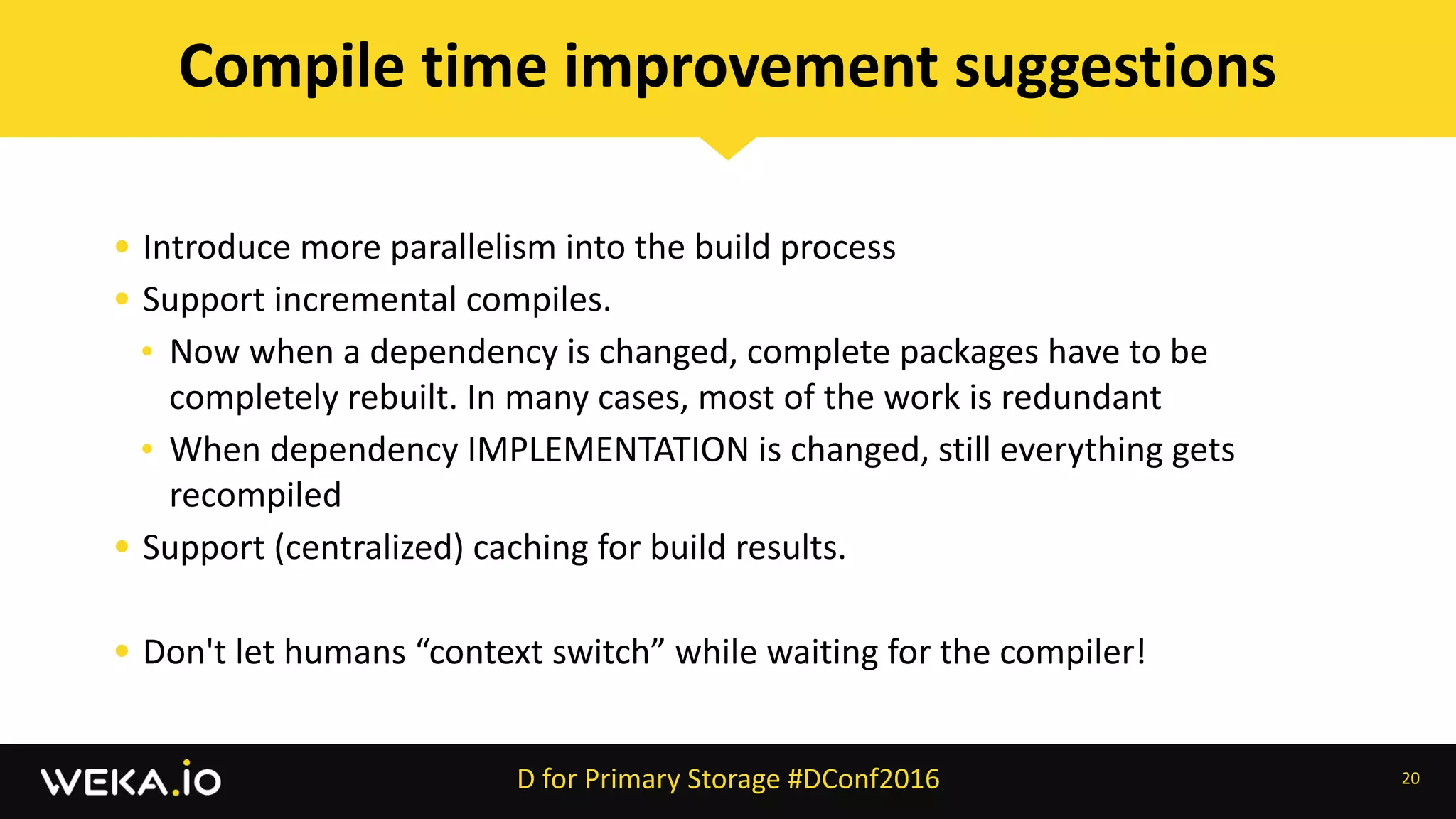

The document discusses the development and progress of a primary storage solution using the D programming language at Weka.io, emphasizing significant improvements in performance and compilation times. It outlines the architecture, challenges faced, and strategies for development, including optimizing code and utilizing parallelism. Additionally, it highlights ongoing efforts to enhance build processes and manage memory efficiently to cater to high performance storage demands.

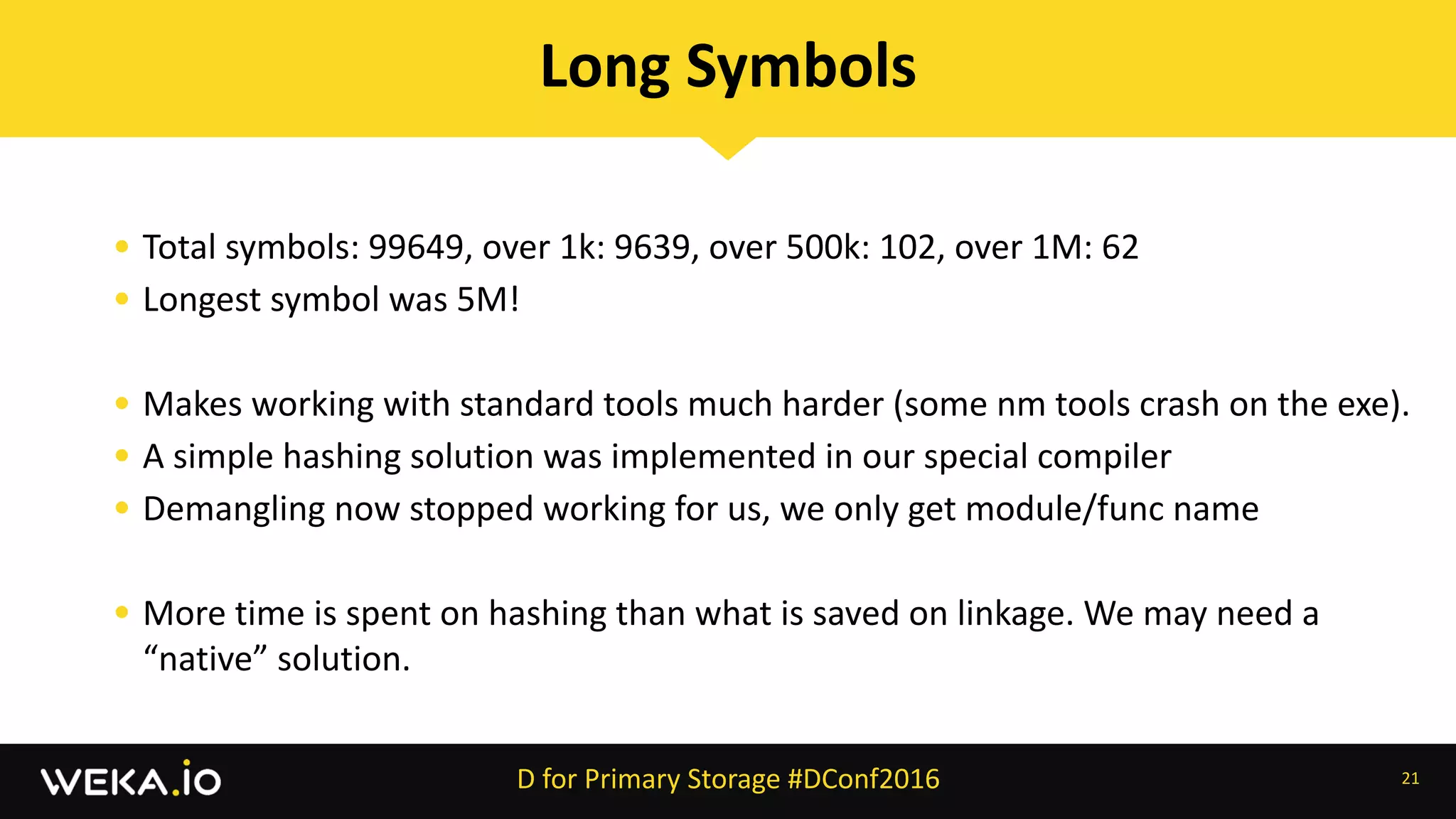

![static if (JoinedKV.sizeof <= CACHE_LINE_SIZE) {

alias KV = JoinedKV;

enum separateKV = false;

} else {

struct KV {

K key;

/* values will be stored separately for

better cache behavior */

}

V[NumEntries] values;

enum separateKV = true;

}

17

Efficient packing

D for Primary Storage #DConf2016](https://image.slidesharecdn.com/dconf2016presentationdforprimarystorage-160505080351/75/D-conf-2016-Using-D-for-Primary-Storage-17-2048.jpg)

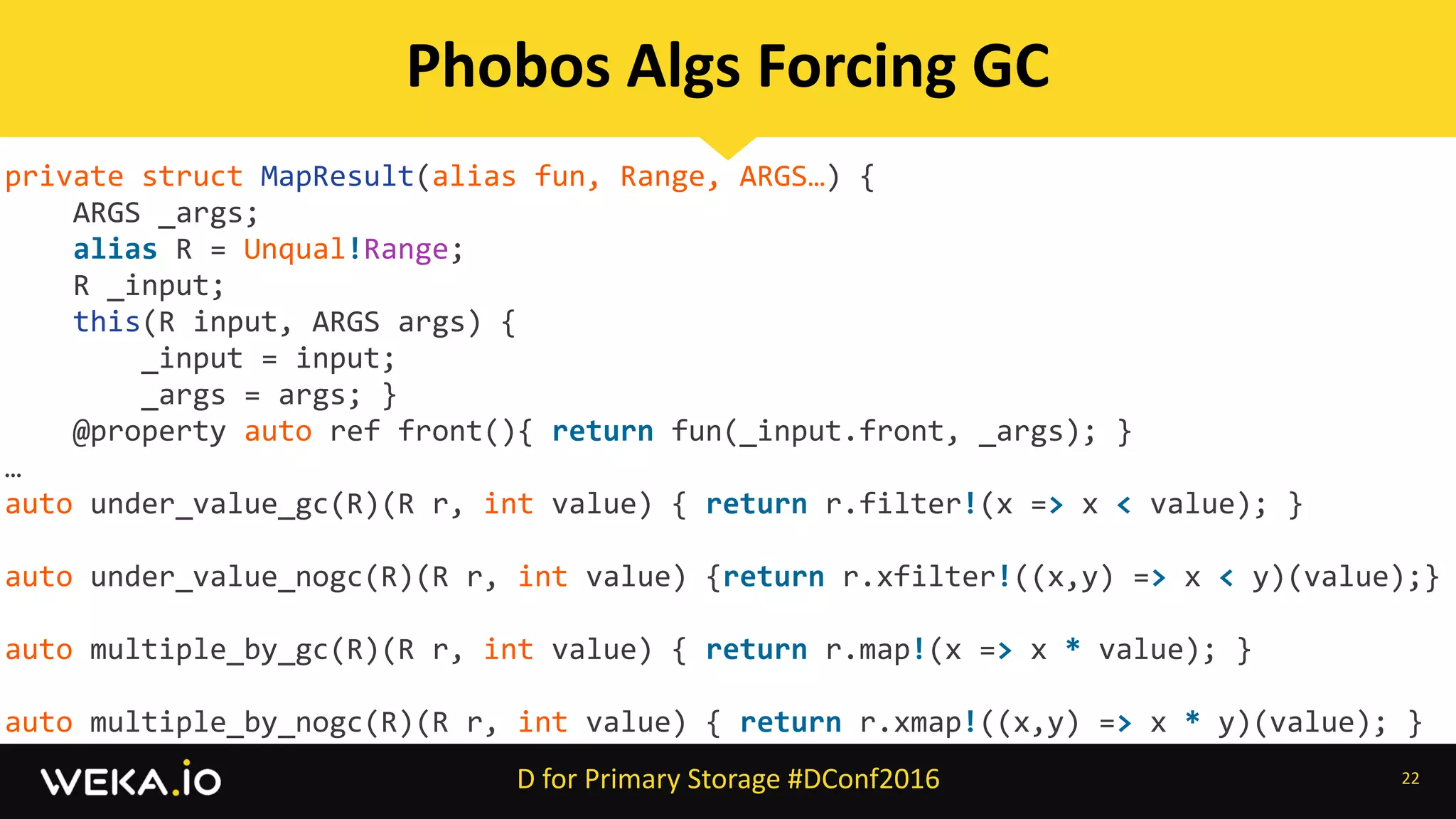

![• Make it explicit

• Allow it to manipulate types, to replace complex template recursion

24

static foreach

D for Primary Storage #DConf2016

template hasUDAttributeOfType(T, alias X) {

alias attrs = TypeTuple!(__traits(getAttributes, X));

template helper(int i) {

static if (i >= attrs.length) {

enum helper = false;

} else static if (is(attrs[i] == T) || is(typeof(attrs[i]) == T)) {

static assert (!helper!(i+1), "More than one matching attribute: " ~ attrs.stringof);

enum helper = true;

} else {

enum helper = helper!(i+1);

}

}

enum hasUDAttributeOfType = helper!0;

}](https://image.slidesharecdn.com/dconf2016presentationdforprimarystorage-160505080351/75/D-conf-2016-Using-D-for-Primary-Storage-24-2048.jpg)

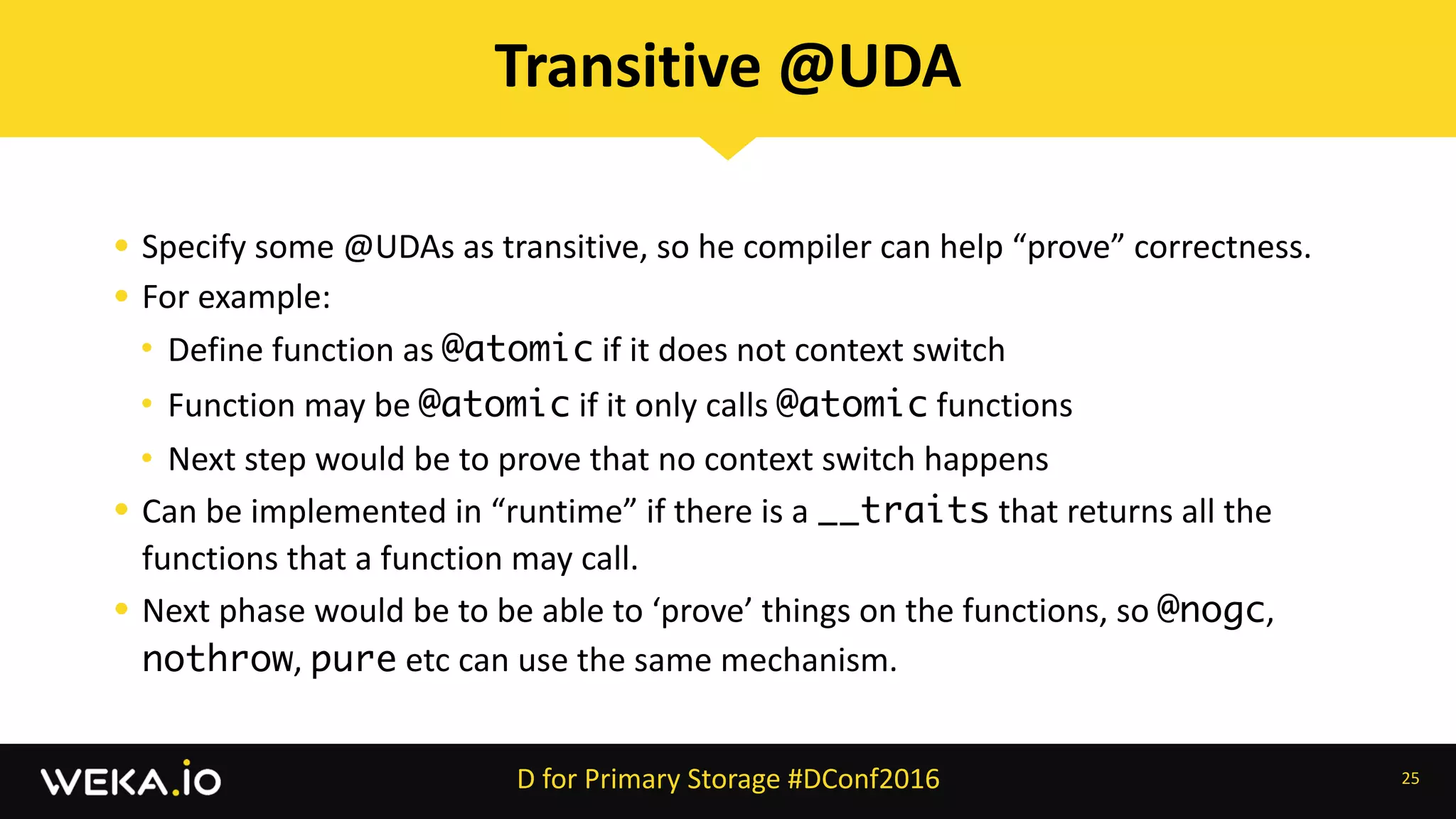

![• __traits that returns that max stack size of a function

• Add a predicate that tells whether there is an existing exception currently

handled

• Donate Weka’s @nogc ‘standard library’ to Phobos:

• Our Fiber additions into Phobos (throwInFiber, TraceInfo support, etc) [other

lib funs as well]

• Containers, algorithms, lockless data structures, etc…

26

Other Suggestions

D for Primary Storage #DConf2016](https://image.slidesharecdn.com/dconf2016presentationdforprimarystorage-160505080351/75/D-conf-2016-Using-D-for-Primary-Storage-26-2048.jpg)