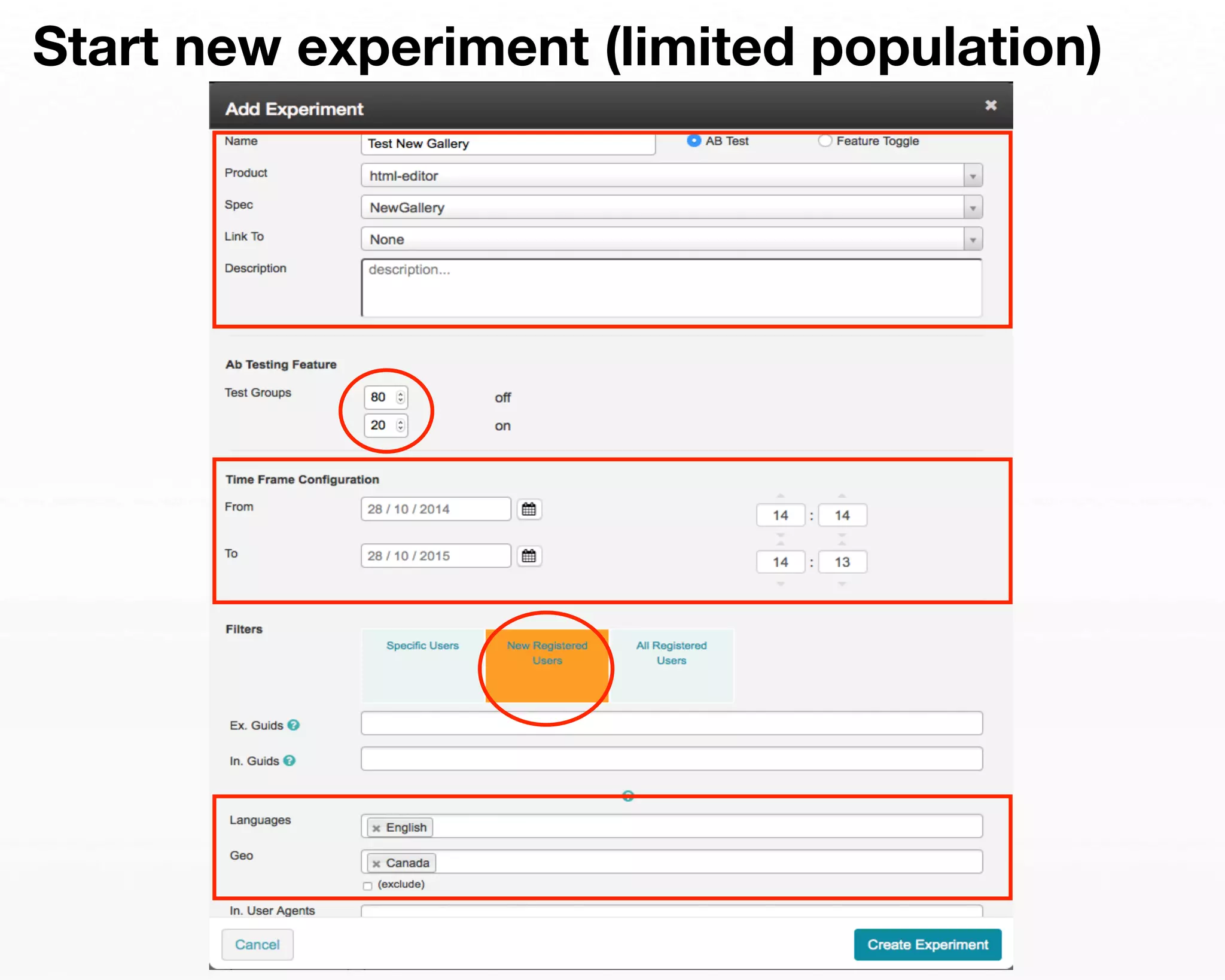

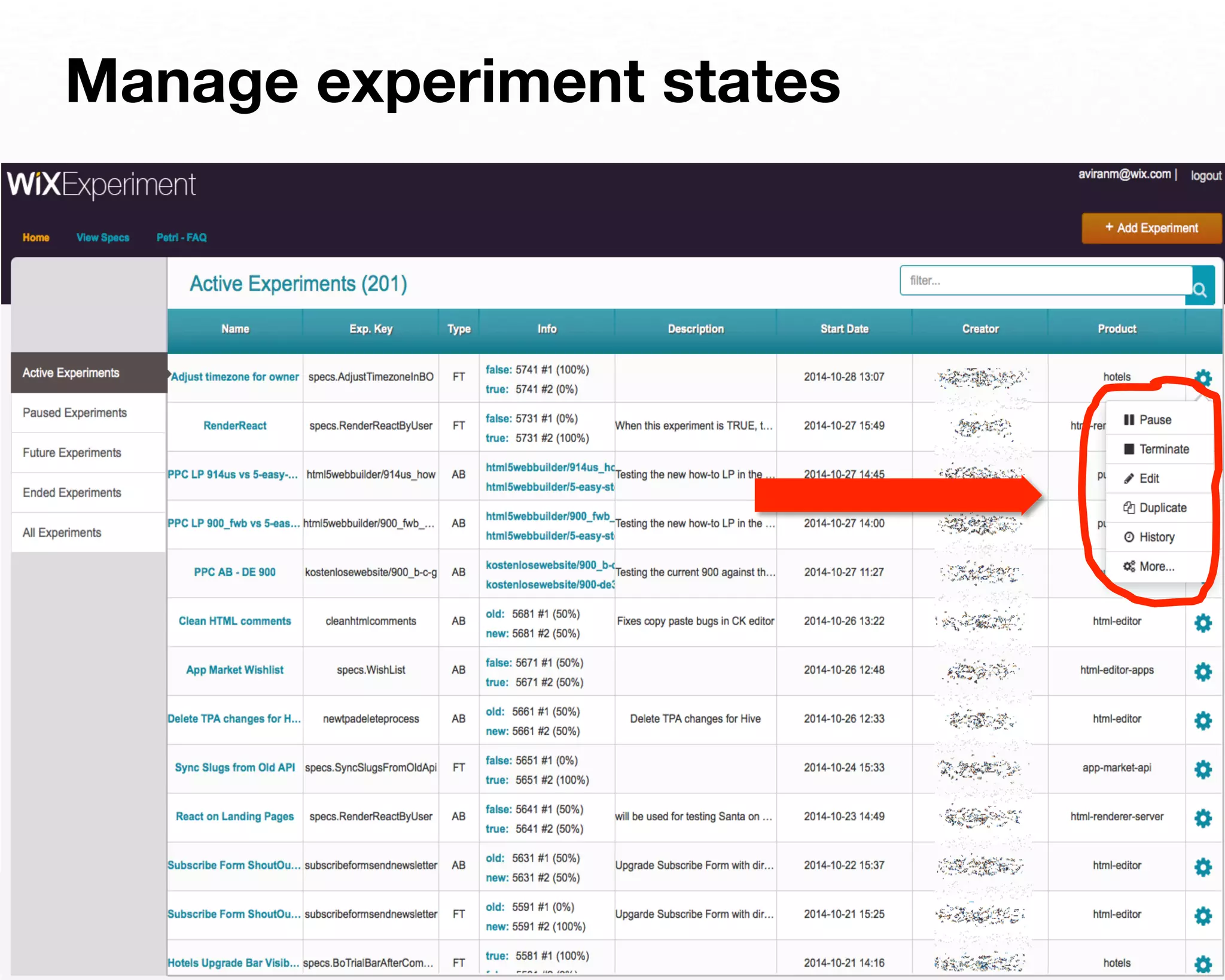

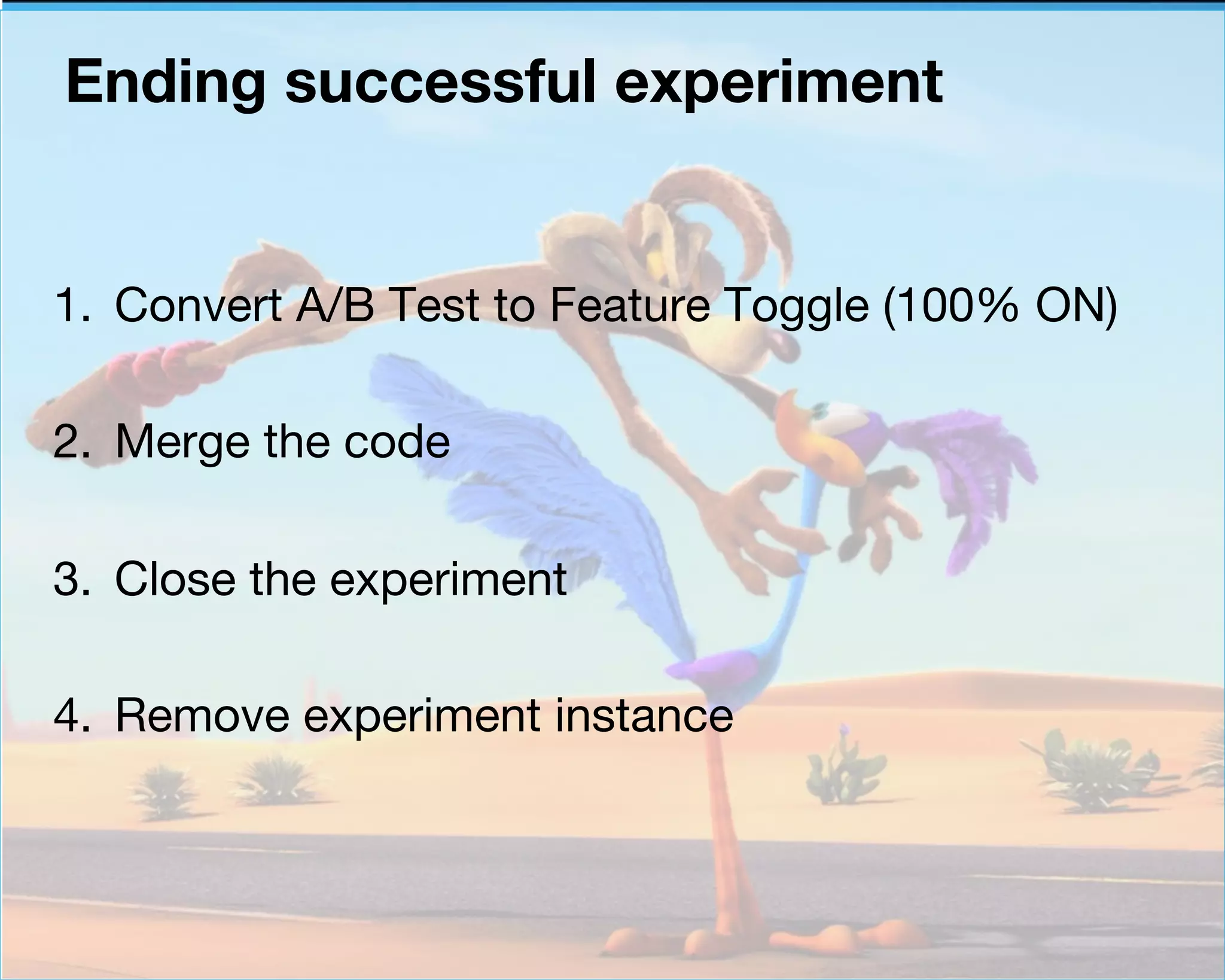

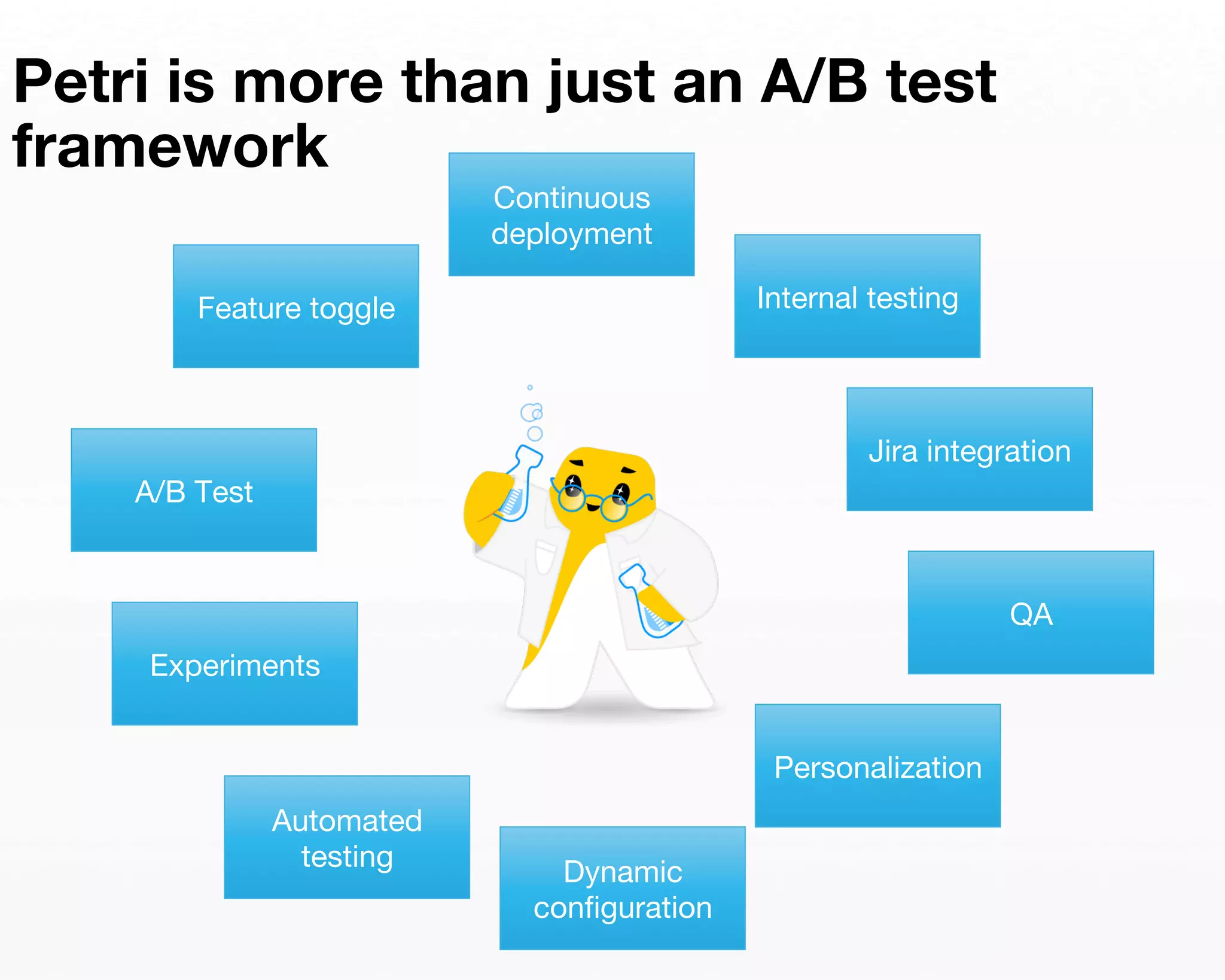

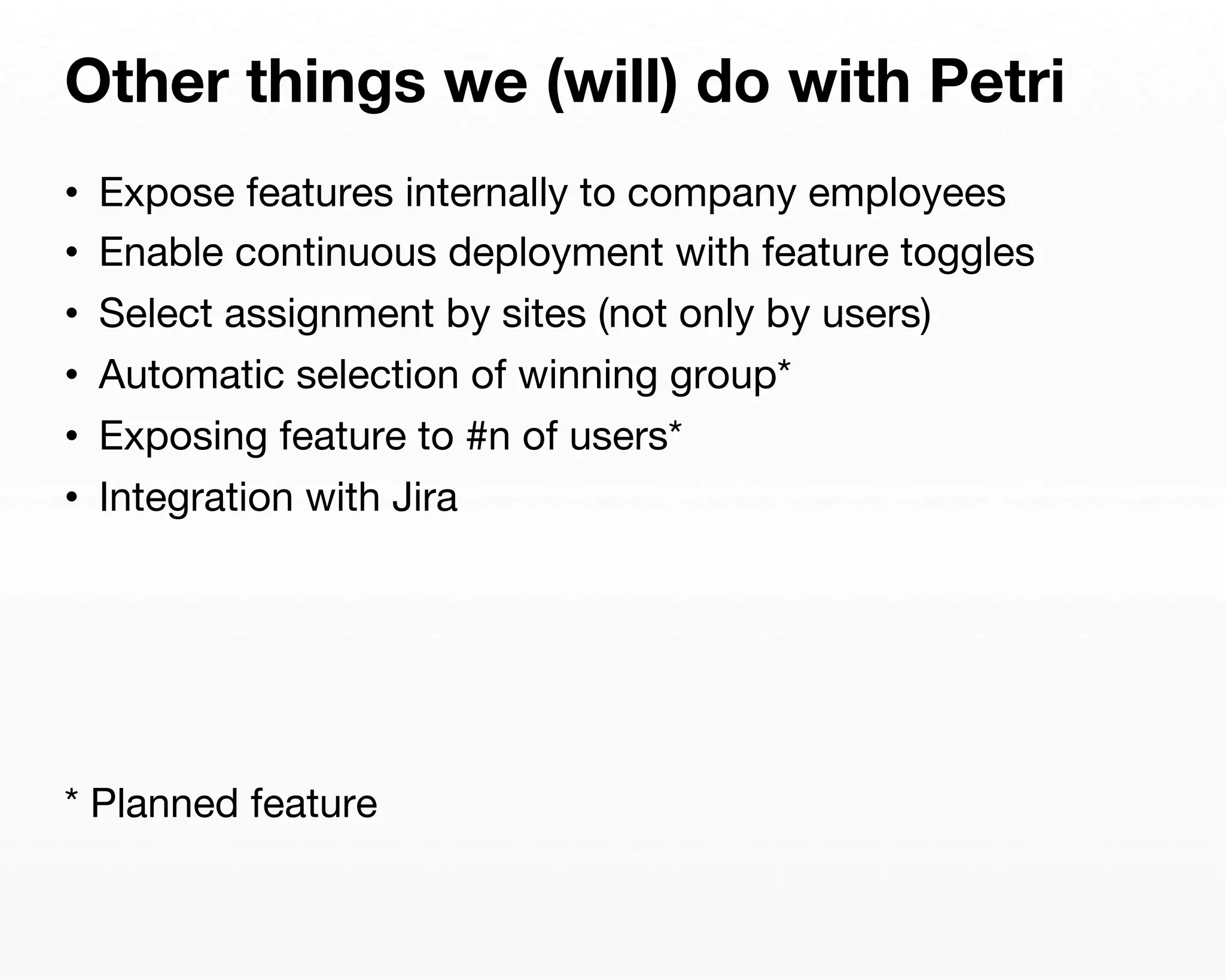

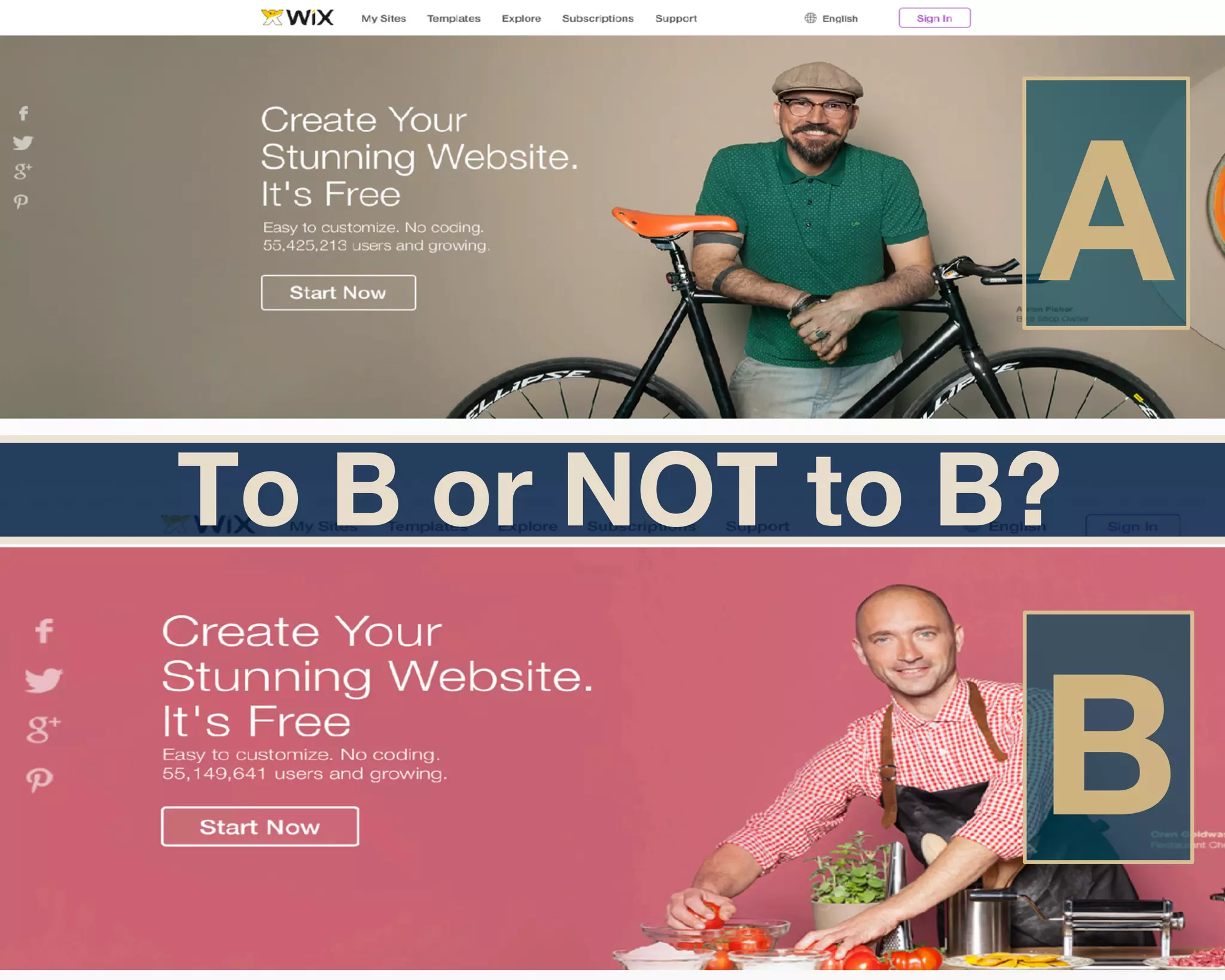

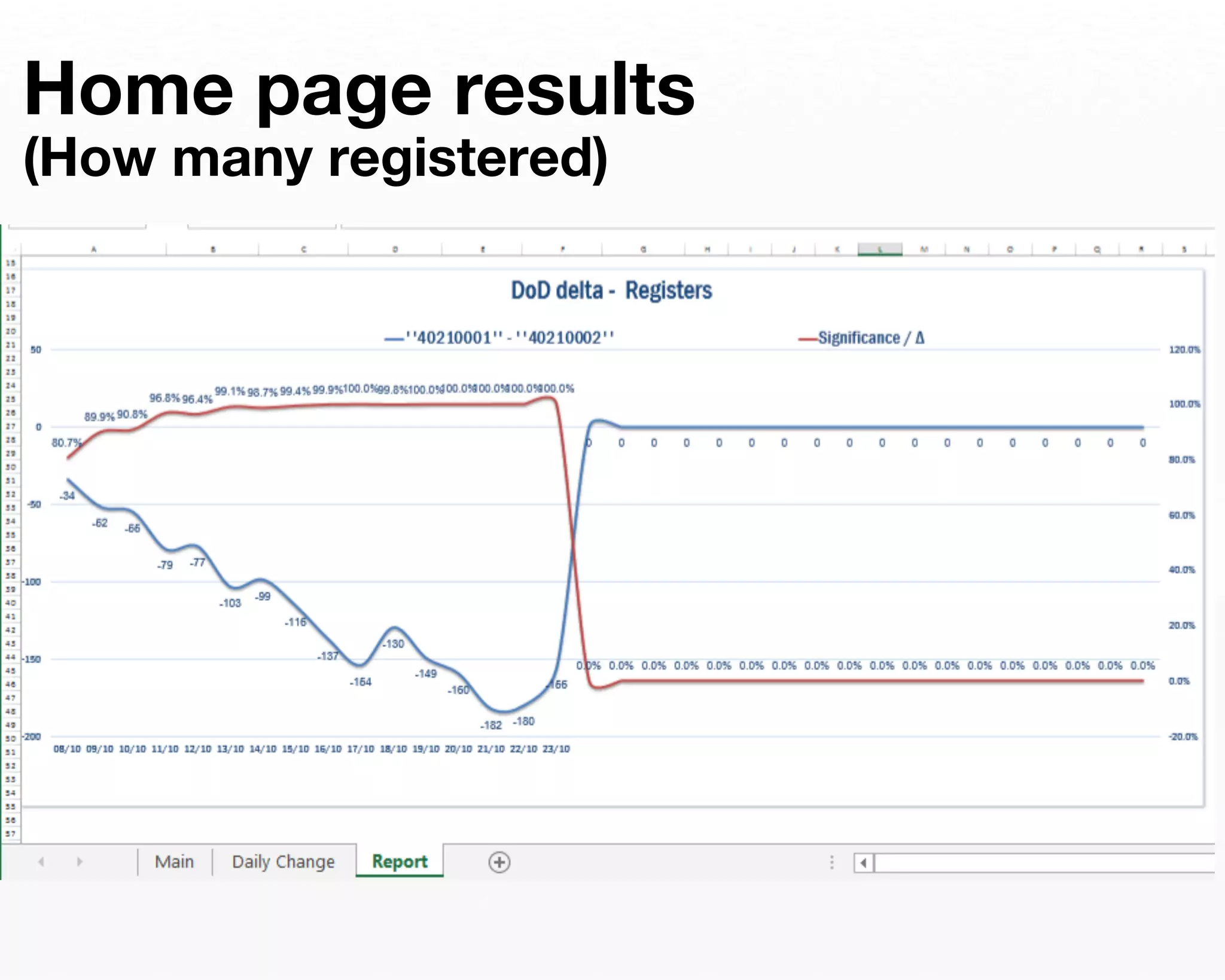

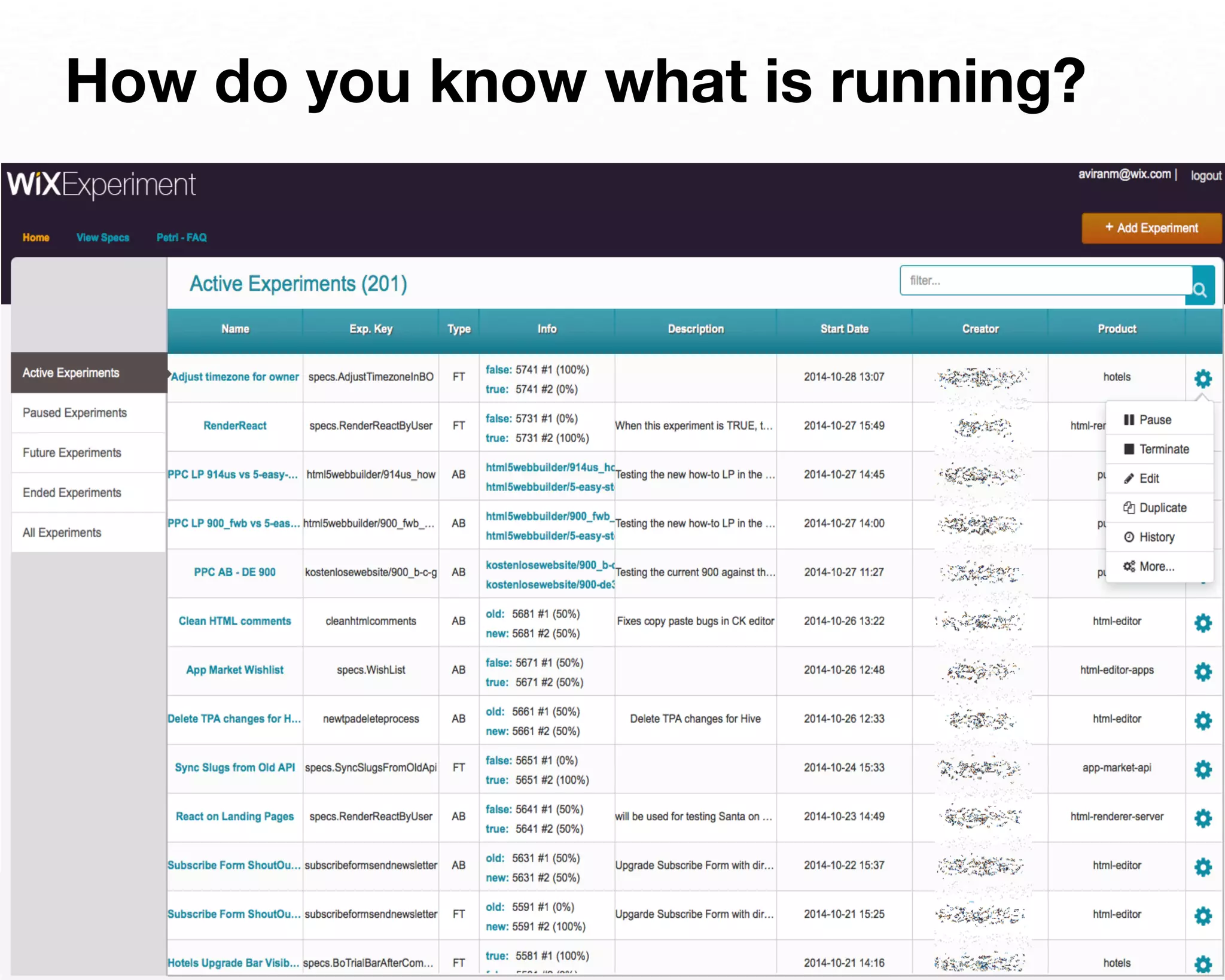

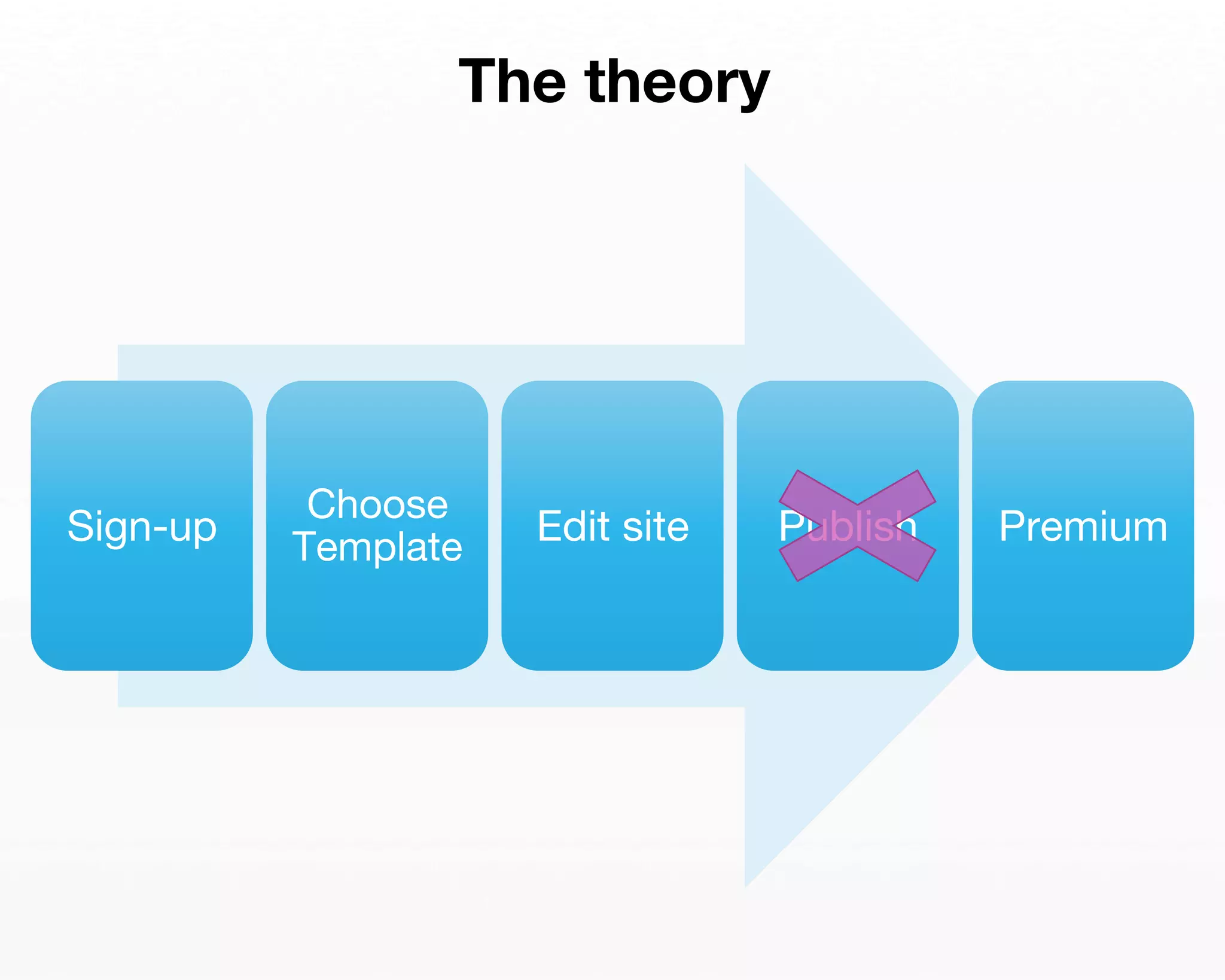

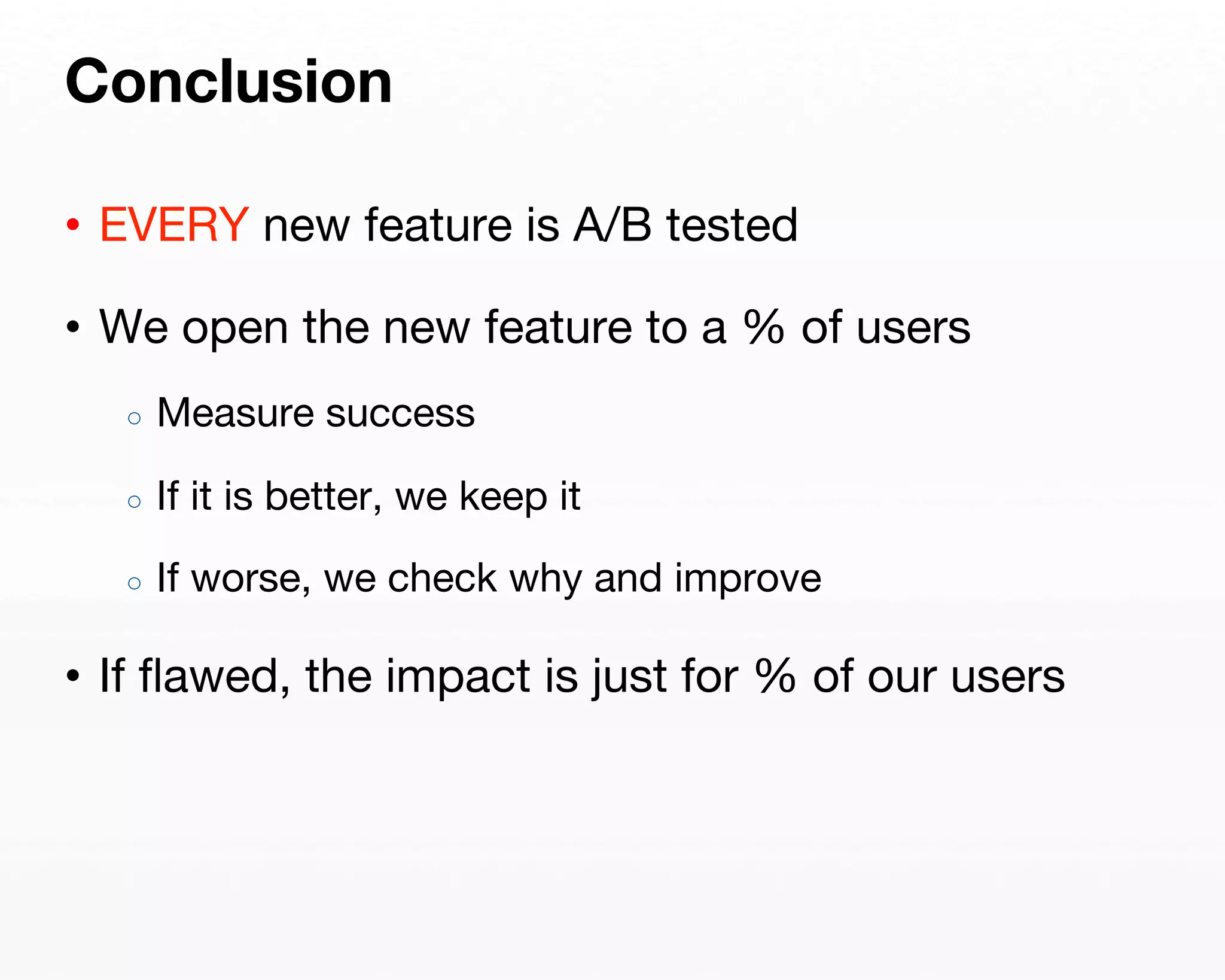

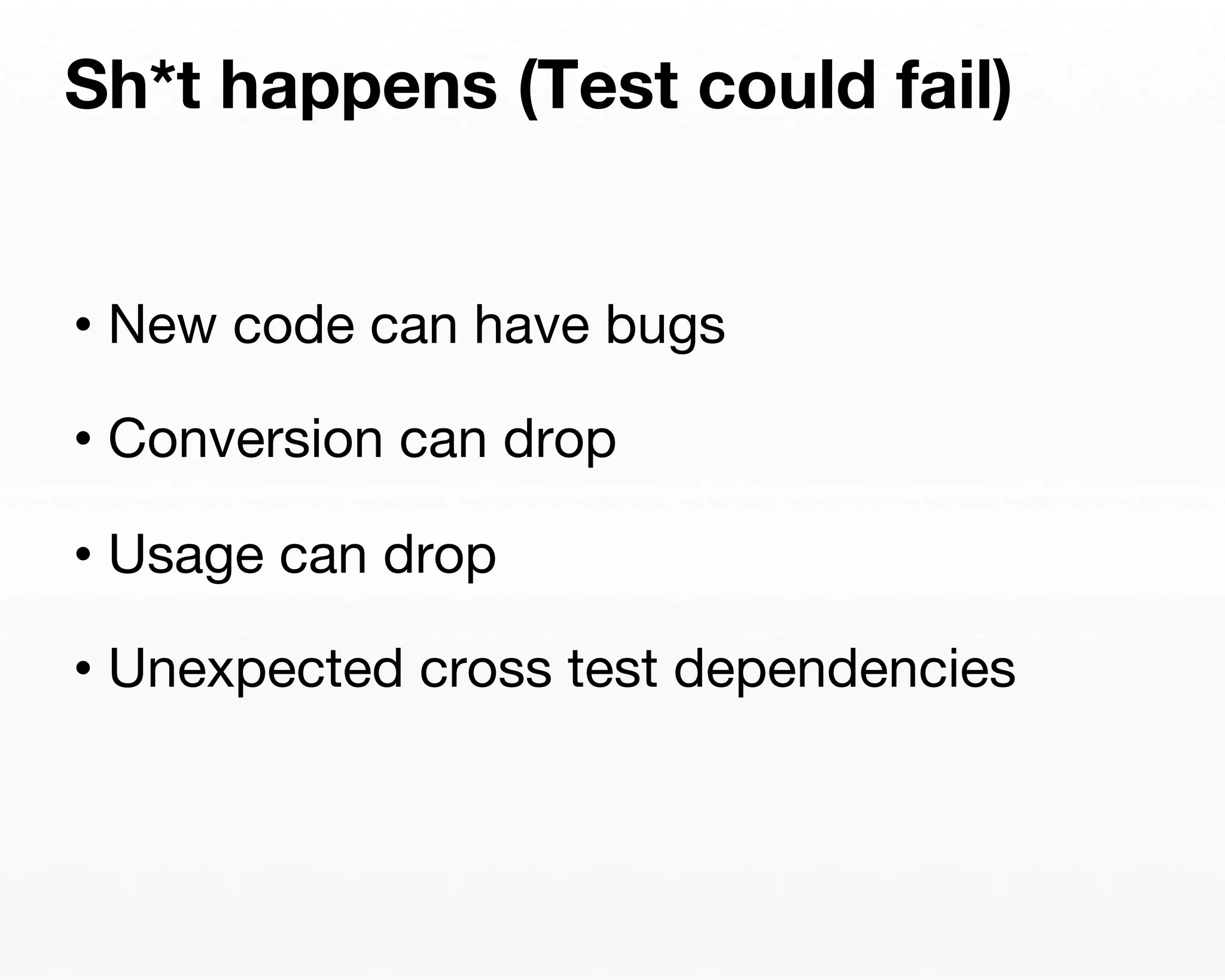

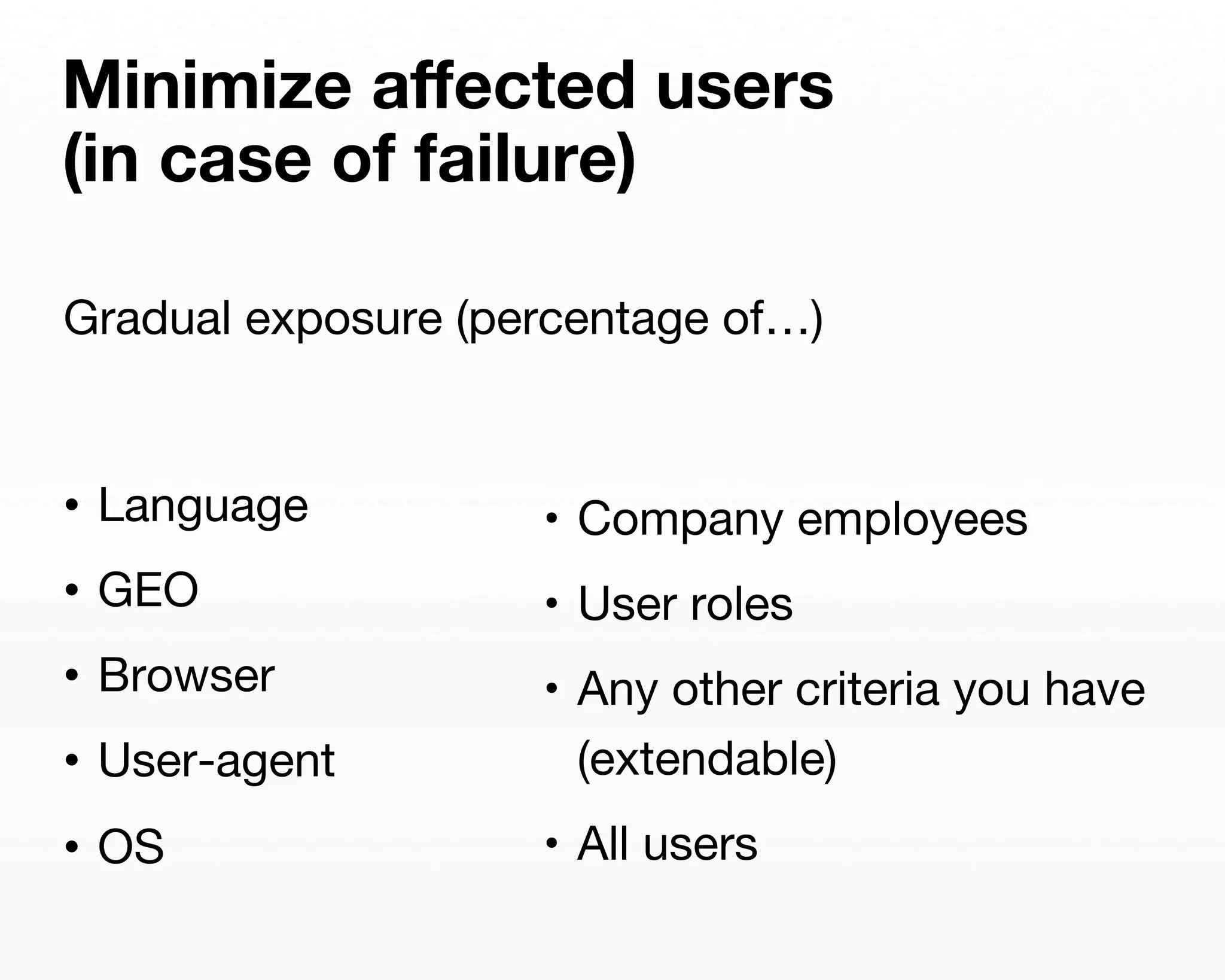

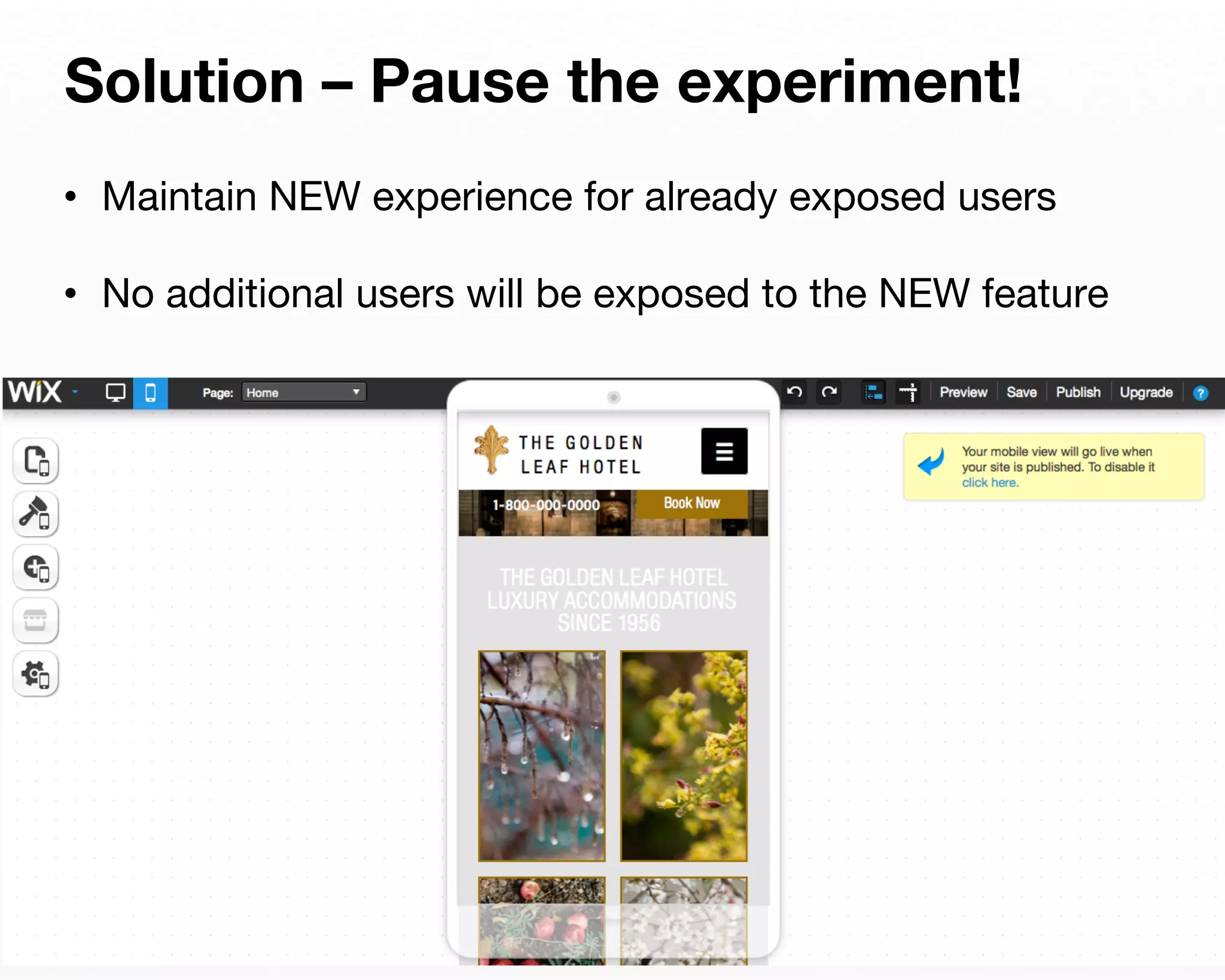

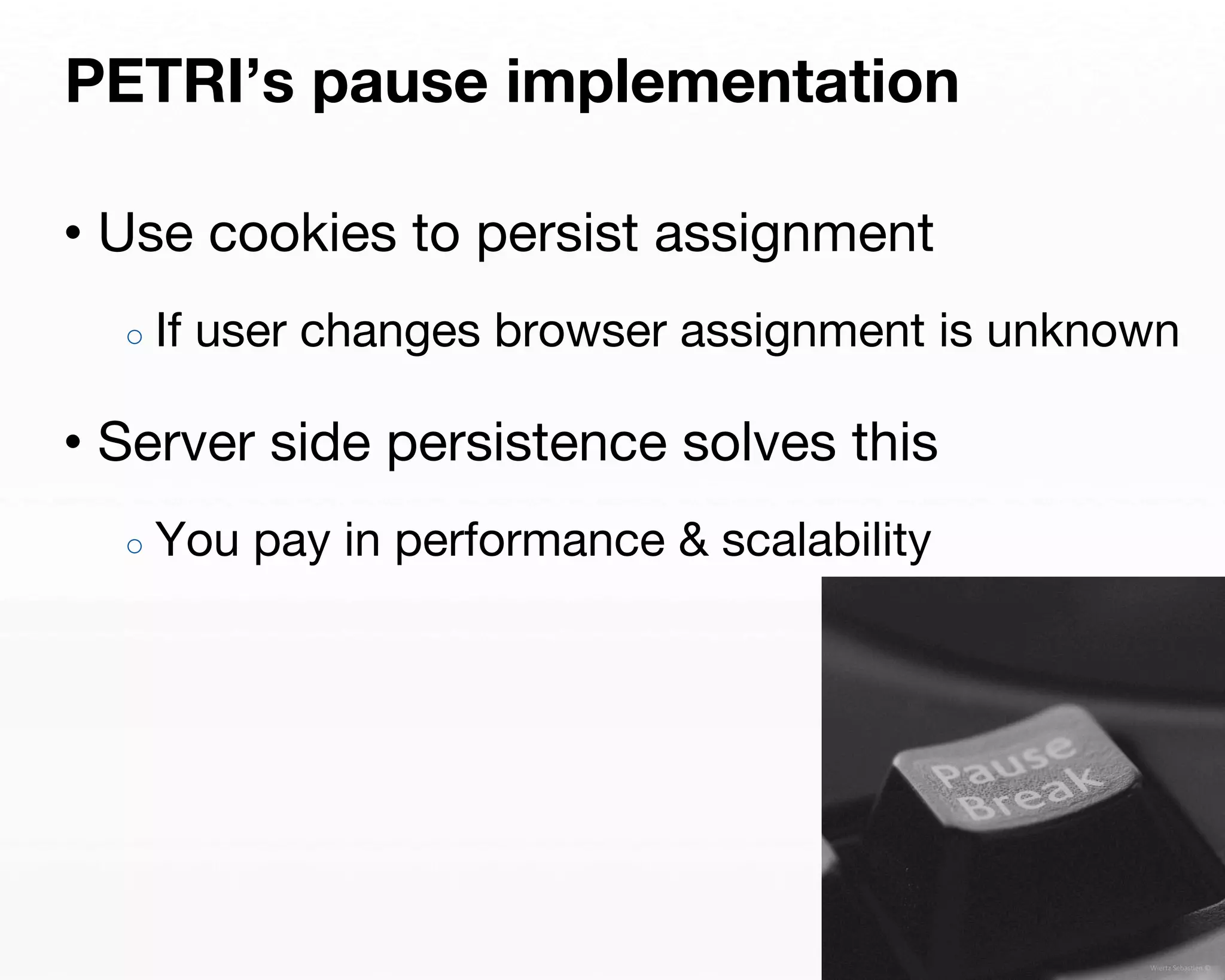

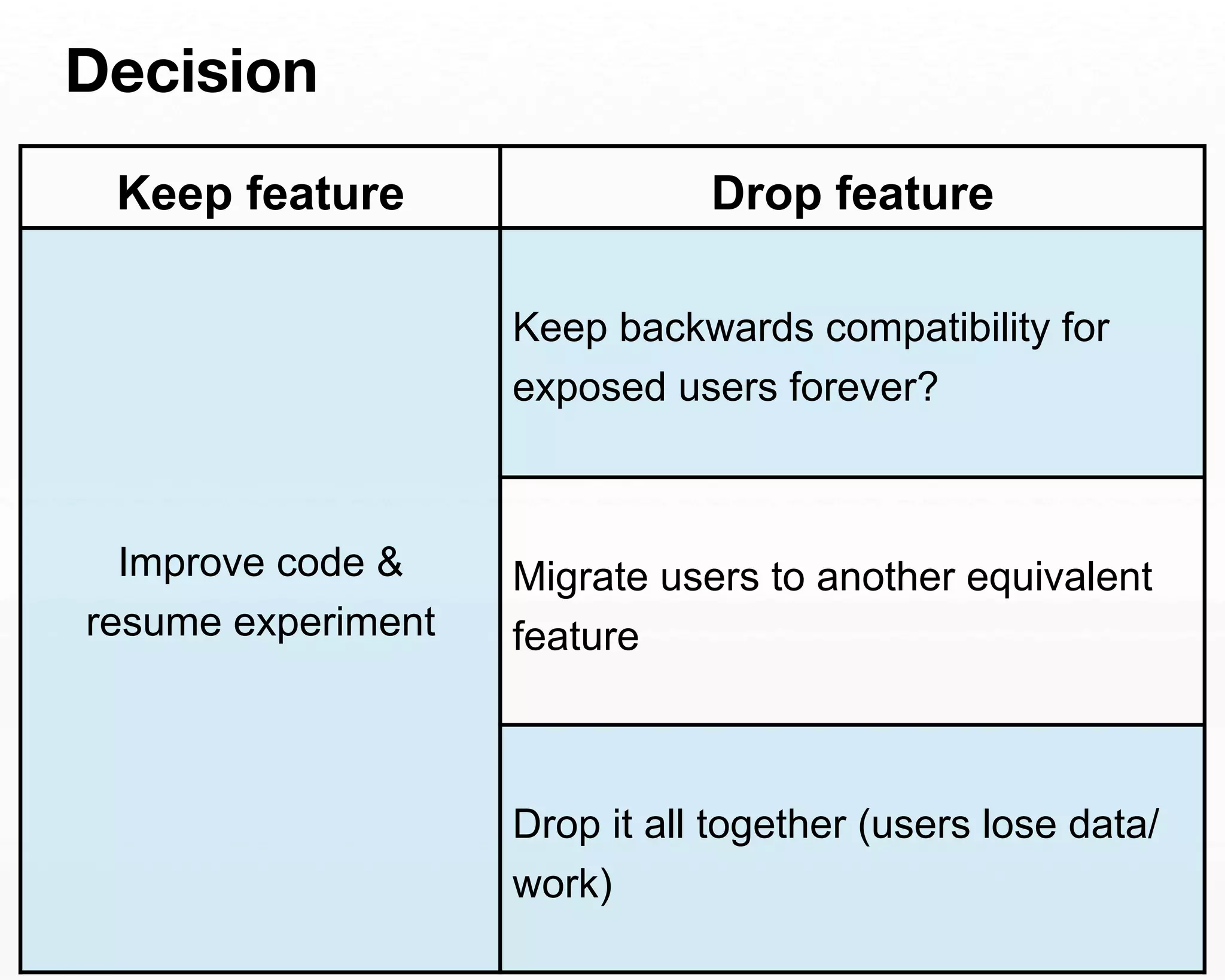

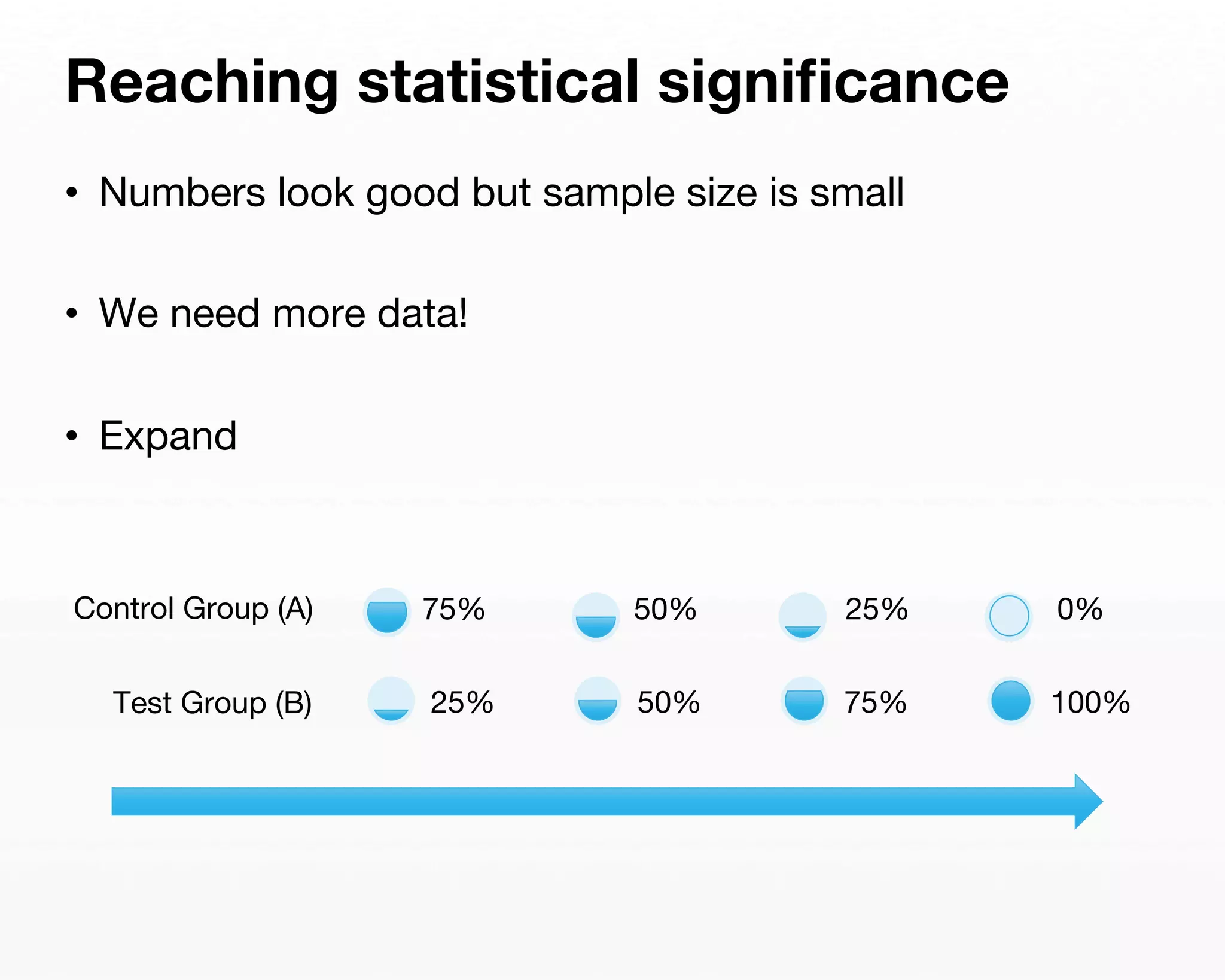

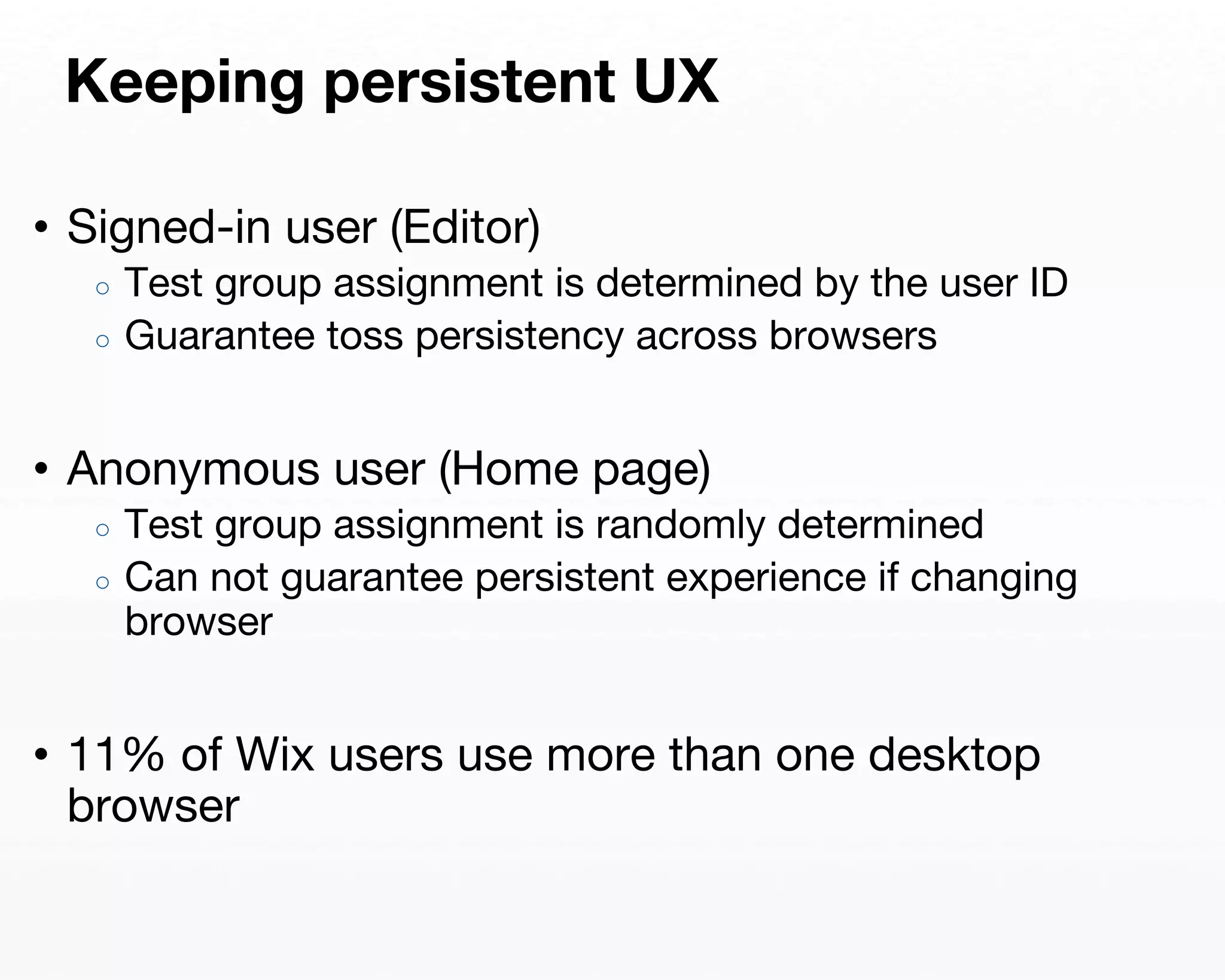

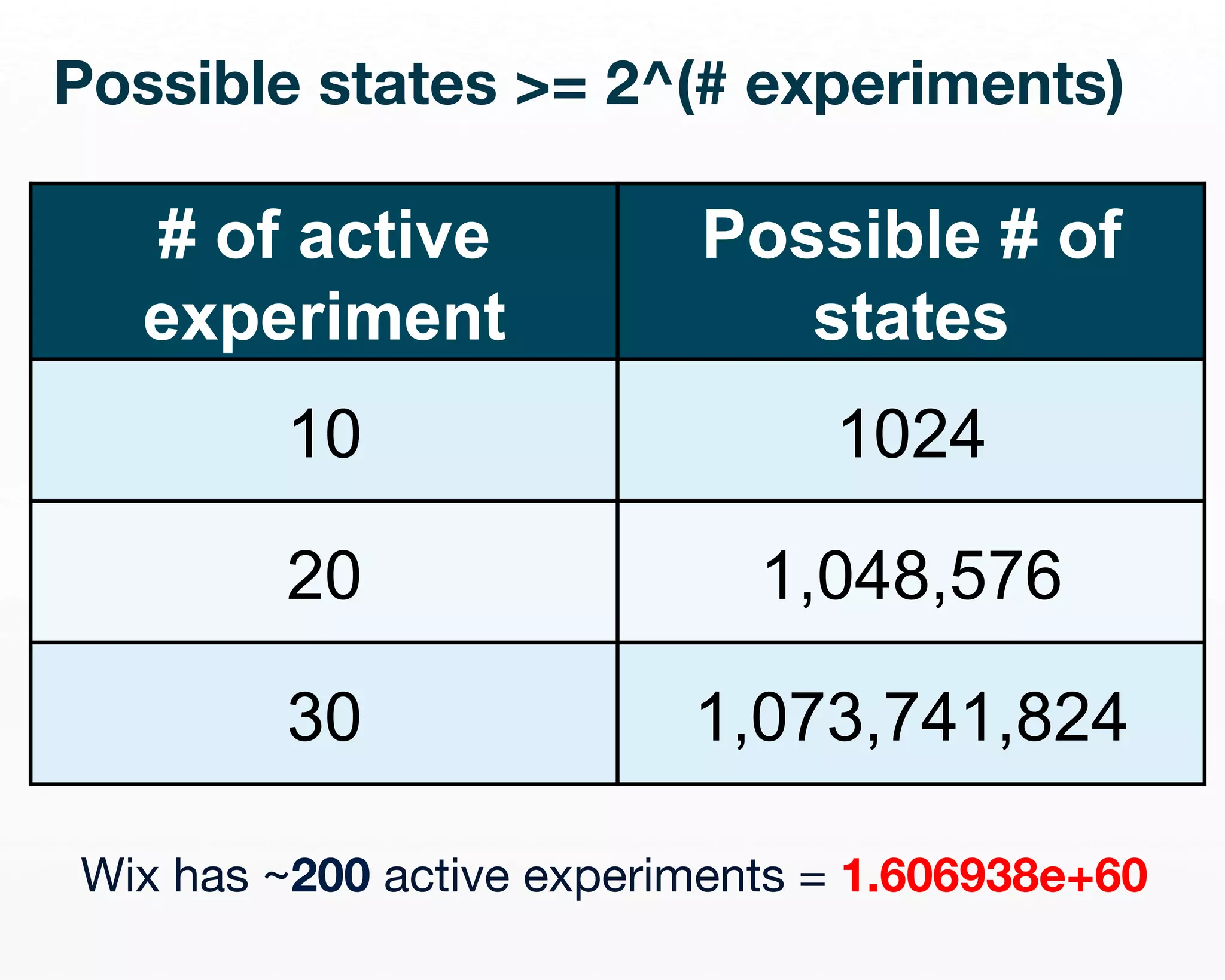

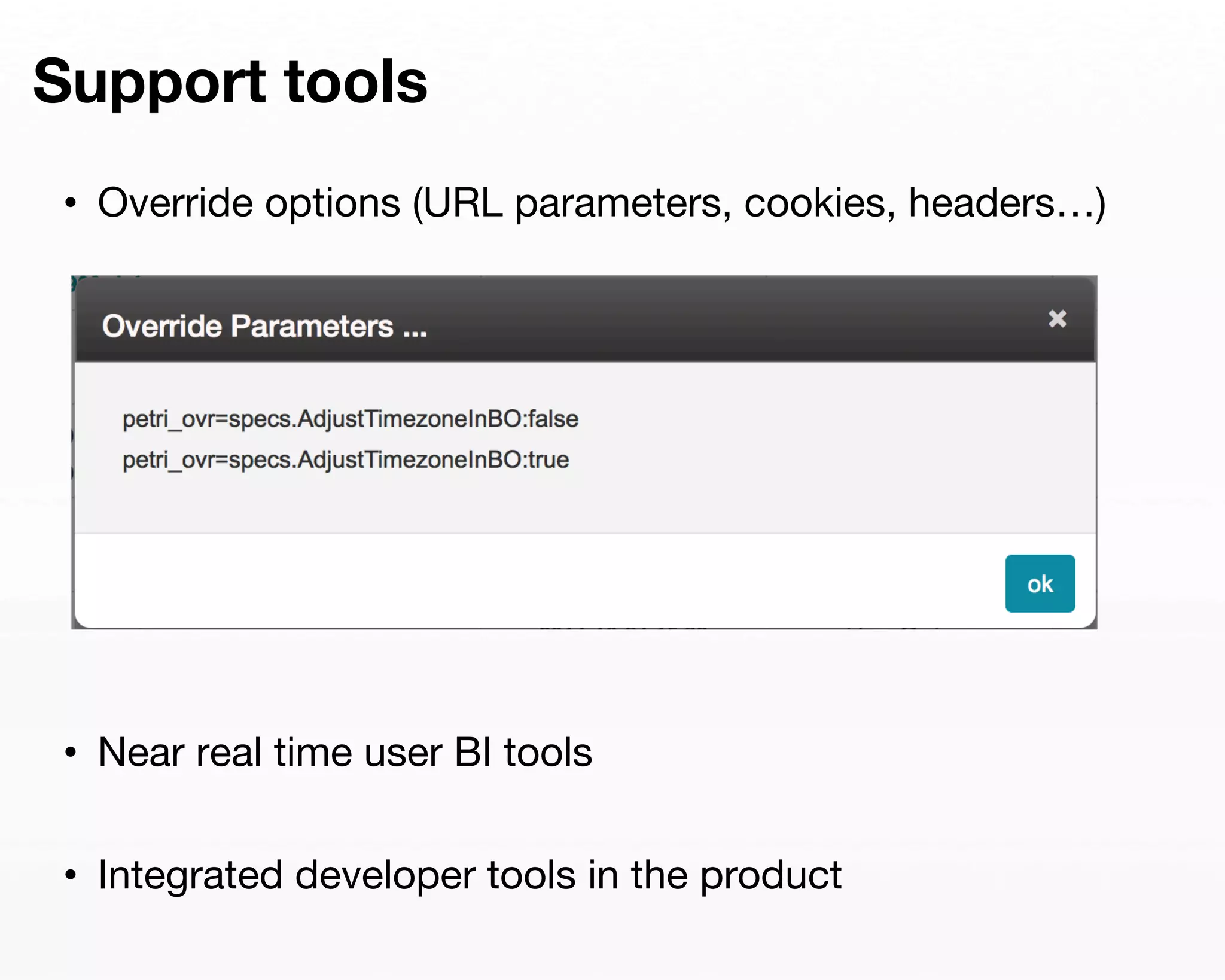

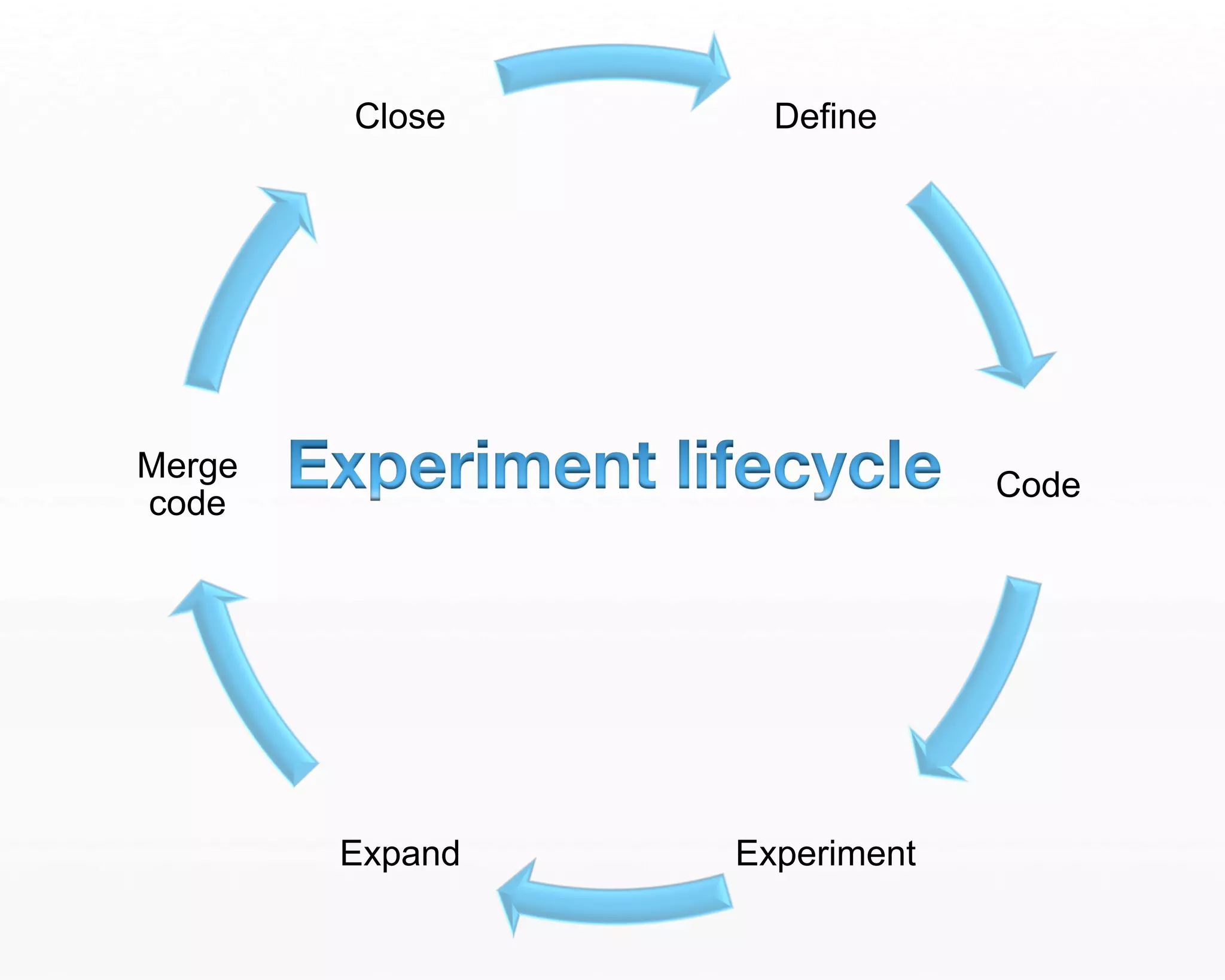

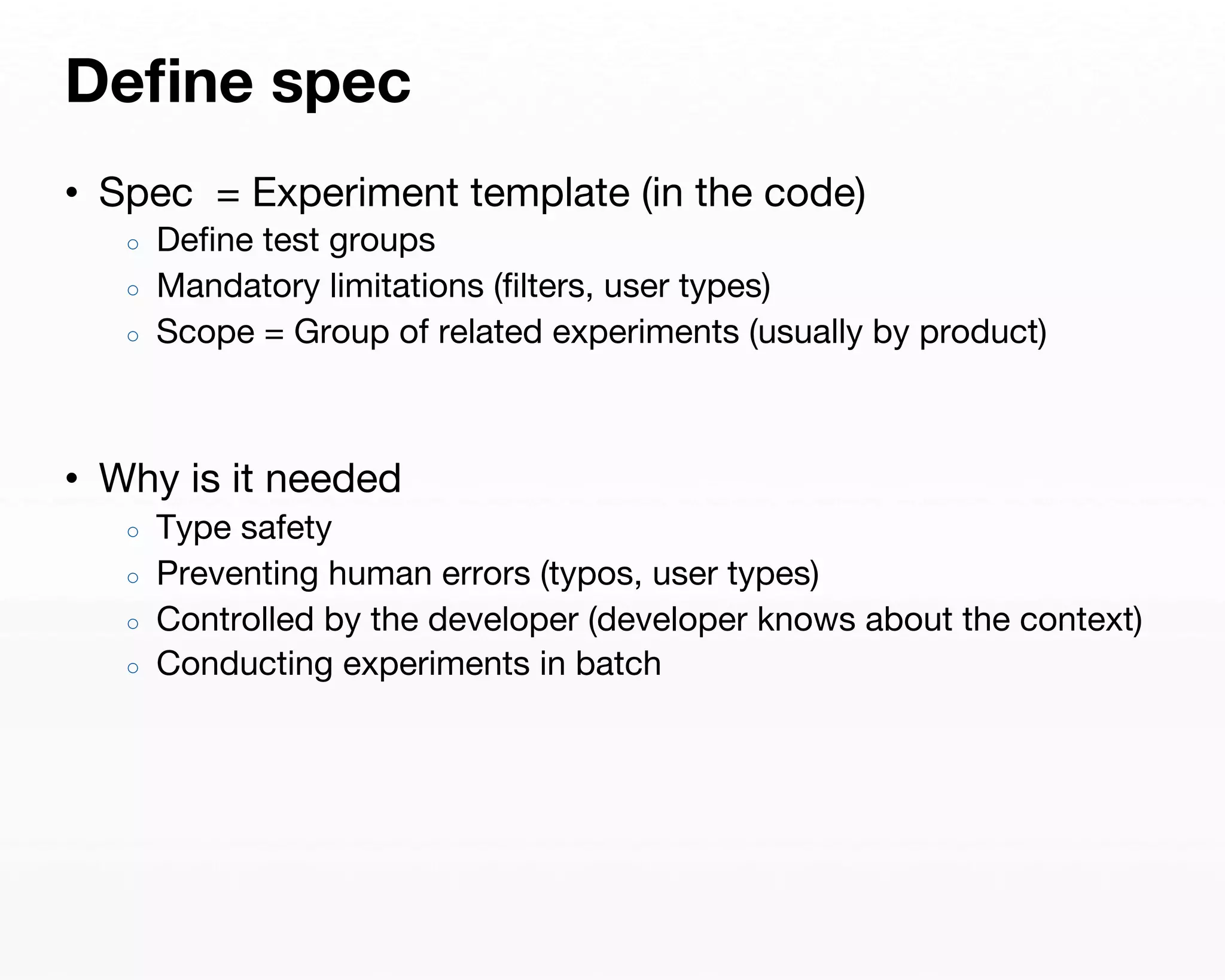

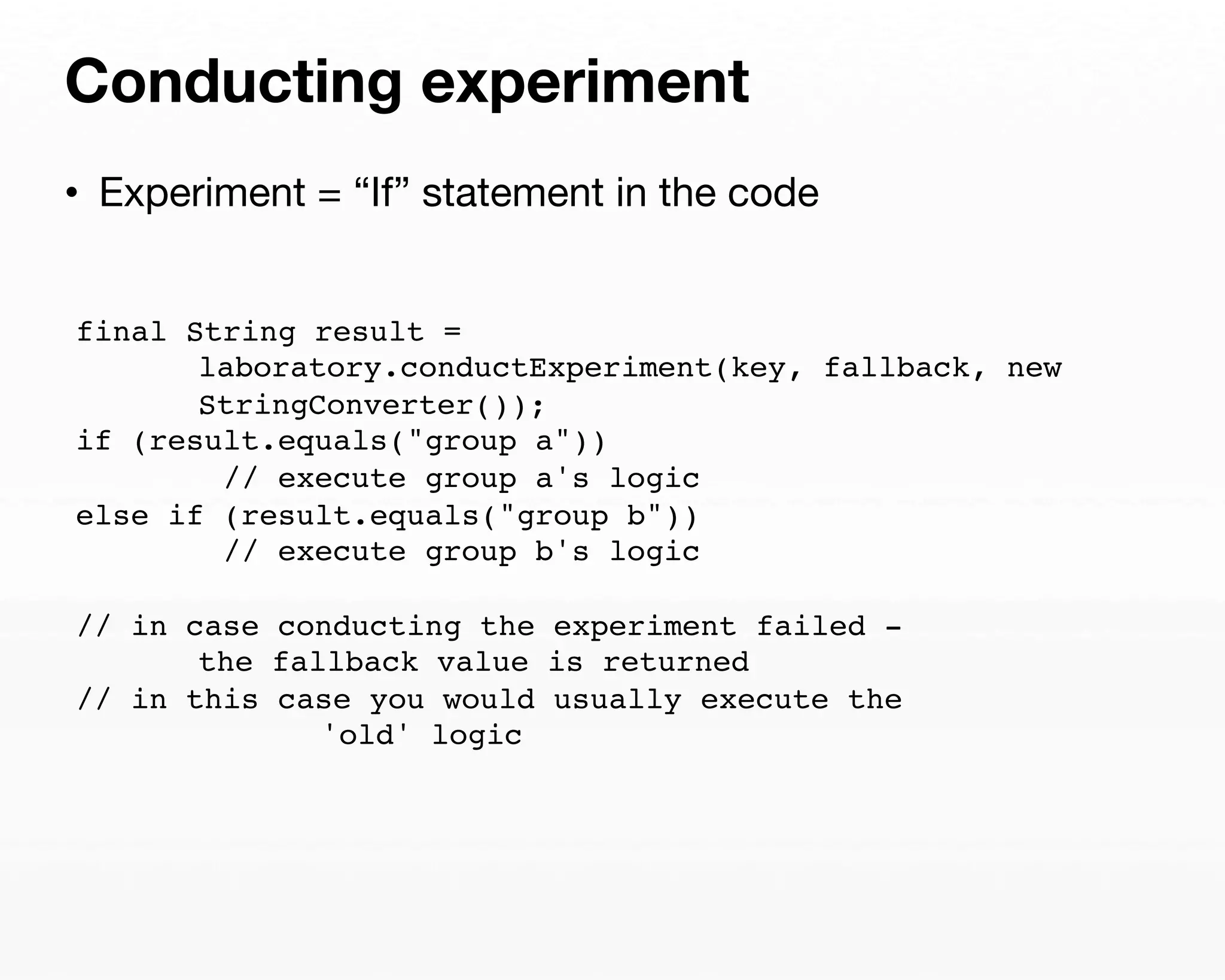

This presentation by Aviran Mordo and Sagy Rozman describes the A/B testing framework used at Wix, which serves over 55 million users and runs its experiments through a system named Petri. It outlines the process of experiment-driven development, the challenges encountered, and best practices for running A/B tests while keeping the user experience consistent and limiting negative impact. It also covers statistical significance, data management, and planned enhancements to Petri, including releasing it as an open-source project.

![Upload spec

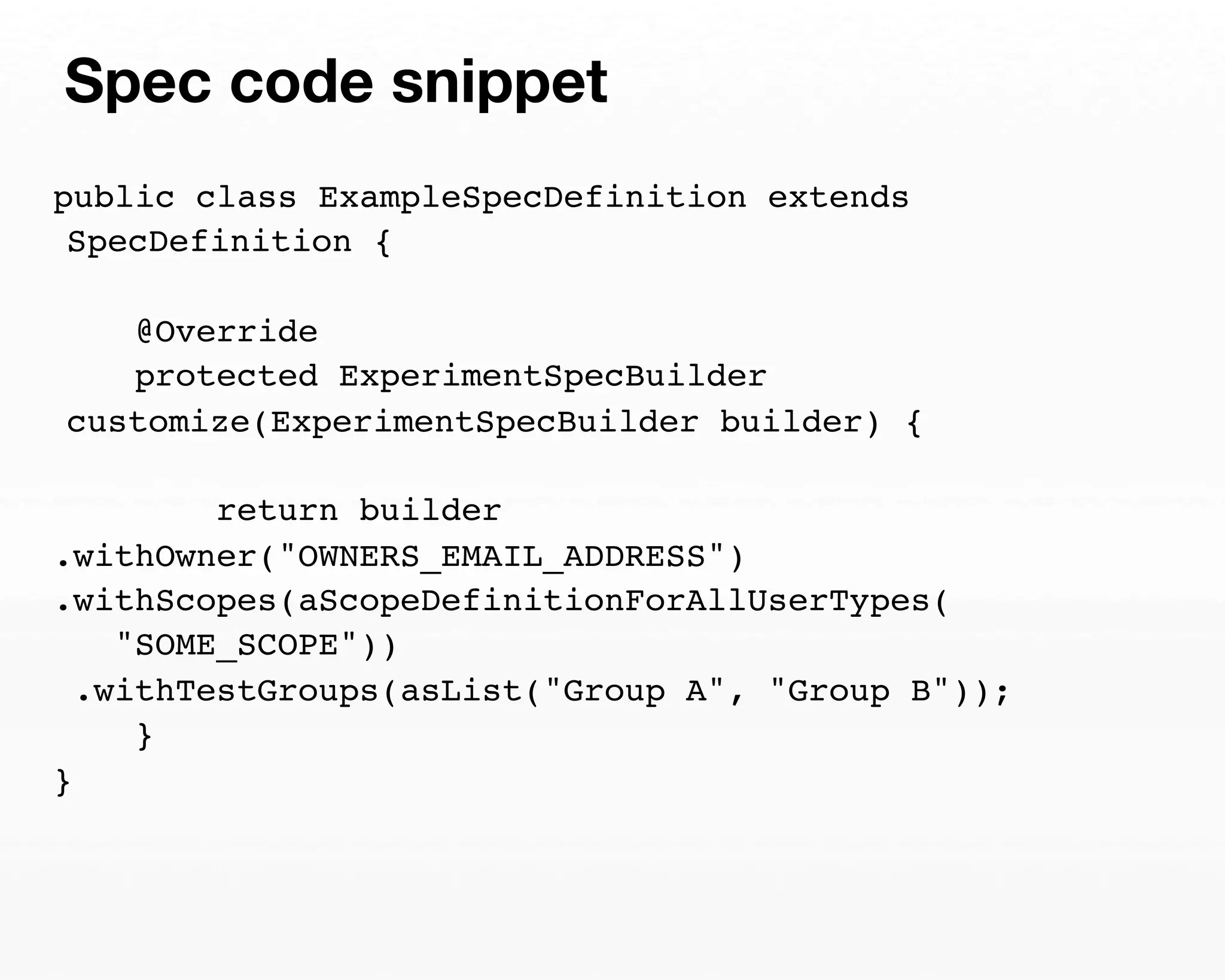

• Upload the specs to Petri server

○ Enables to define an experiment instance

{

"creationDate" : "2014-01-09T13:11:26.846Z",

"updateDate" : "2014-01-09T13:11:26.846Z",

"scopes" : [ {

"name" : "html-editor",

"onlyForLoggedInUsers" : true

}, {

"name" : "html-viewer",

"onlyForLoggedInUsers" : false

} ],

"testGroups" : [ "old", "new" ],

"persistent" : true,

"key" : "clientExperimentFullFlow1",

"owner" : ""

}](https://image.slidesharecdn.com/advanced-ab-testing-devopsdaystlv2014-141203011843-conversion-gate01/75/Advanced-A-B-Testing-at-Wix-Aviran-Mordo-and-Sagy-Rozman-Wix-com-47-2048.jpg)
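
To show how the fields of the spec above fit together, here is a minimal Python sketch that builds the same JSON document from typed objects. It is not part of Petri: the `Scope` and `ExperimentSpec` classes and the rules in `validate` are hypothetical helpers invented for this illustration, and the actual upload call is left out because the slide does not show the server API.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class Scope:
    # Mirrors one entry of the "scopes" array in the slide's spec.
    name: str
    only_for_logged_in_users: bool


@dataclass
class ExperimentSpec:
    # Hypothetical container; field names map onto the JSON keys from the slide.
    key: str
    scopes: List[Scope]
    test_groups: List[str]
    persistent: bool = True
    owner: str = ""
    creation_date: str = ""
    update_date: str = ""

    def validate(self) -> None:
        # Assumed sanity rules for this sketch, not taken from Petri itself.
        if not self.key:
            raise ValueError("spec needs a non-empty key")
        if len(self.test_groups) < 2:
            raise ValueError("an A/B spec needs at least two test groups")
        if len(set(self.test_groups)) != len(self.test_groups):
            raise ValueError("test group names must be unique")

    def to_json(self) -> str:
        # Validate first, then serialize using the key names shown on the slide.
        self.validate()
        payload = {
            "creationDate": self.creation_date,
            "updateDate": self.update_date,
            "scopes": [
                {"name": s.name, "onlyForLoggedInUsers": s.only_for_logged_in_users}
                for s in self.scopes
            ],
            "testGroups": self.test_groups,
            "persistent": self.persistent,
            "key": self.key,
            "owner": self.owner,
        }
        return json.dumps(payload, indent=2)


# Rebuild the spec from the slide.
spec = ExperimentSpec(
    key="clientExperimentFullFlow1",
    scopes=[Scope("html-editor", True), Scope("html-viewer", False)],
    test_groups=["old", "new"],
    creation_date="2014-01-09T13:11:26.846Z",
    update_date="2014-01-09T13:11:26.846Z",
)
spec_json = spec.to_json()
print(spec_json)
```

Checking the spec before it is serialized is a design choice of this sketch: a spec with a single test group or duplicate group names could not describe an A/B comparison, so it is rejected before anything would be sent to a server.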