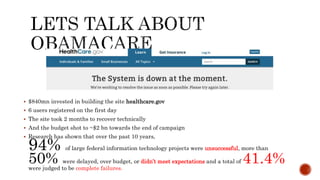

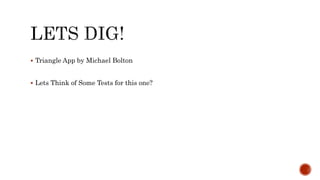

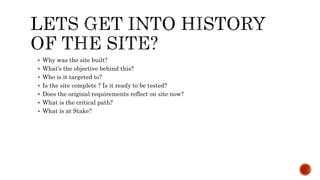

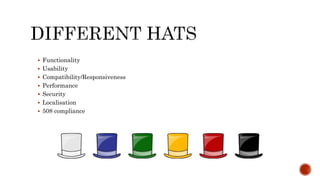

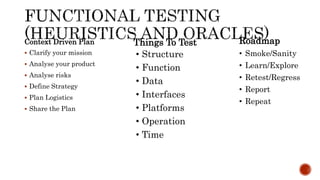

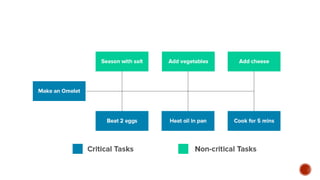

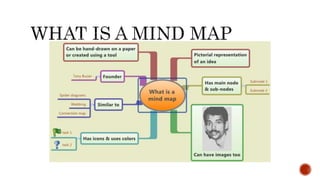

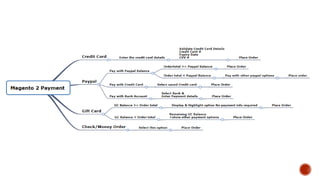

The document discusses testing of the healthcare.gov website, which cost $840 million to build but had only six users register on its first day. The project went over budget to $2 billion and took two months to recover from its technical failures. The document then gives an overview of testing types such as functionality, usability, compatibility, performance, security, localization, and Section 508 (accessibility) compliance testing. It also covers critical path analysis, risk-based testing, and mind mapping, and it walks through testing a web application using the Triangle App as a case study.
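As an illustration of the kind of tests a Triangle App case study involves, here is a minimal sketch. It assumes the app follows the classic triangle-classification exercise: it takes three side lengths and reports the triangle type. The function name `classify_triangle` and the `TEST_CASES` table are hypothetical and are not taken from the document. The cases mix ordinary functional inputs with boundary and negative inputs.

```python
# Hypothetical sketch: assumes the Triangle App follows the classic
# triangle-classification exercise (three side lengths in, a type out).


def classify_triangle(a: float, b: float, c: float) -> str:
    """Classify a triangle by its three side lengths."""
    sides = sorted((a, b, c))
    # Zero or negative lengths can never form a triangle.
    if sides[0] <= 0:
        return "invalid"
    # Triangle inequality: the two shorter sides must sum to more than the longest.
    if sides[0] + sides[1] <= sides[2]:
        return "invalid"
    if a == b == c:
        return "equilateral"
    if a == b or b == c or a == c:
        return "isosceles"
    return "scalene"


# Hypothetical test table: functional, boundary, and negative cases.
TEST_CASES = [
    ((3, 3, 3), "equilateral"),
    ((3, 3, 5), "isosceles"),
    ((5, 3, 3), "isosceles"),   # same lengths, different input order
    ((3, 4, 5), "scalene"),
    ((1, 2, 3), "invalid"),     # boundary: a + b == c is degenerate
    ((0, 4, 4), "invalid"),     # boundary: zero-length side
    ((-1, 2, 2), "invalid"),    # negative input
]

if __name__ == "__main__":
    for sides, expected in TEST_CASES:
        actual = classify_triangle(*sides)
        status = "PASS" if actual == expected else "FAIL"
        print(f"{status}: classify_triangle{sides} -> {actual} (expected {expected})")
```

The degenerate case (1, 2, 3) and the zero-length case sit right on the edge of the triangle inequality. Boundary inputs like these are where classification logic most often fails.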