The document examines the concept of information through the lens of three perspectives: Fred Dretske's semantic theory, Peter Kosso's interaction-information in scientific observation, and the syntactic approach by Thomas Cover and Joy Thomas. It argues for a clear terminological distinction among different concepts of information to prevent confusion in related areas such as knowledge and observation. The analysis highlights the complexities of defining information and emphasizes the need for a precise understanding given its wide application in various domains.

![108 OLIMPIA LOMBARDI

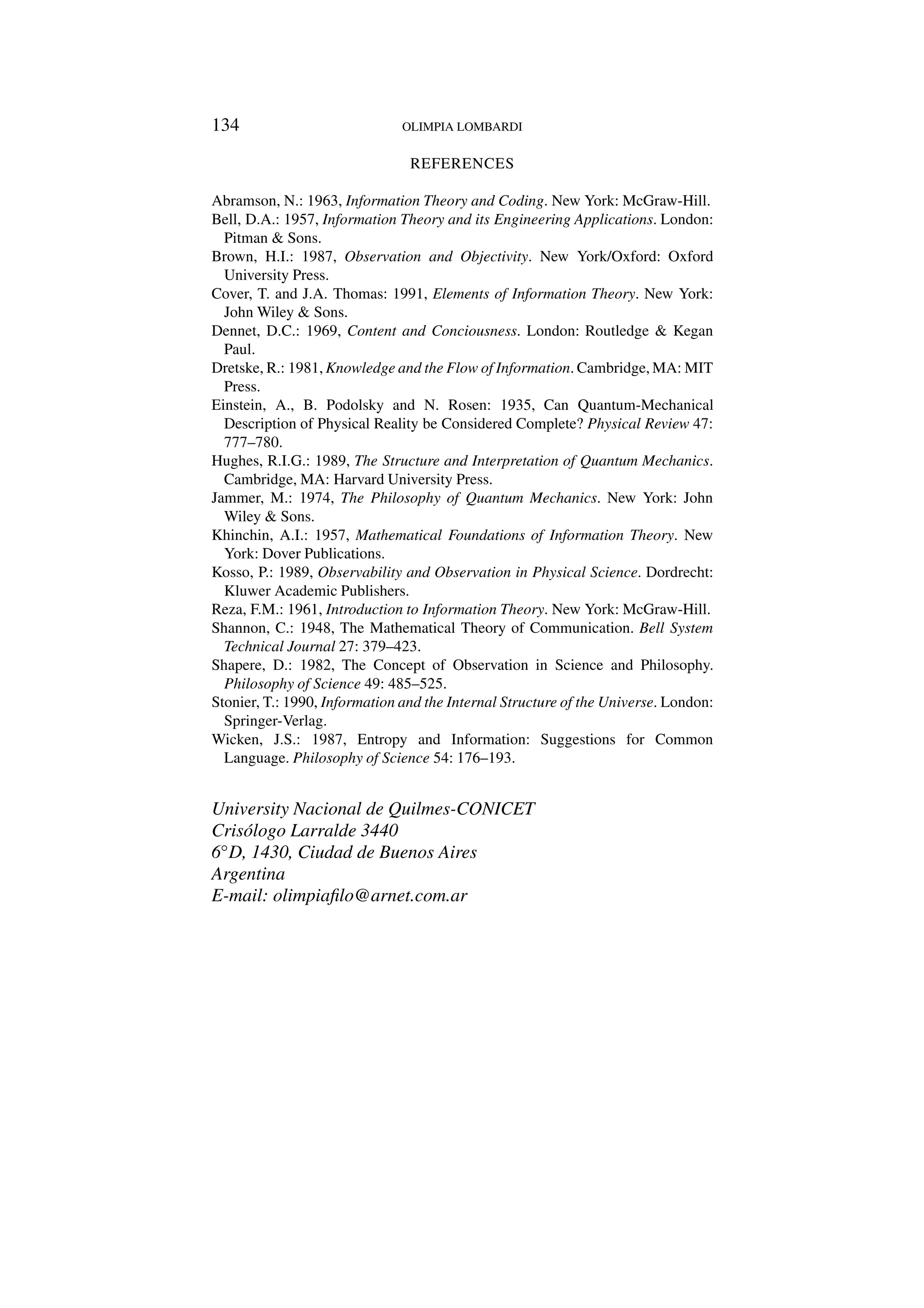

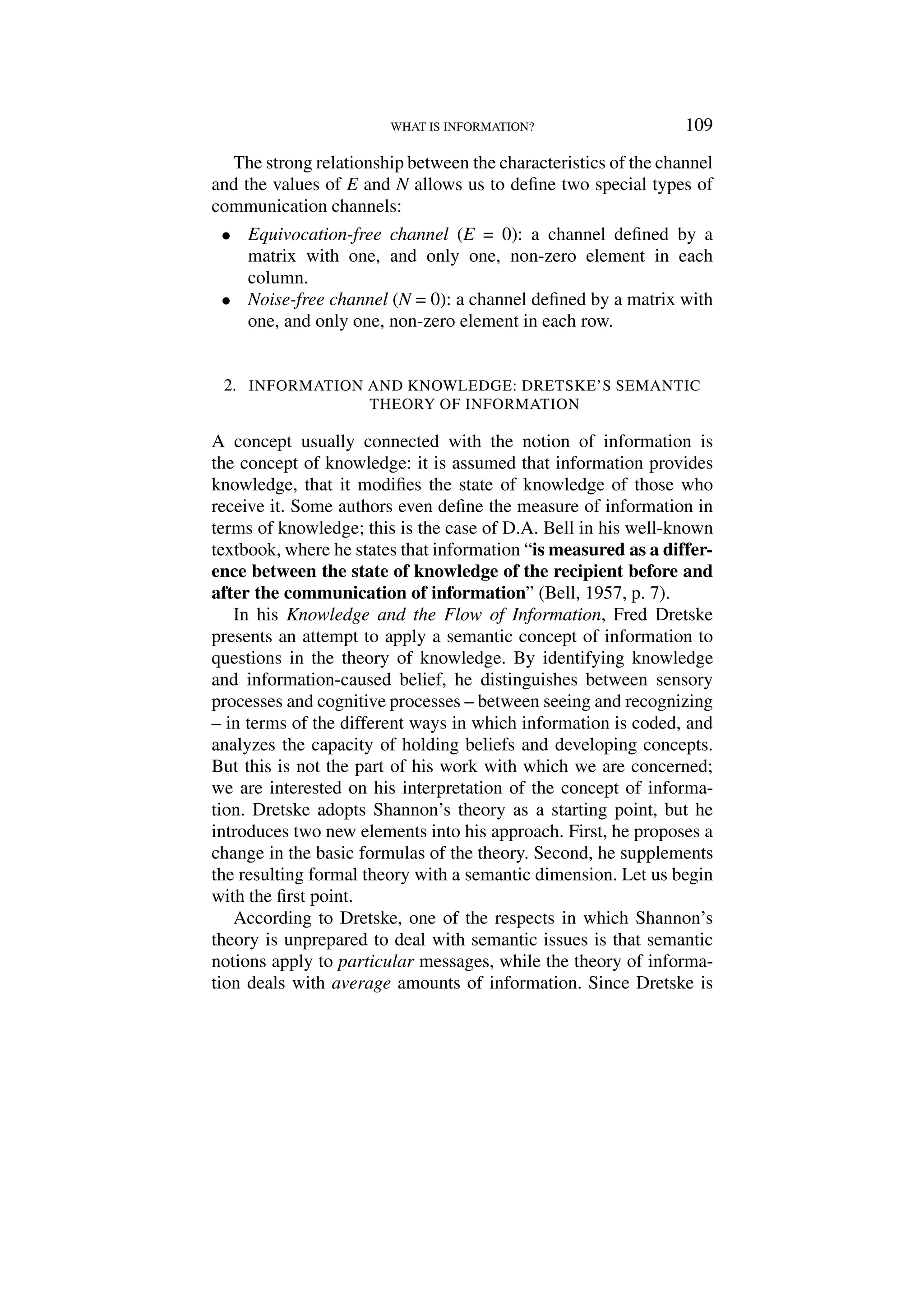

• I(S, R): transinformation. Average amount of information generated at S and received at R.

• E: equivocation. Average amount of information generated at S but not received at R.

• N: noise. Average amount of information received at R but not generated at S.

As the diagram shows, I(S, R) can be computed as:

I(S, R) = I(S) − E = I(R) − N

(1.5)

E and N are measures of the amount of dependence between the source S and the receiver R:

• If S and R are totally independent, the values of E and N are maximum (E = I(S) and N = I(R)), and the value of I(S, R) is minimum (I(S, R) = 0).

• If the dependence between S and R is maximum, the values of E and N are minimum (E = N = 0), and the value of I(S, R) is maximum (I(S, R) = I(S) = I(R)).

The values of E and N are functions not only of the source and the receiver, but also of the communication channel. The introduction of the communication channel leads directly to the possibility of errors arising in the process of transmission: the channel CH is defined by the matrix [p(rj /si)], where p(rj /si) is the conditional probability of the occurrence of rj given that si occurred, and the elements in any row must sum to 1. Thus, the definitions of E and N are:

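$$E = \sum_{i,j} p(r_j)\, p(s_i/r_j) \log \frac{1}{p(s_i/r_j)} = \sum_{i,j} p(r_j, s_i) \log \frac{1}{p(s_i/r_j)} \qquad (1.6)$$

$$N = \sum_{i,j} p(s_i)\, p(r_j/s_i) \log \frac{1}{p(r_j/s_i)} = \sum_{i,j} p(s_i, r_j) \log \frac{1}{p(r_j/s_i)} \qquad (1.7)$$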

where p(si, rj ) = p(rj , si) is the joint probability of si and rj (p(si,

rj ) = p(si)p(rj /si); p(rj , si) = p(rj )p(si/rj)). The channel capacity is

given by:

C = max I(S, R)

(1.8)

where the maximum is taken over all the possible distributions p(si)

at the source.4](https://image.slidesharecdn.com/whatisinformation-230307014433-911d5d68/75/What_is_Information-pdf-5-2048.jpg)
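The relations (1.5) to (1.7) can be checked numerically. The following is a minimal sketch, not part of the original text: the function name `channel_quantities` is hypothetical, logarithms are taken in base 2, and I(S) and I(R) are assumed to be the usual Shannon averages of log 1/p over the source and receiver distributions.

```python
import math


def channel_quantities(p_s, channel):
    """Return (I_S, I_R, E, N, I_SR) in bits.

    p_s[i] is p(s_i); channel[i][j] is p(r_j / s_i), each row summing to 1.
    """
    n_s, n_r = len(p_s), len(channel[0])
    # Joint probabilities p(s_i, r_j) = p(s_i) p(r_j / s_i).
    joint = [[p_s[i] * channel[i][j] for j in range(n_r)] for i in range(n_s)]
    # Receiver probabilities p(r_j) obtained by summing over the source states.
    p_r = [sum(joint[i][j] for i in range(n_s)) for j in range(n_r)]

    # Assumed Shannon averages for I(S) and I(R).
    i_s = sum(p * math.log2(1 / p) for p in p_s if p > 0)
    i_r = sum(p * math.log2(1 / p) for p in p_r if p > 0)

    e = 0.0  # equivocation, equation (1.6)
    n = 0.0  # noise, equation (1.7)
    for i in range(n_s):
        for j in range(n_r):
            if joint[i][j] > 0:
                # 1 / p(s_i / r_j) = p(r_j) / p(s_i, r_j)
                e += joint[i][j] * math.log2(p_r[j] / joint[i][j])
                n += joint[i][j] * math.log2(1 / channel[i][j])
    # Transinformation from equation (1.5): I(S, R) = I(S) - E.
    return i_s, i_r, e, n, i_s - e


# S and R totally independent: every row of the channel matrix is the same.
independent = channel_quantities([0.5, 0.5], [[0.5, 0.5], [0.5, 0.5]])
# Maximum dependence: each source state fixes exactly one receiver state.
perfect = channel_quantities([0.5, 0.5], [[1.0, 0.0], [0.0, 1.0]])
# An intermediate, illustrative case with 10% of symbols flipped.
noisy = channel_quantities([0.5, 0.5], [[0.9, 0.1], [0.1, 0.9]])
```

Under these assumptions the independent case gives E = I(S), N = I(R) and I(S, R) = 0, the perfect case gives E = N = 0 and I(S, R) = I(S) = I(R), and in the intermediate case I(S) − E and I(R) − N coincide, as (1.5) requires.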

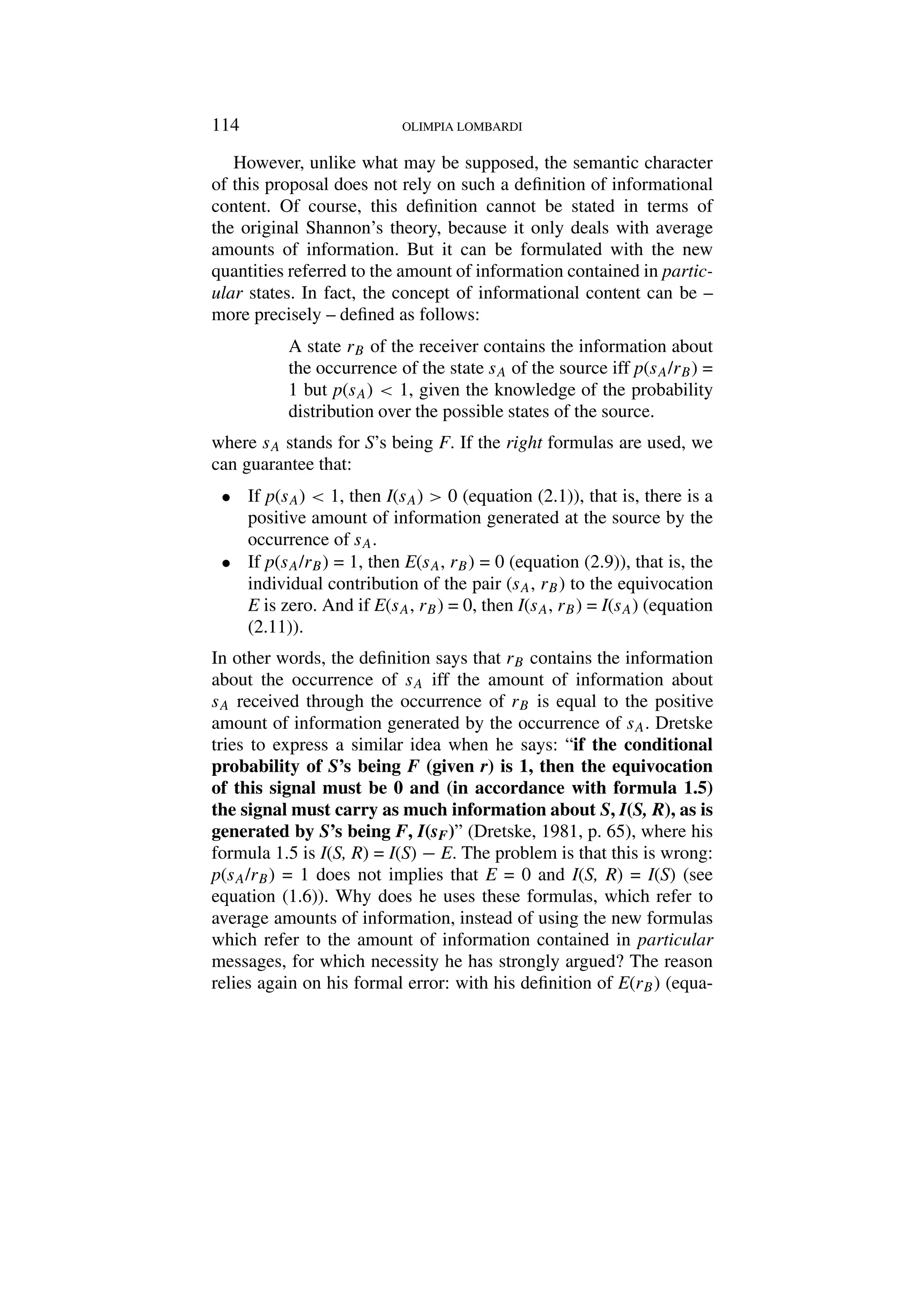

![WHAT IS INFORMATION? 113

equal to the amount of information I(si) generated at the source by the occurrence of si. This means that there was no loss of information through the individual communication, that is, the value of the individual contribution E(si, rj ) to the equivocation is zero (Dretske, 1981, p. 55); according to equation (2.11):

E(si, rj ) = 0 ⇒ I(si, rj ) = I(si)

But now the value of E(si, rj ) must be obtained with the correct formula (2.9). At this point, it should be emphasized again that, contrary to Dretske’s assumption, the individual contribution to the equivocation is a function of the communication channel and not only of the receiver. In other words, it is not the state rj which individually contributes to the equivocation E but the pair (si, rj ), with its associated probabilities p(si) and p(rj ), and the corresponding conditional probability p(rj /si) of the channel. This means that we can get completely reliable information (we can get knowledge) about the source even through a very improbable state of the receiver, provided that the channel is appropriately designed.

But Dretske does not stop here. Despite having begun from the formal theory of information, he immediately reminds us of Shannon’s remark: “[the] semantic aspects of communication are irrelevant to the engineering problem. The significant aspect is that the actual message is one selected from a set of possible messages” (Shannon, 1948, p. 379). Shannon’s theory is purely quantitative: it deals only with amounts of information and ignores questions related to informational content. The main contribution of Dretske is his semantic theory of information, which tries to capture what he considers the core sense of the term ‘information’: “A state of affairs contains information about X to just that extent to which a suitably placed observer could learn something about X by consulting it” (Dretske, 1981, p. 45). Dretske defines the informational content of a state r in the following terms (p. 65):

A state r carries the information that S is F = The conditional probability of S’s being F, given r (and k), is 1 (but, given k alone, less than 1).

where k stands for what the receiver already knows about the

possibilities existing at the source.](https://image.slidesharecdn.com/whatisinformation-230307014433-911d5d68/75/What_is_Information-pdf-10-2048.jpg)
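The claim that a very improbable receiver state can still carry completely reliable information can be illustrated with a small numerical sketch. This is not part of the original text: the function names and the channel matrix are hypothetical, and the background knowledge k is modeled, as a simplifying assumption, by nothing more than the prior p(si) at the source.

```python
import math


def backward_probability(p_s, channel, i, j):
    """Return p(s_i / r_j) by Bayes' rule; channel[i][j] is p(r_j / s_i)."""
    joint_ij = p_s[i] * channel[i][j]
    p_rj = sum(p_s[k] * channel[k][j] for k in range(len(p_s)))
    if p_rj == 0:
        raise ValueError("receiver state r_j never occurs")
    return joint_ij / p_rj


def carries_information(p_s, channel, i, j):
    """Check the two clauses of the definition for 'the source is in s_i'.

    Assumption: k is represented only by the prior p(s_i).
    """
    certain_given_r = math.isclose(backward_probability(p_s, channel, i, j), 1.0)
    uncertain_given_k = p_s[i] < 1.0
    return certain_given_r and uncertain_given_k


# Hypothetical channel: r_0 is rare, but only s_0 can produce it.
p_s = [0.5, 0.5]
channel = [
    [0.02, 0.98, 0.0],  # p(r_j / s_0)
    [0.0, 0.5, 0.5],    # p(r_j / s_1)
]
p_r0 = sum(p_s[k] * channel[k][0] for k in range(len(p_s)))  # probability of r_0
rare_state_informs = carries_information(p_s, channel, 0, 0)
ambiguous_state_informs = carries_information(p_s, channel, 0, 1)
```

In this sketch r_0 occurs with probability 0.01, yet its occurrence settles that s_0 occurred, whereas the much more probable r_1 can arise from either source state and so fails the first clause of the definition.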

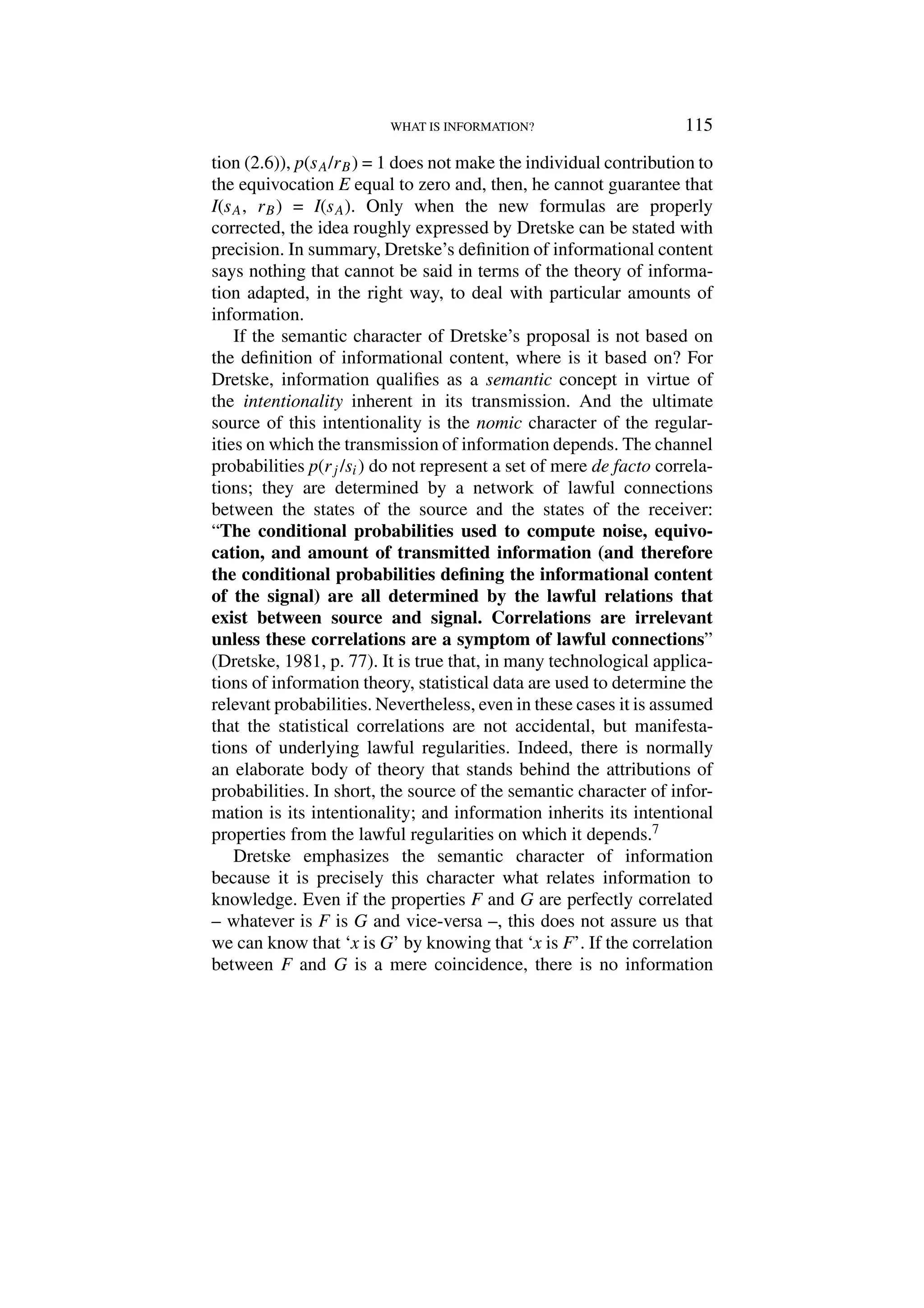

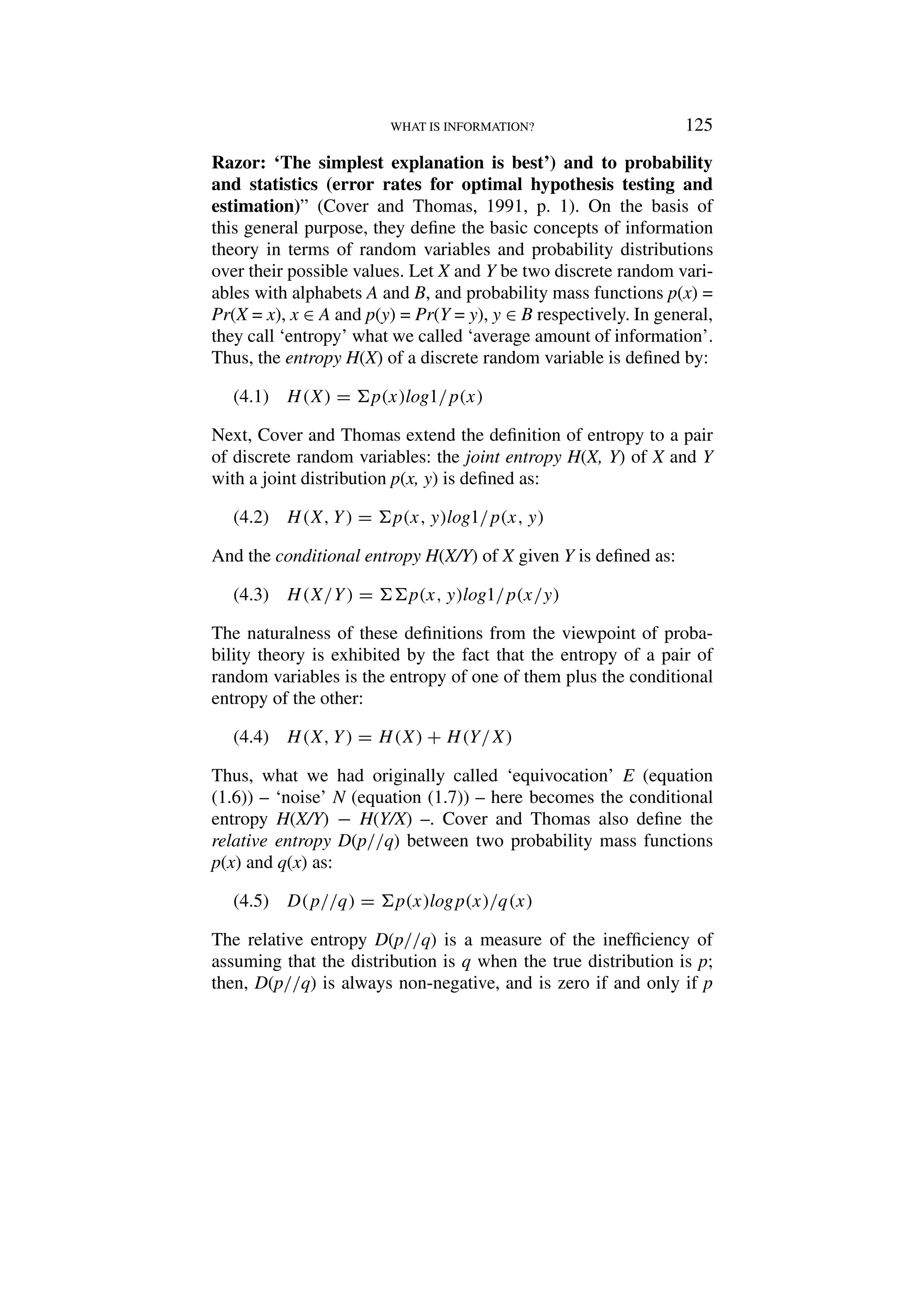

![

A source S is transmitting information to both receivers RA and RB via some physical channel. RA and RB are isolated from one another in the sense that there is no physical interaction between them. But Dretske considers that, even though RA and RB are physically isolated from one another, there is an informational link between them. According to Dretske, it is correct to say that there is a communication channel between RA and RB because it is possible to learn something about RB by looking at RA and vice versa. Nothing at RA causes anything at RB or vice versa; yet RA contains information about RB and RB about RA. Dretske stresses the fact that the correlations between the events occurring at both receivers are not accidental, but are functions of the common nomic dependencies of RA and RB on S. Nevertheless, for him this is an example of an informational link between two points, despite the absence of a physical channel between them. Dretske adds that the receiver RB may be farther from the source than RA, so that the events at RB may occur later in time than those at RA, but this is irrelevant for evaluating the informational link between them: even though the events at RB occur later, RA carries information about what will happen at RB. In short: “from a theoretical point of view [. . .] the communication channel may be thought of as simply the set of depending relations between S and R. If the statistical relations defining equivocation and noise between S and R are appropriate, then there is a channel between these two points, and information passes between them, even if there is no direct physical link joining S with R” (Dretske, 1981, p. 38).

As we have seen, in his interaction-information account of scientific observation, Kosso asserts that interaction is not a sufficient condition for information flow. But he also claims that: “observation must involve interaction. Interaction between x and an](https://image.slidesharecdn.com/whatisinformation-230307014433-911d5d68/75/What_is_Information-pdf-17-2048.jpg)

![

or absence –, but we do not observe the state at B; such a state is

inferred.

This discussion shows that it is possible to agree on the formal theory of information, and even on some interpretative points, and yet to disagree about the very nature of information. Information may be conceived as a semantic item, whose essential property is its capability of providing knowledge. But information may also be regarded as a physical entity ruled and constrained by natural laws.

4. THE SYNTACTIC APPROACH OF COVER AND THOMAS

The physical view of information has been the most widespread view in the physical sciences. Perhaps this is due to the specific technological problems which led to Shannon’s original theory: the main interest of communication engineers was, and still is, to optimize the transmission of information by means of physical signals, whose energy and bandwidth are constrained by technological and economic limitations. In fact, the physical view of information is the most usual one in the textbooks on the subject used in engineers’ training. However, this situation has been changing in recent times: some very popular textbooks introduce information theory in a completely syntactic way, with no mention of sources, receivers or signals. Only when the syntactic concepts and their mathematical properties have been presented is the theory applied to the traditional case of signal transmission.

Perhaps the best example of this approach is the presentation offered by Thomas Cover and Joy Thomas in their book Elements of Information Theory (1991).11 From the very beginning of this book, the authors clearly explain their perspective: “Information theory answers two fundamental questions in communication theory: what is the ultimate data compression [. . .] and what is the ultimate transmission rate of communication [. . .]. For this reason some consider information theory to be a subset of communication theory. We will argue that it is much more. Indeed, it has fundamental contributions to make in statistical physics (thermodynamics), computer sciences (Kolmogorov complexity

or algorithmic complexity), statistical inference (Occam’s](https://image.slidesharecdn.com/whatisinformation-230307014433-911d5d68/75/What_is_Information-pdf-21-2048.jpg)

![

5. Dretske uses Is(r) for the transinformation and Is(ra) for the new individual

transinformation. We have adapted Dretske’s terminology in order to bring it

closer to the most usual terminology in this field.

6. In fact, the right-hand term of (2.7), when (2.5) and (2.6) are used, is: $\sum_{i,j} p(s_i, r_j)\, I(s_i, r_j) = \sum_{i,j} p(s_i, r_j)\,[I(s_i) - E(r_j)] = \sum_{i,j} p(s_i, r_j) \log \frac{1}{p(s_i)} - \sum_{i,j} p(s_i, r_j) \sum_{k} p(s_k/r_j) \log \frac{1}{p(s_k/r_j)}$. Perhaps Dretske made this mistake by misusing the subindices of the summations.

7. Dretske says that, in this context, it is not relevant to discuss where the intentional character of laws comes from: “For our purpose it is not important where natural laws acquire this puzzling property. What is important is that they have it” (Dretske, 1981, p. 77).

8. I have argued for this view of scientific observation elsewhere (Lombardi, “Observación e Información”, forthcoming in Analogia): if we want every state of the receiver to let us know which state of the observed entity occurred, the so-called “backward probabilities” p(si/rj ) (cf. Abramson, 1963, p. 99) must have the value 0 or 1, and this happens in an equivocation-free channel. This explains why noise does not prevent observation: indeed, practical situations usually include noisy channels, and much technological effort is devoted to designing appropriate filters to block the noise-bearing spurious signal. I have also argued that, unlike the informational account of observation, the causal account does not allow us to recognize (i) situations that are observationally equivalent but causally different, and (ii) situations that are physically (and, hence, causally) identical but informationally different and which, for this reason, represent different cases of observation.

9. The experiment included in the well-known article of Einstein, Podolsky and

Rosen (1935).

10. Note that this kind of situation does not always involve a common cause. In Dretske’s example of the source transmitting to two receivers, the correlations between RA and RB can be explained by a common cause at S. But it is generally accepted that quantum EPR correlations cannot be explained by means of a common-cause argument (cf. Hughes, 1989). However, in both cases the correlations depend on underlying nomic regularities.

11. This does not mean that Cover and Thomas are absolutely original. For example, Reza (1961, p. 1) considers information theory as a new chapter of the theory of probability; however, his presentation of the subject follows the orthodox way of presentation in terms of communication and signal transmission. An author who adopts a completely syntactic approach is Khinchin (1957); nevertheless, his text is not as rich in applications as the book of Cover and Thomas and was not as widely used.

12. In the definition of D(p//q), the convention – based on continuity arguments

– that 0 log 0/q = 0 and p log p/0 = ∞ is used. D(p//q) is also referred to

as the ‘distance’ between the distributions p and q; however, it is not a true

distance between distributions since it is not symmetric and does not satisfy

the triangle inequality.](https://image.slidesharecdn.com/whatisinformation-230307014433-911d5d68/75/What_is_Information-pdf-30-2048.jpg)
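The conventions in note 12 and the lack of symmetry of D(p//q) can be illustrated with a short sketch. This is not part of the original text: it assumes the standard definition of relative entropy, the sum over x of p(x) log p(x)/q(x), with logarithms in base 2, and the function name `relative_entropy` is hypothetical.

```python
import math


def relative_entropy(p, q):
    """Return D(p//q) in bits for two distributions over the same outcomes."""
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue  # convention: 0 log 0/q = 0
        if qx == 0:
            return math.inf  # convention: p log p/0 = infinity
        total += px * math.log2(px / qx)
    return total


p = [0.5, 0.5]
q = [0.9, 0.1]
d_pq = relative_entropy(p, q)
d_qp = relative_entropy(q, p)

# A case where one direction is finite and the other is infinite.
point_mass = [1.0, 0.0]
uniform = [0.5, 0.5]
d_finite = relative_entropy(point_mass, uniform)
d_infinite = relative_entropy(uniform, point_mass)
```

With these illustrative distributions the two directions give different values, and for the point-mass pair one direction is finite while the other is infinite, which is why D(p//q) cannot be a true distance.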