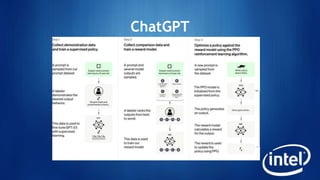

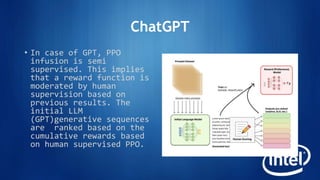

The webinar discusses ChatGPT's training methodology, which employs reinforcement learning from human feedback and supervised fine-tuning from human AI trainers. It highlights the technical aspects of the underlying models, including the autoregressive nature of GPT-3 and specific variants of the Proximal Policy Optimization (PPO) algorithm. The training utilizes a reward function to optimize responses while preventing significant deviations from initial outputs.