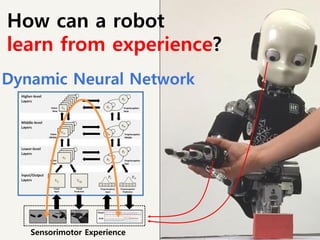

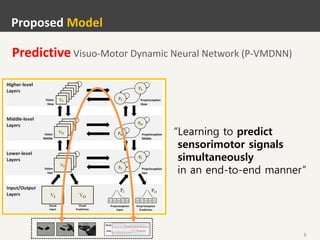

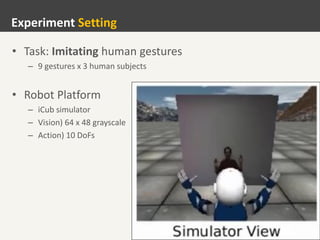

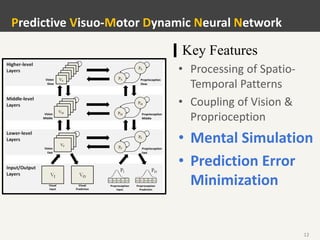

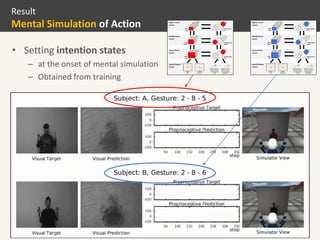

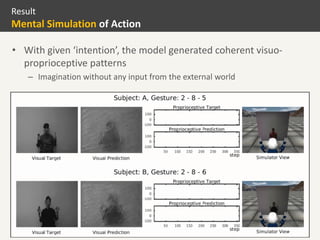

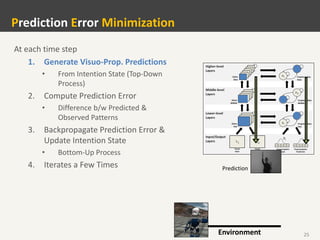

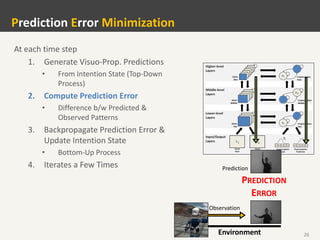

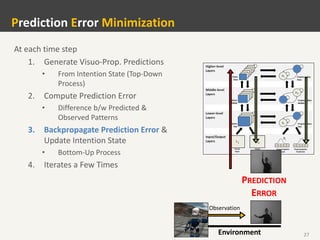

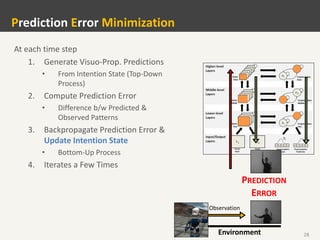

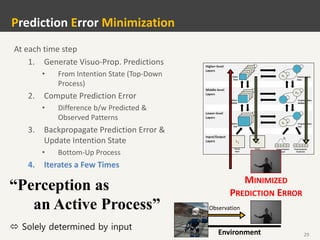

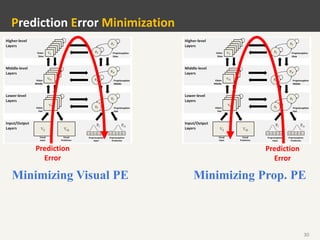

1. The document presents a predictive visuo-motor dynamic neural network model that lets a robot learn gestures from human demonstrations through predictive coding.

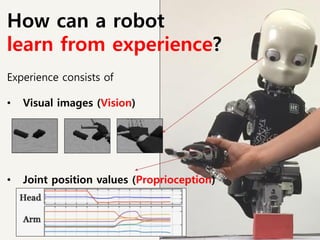

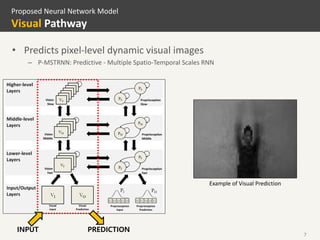

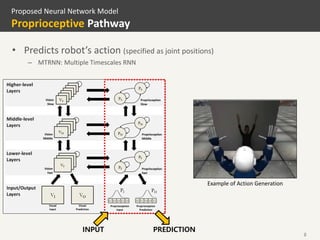

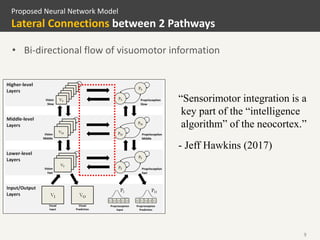

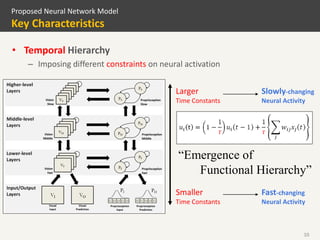

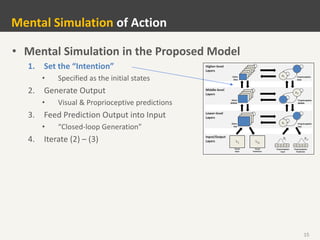

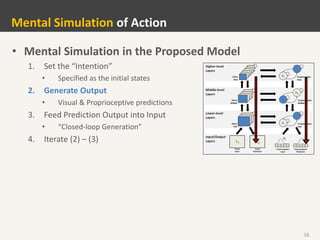

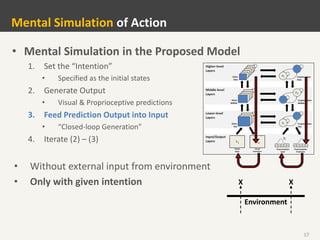

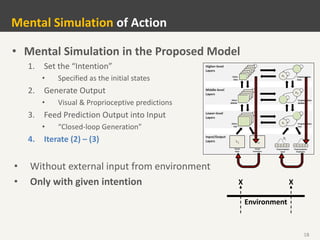

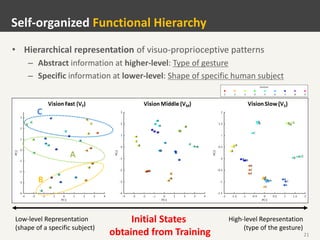

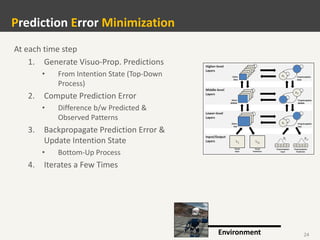

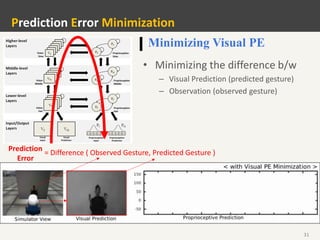

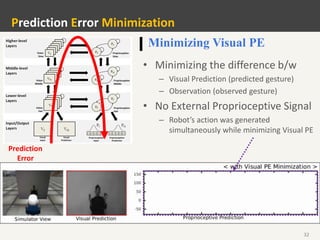

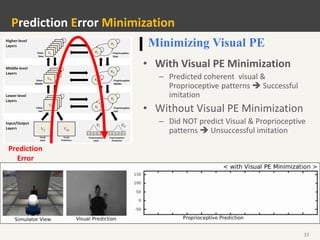

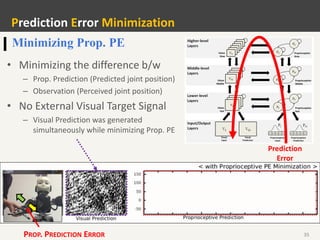

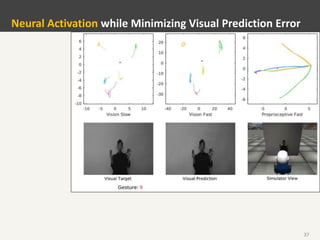

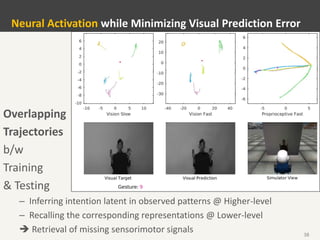

2. The model uses recurrent neural networks to predict pixel-level visual images and robot joint positions. It incorporates lateral connections between visual and proprioceptive pathways to integrate sensorimotor information.

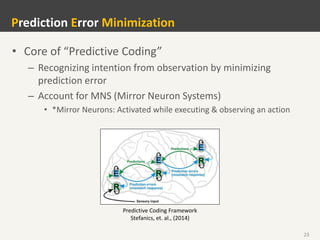

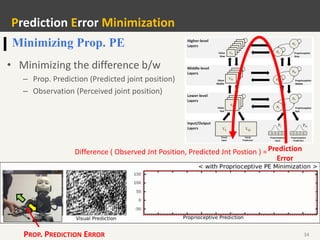

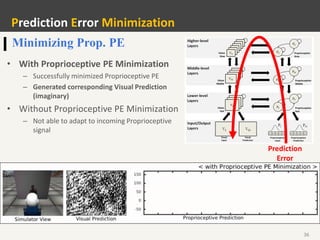

3. By minimizing the error between predicted and observed sensorimotor signals, the model enables the robot to mentally simulate actions from intentions, recognize intentions from observations (a function comparable to that of mirror neurons), and successfully imitate human gestures.
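
The prediction-error idea in the points above can be illustrated with a deliberately small sketch. It is not the model from the document: the recurrent networks are replaced by fixed linear read-outs from a shared hidden state, the lateral connections are omitted, and every name (`predict`, `prediction_error`, `infer_intention`, `W_vis`, `W_prop`) is hypothetical. It only shows the two directions of use: generating predicted visual and joint signals from an intention vector ("mental simulation"), and recovering an intention from observed signals by gradient descent on the prediction error.

```python
import numpy as np


def predict(intention, W_vis, W_prop):
    """Map an intention vector to predicted visual and joint-position signals.

    Toy stand-in for a generative model: one shared hidden state feeds
    two linear read-outs (vision and proprioception).
    """
    h = np.tanh(intention)
    return W_vis @ h, W_prop @ h


def prediction_error(intention, W_vis, W_prop, vis_obs, prop_obs):
    """Half the summed squared error between predicted and observed signals."""
    vis_pred, prop_pred = predict(intention, W_vis, W_prop)
    return 0.5 * (np.sum((vis_pred - vis_obs) ** 2)
                  + np.sum((prop_pred - prop_obs) ** 2))


def infer_intention(W_vis, W_prop, vis_obs, prop_obs, steps=500, lr=0.05):
    """Recover an intention by gradient descent on the prediction error.

    The weights stay fixed; only the intention vector is updated.
    """
    z = np.zeros(W_vis.shape[1])
    for _ in range(steps):
        h = np.tanh(z)
        e_vis = W_vis @ h - vis_obs      # visual prediction error
        e_prop = W_prop @ h - prop_obs   # proprioceptive prediction error
        grad_h = W_vis.T @ e_vis + W_prop.T @ e_prop
        z -= lr * grad_h * (1.0 - h ** 2)  # chain rule through tanh
    return z


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Assumed sizes: 16 "pixels", 4 joints, 3 intention units.
    W_vis = rng.normal(scale=0.3, size=(16, 3))
    W_prop = rng.normal(scale=0.3, size=(4, 3))

    # "Mental simulation": generate signals from a known intention.
    true_intention = np.array([0.5, -0.3, 0.8])
    vis_obs, prop_obs = predict(true_intention, W_vis, W_prop)

    # "Recognition": start from a neutral intention and reduce the error.
    before = prediction_error(np.zeros(3), W_vis, W_prop, vis_obs, prop_obs)
    z_hat = infer_intention(W_vis, W_prop, vis_obs, prop_obs)
    after = prediction_error(z_hat, W_vis, W_prop, vis_obs, prop_obs)
    print("error before:", before, "error after:", after)
```

In this sketch imitation would amount to running `predict` on the inferred intention and sending the predicted joint positions to the robot; the model described above does this over time with recurrent dynamics rather than a single static mapping.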