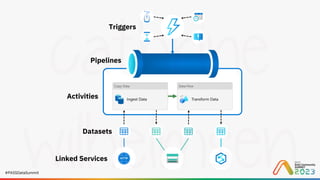

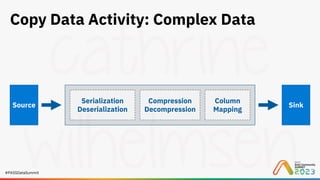

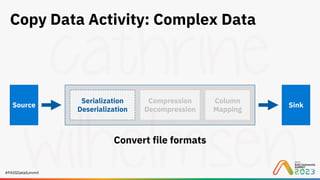

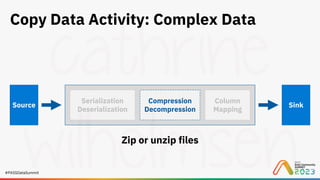

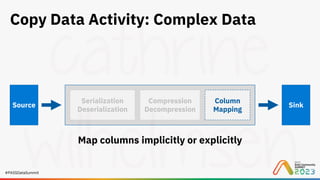

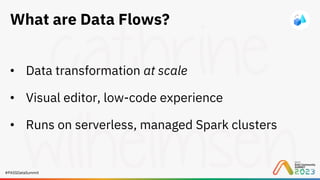

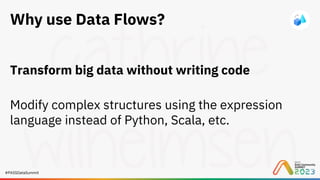

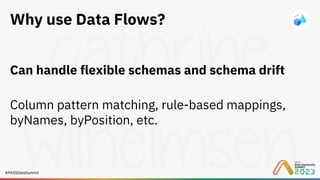

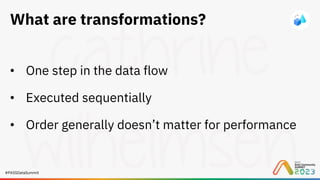

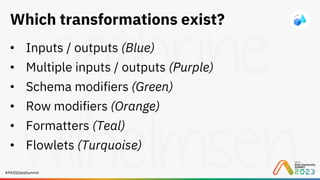

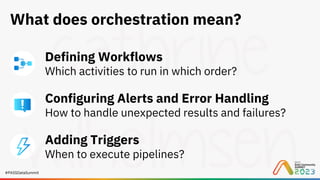

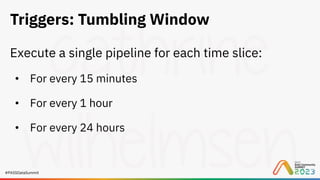

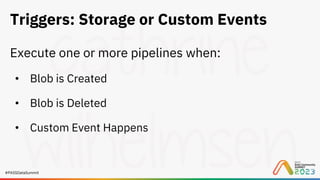

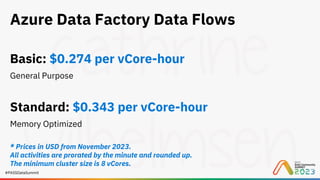

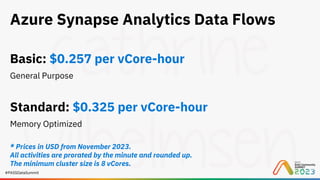

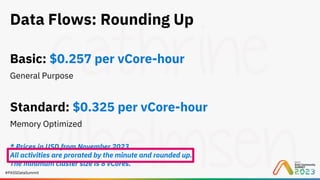

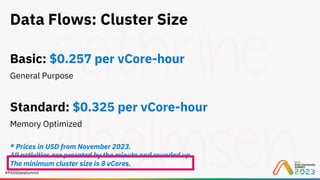

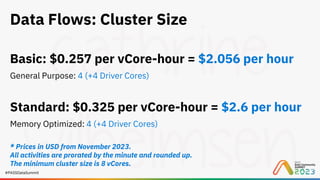

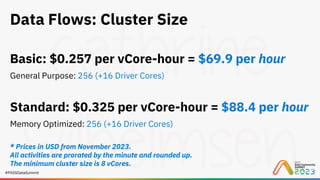

The document discusses visual data transformation in Azure Data Factory and Azure Synapse Analytics, highlighting data flows as a low-code tool for manipulating large volumes of data. It covers the capabilities, use cases, orchestration, and pricing model of data flows, and compares them to Power Query. The session aims to simplify data processing for users without extensive programming knowledge, and includes demos and lessons learned.