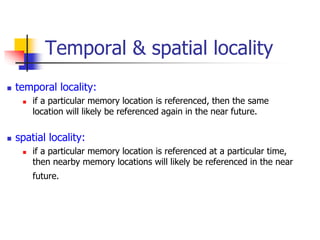

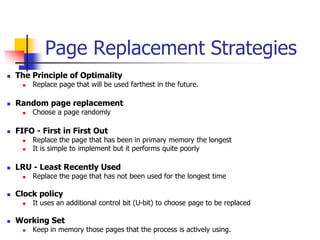

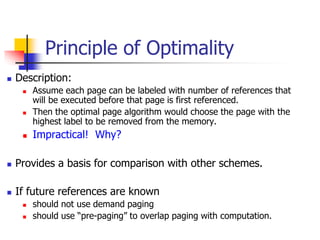

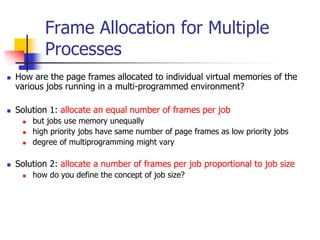

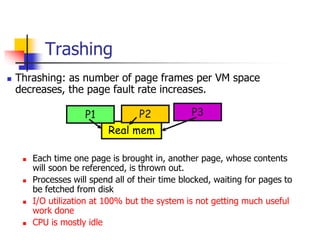

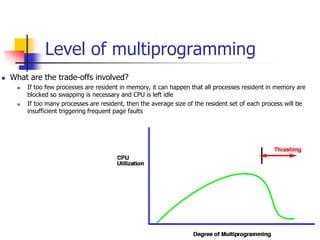

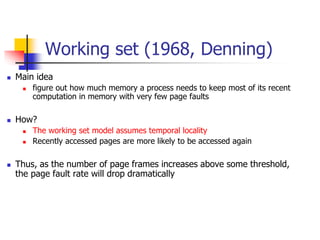

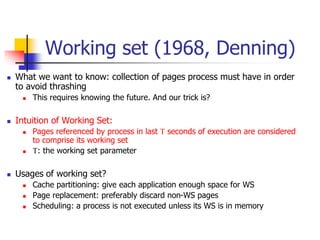

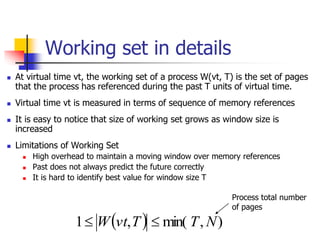

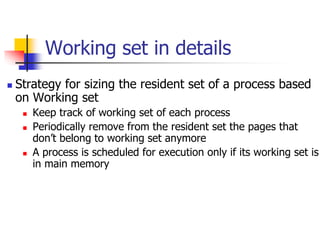

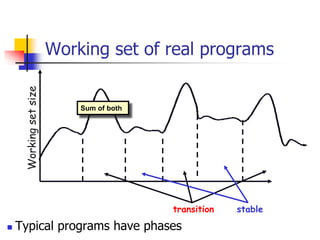

The document discusses virtual memory management, including logical versus physical addresses, page faults, and the role of resident sets when several processes share memory. It describes page replacement strategies such as optimal (OPT), least recently used (LRU), and first-in, first-out (FIFO), and examines how page size and frame allocation affect performance and can lead to thrashing. Finally, it introduces the working set model, which reduces page faults by keeping each process's recently referenced pages in memory, and outlines the model's applications and limitations.
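The following Python sketch illustrates the replacement strategies and the working set named above. It counts page faults for a single process with a fixed number of frames under pure demand paging. The function names (`fifo_faults`, `lru_faults`, `opt_faults`, `working_set`) and the window parameter `delta` are hypothetical. The sample reference string is a common textbook example, not one taken from the slides.

```python
from collections import OrderedDict, deque

# Hypothetical helpers: assume one process, a fixed frame count,
# and pure demand paging (a page is loaded only when it faults).


def fifo_faults(refs, frames):
    """FIFO: evict the page that was loaded earliest."""
    resident = set()
    queue = deque()  # load order, oldest page on the left
    faults = 0
    for page in refs:
        if page in resident:
            continue  # hit: FIFO order is not updated on access
        faults += 1
        if len(resident) == frames:
            resident.remove(queue.popleft())
        resident.add(page)
        queue.append(page)
    return faults


def lru_faults(refs, frames):
    """LRU: evict the page that has gone unused for the longest time."""
    resident = OrderedDict()  # least recently used page first
    faults = 0
    for page in refs:
        if page in resident:
            resident.move_to_end(page)  # hit: mark as most recently used
            continue
        faults += 1
        if len(resident) == frames:
            resident.popitem(last=False)  # evict the least recently used page
        resident[page] = True
    return faults


def opt_faults(refs, frames):
    """OPT: evict the page whose next use lies farthest in the future."""
    refs = list(refs)
    resident = set()
    faults = 0
    for i, page in enumerate(refs):
        if page in resident:
            continue
        faults += 1
        if len(resident) == frames:
            def next_use(p):
                # Index of p's next reference; pages never used again rank last.
                try:
                    return refs.index(p, i + 1)
                except ValueError:
                    return float("inf")
            resident.remove(max(resident, key=next_use))
        resident.add(page)
    return faults


def working_set(refs, t, delta):
    """Pages referenced in the window of the last `delta` references ending at time t."""
    return set(refs[max(0, t - delta + 1): t + 1])


if __name__ == "__main__":
    refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
    for name, policy in [("FIFO", fifo_faults), ("LRU", lru_faults), ("OPT", opt_faults)]:
        print(name, policy(refs, 3))  # FIFO 15, LRU 12, OPT 9
    print(sorted(working_set(refs, 9, 4)))  # [0, 2, 3, 4]
```

OPT needs the future reference string, so it cannot be implemented directly. In practice it serves as a lower bound against which FIFO and LRU are compared. With three frames, the sketch reports 15 faults for FIFO, 12 for LRU, and 9 for OPT on the sample string.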