Validation: gaining confidence in simulation, by Darre Odeleye CEng MIMechE

This document summarizes a presentation on gaining confidence in simulation models through validation. It discusses:

- The importance of distinguishing between verification and validation of simulation components, with validation determining how accurately a model represents reality.
- Validation is important for generating credibility for decision makers from a program office perspective.
- A variety of techniques can be used to validate models, including comparing simulation results to experimental data from physical tests and controlled test environments.
- Sensitivity analysis and parametric studies can help identify influential factors and validate input data.
- Comparing components of the simulation process and conducting validation studies increases confidence in simulation for improving quality, reducing costs, and accelerating delivery timelines.


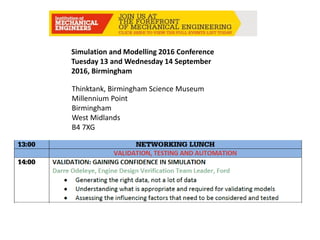

- 1. Simulation and Modelling 2016 Conference, Tuesday 13 and Wednesday 14 September 2016, Birmingham Thinktank (Birmingham Science Museum), Millennium Point, Birmingham, West Midlands B4 7XG

- 2. VALIDATION: GAINING CONFIDENCE IN SIMULATION. A Program Office perspective

- 3. A distinction must be made between the verification and the validation of the key components that constitute a simulation: • Verification: does the code implement the physics of the problem correctly, and does the generated solution compare favourably with exact analytical results? • Validation: does the actual simulation agree with physical reality, and is the level of uncertainty and error acceptable? From a Program Office perspective, validation assessment generates credibility for decision makers.
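As a minimal illustration of the verification question (does the solution compare favourably with an exact analytical result?), the sketch below integrates a hypothetical test problem, exponential decay dy/dt = -k·y, with the explicit Euler method and compares it with the exact solution y0·exp(-k·t). The test problem, function names and step counts are assumptions chosen for illustration, not taken from the presentation. Verification is passed when the error shrinks at the method's theoretical rate (first order for explicit Euler) as the time step is refined.

```python
import math
from typing import List, Sequence, Tuple


def euler_decay(k: float, y0: float, t_end: float, n_steps: int) -> float:
    """Integrate dy/dt = -k*y from t = 0 to t_end with explicit (forward) Euler."""
    dt = t_end / n_steps
    y = y0
    for _ in range(n_steps):
        y += dt * (-k * y)
    return y


def exact_decay(k: float, y0: float, t: float) -> float:
    """Exact analytical solution of dy/dt = -k*y."""
    return y0 * math.exp(-k * t)


def verify(k: float = 1.0, y0: float = 1.0, t_end: float = 1.0,
           steps: Sequence[int] = (10, 20, 40, 80)) -> Tuple[List[float], List[float]]:
    """Return absolute errors at t_end and the observed order of accuracy
    between successive refinements of the time step."""
    exact = exact_decay(k, y0, t_end)
    errors = [abs(euler_decay(k, y0, t_end, n) - exact) for n in steps]
    # Observed order p from e_coarse / e_fine = (dt_coarse / dt_fine) ** p
    orders = [
        math.log(errors[i] / errors[i + 1]) / math.log(steps[i + 1] / steps[i])
        for i in range(len(steps) - 1)
    ]
    return errors, orders


if __name__ == "__main__":
    errors, orders = verify()
    print("errors:", [f"{e:.2e}" for e in errors])
    print("observed orders:", [f"{p:.3f}" for p in orders])
```

With the default settings the observed orders come out close to 1, matching the theoretical order of explicit Euler; an observed order well below the theoretical one would point to an implementation error.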

- 4. Simulation verification may include analysis of: • Discretization strategy, • Application of boundary conditions, • Grid convergence criteria, • Handling of non-linearity, • Iterative convergence, • Numerical stability (relaxation factors, etc.) D.Odeleye CEng MIMechE 4
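Grid convergence can be checked even when no analytical solution exists. The sketch below follows Roache's grid convergence index (GCI) approach for three systematically refined grids with a constant refinement ratio r: it estimates the observed order of accuracy p, a Richardson-extrapolated value, and the fine-grid GCI using the commonly quoted safety factor of 1.25 for three-grid studies. The function and parameter names are hypothetical, and the values in the usage note are manufactured so that the exact answer is known.

```python
import math
from typing import Tuple


def grid_convergence(f_fine: float, f_medium: float, f_coarse: float,
                     r: float, safety_factor: float = 1.25) -> Tuple[float, float, float]:
    """Three-grid convergence study with constant refinement ratio r > 1.

    Returns (observed order p, Richardson-extrapolated value,
    fine-grid GCI as a relative fraction of f_fine).
    """
    eps_21 = f_medium - f_fine    # medium minus fine
    eps_32 = f_coarse - f_medium  # coarse minus medium
    if eps_21 == 0.0 or eps_32 / eps_21 <= 0.0:
        # Zero or sign-changing differences: oscillatory or already converged,
        # so the observed order cannot be estimated this way.
        raise ValueError("solutions are not monotonically convergent")
    p = math.log(eps_32 / eps_21) / math.log(r)
    if p <= 0.0:
        raise ValueError("differences grow under refinement; solution is diverging")
    f_extrapolated = f_fine + (f_fine - f_medium) / (r ** p - 1.0)
    gci_fine = safety_factor * abs(eps_21 / f_fine) / (r ** p - 1.0)
    return p, f_extrapolated, gci_fine


if __name__ == "__main__":
    # Manufactured data: f(h) = 1 + 0.1 * h**2 on grids h = 1, 2, 4
    print(grid_convergence(1.1, 1.4, 2.6, r=2.0))
```

For this manufactured quantity the study recovers p ≈ 2, an extrapolated value of ≈ 1.0 and a fine-grid GCI of about 0.114 (11.4%). With real simulation output, an observed order far from the scheme's formal order is itself a verification warning.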

- 5. – Validation is the process of determining the degree to which a model is an accurate representation of the real world from the perspective of the intended use of the model. – High-confidence simulations offer the promise of developing higher-quality products with fewer resources in less time.
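One way to make "the degree to which a model is an accurate representation of the real world" quantitative is the comparison-error approach of the ASME V&V 20 standard, sketched here under simplifying assumptions: the comparison error is E = S − D (simulation result minus experimental data), and the validation standard uncertainty combines the numerical, input and experimental standard uncertainties as u_val = sqrt(u_num² + u_input² + u_D²). The intended-use tolerance check, the coverage factor k and all names are illustrative assumptions, not part of the presentation.

```python
import math
from dataclasses import dataclass


@dataclass
class ValidationComparison:
    comparison_error: float  # E = S - D
    u_val: float             # combined validation standard uncertainty

    def error_resolved(self, k: float = 2.0) -> bool:
        """True if |E| exceeds the expanded validation uncertainty k * u_val,
        i.e. the model error stands out from the uncertainty 'noise'."""
        return abs(self.comparison_error) > k * self.u_val

    def acceptable(self, tolerance: float, k: float = 2.0) -> bool:
        """Hypothetical intended-use check: the model error is bounded by
        roughly |E| + k * u_val; accept if that bound is within tolerance."""
        return abs(self.comparison_error) + k * self.u_val <= tolerance


def compare(S: float, D: float, u_num: float, u_input: float, u_D: float) -> ValidationComparison:
    """Build the comparison from a simulation result S, a measurement D and
    the three standard uncertainties (numerical, input, experimental)."""
    E = S - D
    u_val = math.sqrt(u_num ** 2 + u_input ** 2 + u_D ** 2)
    return ValidationComparison(E, u_val)


if __name__ == "__main__":
    # Illustrative values only
    c = compare(S=105.0, D=100.0, u_num=1.0, u_input=2.0, u_D=2.0)
    print(c, c.error_resolved(), c.acceptable(tolerance=12.0))
```

With these illustrative numbers E = 5 and u_val = 3, so the error is not resolved at k = 2 (5 < 6), and the model would pass a 12-unit tolerance but fail a 10-unit one. The point is that "acceptable" only has meaning once the intended-use tolerance is stated.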

- 6. • Generating the right data, not a lot of data • Understanding what is appropriate and required for validating models • Assessing the influencing factors that need to be considered and tested

- 9. • What does the Program need to know, and when? – This defines the problem and which CAE methods can be used. • Lean product creation concepts require: – compatibility with Quality, Cost & Delivery before design maturity, – front loading, using all design options before commitment, – no late changes.


- 11. Correlate and compare the components of simulation in order to validate models: – invest upfront resources to generate the most accurate inputs for simulation, – compare results from different simulation tools, – compare real-world measurements with simulated results, – compare results from a “controlled” test environment (e.g. a wind tunnel) with simulation results.
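For comparisons of this kind (tool versus tool, or simulation versus real-world or wind-tunnel measurements), a few simple agreement metrics over matched data points are a reasonable starting point. The sketch below, whose names are hypothetical, computes the root-mean-square error, the maximum absolute error and the Pearson correlation coefficient. Reporting more than one metric matters, because a constant offset still yields a perfect correlation.

```python
import math
from typing import Dict, Sequence


def compare_series(reference: Sequence[float], simulated: Sequence[float]) -> Dict[str, float]:
    """Agreement metrics between matched reference (e.g. measured) and simulated values."""
    if len(reference) != len(simulated) or len(reference) < 2:
        raise ValueError("need two equally long series with at least two points")
    n = len(reference)
    residuals = [s - r for r, s in zip(reference, simulated)]
    rmse = math.sqrt(sum(e * e for e in residuals) / n)
    max_abs_error = max(abs(e) for e in residuals)
    mean_r = sum(reference) / n
    mean_s = sum(simulated) / n
    cov = sum((r - mean_r) * (s - mean_s) for r, s in zip(reference, simulated))
    var_r = sum((r - mean_r) ** 2 for r in reference)
    var_s = sum((s - mean_s) ** 2 for s in simulated)
    if var_r == 0.0 or var_s == 0.0:
        raise ValueError("correlation undefined for a constant series")
    pearson_r = cov / math.sqrt(var_r * var_s)
    return {"rmse": rmse, "max_abs_error": max_abs_error, "pearson_r": pearson_r}


if __name__ == "__main__":
    print(compare_series([1.0, 2.0, 3.0, 4.0], [2.0, 3.0, 4.0, 5.0]))
```

In the usage line the simulated series is offset by exactly 1.0: the RMSE and maximum error are both 1.0, yet the correlation is 1.0, a case where correlation alone would overstate agreement.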

- 12. Extend legacy/baseline physical tests to generate comprehensive model input parameters: – Typical durability testing focuses on “has it broken yet?”, providing little information on optimisation or distance from failure modes. – Testing to failure provides quantitative data (e.g. time to failure, cycles to failure) and reveals the damage mode; this gives insight into the distance from failure and into performance degradation, which supports better predictive simulations.
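As one example of how test-to-failure data can feed predictive models, the sketch below fits a two-parameter Weibull distribution to cycles-to-failure data by median-rank regression, using Bernard's approximation F_i = (i − 0.3)/(n + 0.4), and then estimates a B10 life (the life by which 10% of parts are expected to have failed). This is a common reliability technique, not necessarily the method used in the presentation; it assumes every unit was run to failure (no suspensions), and the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def weibull_median_rank_fit(cycles_to_failure: Sequence[float]) -> Tuple[float, float]:
    """Fit a two-parameter Weibull (shape beta, scale eta) to complete
    (all-failed) life data by median-rank regression of y on x."""
    t = sorted(cycles_to_failure)
    n = len(t)
    if n < 2 or t[0] <= 0.0:
        raise ValueError("need at least two positive failure times")
    xs, ys = [], []
    for i, ti in enumerate(t, start=1):
        f = (i - 0.3) / (n + 0.4)               # Bernard's median-rank approximation
        xs.append(math.log(ti))                  # x = ln(t)
        ys.append(math.log(-math.log(1.0 - f)))  # y = ln(-ln(1 - F))
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0.0:
        raise ValueError("all failure times are identical; slope undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    beta = sxy / sxx                    # slope of the Weibull plot = shape
    intercept = mean_y - beta * mean_x  # intercept = -beta * ln(eta)
    eta = math.exp(-intercept / beta)
    return beta, eta


def b_life(beta: float, eta: float, fraction_failed: float = 0.10) -> float:
    """Life by which the given fraction of parts is expected to fail (B10 by default)."""
    return eta * (-math.log(1.0 - fraction_failed)) ** (1.0 / beta)
```

A fitted shape parameter beta > 1 indicates wear-out behaviour, and the B10 life gives a quantitative distance-from-failure figure that a simple pass/fail result cannot.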


- 14. Trade-off between different test schemes (bogey testing, testing to failure, degradation testing) and their implications for Delivery, Cost and Quality: • Testing to failure / degradation testing: long test duration that impacts engineering sign-off timing (Delivery); high cost (Cost); comprehensive data (Quality). • Bogey testing: shortest test duration, most likely to meet program timing (Delivery); lowest relative cost (Cost); test results cannot be used to predict performance with sufficient confidence (Quality).
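The limitation of bogey testing can be quantified with the classical success-run relationship, sketched below: if n parts all pass a bogey test with zero failures, the reliability demonstrated at that bogey, at confidence C, is only the lower bound R = (1 − C)^(1/n). The function names are hypothetical. The result says nothing about how far the parts were from failure, which is the information testing to failure provides.

```python
import math


def demonstrated_reliability(n_passed: int, confidence: float) -> float:
    """Lower-bound reliability demonstrated when n_passed parts all survive
    the bogey with zero failures (success-run theorem): R = (1 - C) ** (1 / n)."""
    if n_passed < 1 or not 0.0 < confidence < 1.0:
        raise ValueError("need n_passed >= 1 and 0 < confidence < 1")
    return (1.0 - confidence) ** (1.0 / n_passed)


def samples_required(target_reliability: float, confidence: float) -> int:
    """Smallest zero-failure sample size that demonstrates
    target_reliability at the given confidence."""
    if not 0.0 < target_reliability < 1.0 or not 0.0 < confidence < 1.0:
        raise ValueError("reliability and confidence must lie strictly between 0 and 1")
    return math.ceil(math.log(1.0 - confidence) / math.log(target_reliability))


if __name__ == "__main__":
    print(samples_required(0.90, 0.90), samples_required(0.95, 0.90))
```

For example, `samples_required(0.90, 0.90)` returns 22, the familiar "R90C90" sample size, and raising the target to 95% reliability at 90% confidence requires 45 parts; sample counts climb quickly while the information gained per part stays binary.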

- 15. [Figure: bogey test vs. test to failure]

- 16. [Figure: predicted life of crankshaft #1 and of revised crankshaft #2 at 125 PS and at 150 PS]

- 17. The original design is shown in purple; the revised design, shown in green, has an increased width of web 6.

- 18. • Based on sampling at the extremes of either product strength or the level of induced key stresses, i.e. selection of the weakest parts, worst clearances, etc. • If input data is based on test data from the weakest part, all stronger parts would also pass the test (for a given test cycle).



- 27. Conduct studies to identify sources of variation in the required simulation output: which input parameters have what effect on the required results? – Conduct sensitivity analysis of input parameters. – Classify influencing factors and identify main effects. • Validate input data from experimental tests: does it make engineering sense?
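A two-level full factorial study is one simple way to classify influencing factors and identify main effects. The sketch below runs a model at every combination of low (−1) and high (+1) coded levels and reports each factor's main effect (mean response at the high level minus mean response at the low level), ranked by magnitude. The `toy_model` function and the factor names `load`, `clearance` and `temperature` are hypothetical stand-ins for an expensive simulation; in practice each coded level maps to a physical input value, and the number of runs grows as 2^k for k factors.

```python
from itertools import product
from typing import Callable, Dict, Sequence


def main_effects(model: Callable[..., float], factor_names: Sequence[str]) -> Dict[str, float]:
    """Two-level full factorial: run every combination of coded levels -1/+1
    and return each factor's main effect, largest magnitude first."""
    runs = list(product((-1, 1), repeat=len(factor_names)))
    responses = [model(**dict(zip(factor_names, levels))) for levels in runs]
    effects = {}
    for j, name in enumerate(factor_names):
        high = [y for levels, y in zip(runs, responses) if levels[j] == 1]
        low = [y for levels, y in zip(runs, responses) if levels[j] == -1]
        # Main effect = mean response at the high level minus mean at the low level
        effects[name] = sum(high) / len(high) - sum(low) / len(low)
    return dict(sorted(effects.items(), key=lambda item: abs(item[1]), reverse=True))


def toy_model(load: float, clearance: float, temperature: float) -> float:
    """Hypothetical stand-in for a simulation response (coded inputs)."""
    return 10.0 + 3.0 * load - 2.0 * clearance + 0.5 * temperature + 1.0 * load * clearance


if __name__ == "__main__":
    print(main_effects(toy_model, ["load", "clearance", "temperature"]))
```

For the toy model the main effects are load 6.0, clearance −4.0 and temperature 1.0; the load × clearance interaction term does not bias them because the full factorial design is balanced.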


- 30. Simulation components: • Inputs: CAD; mesh generation; space (finite element or finite volume elements) and time (stability constraints) discretization; parameters; boundary conditions. • Solver settings: e.g. mesh refinement vs. solution convergence time; different simulation tools; iterative solver. • Output: simulation solutions, compared with experimental data (controlled noise environment) and real-world data. • Compare components, and conduct sensitivity analysis and parametric studies, to validate simulations.

- 31. Confidence in simulation is gained by demonstrating value added to: – Quality: credible data necessary to make decisions at each stage of product development, and fidelity of predictions over time. – Cost: return on investment versus, e.g., the cost of testing prototypes. – Delivery: are simulations conducted, and are results available, exactly when needed?

- 32. Further considerations and opportunities for increasing confidence: • Faster post-processing, • Use of virtual reality and augmented reality, • Real-time simulation (running simulations on the fly), • Cloud computing, • Artificial intelligence (using techniques such as evolutionary computation, artificial neural networks, fuzzy systems, general machine learning and data mining methods), • Simulating electric vehicles and EV powertrains, • Simulating autonomous vehicle performance.

- 33. • Electric/hybrid vehicles present the next opportunity to use simulation to further compress product development timescales.

- 34. DISCUSS

- 35. The better the validation, the better the prediction, and consequently the more confidence customers have in ongoing and future simulations.