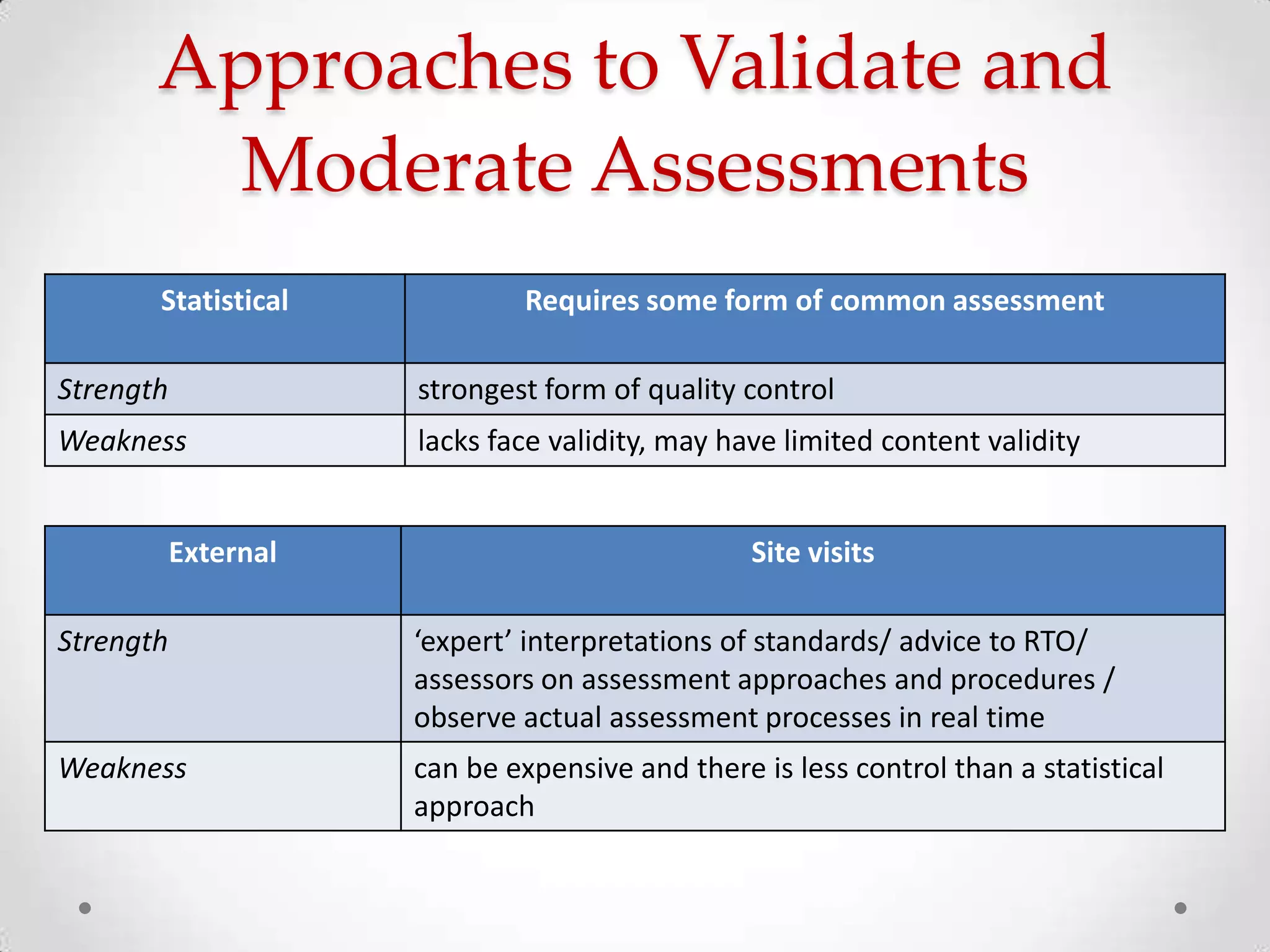

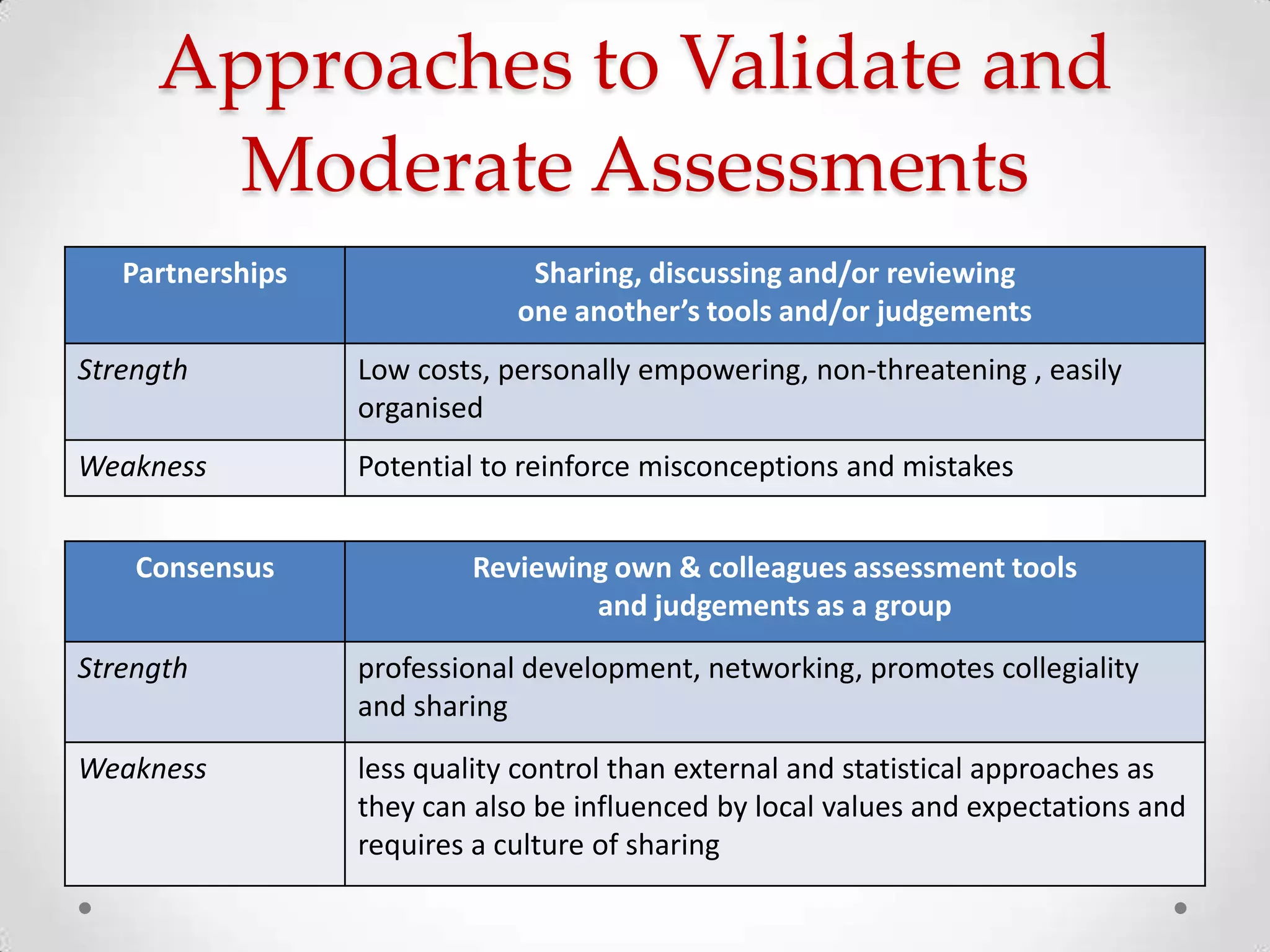

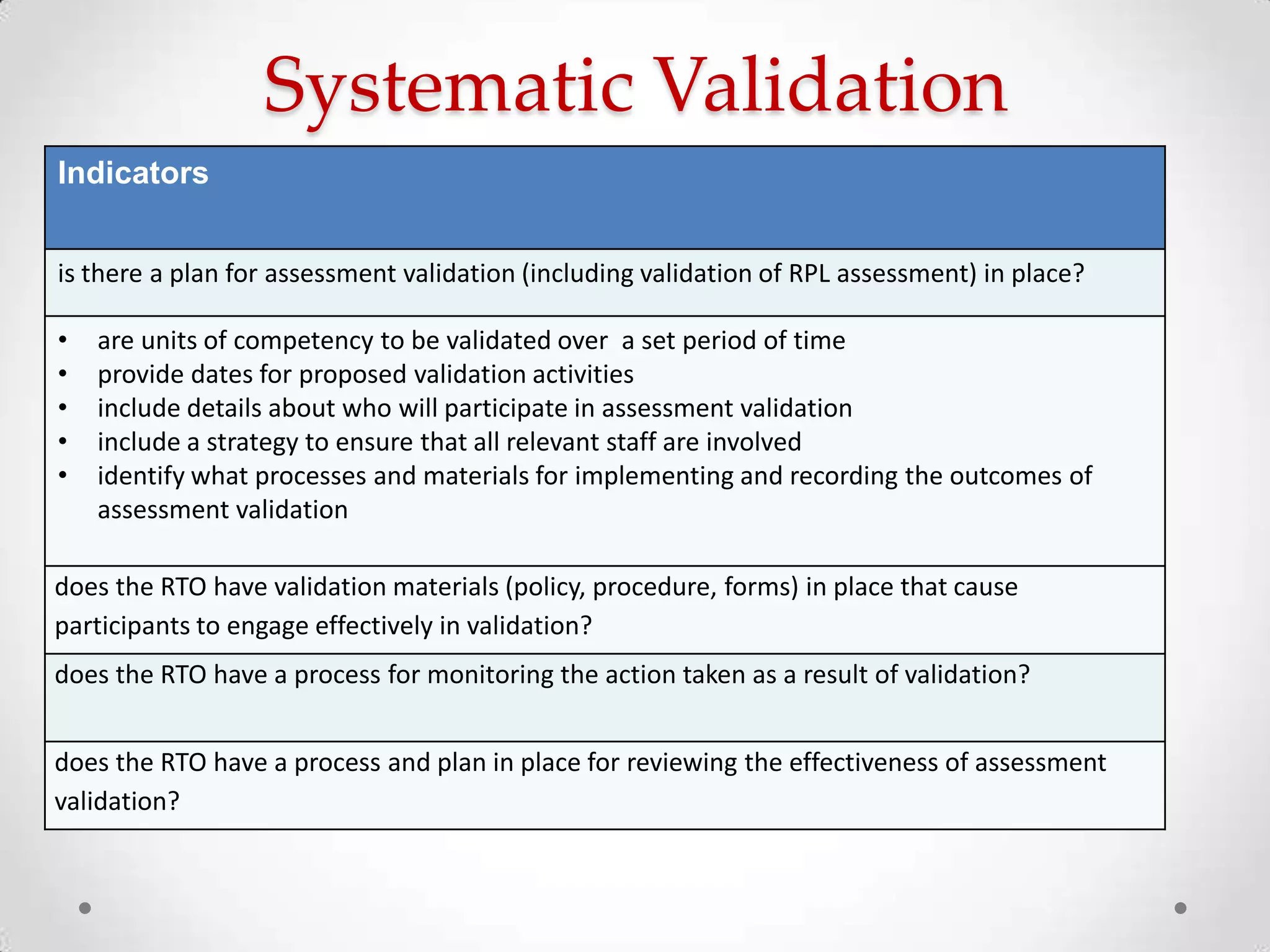

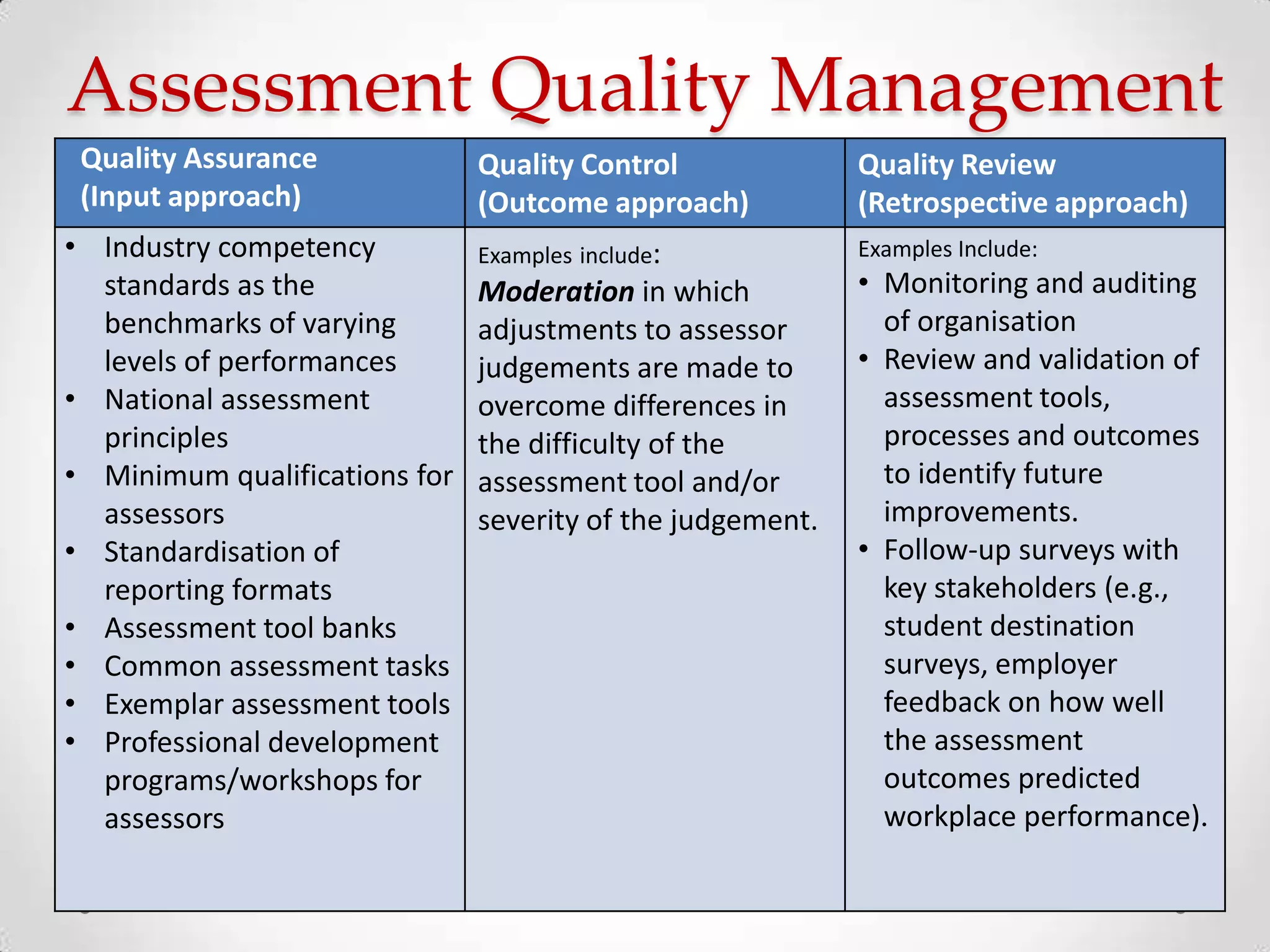

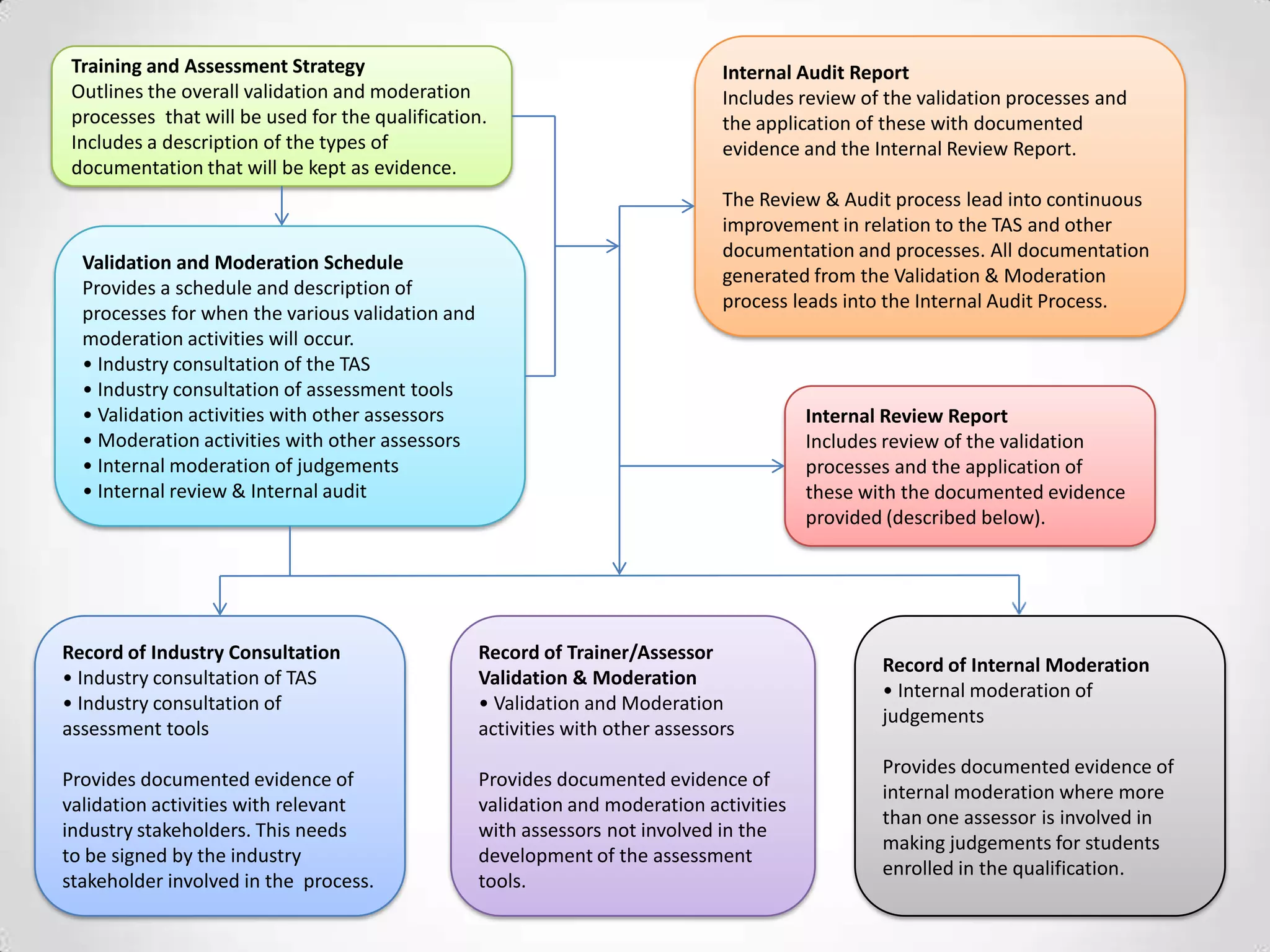

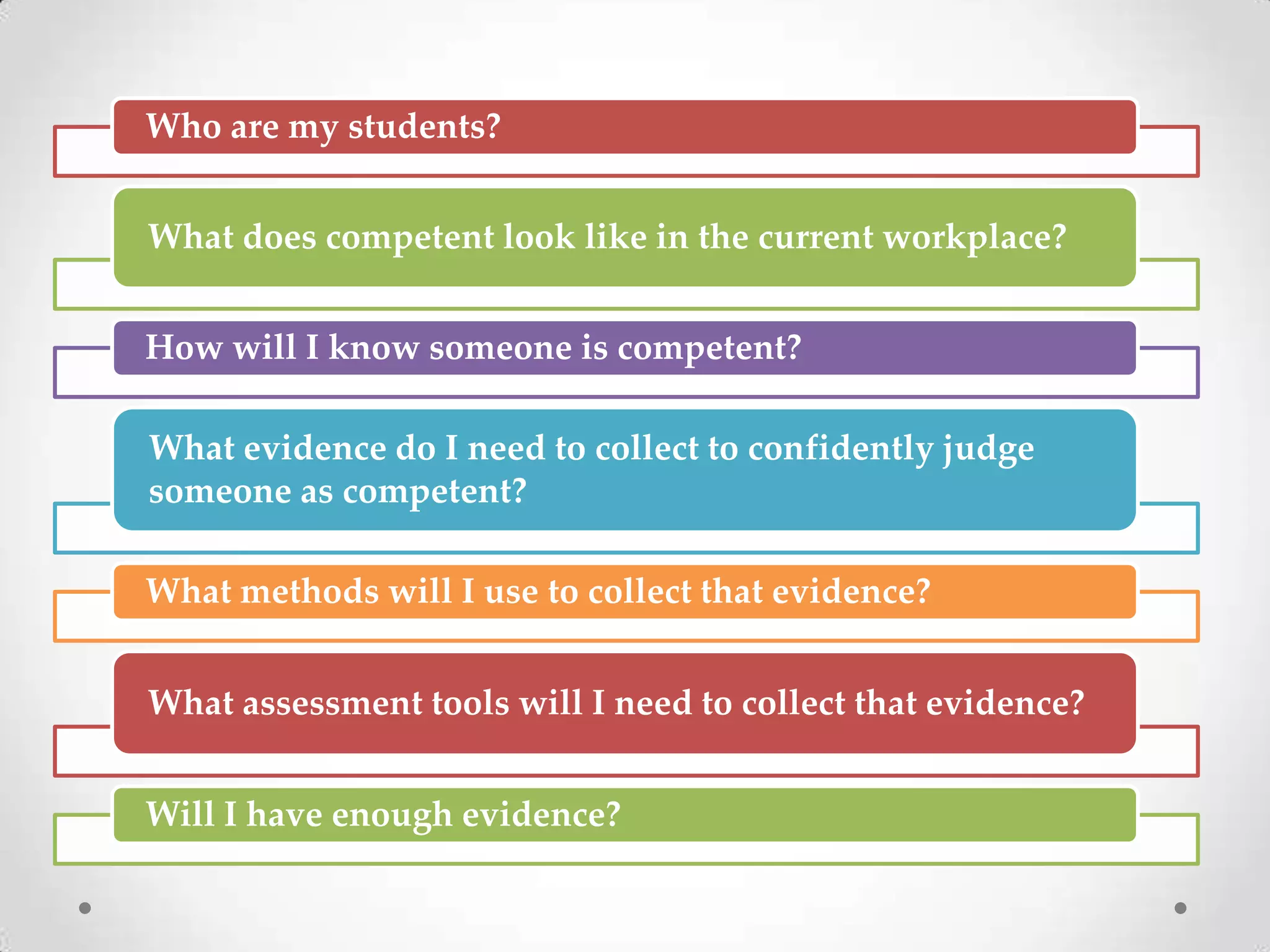

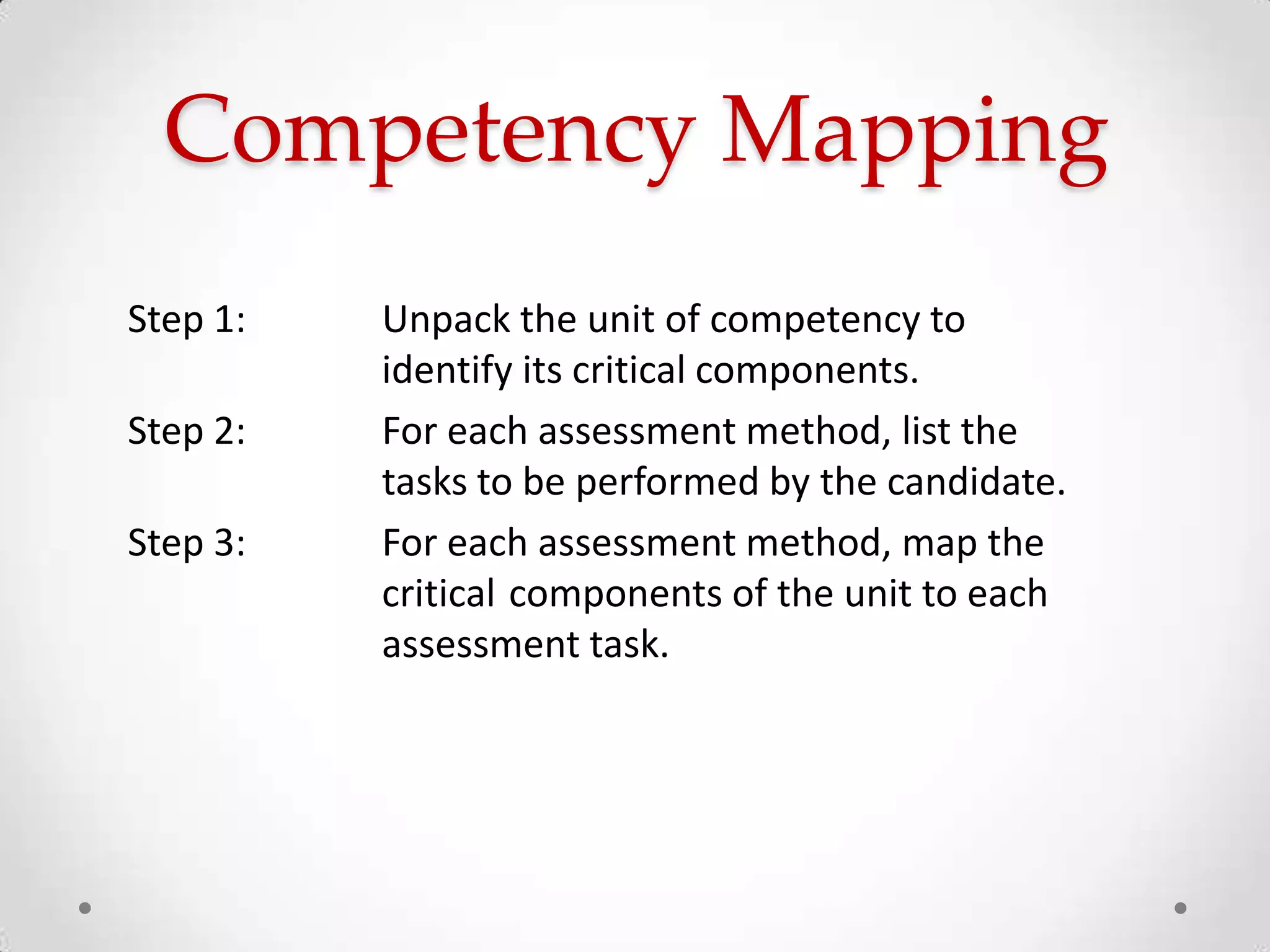

This document describes validation and moderation processes for assessment in vocational education. It distinguishes the two: validation focuses on continuous improvement, while moderation aims to ensure consistent assessment standards. It presents several approaches to both, including statistical analysis, external reviews, partnerships between assessors, and consensus meetings. Outcomes of these processes may include recommendations to improve assessment tools, tasks, and judgements. Ongoing validation and moderation help keep assessment valid and reliable, which is important for quality assurance and compliance with standards.