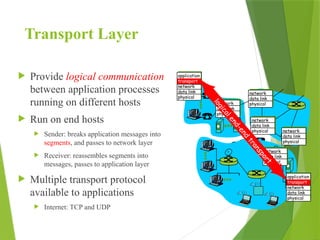

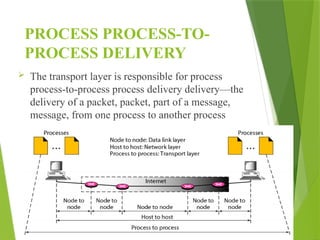

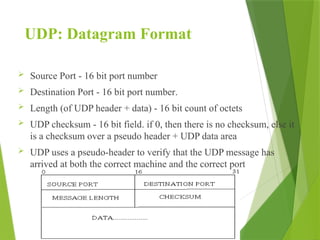

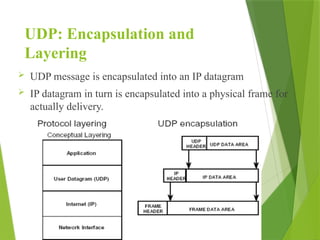

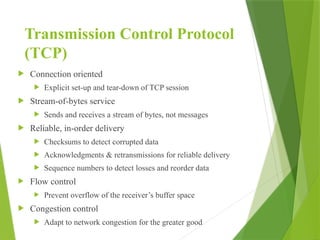

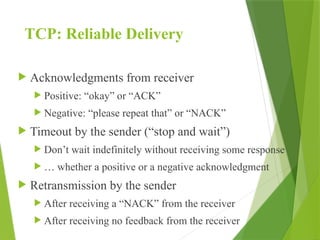

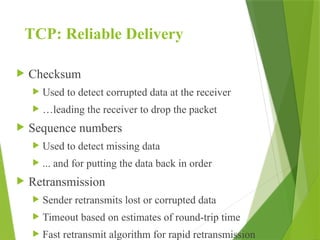

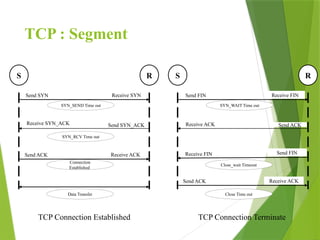

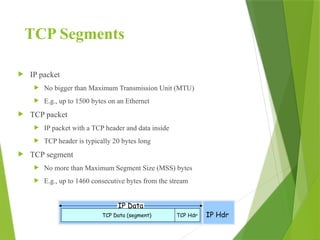

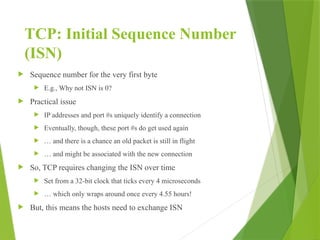

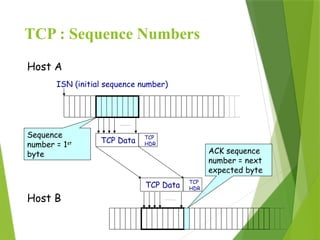

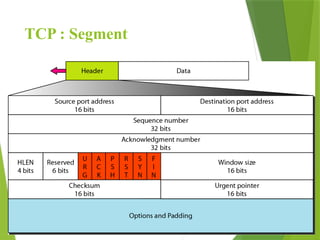

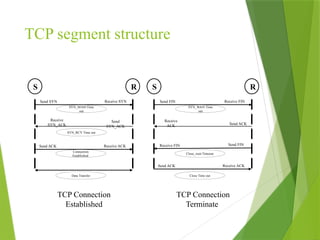

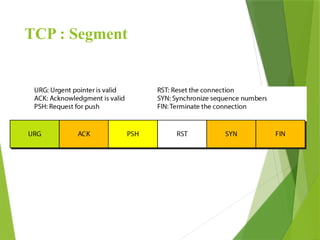

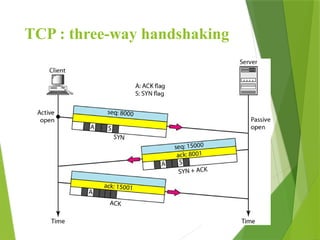

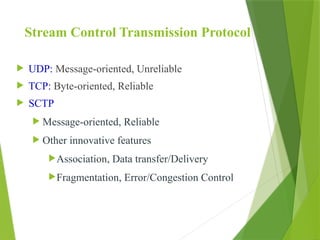

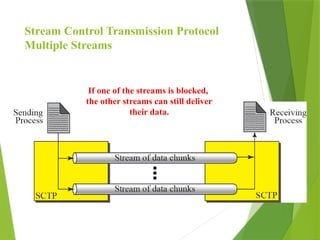

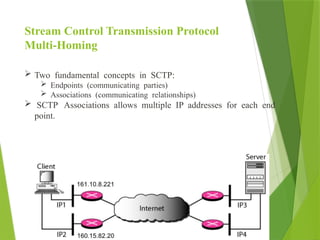

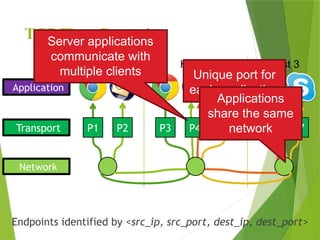

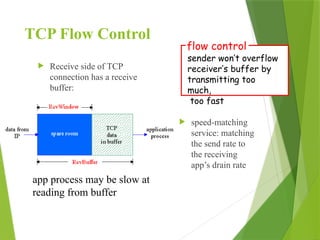

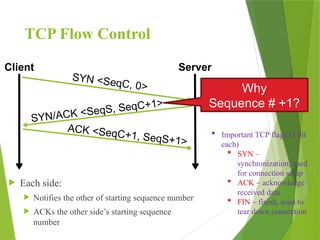

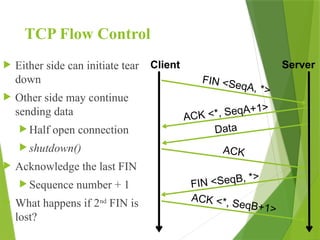

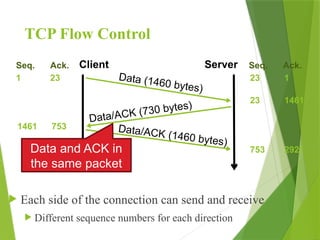

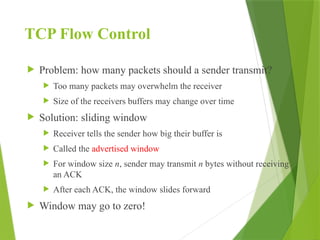

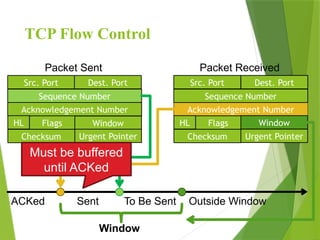

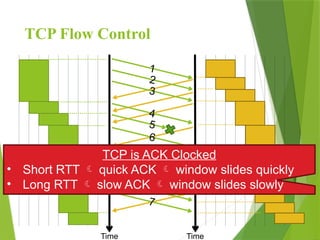

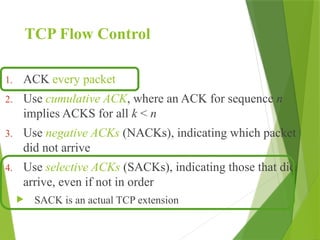

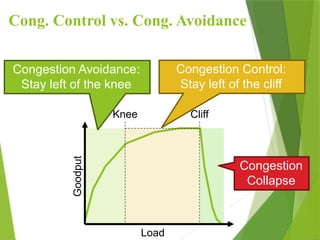

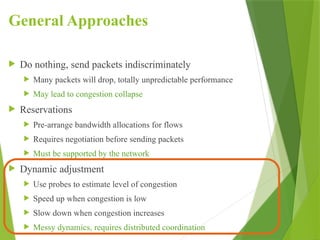

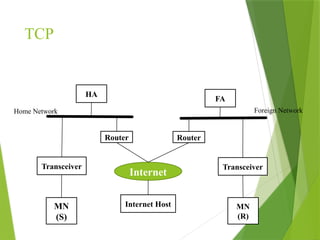

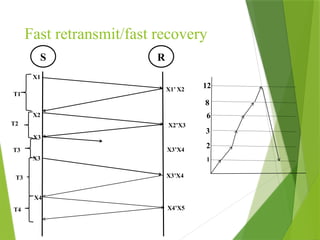

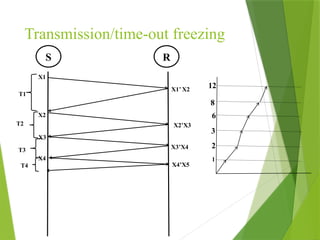

The transport layer provides logical communication between processes running on different hosts. Its two main protocols offer different services. TCP is connection-oriented and provides reliable, error-checked, in-order delivery. UDP is connectionless and offers only best-effort delivery, with no guarantee that segments arrive or arrive in order. The notes also cover flow control, which keeps a sender from overrunning the receiver's buffer, and congestion control, which keeps senders from overloading the network. Both techniques help use the available capacity efficiently.
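To make congestion control more concrete, the sketch below is a deliberately simplified, hypothetical model loosely based on TCP Reno's behavior. It tracks the congestion window (`cwnd`, measured in maximum segment sizes) once per round-trip time. The window doubles during slow start until it reaches the threshold `ssthresh`. After that it grows additively by one segment per RTT. When a loss occurs it is cut in half (multiplicative decrease). The function name `simulate_cwnd`, the per-RTT granularity, and the choice to treat every loss like a fast-retransmit event are all illustrative assumptions. Real TCP stacks count bytes and treat timeouts differently.

```python
from __future__ import annotations


def simulate_cwnd(rounds: int, ssthresh: float, loss_rounds: set[int]) -> list[float]:
    """Hypothetical per-RTT model of a Reno-style congestion window (in MSS).

    Returns the window size in effect at the start of each round.
    Simplifications: slow start is capped exactly at ssthresh, and every
    loss is handled like fast recovery (halve the window), never a timeout.
    """
    cwnd = 1.0  # start with one segment, as in slow start
    history: list[float] = []
    for r in range(rounds):
        history.append(cwnd)
        if r in loss_rounds:
            # Multiplicative decrease: remember half the window as the new
            # threshold and continue from there (no return to 1 MSS).
            ssthresh = max(cwnd / 2, 2.0)
            cwnd = ssthresh
        elif cwnd < ssthresh:
            # Slow start: the window doubles every RTT.
            cwnd = min(cwnd * 2, ssthresh)
        else:
            # Congestion avoidance: additive increase of one MSS per RTT.
            cwnd += 1
    return history


if __name__ == "__main__":
    # A loss in round 6 produces the characteristic AIMD "sawtooth".
    print(simulate_cwnd(10, ssthresh=8.0, loss_rounds={6}))
```

Running it with a loss in round 6 shows the window grow exponentially to 8, then linearly to 11, then drop to 5.5 and begin climbing linearly again. This is the sawtooth pattern that additive-increase/multiplicative-decrease produces.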