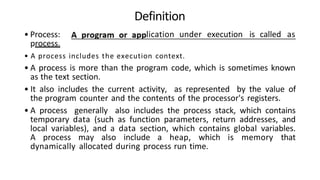

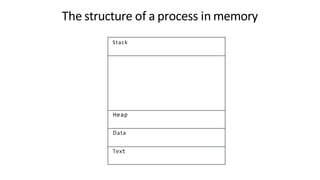

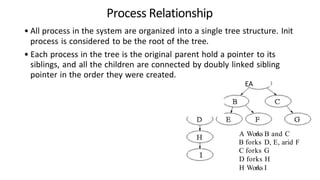

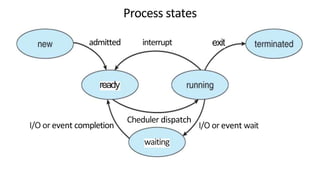

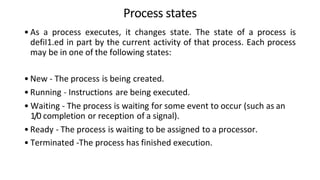

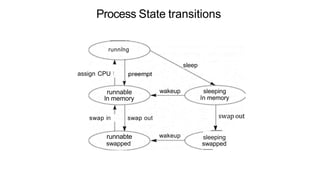

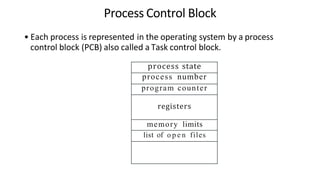

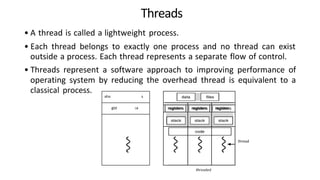

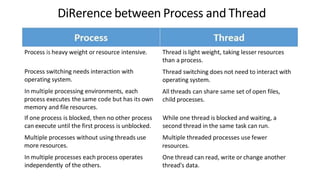

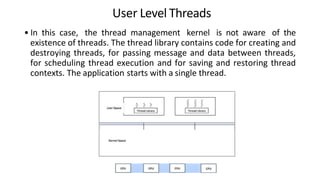

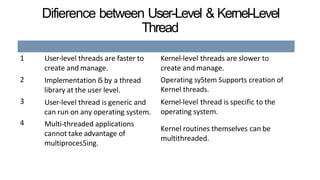

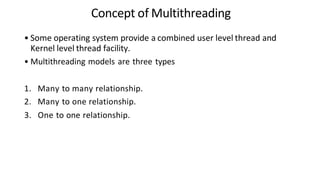

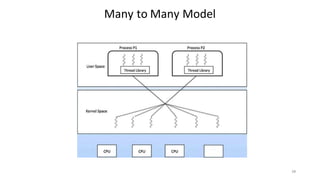

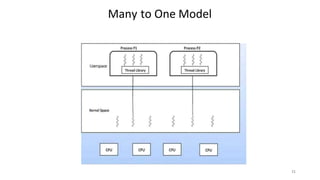

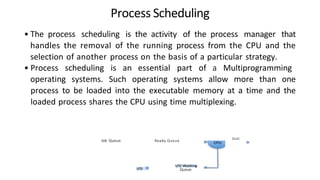

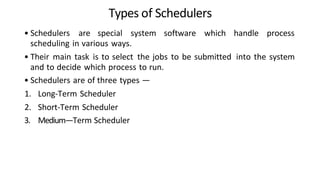

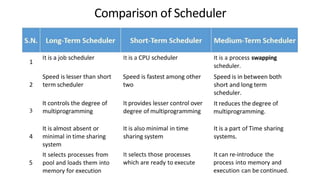

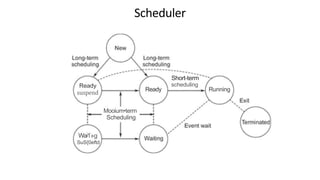

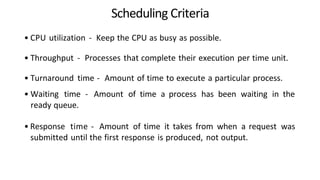

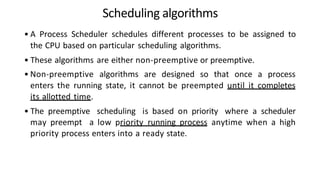

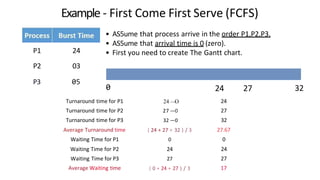

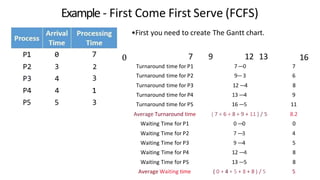

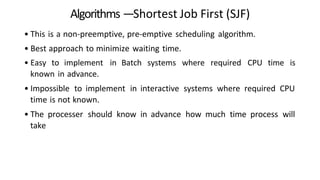

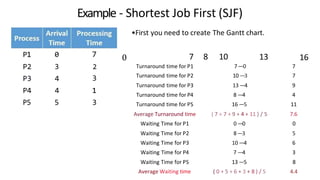

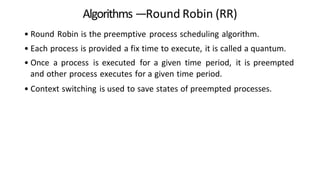

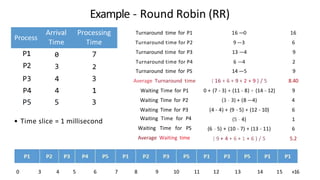

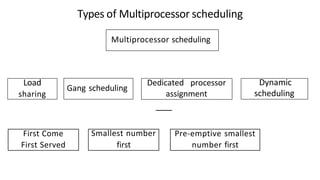

The document provides an in-depth overview of process management in operating systems. It covers core concepts such as the definition of a process, parent–child process relationships, process states, and process control blocks (PCBs). It also discusses threads, multithreading, and process scheduling algorithms, including the types of schedulers and the criteria used to evaluate them, such as CPU utilization. Finally, it contrasts user-level and kernel-level threads and describes multiprocessor scheduling techniques for improving performance.