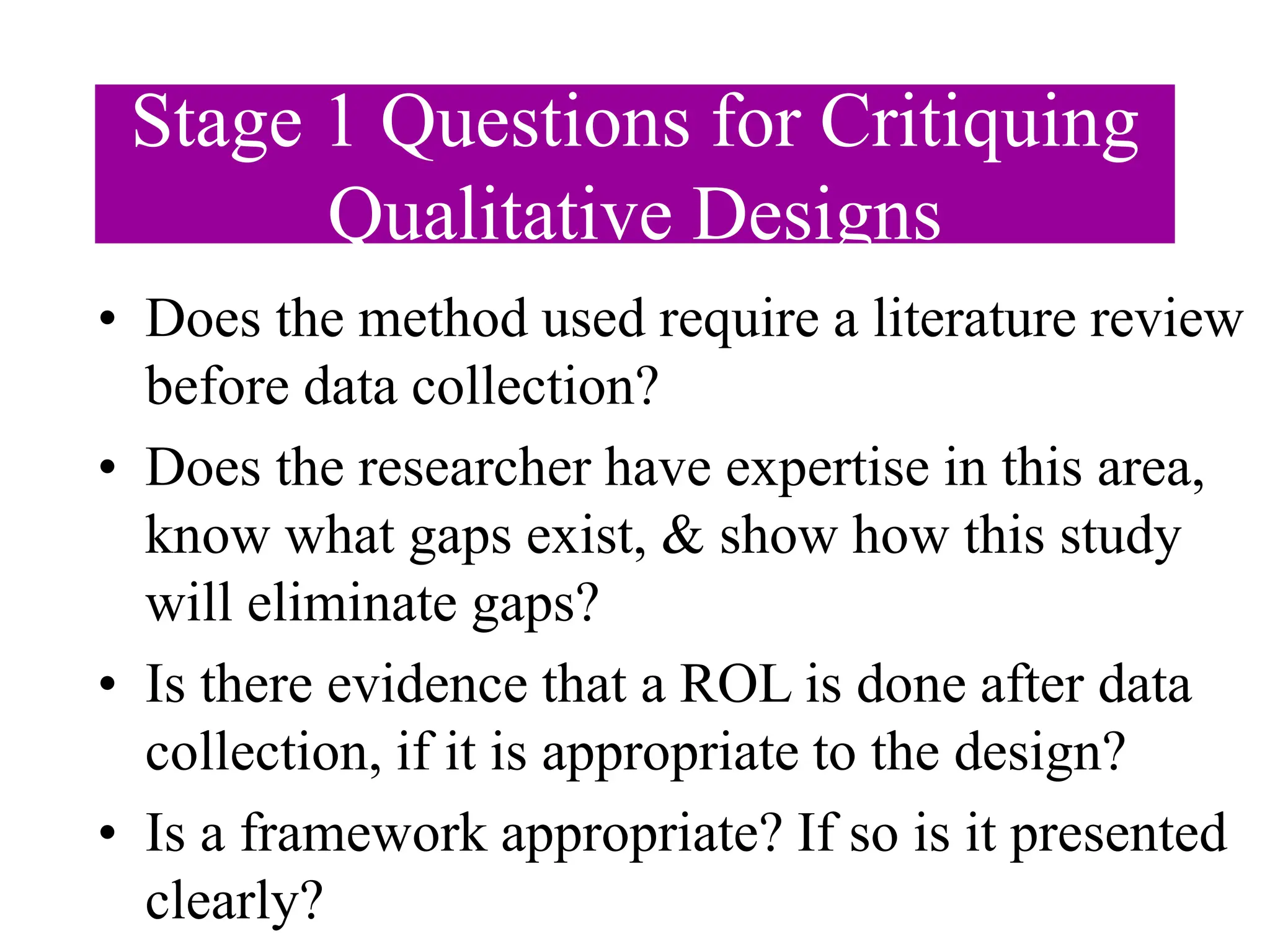

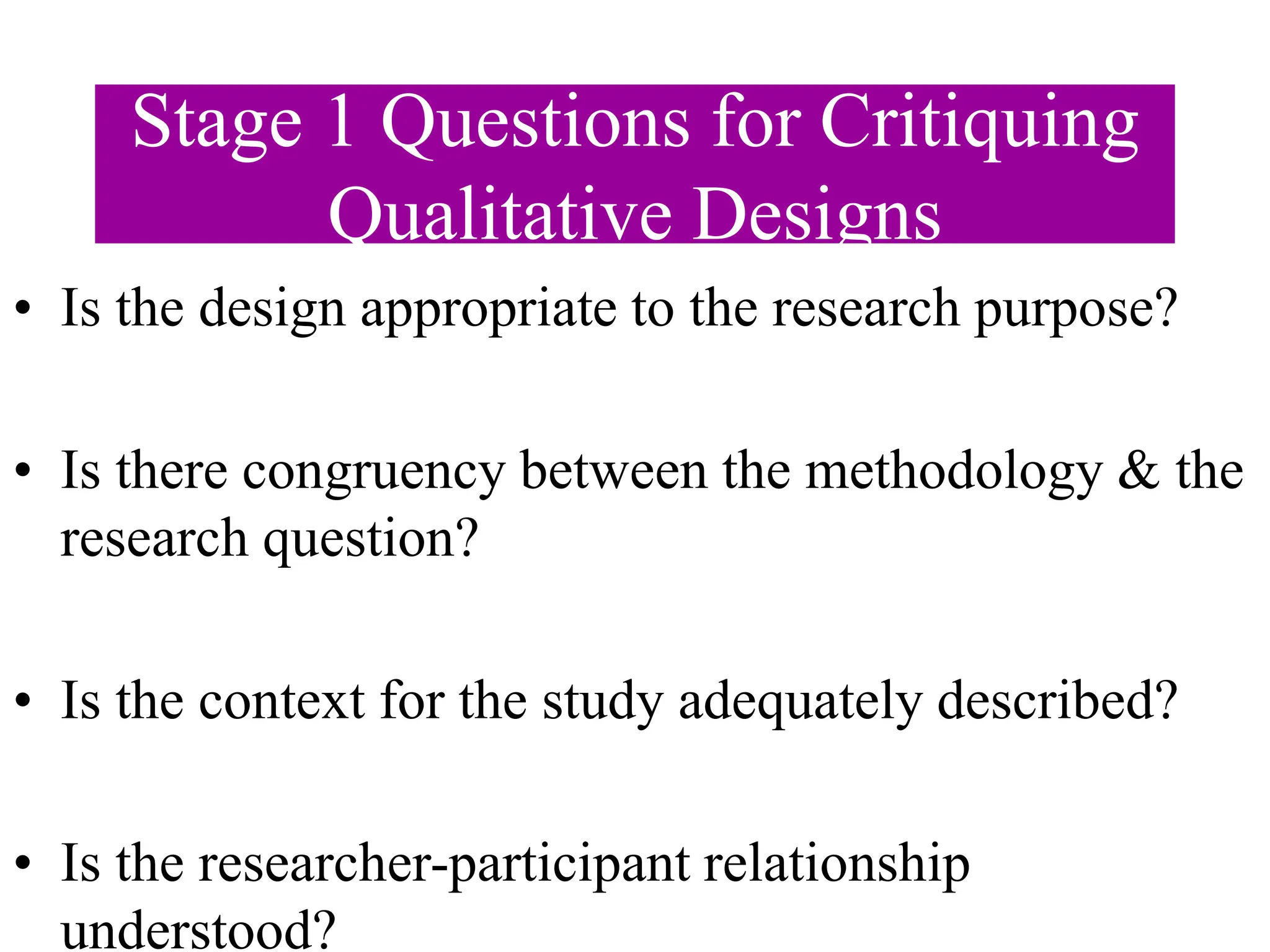

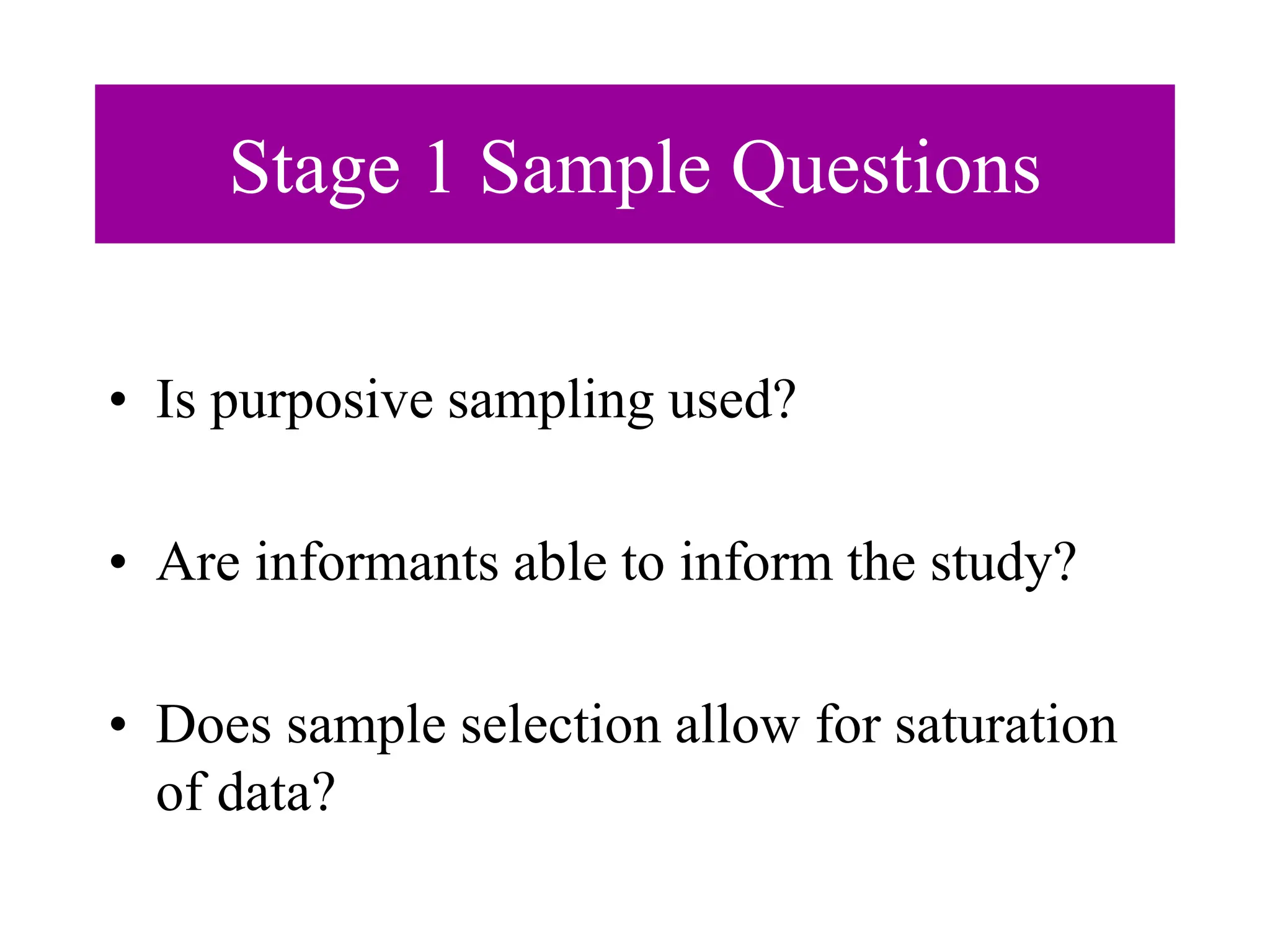

Chapter 20 discusses the research critique process: a critical appraisal of completed research that identifies its strengths and limitations. It outlines the four stages of critique, the criteria for critiquing quantitative and qualitative studies, and essential questions for assessing a study's purpose, design, methodology, data collection, and analysis. The chapter emphasizes ethical considerations and the overall quality of the research as factors in its contribution to nursing knowledge.