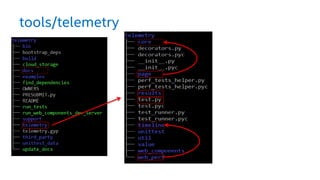

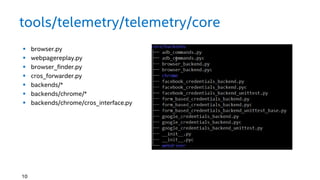

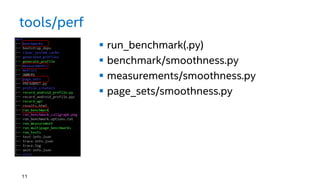

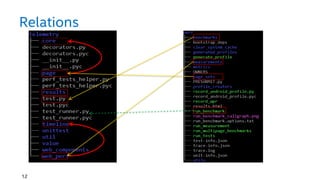

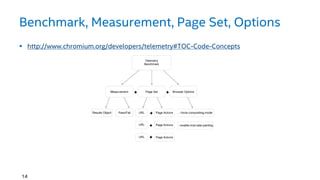

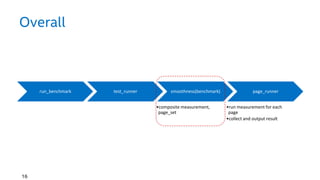

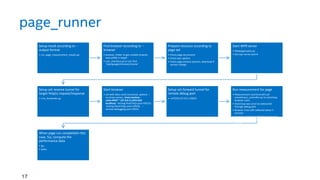

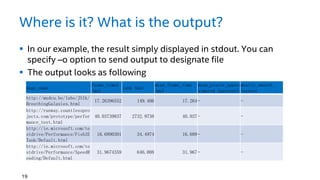

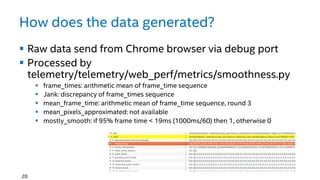

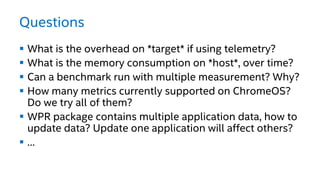

The document discusses Telemetry, the performance testing framework used in Chrome. It gives an overview of Telemetry and its goals. The methodology section walks through running the smoothness benchmark as an example. It then explains the key Telemetry concepts: benchmarks, measurements, page sets, and options. The document shows how these concepts are connected in code and outlines the overall workflow of a benchmark run, from starting the browser to collecting and outputting results. Finally, it describes where the results are stored and how the performance metrics in them are generated and interpreted.
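To make the relationship between these concepts concrete, here is a minimal toy model of the workflow summarized above. It is a sketch under stated assumptions, not Telemetry's actual API. The classes `Options`, `PageSet`, `Measurement`, and `Benchmark`, their fields, and the `run` method are hypothetical stand-ins. No real browser is started: a measurement is modeled as a plain function that returns a number for a given URL.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# NOTE: hypothetical stand-ins for Telemetry concepts, NOT the real Telemetry API.


@dataclass
class Options:
    """Run-time options (hypothetical): how many times to visit each page."""
    repeat: int = 1


@dataclass
class PageSet:
    """The pages a benchmark visits."""
    urls: List[str]


@dataclass
class Measurement:
    """What to measure on each page; a real measurement would inspect the browser."""
    name: str
    measure_page: Callable[[str], float]


@dataclass
class Benchmark:
    """A benchmark ties a measurement to a page set plus options."""
    measurement: Measurement
    page_set: PageSet
    options: Options = field(default_factory=Options)

    def run(self) -> Dict[str, List[float]]:
        results: Dict[str, List[float]] = {}
        # Step 1 (omitted in this toy model): start the browser.
        for url in self.page_set.urls:
            for _ in range(self.options.repeat):
                # Step 2: visit the page and record one value from the measurement.
                value = self.measurement.measure_page(url)
                results.setdefault(url, []).append(value)
        # Step 3: hand the collected per-page values back for output.
        return results


# Usage: a fake measurement that "measures" the URL length.
fake = Measurement("toy_metric", lambda url: float(len(url)))
bench = Benchmark(fake, PageSet(["a.com", "bb.com"]), Options(repeat=2))
print(bench.run())  # {'a.com': [5.0, 5.0], 'bb.com': [6.0, 6.0]}
```

The design mirrors the separation the document describes: the page set says *where* to run, the measurement says *what* to collect, and the options say *how* to run, so each piece can be swapped independently.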