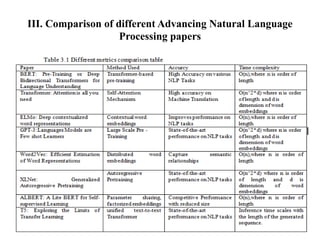

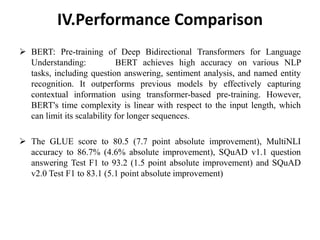

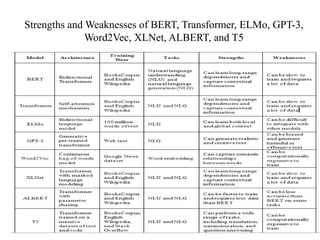

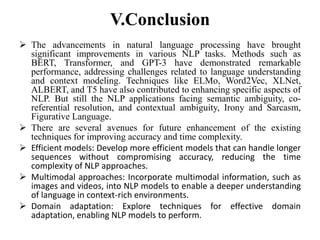

This document summarizes recent advances in natural language processing and identifies remaining challenges. It discusses influential papers on BERT and Transformer models that have significantly improved language understanding but have limitations regarding context and long sequences. The document compares methods including BERT, Transformer, ELMo, Word2Vec, XLNet, ALBERT and T5, and identifies their strengths and weaknesses. It concludes that while NLP has made progress, challenges around ambiguity, co-reference resolution and context remain, and more efficient, multimodal and domain-adaptive models are needed to further enhance NLP.

![II. Literature Survey

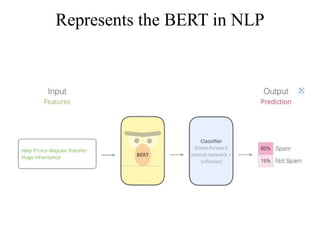

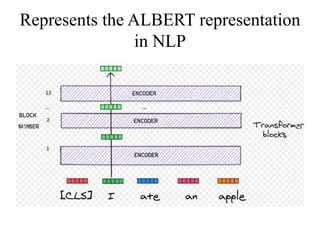

This influential paper introduces BERT (Bidirectional Encoder

Representations from Transformers), a powerful pre-training technique that

significantly advances language understanding tasks. BERT demonstrates

remarkable performance on a wide range of NLP benchmarks, addressing

challenges related to context modeling and language understanding [1].

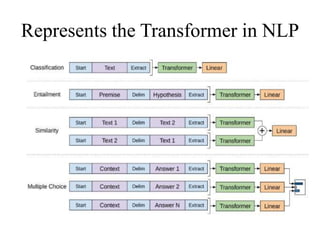

The Transformer model introduced in this paper revolutionized NLP by utilizing

self-attention mechanisms. This approach improves the modeling of dependencies

between words, leading to significant advancements in machine translation and

other NLP tasks. The Transformer architecture addresses challenges related to long-

range dependencies and facilitates parallelization during training [2].](https://image.slidesharecdn.com/srinu-231214100810-a660cfad/85/srinu-pptx-4-320.jpg)
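
To make the self-attention idea concrete, here is a minimal sketch in plain Python. It is an illustration under simplifying assumptions, not the implementation from [2]: the names `softmax`, `self_attention`, and `tokens` are hypothetical, and queries, keys, and values are all taken to be the input vectors themselves, whereas a real Transformer layer learns a separate projection for each.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with identity projections.

    x: list of token vectors (lists of floats, all the same length).
    Assumption: queries, keys, and values are x itself.
    """
    d = len(x[0])
    outputs, weights = [], []
    # Each position is computed independently of the others,
    # which is why this step can be parallelized during training.
    for q in x:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        # Weighted sum over every position, however far away it is.
        out = [sum(w[j] * x[j][i] for j in range(len(x))) for i in range(d)]
        outputs.append(out)
        weights.append(w)
    return outputs, weights

# Toy input: three 2-dimensional token vectors (made-up values).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens)
```

Each row of `weights` sums to 1 and shows how strongly one token attends to every other token. Because every position looks at the whole sequence in a single step, distance between words does not limit which dependencies can be captured.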