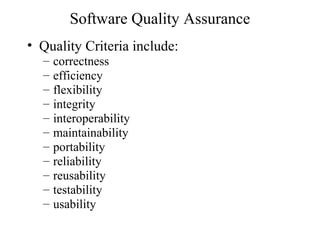

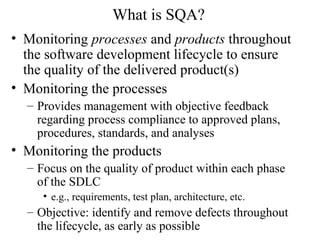

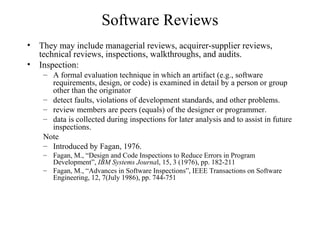

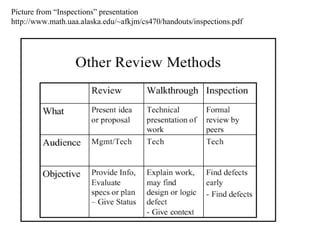

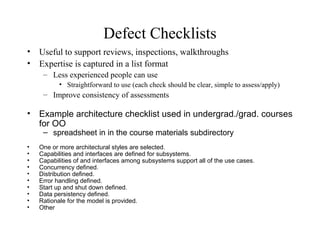

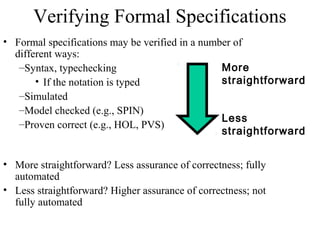

The document discusses software quality assurance (SQA). It defines quality as meeting requirements and user expectations. Quality criteria include correctness, efficiency, and flexibility, among others. SQA involves monitoring processes and products throughout development to ensure quality. This includes reviewing requirements, design, code, and testing products, as well as assessing conformance to standards and processes.