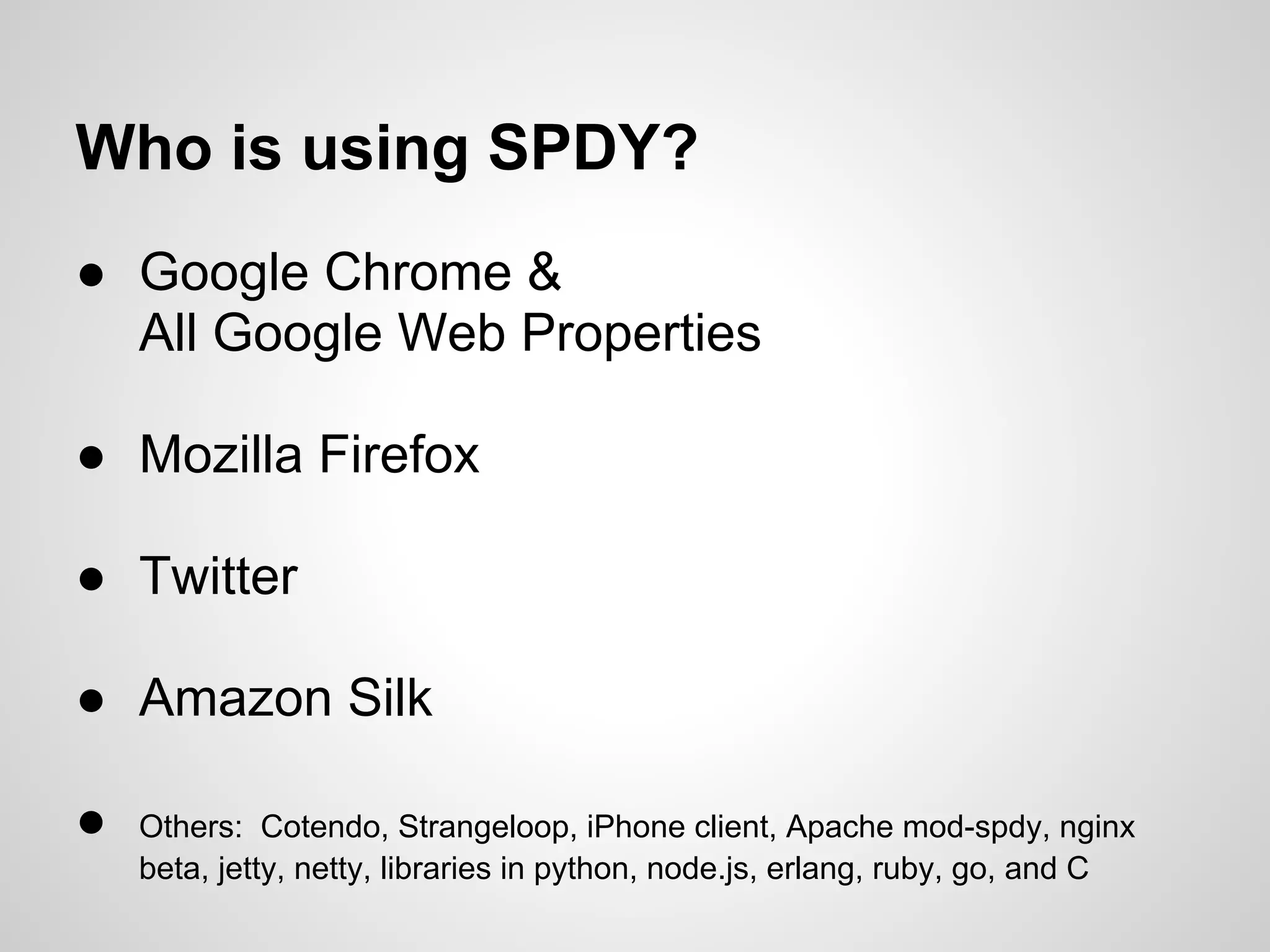

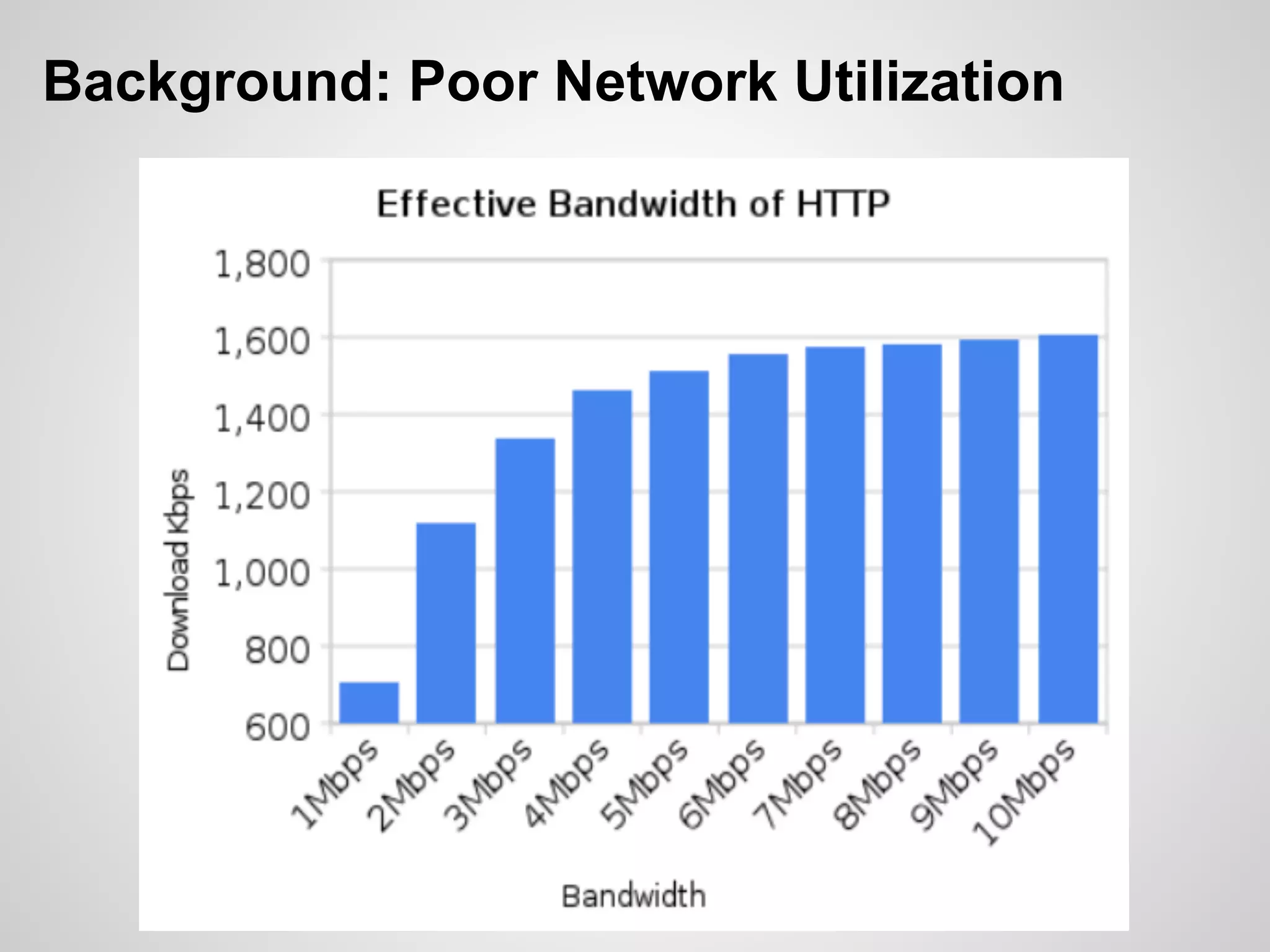

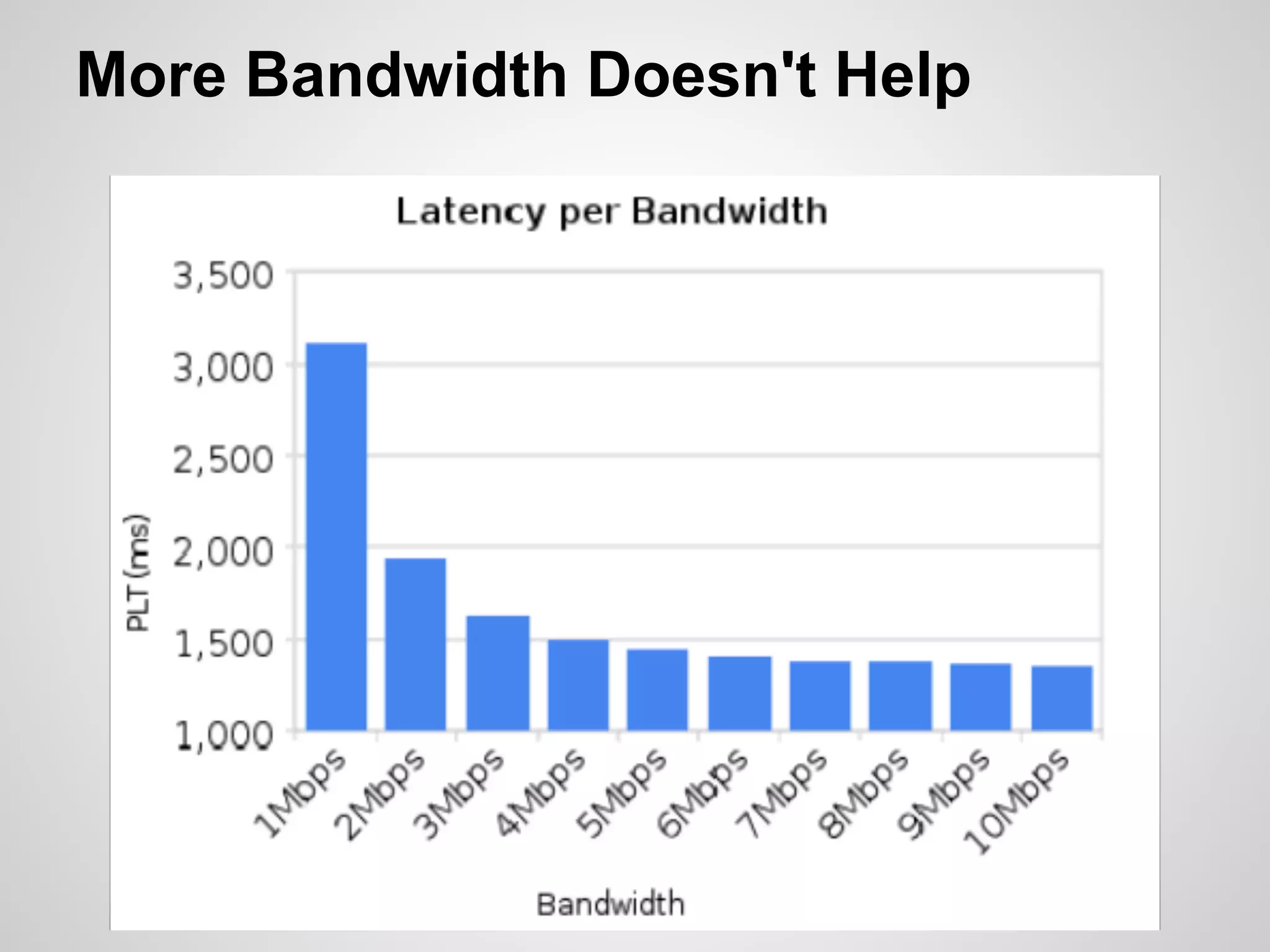

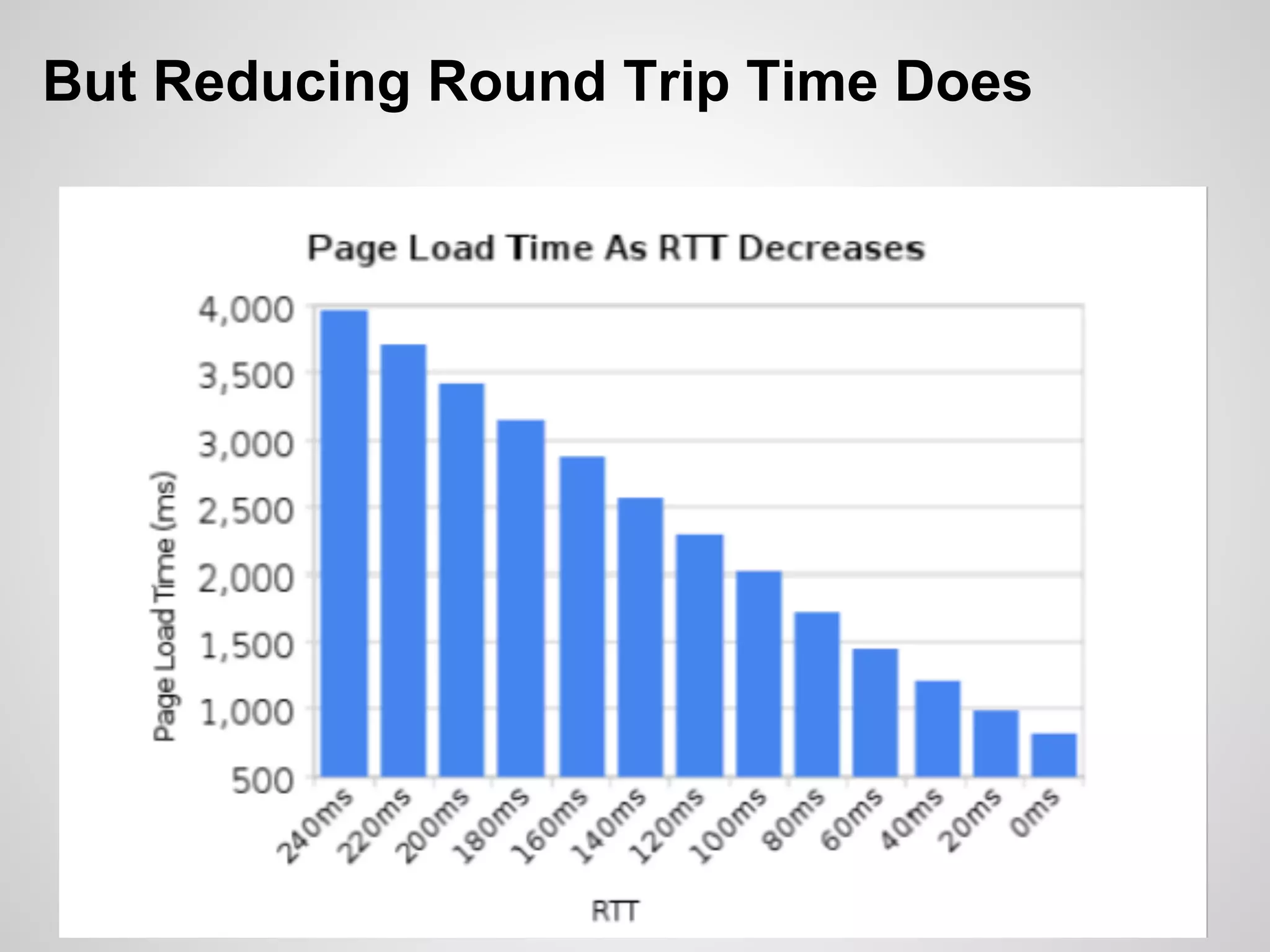

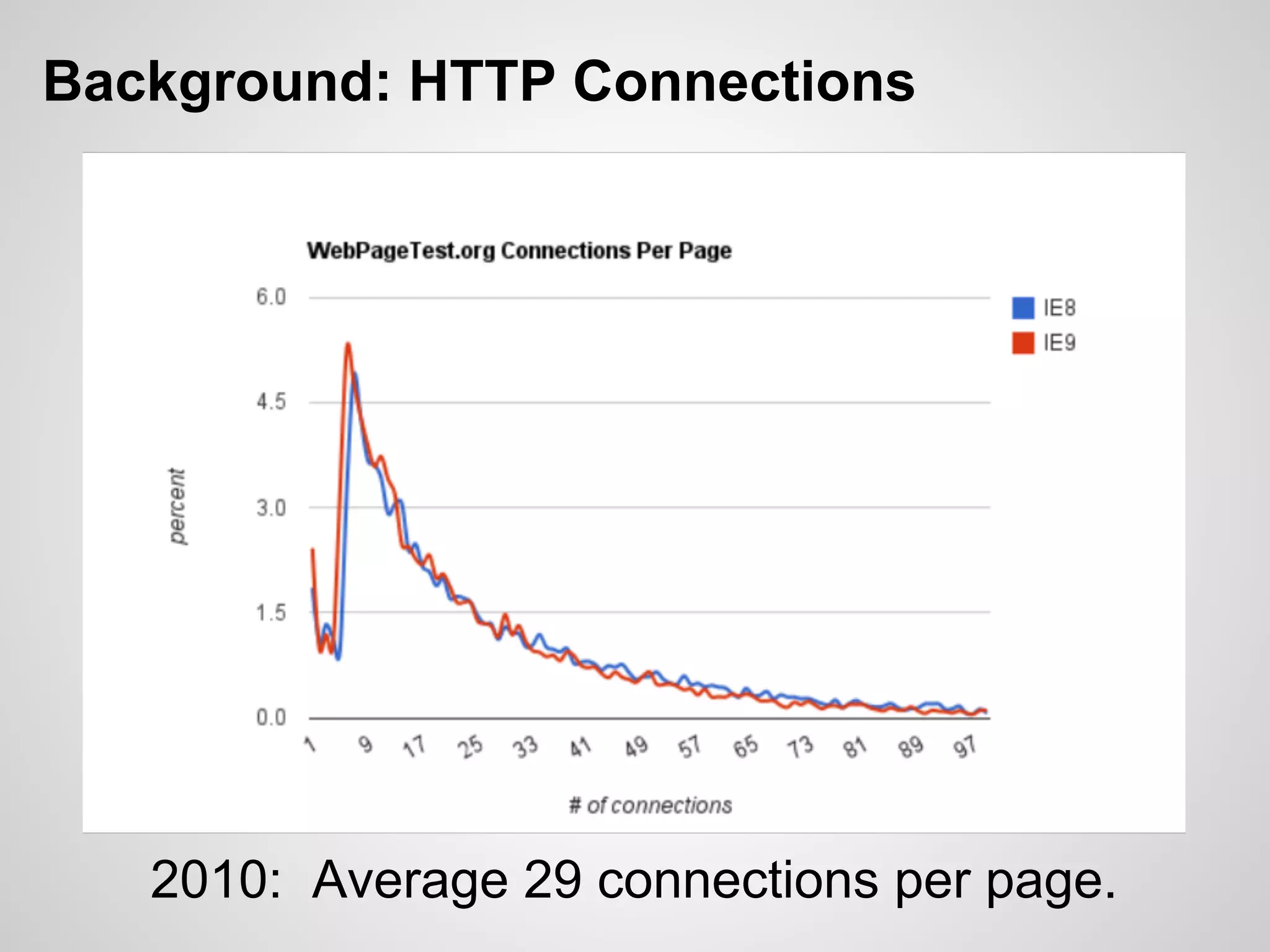

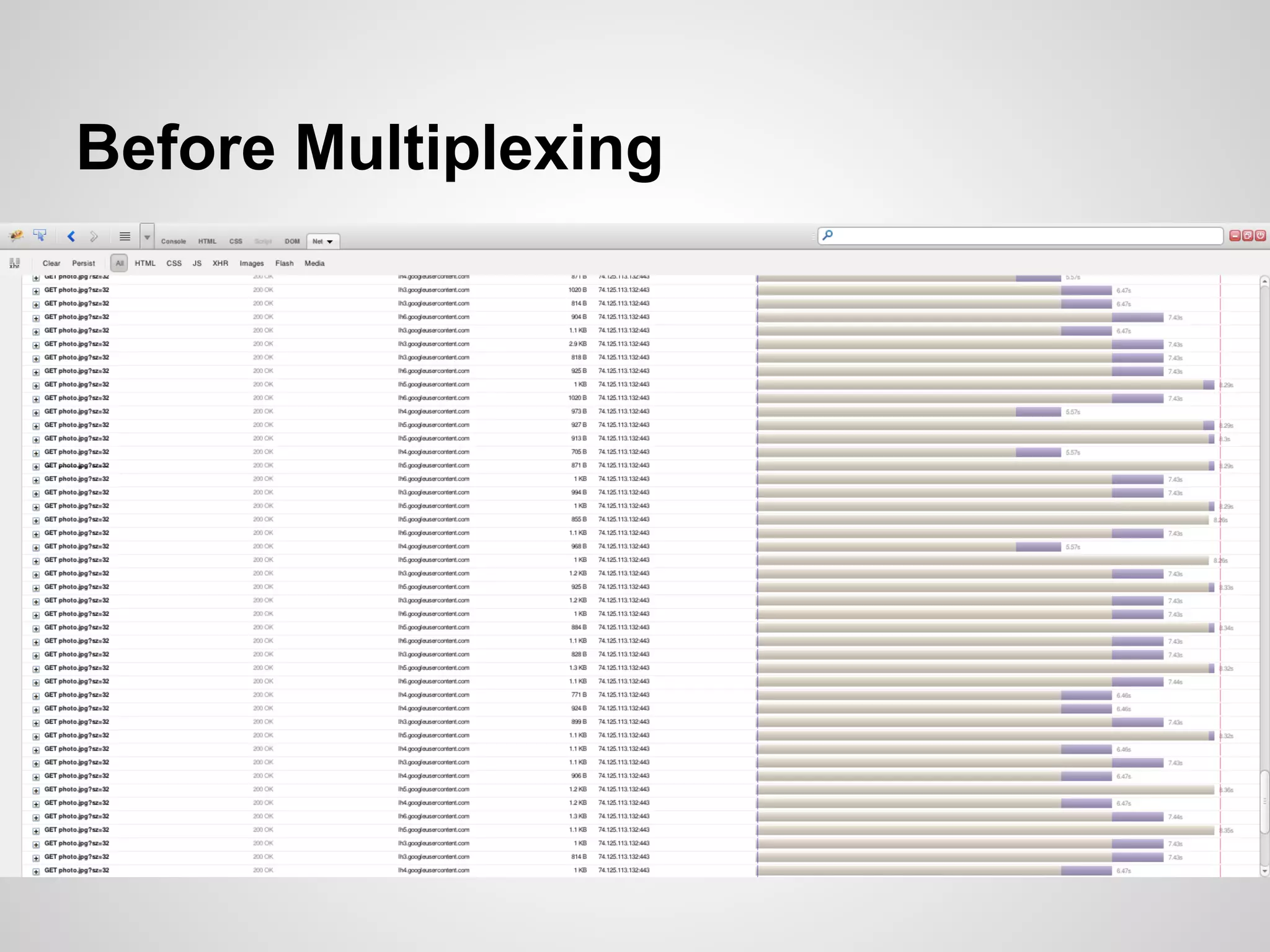

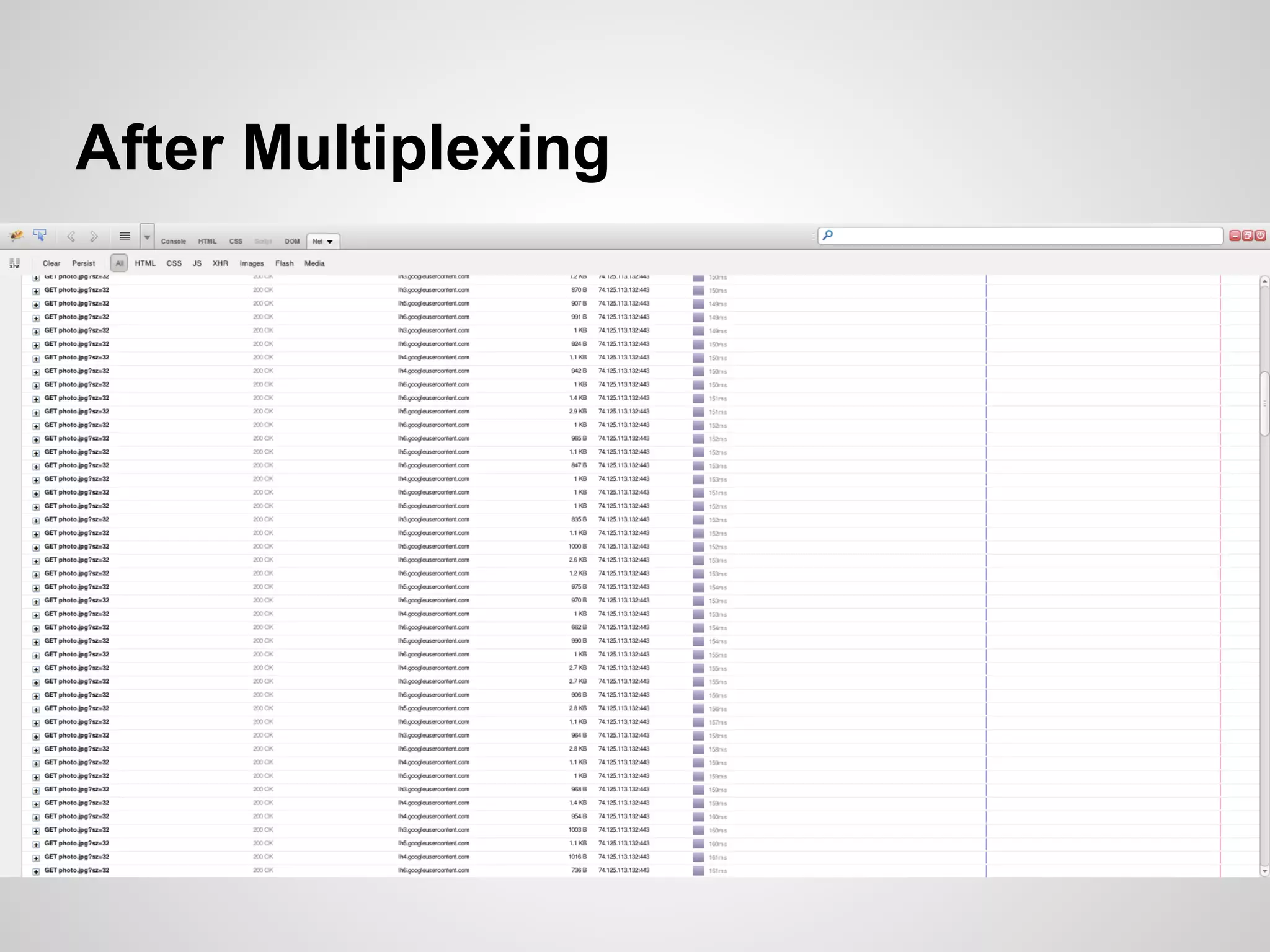

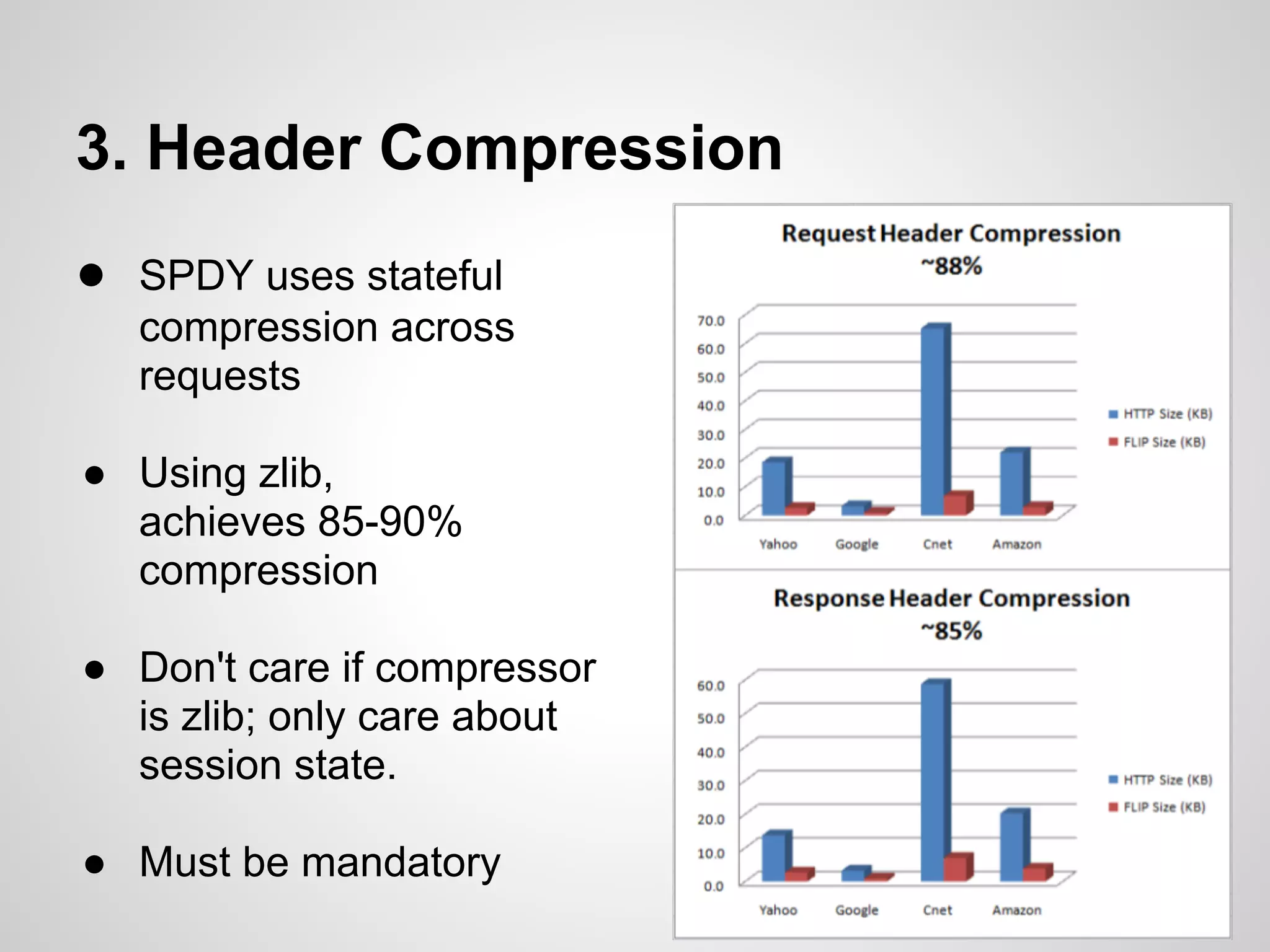

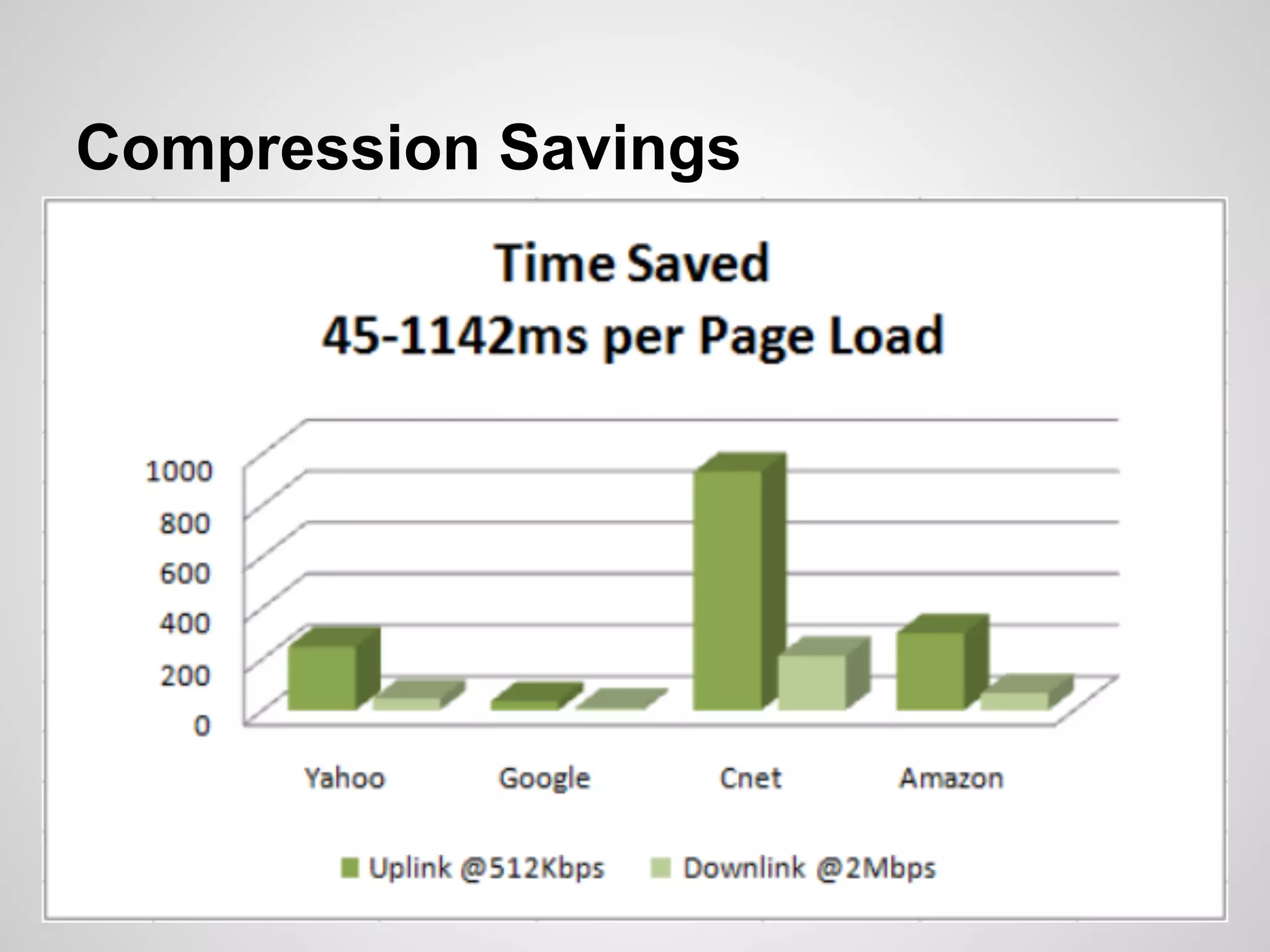

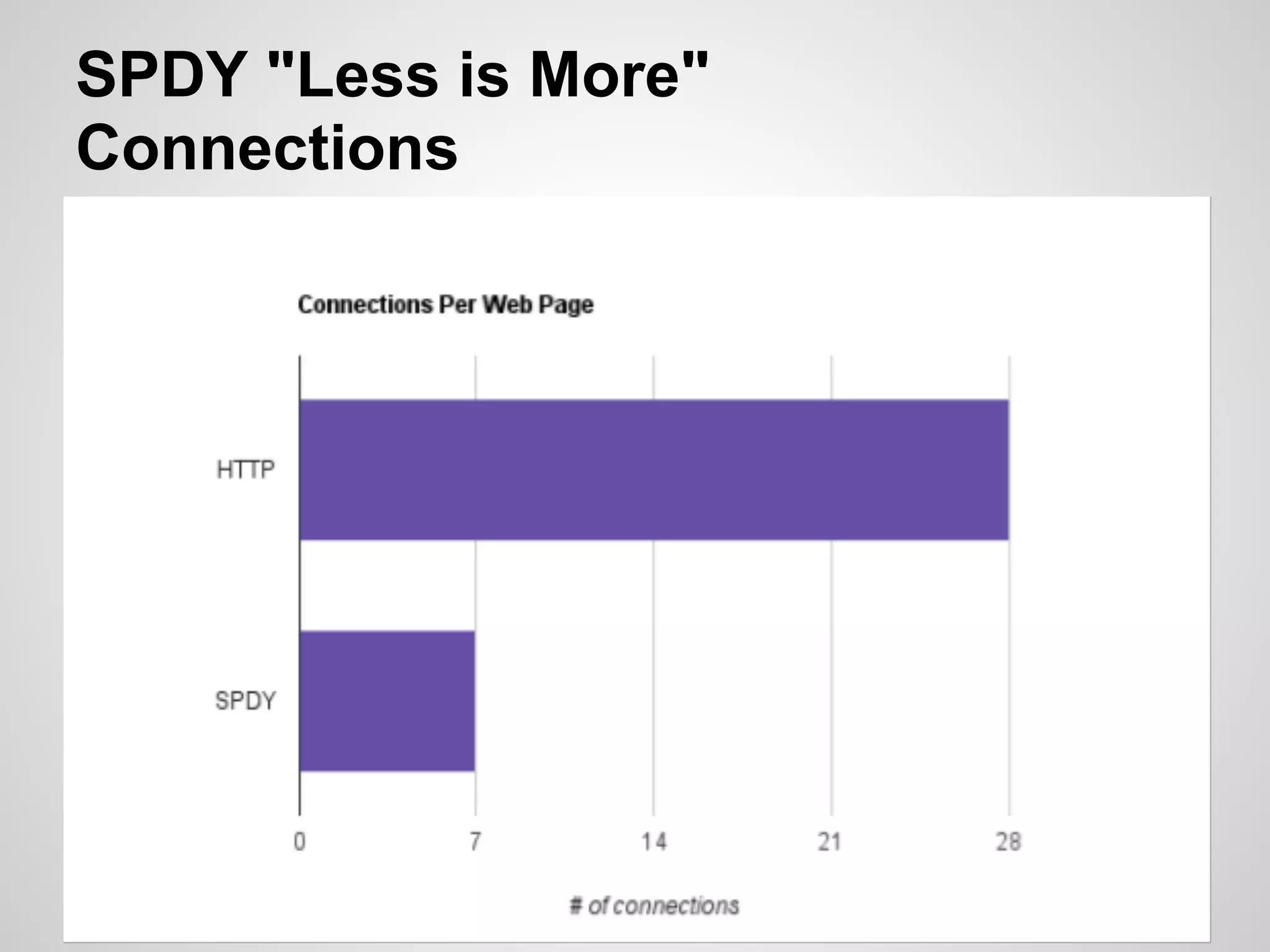

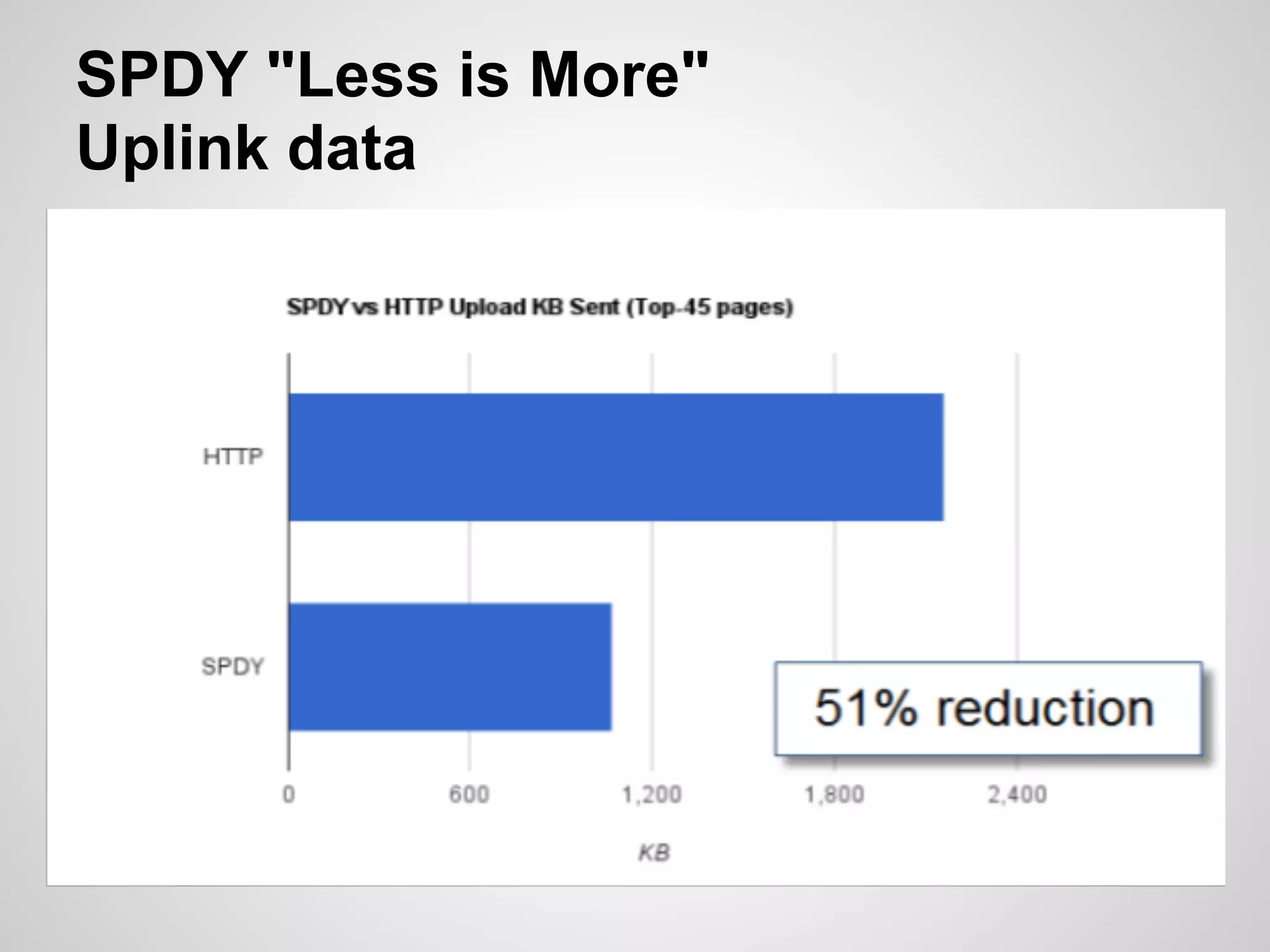

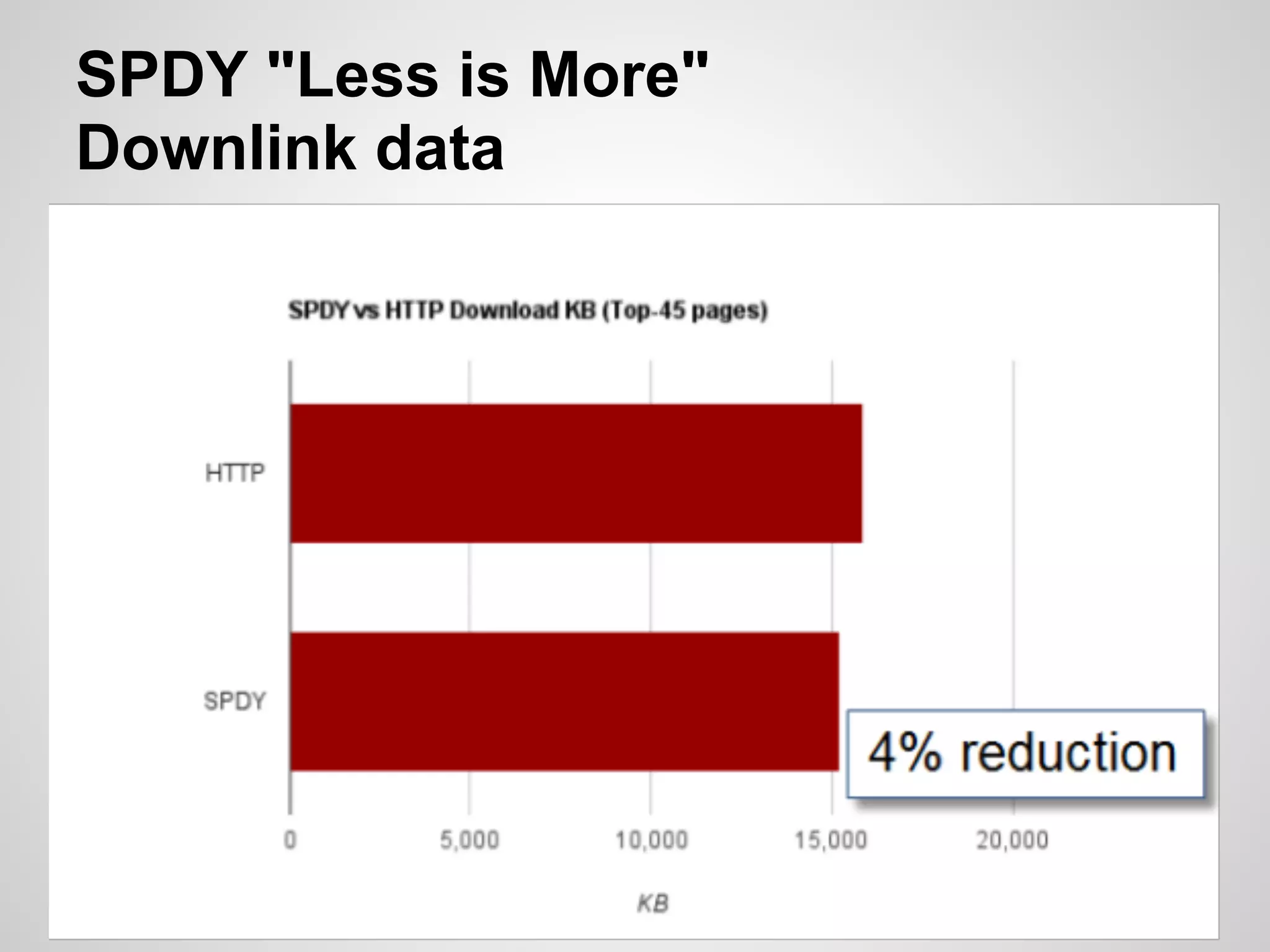

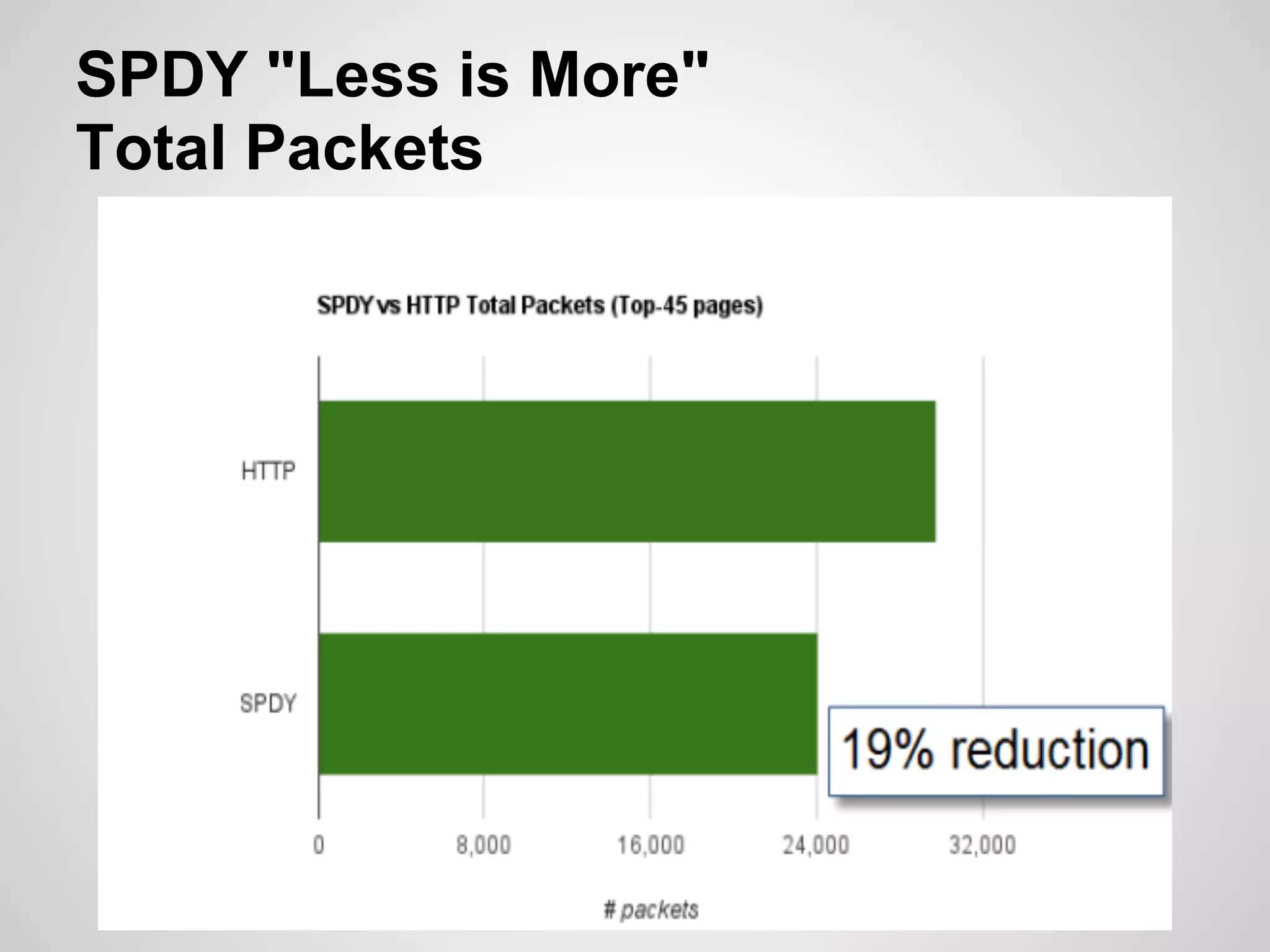

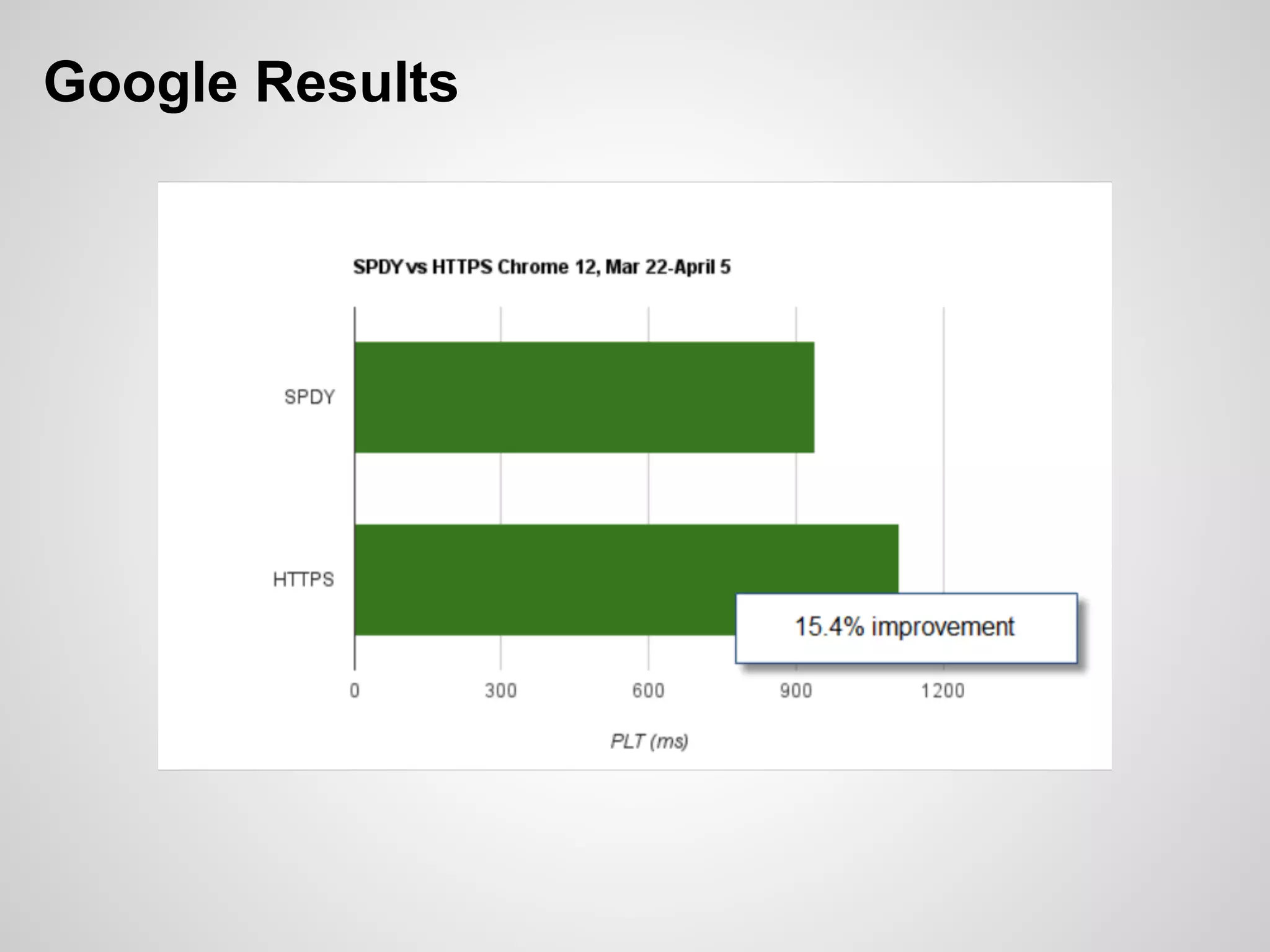

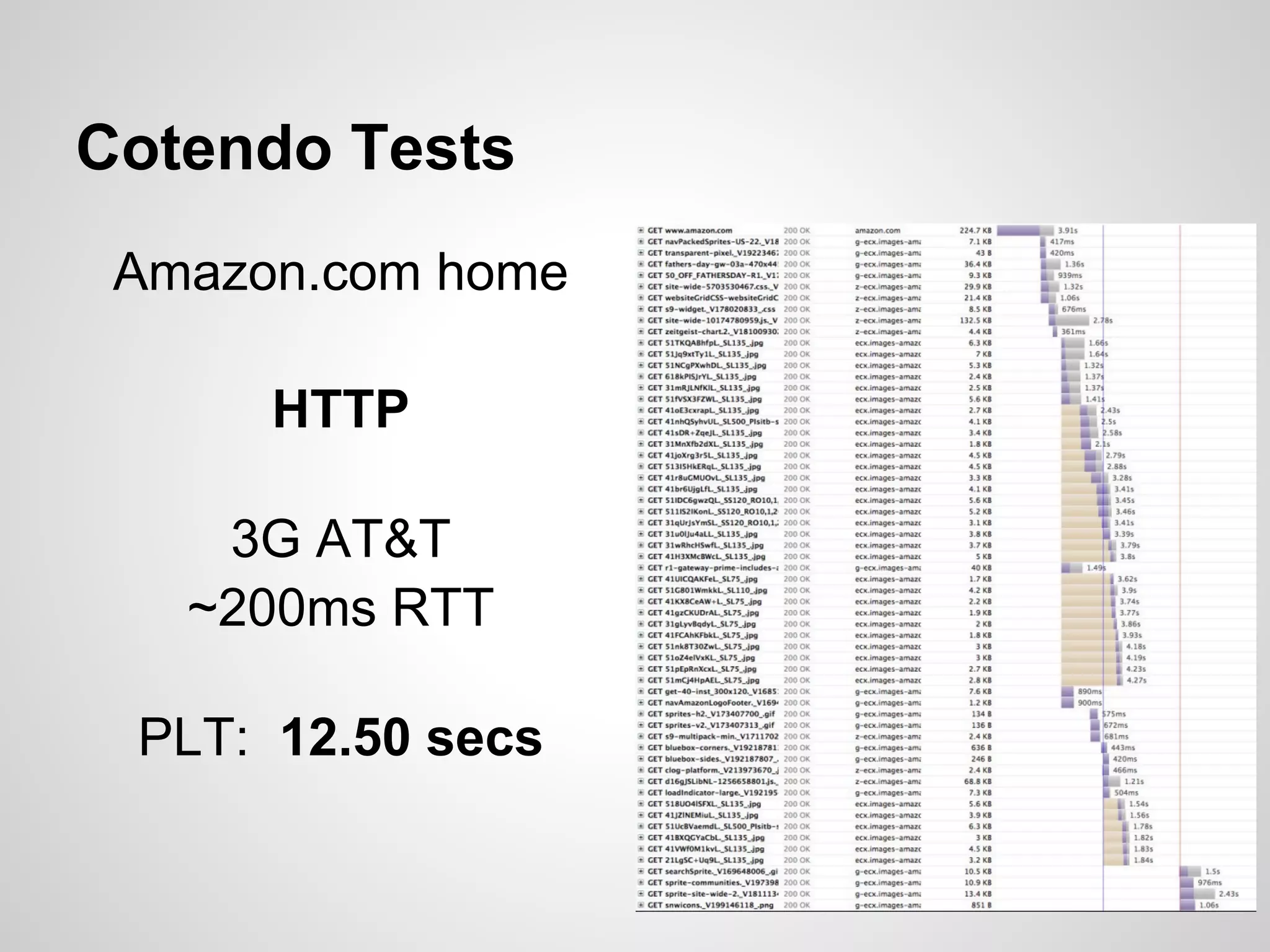

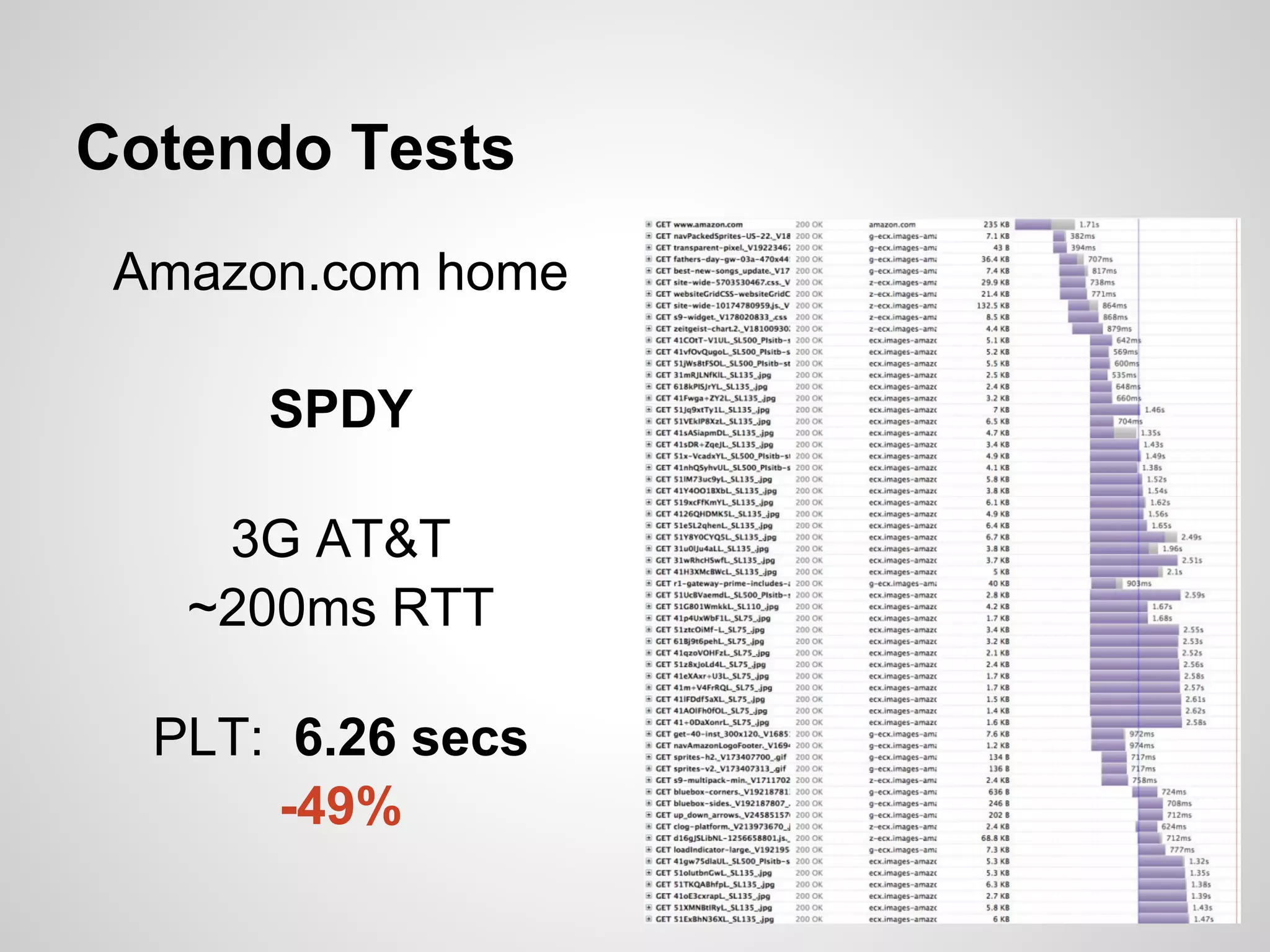

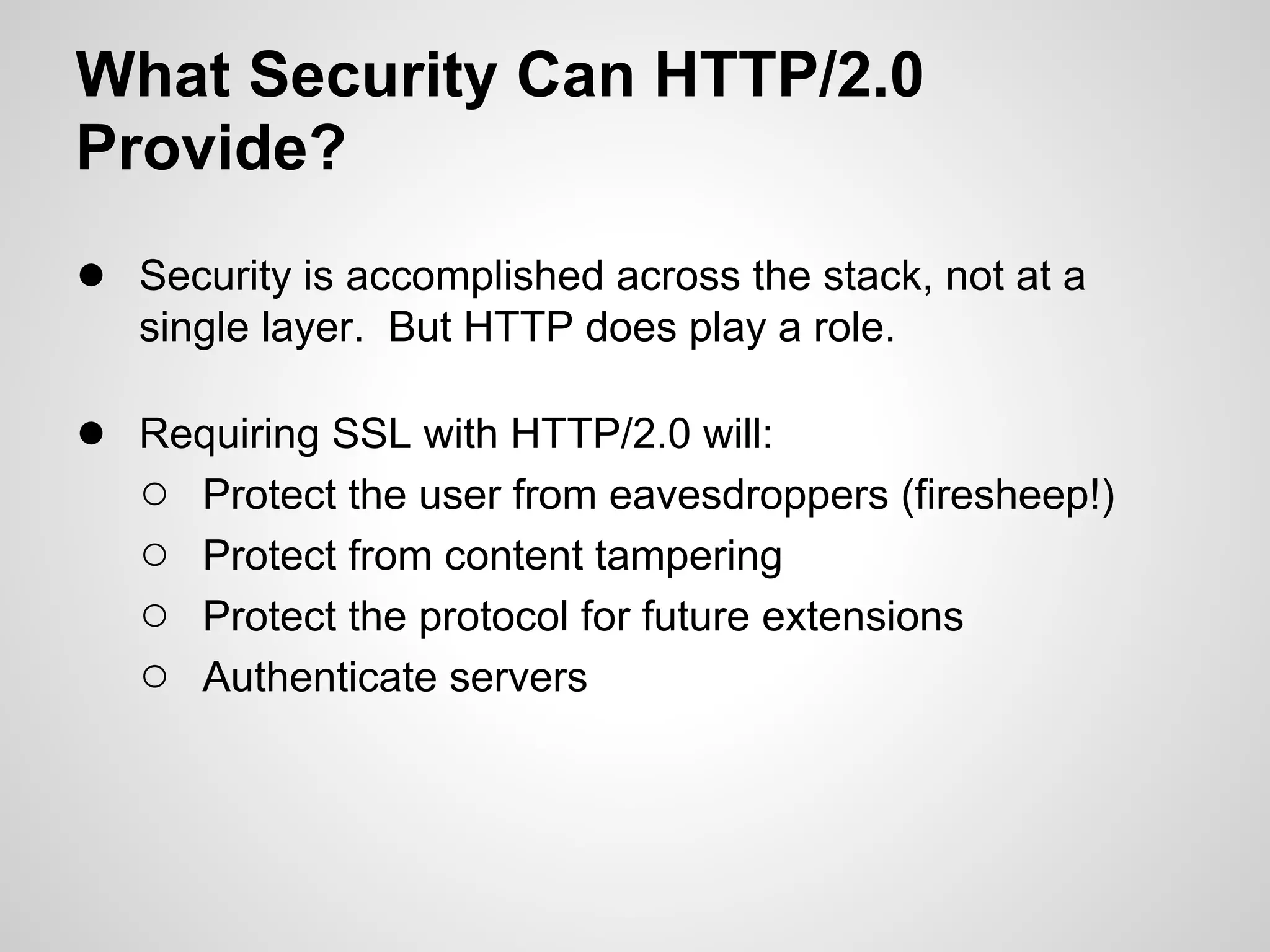

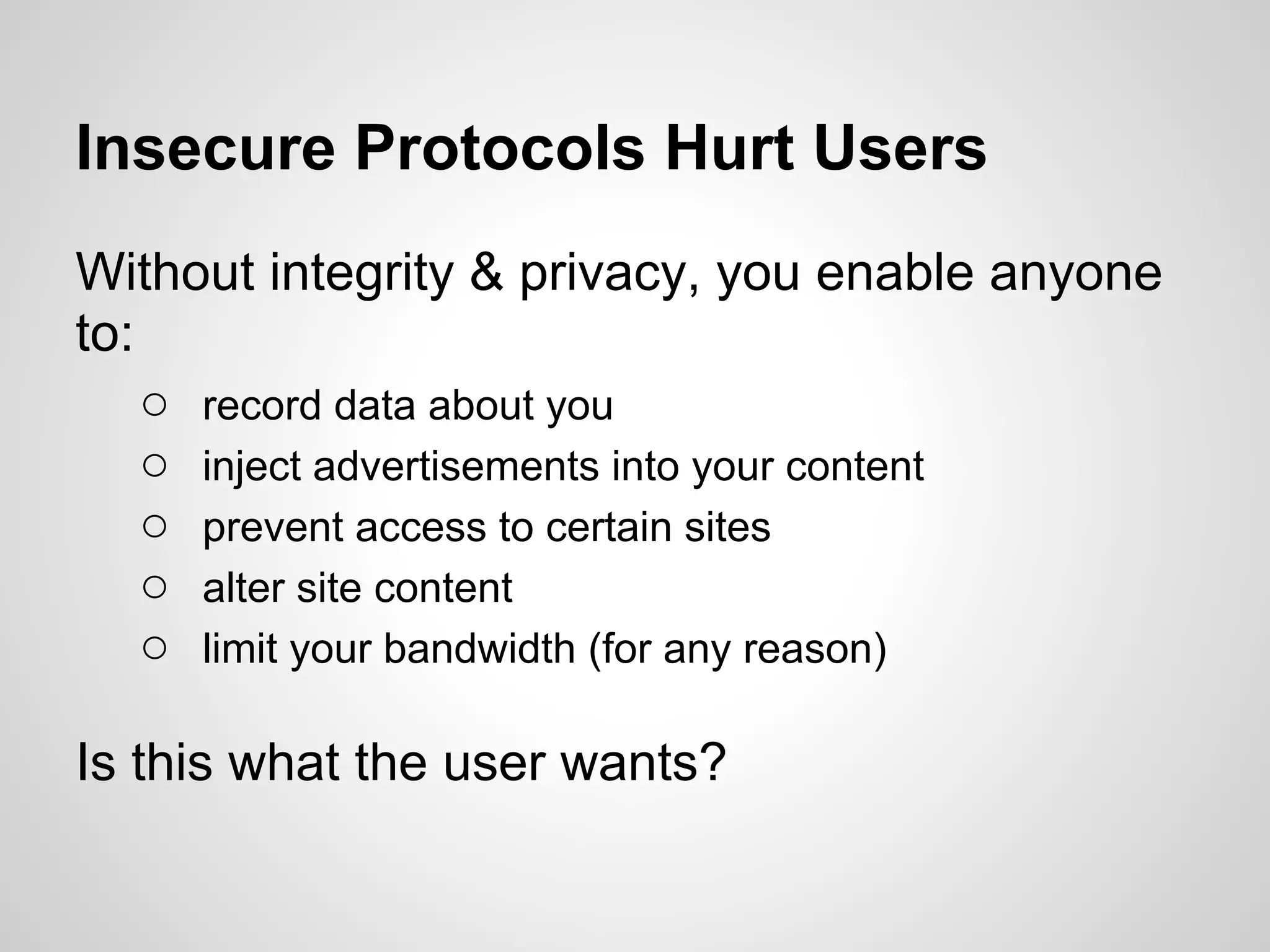

The document discusses SPDY, a protocol designed to reduce web page latency and improve network performance through features such as multiplexing, request prioritization, and header compression. It highlights SPDY's adoption by major browsers and platforms, emphasizes its benefits over traditional HTTP connections, and addresses challenges such as security and mobile usage. The author advocates incorporating SPDY's concepts into HTTP/2.0 while keeping the focus on usability and performance.
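
To make the header compression benefit concrete, the following is a minimal sketch rather than SPDY's actual wire format. It assumes a single zlib compression context shared by all requests on one connection, which is the general idea behind SPDY's header compression. The header values, the text serialization, and the helper names `encode_headers` and `compress_stream` are hypothetical, chosen only for illustration.

```python
import zlib


def encode_headers(headers):
    # Simplified text serialization (an assumption for this sketch);
    # real SPDY uses a binary name/value block.
    return "".join(f"{k}: {v}\r\n" for k, v in headers.items()).encode("ascii")


def compress_stream(header_sets):
    # One compressor is reused for every request on the connection, so
    # later header blocks can back-reference earlier ones.
    comp = zlib.compressobj()
    sizes = []
    for headers in header_sets:
        chunk = comp.compress(encode_headers(headers))
        chunk += comp.flush(zlib.Z_SYNC_FLUSH)  # emit this block, keep the context
        sizes.append(len(chunk))
    return sizes


# Hypothetical headers that repeat almost verbatim across requests.
common = {
    "host": "example.com",
    "user-agent": "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/1.0",
    "accept": "text/html,application/xhtml+xml",
    "accept-encoding": "gzip,deflate",
    "cookie": "session=abc123; theme=dark",
}
requests = [dict(common, path="/index.html"), dict(common, path="/style.css")]

raw = [len(encode_headers(h)) for h in requests]
sizes = compress_stream(requests)
print("raw bytes:       ", raw)
print("compressed bytes:", sizes)
```

Because the second request repeats nearly every header from the first, its compressed block should come out much smaller than both its raw size and the first compressed block. That redundancy across requests is what per-connection header compression exploits.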