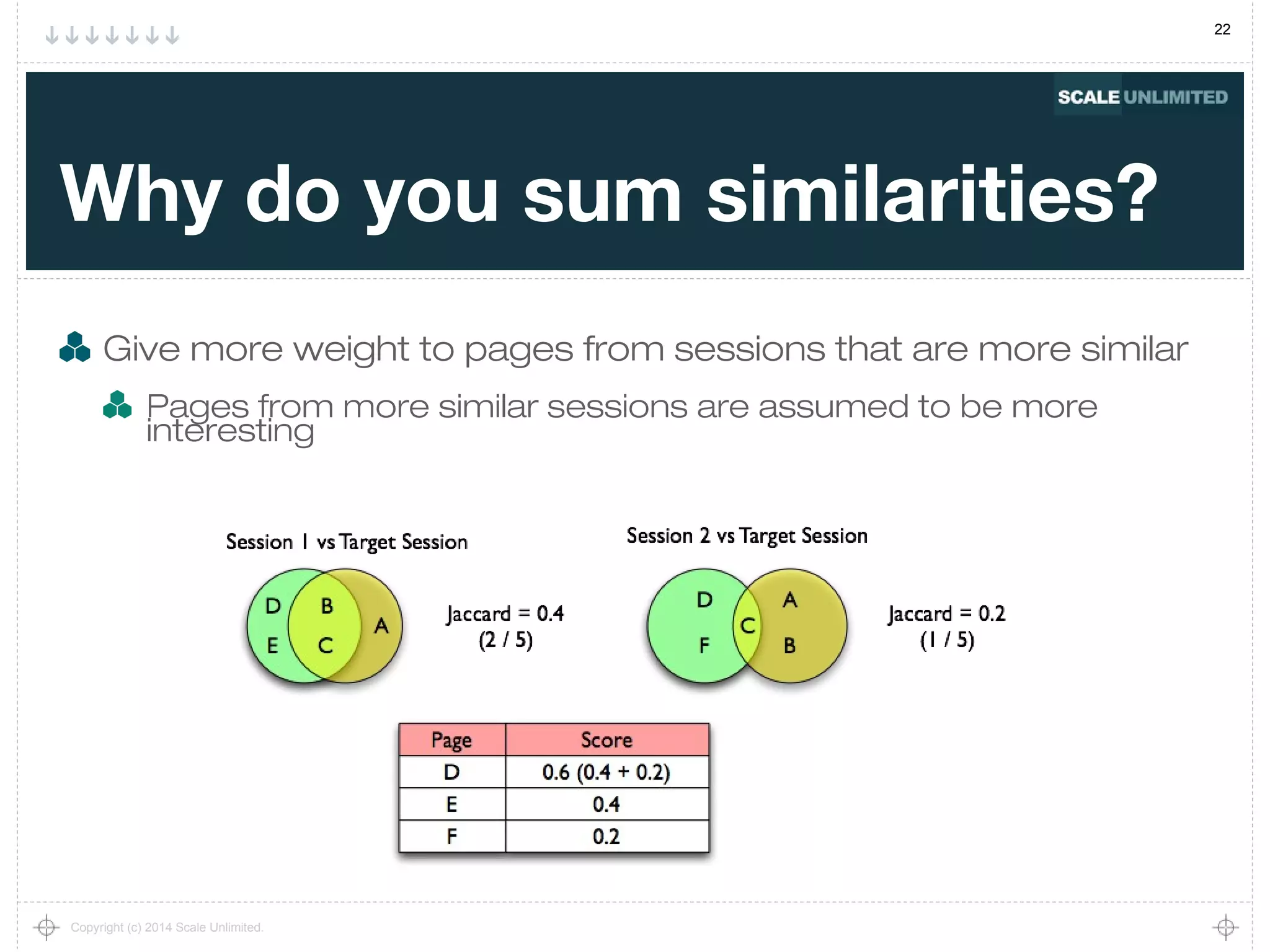

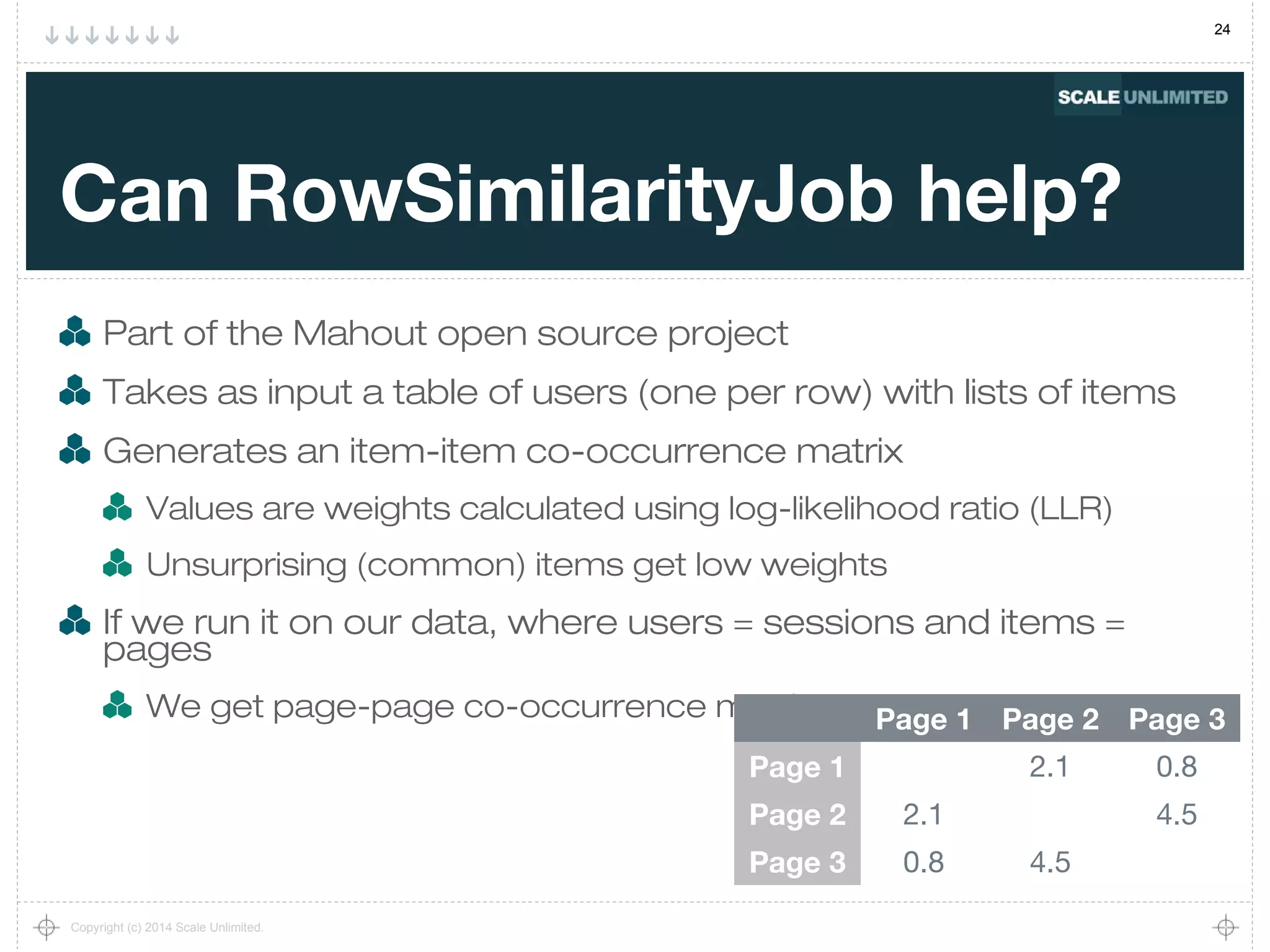

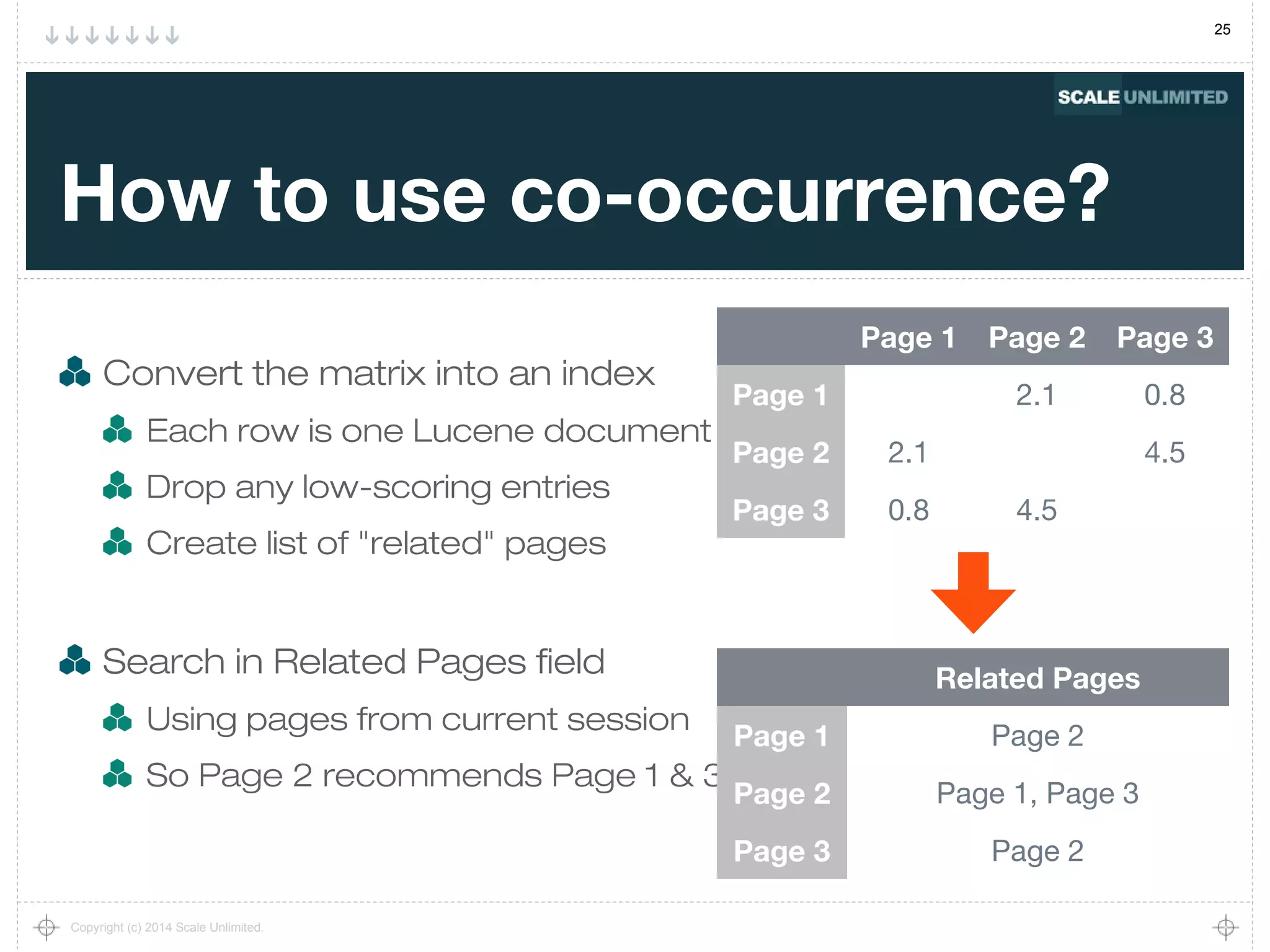

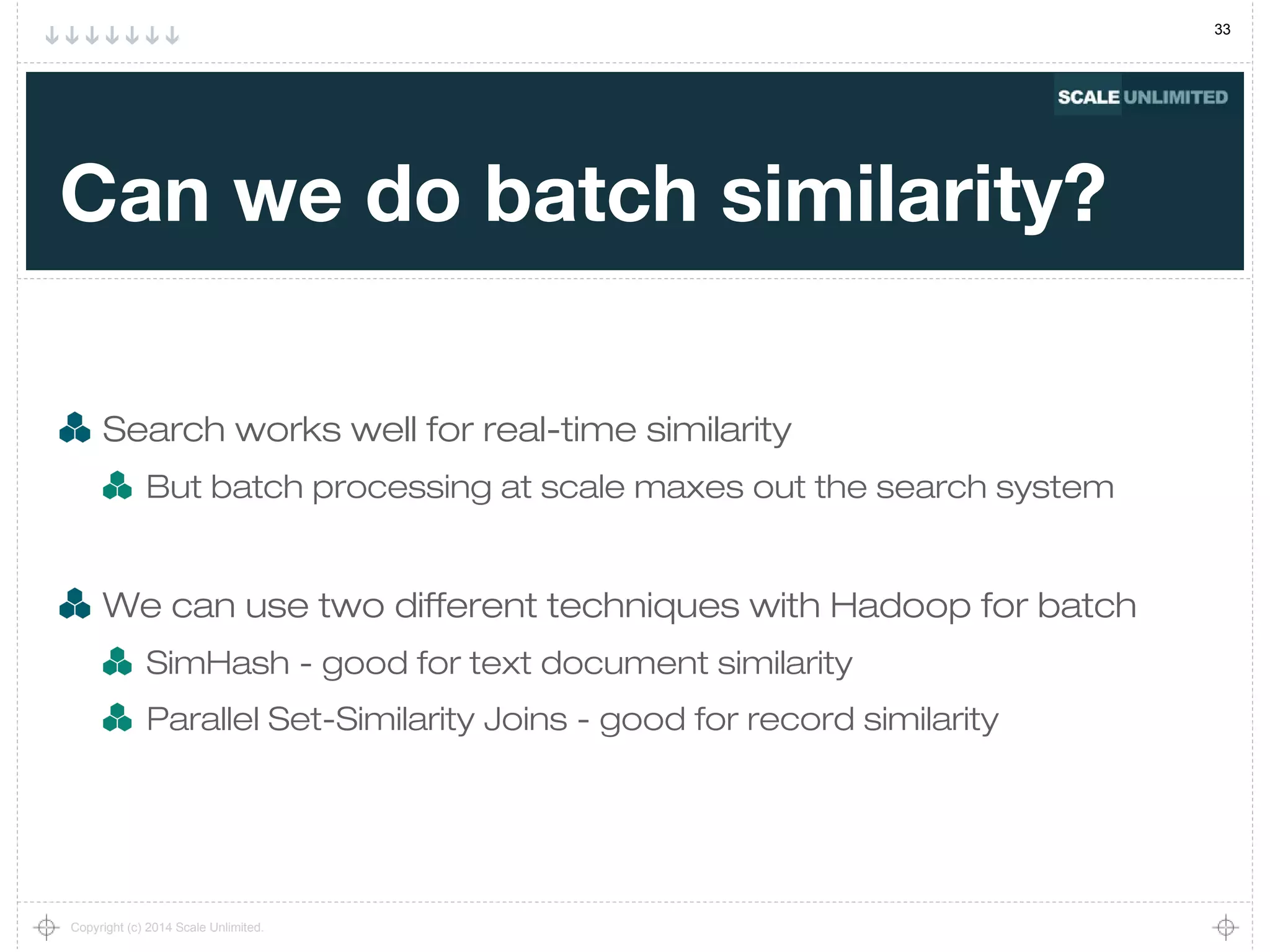

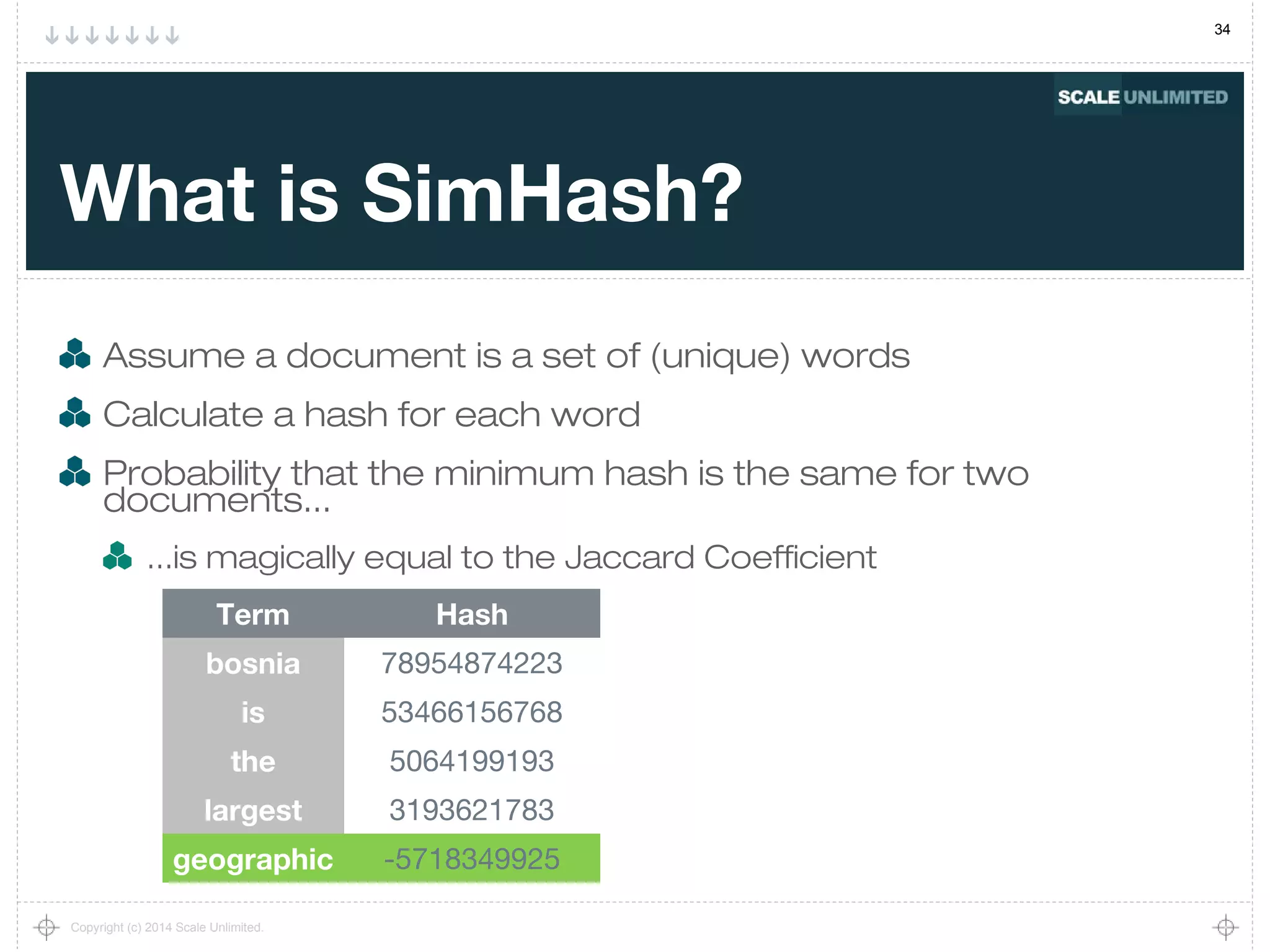

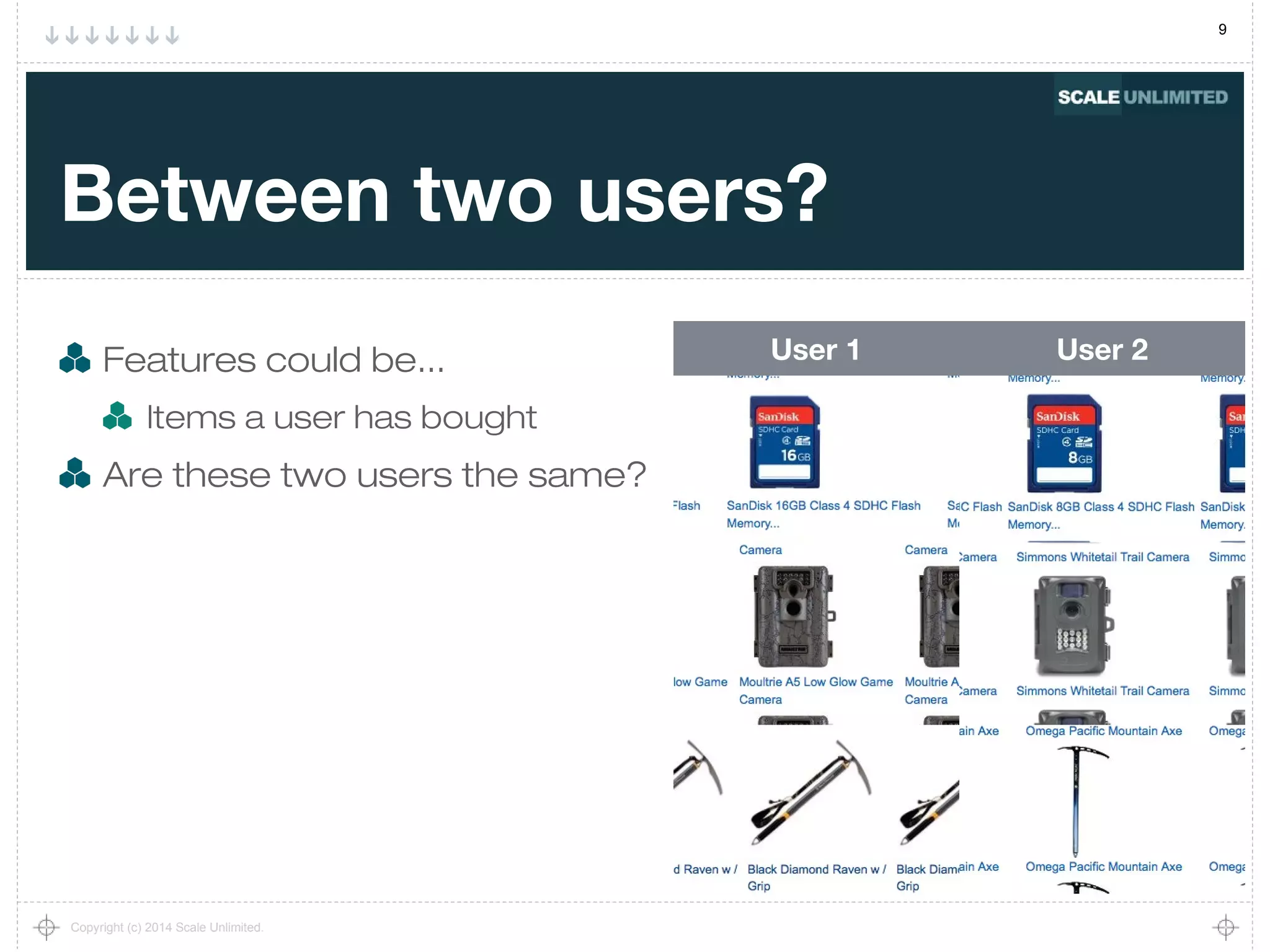

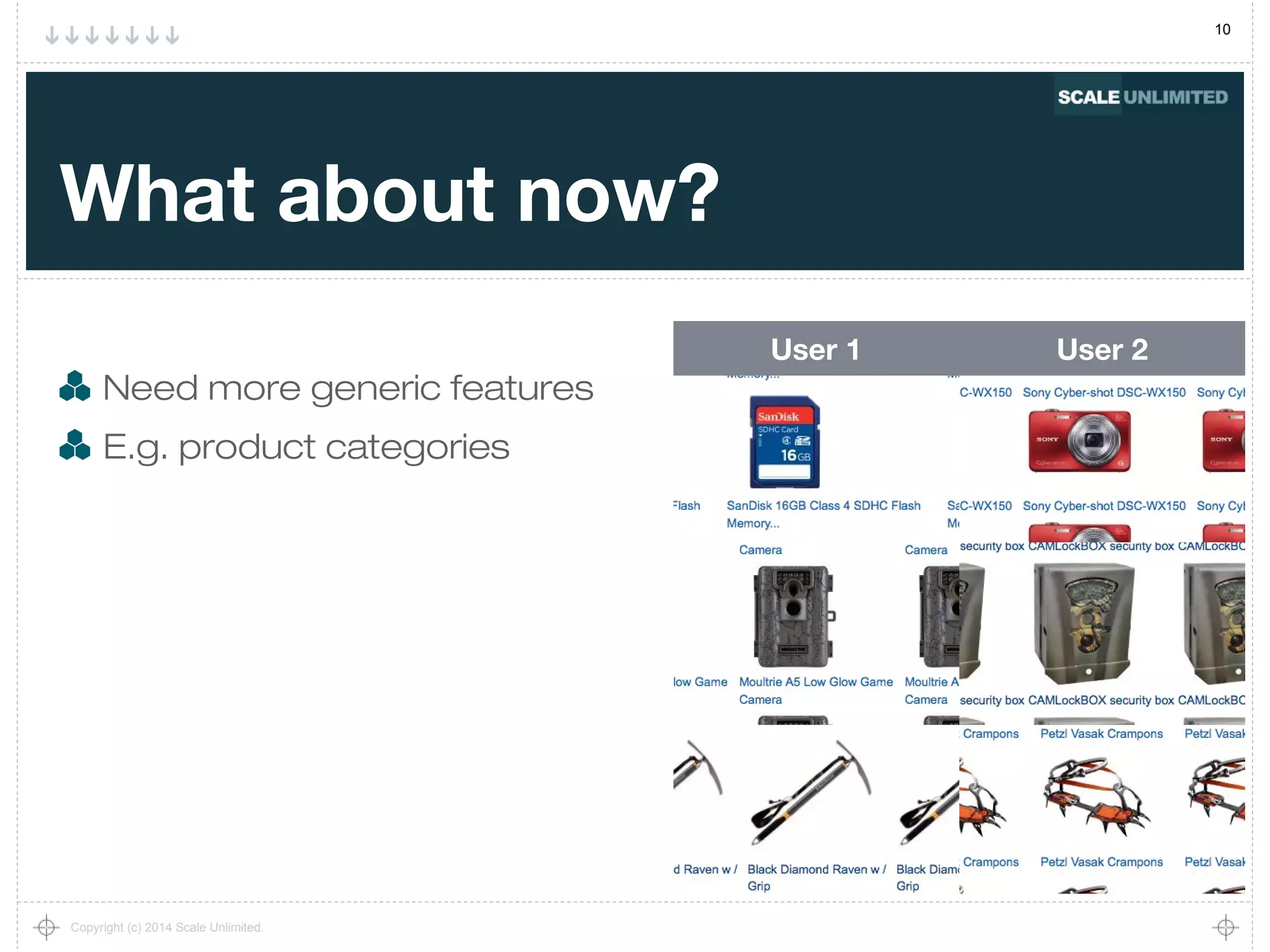

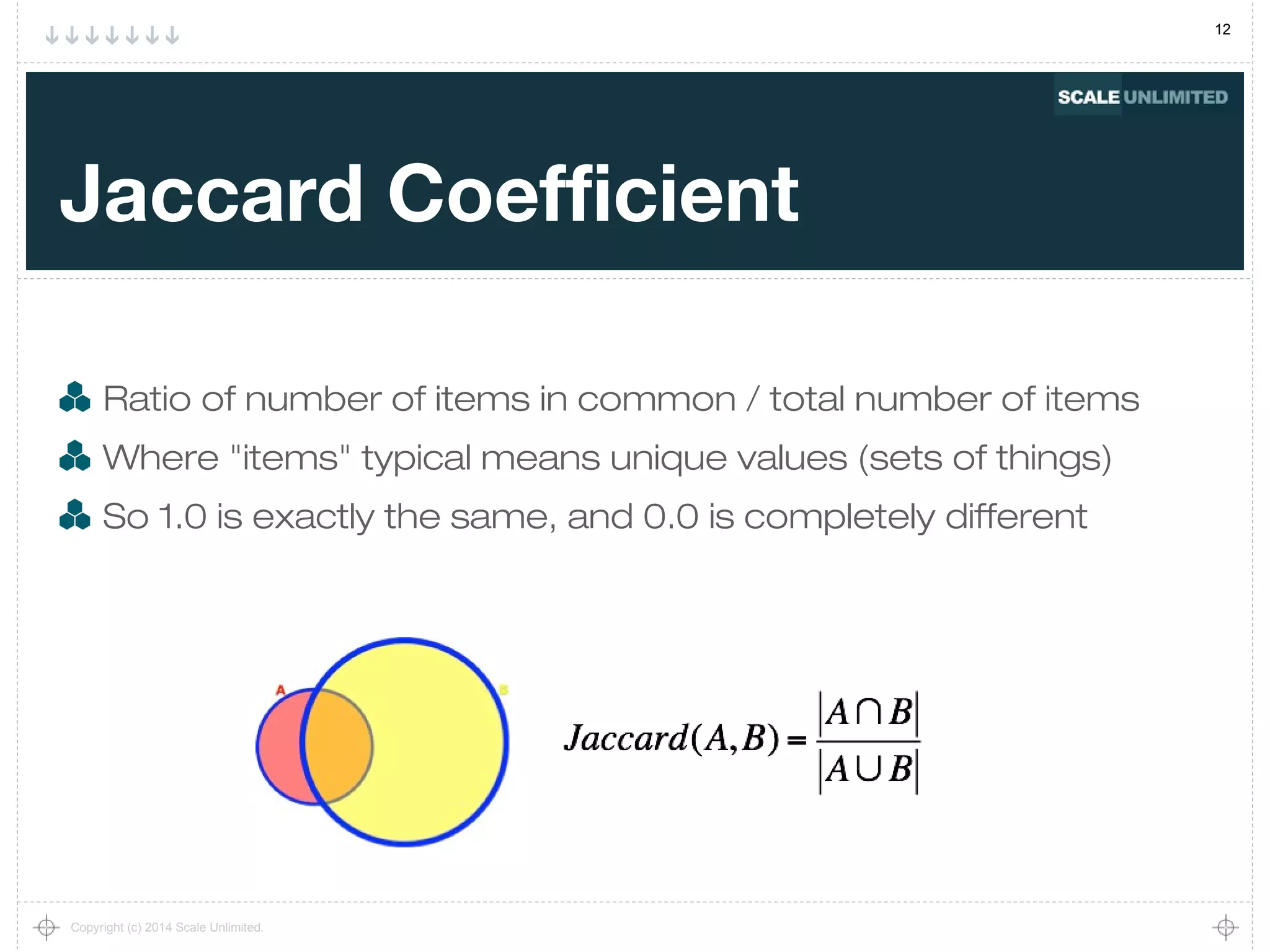

The document discusses the concepts of similarity and fuzzy matching in data processing, highlighting their applications in clustering, deduplication, and recommendations using tools like Hadoop and Solr. It outlines methods for measuring similarity, such as the Jaccard coefficient and cosine similarity, and explores challenges related to scalability when handling large datasets. Additionally, the document covers practical approaches for page recommendations and entity resolution within financial systems to combat fraud.
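For reference, the two measures named above have standard definitions. The notation is introduced here and is not taken from the slides: $A$ and $B$ are sets of features (for example, the pages viewed in two sessions), and $\mathbf{x}$ and $\mathbf{y}$ are feature vectors.

$$
J(A, B) = \frac{|A \cap B|}{|A \cup B|}, \qquad
\cos(\mathbf{x}, \mathbf{y}) = \frac{\mathbf{x} \cdot \mathbf{y}}{\|\mathbf{x}\| \, \|\mathbf{y}\|}
$$

The Jaccard coefficient ranges from 0 (no shared elements) to 1 (identical sets). Cosine similarity ranges from -1 to 1 in general, and from 0 to 1 when all vector components are non-negative, as with counts.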

![18

Copyright (c) 2014 Scale Unlimited.

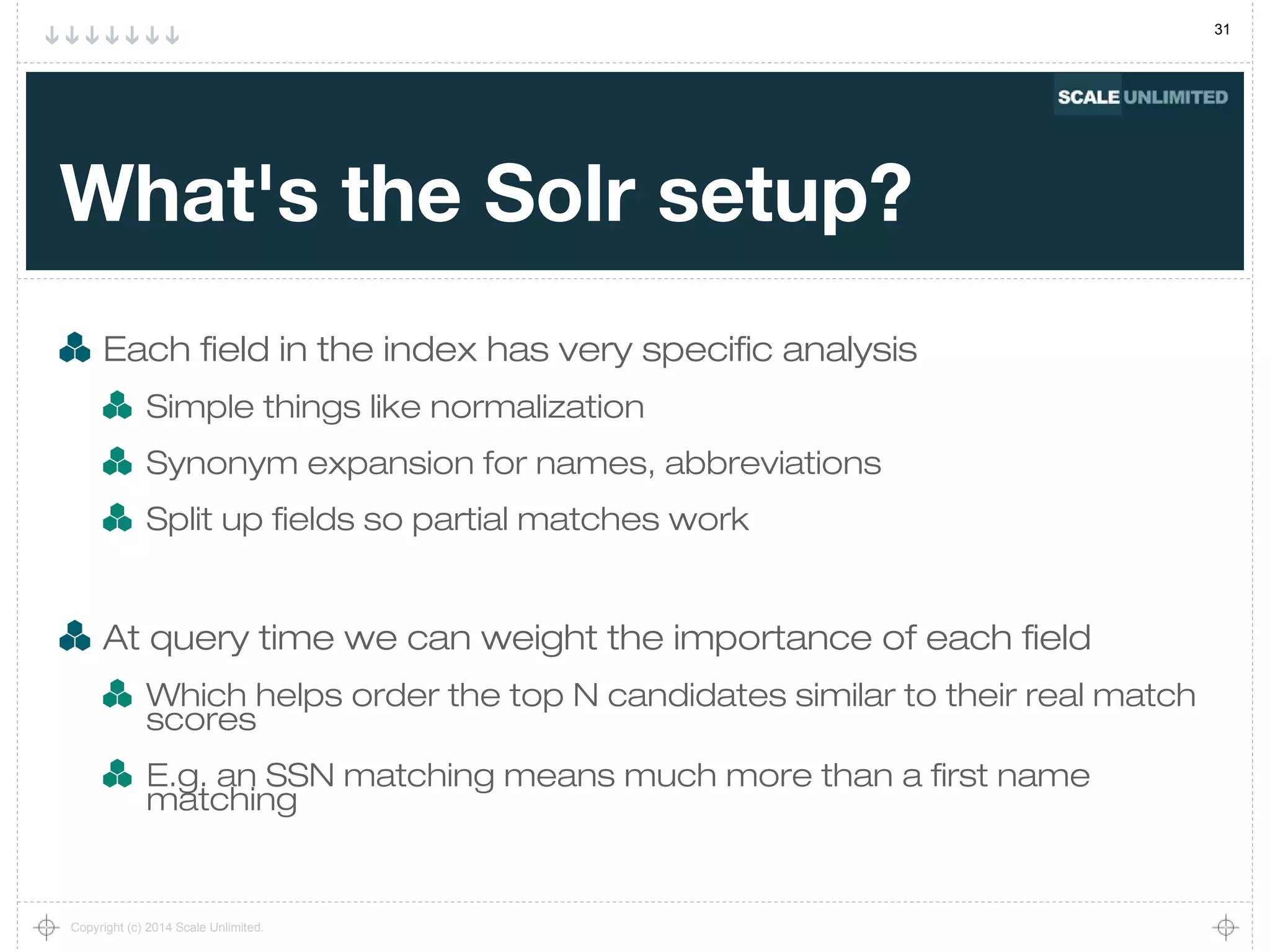

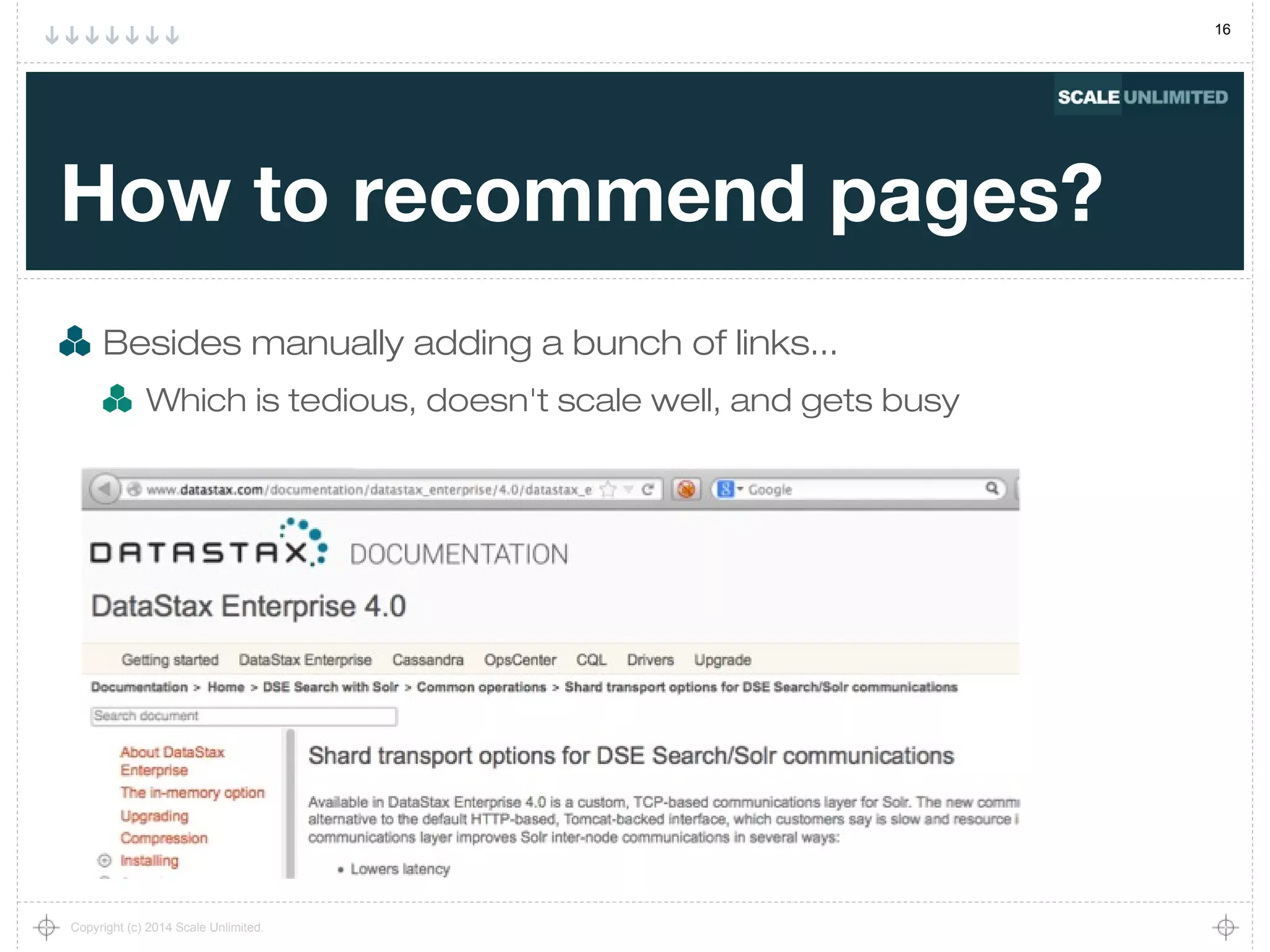

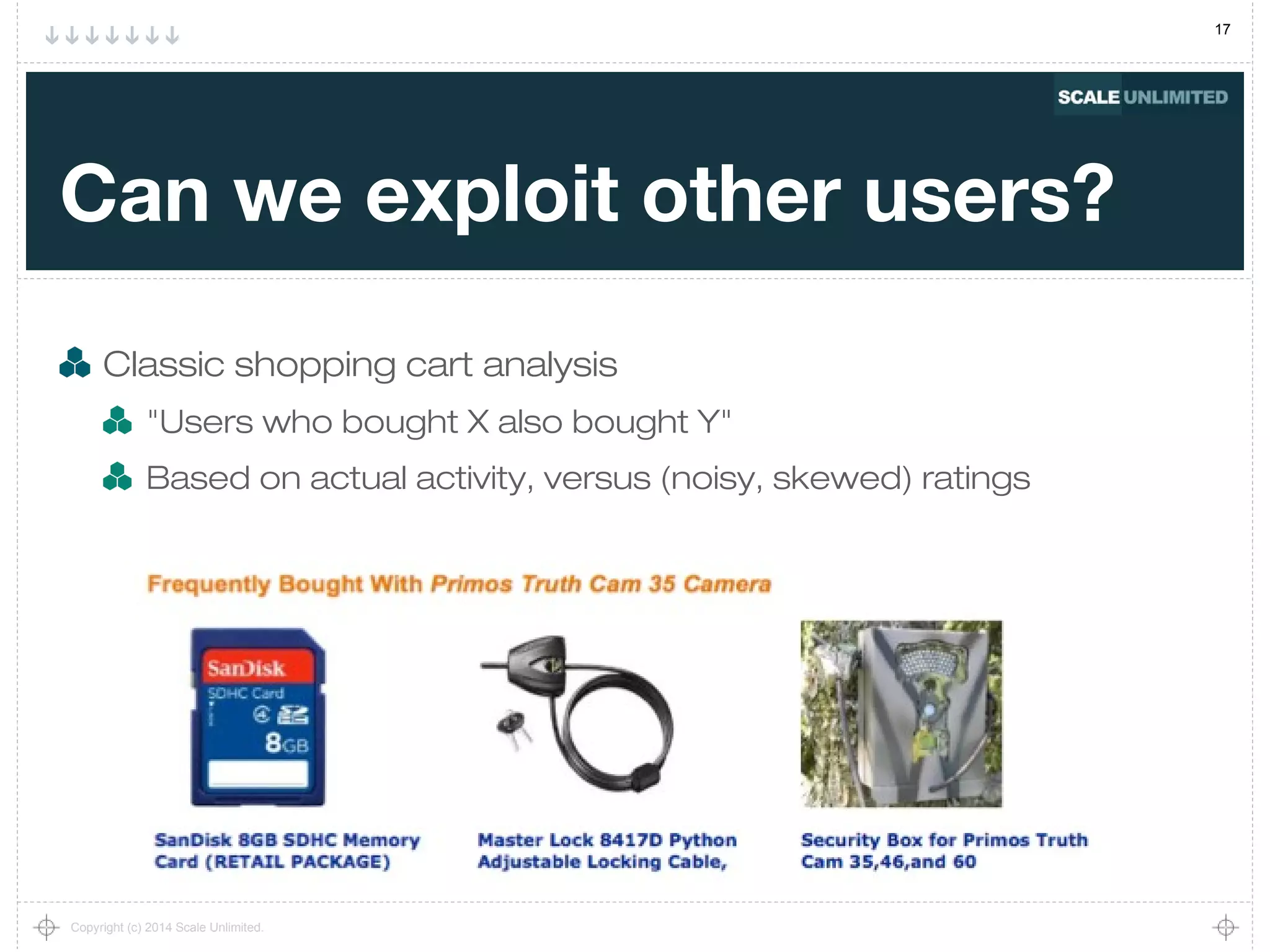

What's the general approach?

We have web logs with IP addresses, time, path to page

157.55.33.39 - - [18/Mar/2014:00:01:00 -0500]

"GET /solutions/nosql HTTP/1.1"

A browsing session is a series of requests from one IP address

With some maximum time gap between requests

Find sessions "similar to" the current user's session

Recommend pages from these similar sessions](https://image.slidesharecdn.com/similarityatscale-140616090303-phpapp02/75/Similarity-at-scale-18-2048.jpg)
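The approach on this slide can be sketched in a few lines of single-machine Python. This is only an illustration under stated assumptions, not the deck's Hadoop or Solr implementation: the 30-minute gap, the use of Jaccard similarity over sets of page paths, the similarity-weighted scoring, and the names `sessionize` and `recommend` are all choices made here for the example.

```python
import re
from collections import defaultdict
from datetime import datetime, timedelta

# Pulls the IP, timestamp, and request path out of a common-log-format line.
LOG_RE = re.compile(r'^(\S+) \S+ \S+ \[([^\]]+)\] "(?:GET|POST|HEAD) (\S+) [^"]*"')

# Assumed value: the slide only says "some maximum time gap".
MAX_GAP = timedelta(minutes=30)


def parse_line(line):
    """Return (ip, timestamp, path), or None if the line does not match."""
    m = LOG_RE.match(line)
    if m is None:
        return None
    ip, ts, path = m.groups()
    return ip, datetime.strptime(ts, "%d/%b/%Y:%H:%M:%S %z"), path


def sessionize(lines, max_gap=MAX_GAP):
    """Group requests by IP, then split each IP's requests at large time gaps.

    Each session is returned as a set of page paths.
    """
    by_ip = defaultdict(list)
    for line in lines:
        parsed = parse_line(line)
        if parsed:
            ip, when, path = parsed
            by_ip[ip].append((when, path))

    sessions = []
    for requests in by_ip.values():
        requests.sort()
        current, last = set(), None
        for when, path in requests:
            if last is not None and when - last > max_gap:
                sessions.append(current)
                current = set()
            current.add(path)
            last = when
        if current:
            sessions.append(current)
    return sessions


def jaccard(a, b):
    """Jaccard coefficient of two sets: |a & b| / |a | b|."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(current, sessions, top_n=3):
    """Score unseen pages by the summed similarity of the sessions containing them."""
    scores = defaultdict(float)
    for other in sessions:
        sim = jaccard(current, other)
        if sim > 0:
            for page in other - current:
                scores[page] += sim
    # Highest score first; ties broken alphabetically for a stable order.
    return sorted(scores, key=lambda p: (-scores[p], p))[:top_n]
```

Calling `recommend(current_pages, sessionize(log_lines))` with the current user's set of visited paths returns up to `top_n` paths the user has not seen yet. Comparing the current session against every stored session is quadratic in the number of sessions, which is the scalability problem the deck raises for large datasets.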