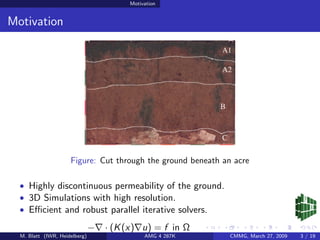

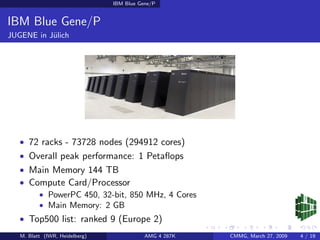

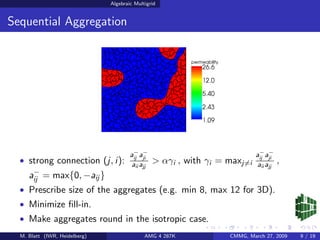

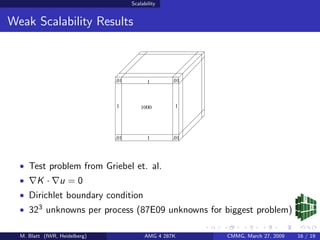

The document discusses scaling algebraic multigrid methods on the IBM Blue Gene/P supercomputer with over 287,000 processors, highlighting the challenges and parallelization strategies involved. It details the architecture's memory constraints and emphasizes the importance of efficient data handling and scalability for large computational problems. The implementation achieves reasonable scalability despite the complexities of the problems addressed, such as those involving high-dimensional spaces or discontinuous coefficients.

![Scalability

Weak Scalability Results

140

120

Computation Time [s]

100

80

60

40

20

0

1e+00 1e+01 1e+02 1e+03 1e+04 1e+05 1e+06

# Processors [-]

build time solve time total time

M. Blatt (IWR, Heidelberg) AMG 4 287K CMMG, March 27, 2009 17 / 19](https://image.slidesharecdn.com/blattamg-110406090622-phpapp02/85/Scaling-algebraic-multigrid-to-over-287K-processors-21-320.jpg)