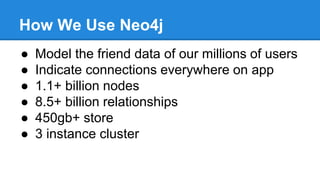

This document summarizes tips for running Neo4j in production, drawn from a talk by David Fox of SNAP Interactive, a social dating app company. Key points include: using the Java batch inserters and pre-sorting the input to import billions of nodes and relationships efficiently; testing Cypher CSV import; benchmarking and caching queries; distributing writes with Baserunner; tuning the garbage collector; using SSDs for large working sets; and looking ahead to new features in upcoming Neo4j versions. The talk shares lessons learned from scaling SNAP's graph to support millions of users of its dating application.
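The summary mentions sorting as part of the bulk import but does not say which key was used. As a minimal sketch, assuming the relationship records are ordered by start node ID and then end node ID before being handed to the importer, the preprocessing step might look like the following. The column names (`start_id`, `end_id`, `type`) and the sample rows are hypothetical, and the in-memory sort is for illustration only; an input with billions of rows would need an external, disk-based sort.

```python
import csv
import io


def read_relationships(csv_text):
    """Parse relationship rows from CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def sort_relationships(rows):
    """Return rows ordered by (start_id, end_id), compared as integers.

    Assumption: ordering by start node keeps all relationships of one
    node adjacent in the input. The talk summary does not confirm the
    actual sort key.
    """
    return sorted(rows, key=lambda r: (int(r["start_id"]), int(r["end_id"])))


# Hypothetical sample input; the column names are illustrative only.
SAMPLE = """start_id,end_id,type
42,7,LIKES
3,42,VIEWED
42,3,LIKES
3,7,LIKES
"""

ordered = sort_relationships(read_relationships(SAMPLE))
ordered_pairs = [(int(r["start_id"]), int(r["end_id"])) for r in ordered]
```

The sorted rows could then be streamed to whichever import tool is in use. Comparing IDs as integers rather than strings matters here, since a string sort would place `42` before `7`.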