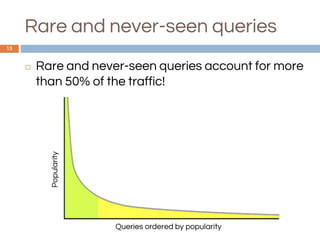

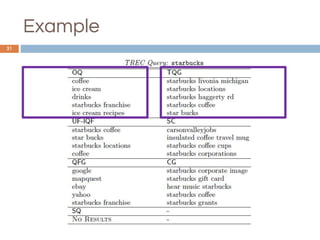

The document discusses query recommendation methods used in search engines, focusing on the challenges of addressing long-tail and unseen queries. It highlights various models such as term-query graphs and hierarchical recurrent encoder-decoder approaches for generating context-aware suggestions. Key findings indicate that over 50% of queries may be long-tail, necessitating robust frameworks to enhance query recommendation effectiveness.

![Query Suggestion SOTA (slide 10)](https://image.slidesharecdn.com/presentation-schibsted-12september2-170928062441/85/Query-Recommendation-Barcelona-2017-10-320.jpg)

◻ Query-Flow Graph and Term-Query Graph [Bonci et al. 2008, Vahabi et al. 2012]: robust to long-tail queries but computationally complex

◻ Context-awareness by VMM models [He et al. 2009, Cao et al. 2008]: sparsity issues and not robust to long-tail queries
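To make the graph-based idea on this slide concrete, here is a minimal sketch of a query-flow-style graph in Python. It is a simplification and not the construction from the cited papers: edges are weighted only by how often one query directly follows another in a session. The session data and the names `build_query_flow_graph`, `suggest`, `SESSIONS`, and `GRAPH` are made up for illustration.

```python
from collections import Counter, defaultdict


def build_query_flow_graph(sessions):
    """Count how often query b directly follows query a within a session."""
    counts = defaultdict(Counter)
    for session in sessions:
        for a, b in zip(session, session[1:]):
            if a != b:  # ignore immediate repeats of the same query
                counts[a][b] += 1
    # Normalise outgoing counts into transition probabilities.
    graph = {}
    for a, followers in counts.items():
        total = sum(followers.values())
        graph[a] = {b: n / total for b, n in followers.items()}
    return graph


def suggest(graph, query, k=3):
    """Return up to k most likely follow-up queries ([] if the query is unseen)."""
    followers = graph.get(query, {})
    # Sort by descending probability, then alphabetically for a stable order.
    return sorted(followers, key=lambda b: (-followers[b], b))[:k]


# Toy, made-up search sessions (each list is one user's query sequence).
SESSIONS = [
    ["game", "cartoon network game"],
    ["game", "cartoon network game", "cartoon network"],
    ["game", "online game"],
]
GRAPH = build_query_flow_graph(SESSIONS)
```

In this toy log, `suggest(GRAPH, "game")` ranks "cartoon network game" ahead of "online game", while a query that never appears in the log gets an empty list from this simplified version.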

![Query Suggestion SOTA (slide 11)](https://image.slidesharecdn.com/presentation-schibsted-12september2-170928062441/85/Query-Recommendation-Barcelona-2017-11-320.jpg)

◻ Learning to rank by featurizing query context [Shokouhi et al. 2013, Ozertem et al. 2012]: order of queries / words in the queries is often lost

◻ Synthetic queries by template-based approaches [Szpektor et al. 2011, Jain et al. 2012]
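The remark that the order of queries and words is often lost can be illustrated with a small sketch. It assumes a plain bag-of-words featurization of the query context, which is only one possible feature design and is not taken from the cited papers. The helper `bag_of_words` and the example contexts are hypothetical.

```python
from collections import Counter


def bag_of_words(context):
    """Featurize a list of queries as unordered word counts."""
    return Counter(word for query in context for word in query.lower().split())


# Two made-up contexts with the same queries issued in opposite order.
CONTEXT_A = ["cheap flights", "flights to barcelona"]
CONTEXT_B = ["flights to barcelona", "cheap flights"]

# True: the two sessions become indistinguishable once featurized this way.
SAME_FEATURES = bag_of_words(CONTEXT_A) == bag_of_words(CONTEXT_B)
```

Under this assumption, a ranker sees identical features for both sessions even though the user's reformulation went in opposite directions.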

![Word and query embedding (slide 36)](https://image.slidesharecdn.com/presentation-schibsted-12september2-170928062441/85/Query-Recommendation-Barcelona-2017-36-320.jpg)

◻ Learn vector representations for words and queries that encode their syntactic and semantic characteristics.

◻ “Similar” queries are associated with “near” vectors.

“game”: [ 0.1, 0.05, -0.3, … , 1.1 ]

“cartoon network game”: [ 0.35, 0.15, -0.12, … , 1.3 ]
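One common way to make "near" precise is cosine similarity. The sketch below assumes that the first vector on the slide belongs to “game” and the second to “cartoon network game”, and it uses only the four components that are visible there; the elided dimensions are dropped, so the resulting score is illustrative only. The "weather forecast" vector and the helpers `cosine` and `nearest` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v)


EMBEDDINGS = {
    # Visible components from the slide; elided dimensions are omitted.
    "game": [0.1, 0.05, -0.3, 1.1],
    "cartoon network game": [0.35, 0.15, -0.12, 1.3],
    # Hypothetical unrelated query, added only for contrast.
    "weather forecast": [-0.9, 0.4, 0.8, -0.2],
}


def nearest(query, embeddings, k=1):
    """Return the k queries whose vectors are closest to `query` by cosine."""
    target = embeddings[query]
    scored = [(cosine(target, vec), q) for q, vec in embeddings.items() if q != query]
    scored.sort(reverse=True)
    return [q for _, q in scored[:k]]
```

With these toy vectors, `nearest("game", EMBEDDINGS)` picks "cartoon network game", matching the slide's point that similar queries sit close together in the vector space.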