1) The document discusses test automation challenges at WorkFusion, including long test run times, low coverage, and technical debt.

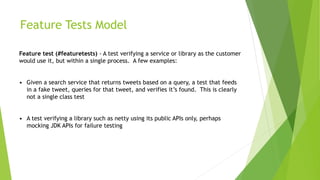

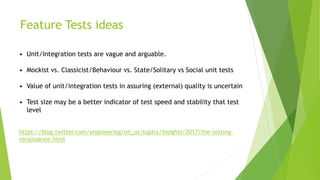

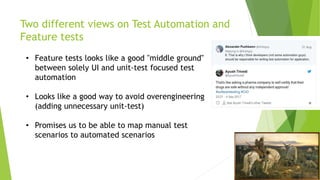

2) It proposes adopting a "feature test" layer between unit and UI tests, in which manual test cases are mapped to automated scenarios, to help address these issues.

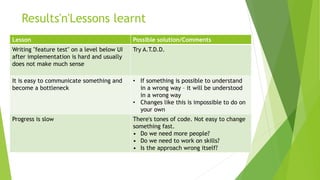

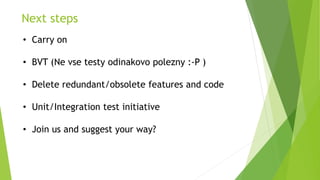

3) The key steps are to identify redundant tests, delete obsolete code, start a unit/integration test initiative, and bring in additional resources to scale the effort. Outcomes and lessons learned are then reviewed.
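To make the "feature test" idea from point 2 more concrete, here is a minimal sketch of how a manual test case could be linked to an automated scenario that exercises application logic directly instead of going through the UI. Everything in it is an assumption for illustration and does not come from the presentation: `ManualTestCase`, `TaskService`, the `automates` decorator, and the case IDs `TC-101` and `TC-102` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class ManualTestCase:
    """A manual test case as it might be exported from a test tracker (hypothetical shape)."""
    case_id: str
    title: str
    steps: List[str]


class TaskService:
    """Hypothetical stand-in for application logic that a UI test would reach via the browser."""

    def __init__(self) -> None:
        self._tasks: Dict[str, str] = {}

    def create_task(self, name: str) -> None:
        self._tasks[name] = "open"

    def complete_task(self, name: str) -> None:
        if name not in self._tasks:
            raise KeyError(name)
        self._tasks[name] = "done"

    def status(self, name: str) -> str:
        return self._tasks[name]


# Registry that maps a manual case ID to the scenario automating it.
SCENARIOS: Dict[str, Callable[[], None]] = {}


def automates(case_id: str) -> Callable[[Callable[[], None]], Callable[[], None]]:
    """Decorator (hypothetical) that records which manual case a scenario covers."""
    def decorator(func: Callable[[], None]) -> Callable[[], None]:
        SCENARIOS[case_id] = func
        return func
    return decorator


@automates("TC-101")
def scenario_complete_task() -> None:
    # Same steps as the manual case, but driven below the UI layer.
    service = TaskService()
    service.create_task("review invoice")
    service.complete_task("review invoice")
    assert service.status("review invoice") == "done"


def coverage_report(manual_cases: List[ManualTestCase]) -> Tuple[List[str], List[str]]:
    """Split manual case IDs into those with and without an automated scenario."""
    covered = [c.case_id for c in manual_cases if c.case_id in SCENARIOS]
    missing = [c.case_id for c in manual_cases if c.case_id not in SCENARIOS]
    return covered, missing
```

Keeping the case ID on the scenario is one possible way to see which manual cases are still unautomated, which could in turn feed the search for redundant tests mentioned in point 3.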

![Who am I? Alex Pushkarev (Саша)

• ~ 11 years in IT

• Software Engineer [In test]

• Agile fan, XP practitioner

• Context-driven test school

• Test automation tech lead at WorkFusion](https://image.slidesharecdn.com/presentation-delex-180217141540/85/Presentation-delex-2-320.jpg)