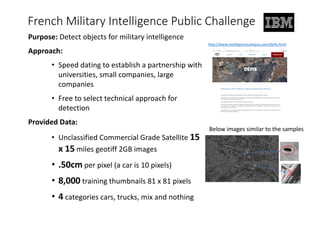

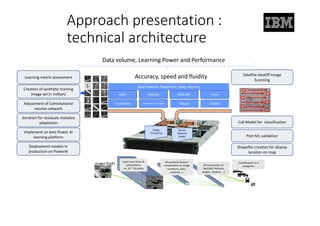

The document describes using IBM's PowerAI platform and deep learning techniques to solve an object detection challenge on satellite imagery. The initial approach is a convolutional neural network trained on both real and synthetic images. Adding layers, dropout, and synthetic images raises accuracy from 44% to 64%. Residual and inception networks yield gains, but the gains are unstable. A further innovation that incorporates non-differentiable features boosts accuracy above 90%. The document concludes that PowerAI provides strong performance for deep learning and computer vision tasks.
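Dropout is one of the changes credited with the gain from 44% to 64%. The summary does not say how it was configured, so the following is only a minimal sketch of the general technique (inverted dropout) in plain Python. The function name `dropout`, the `rate` parameter, and the example values are illustrative assumptions, not details taken from the document or from PowerAI.

```python
import random


def dropout(activations, rate, training=True, rng=None):
    """Inverted dropout (illustrative sketch, not the document's code).

    During training, each activation is zeroed with probability `rate`
    and the survivors are scaled by 1 / (1 - rate), so the expected
    value of each activation is unchanged. At inference time the input
    is returned as-is.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


# Hypothetical usage: drop roughly half of a layer's activations.
layer_output = [1.0] * 8
print(dropout(layer_output, rate=0.5, rng=random.Random(0)))
```

Scaling the surviving activations during training means no rescaling is needed at inference, which is why most deep learning frameworks implement dropout this way.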