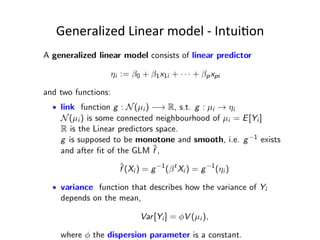

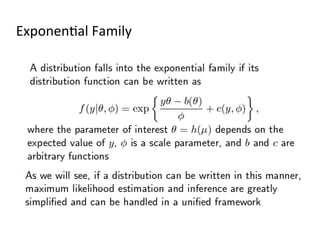

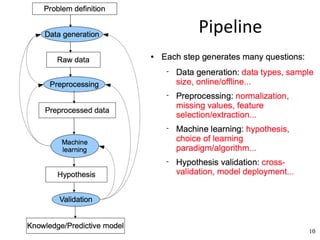

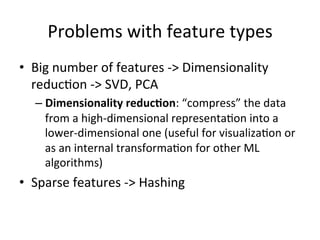

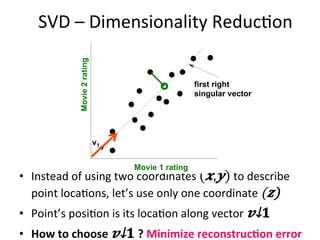

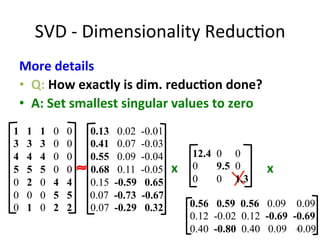

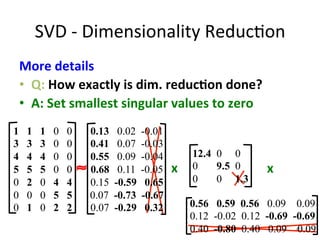

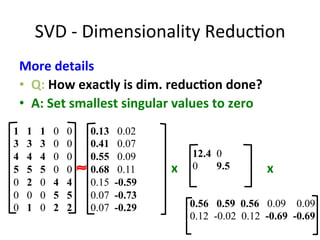

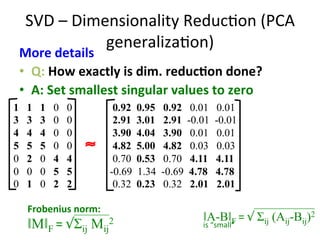

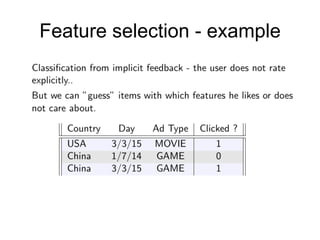

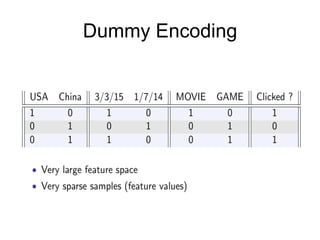

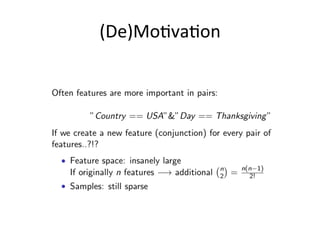

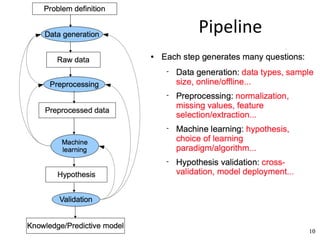

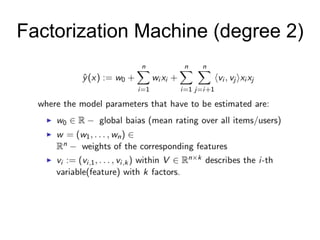

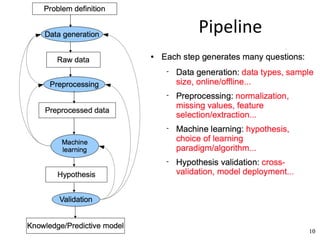

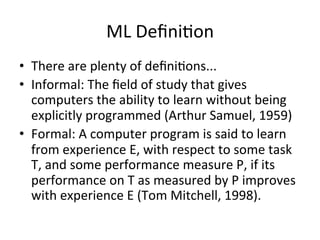

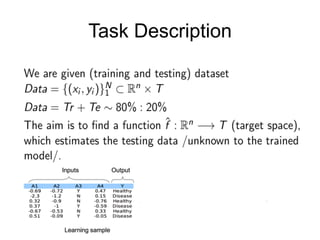

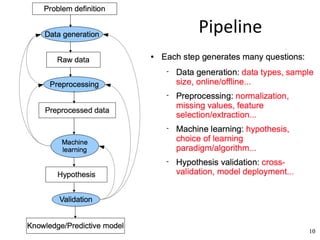

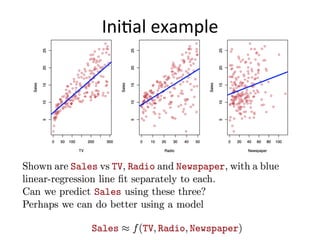

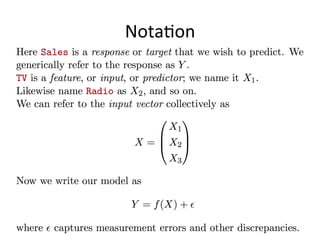

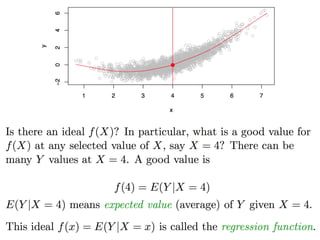

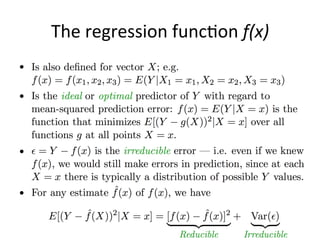

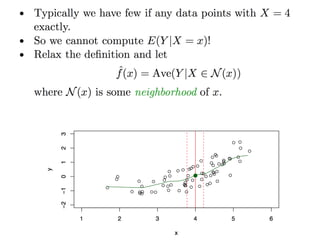

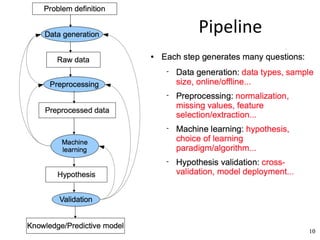

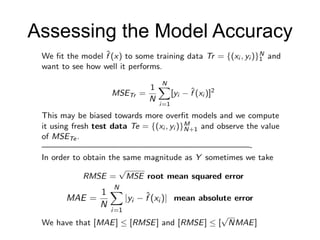

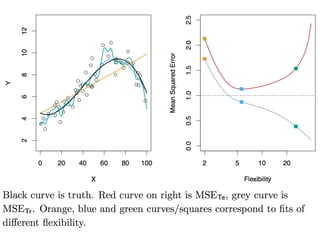

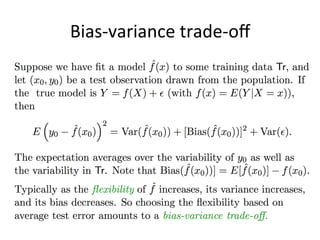

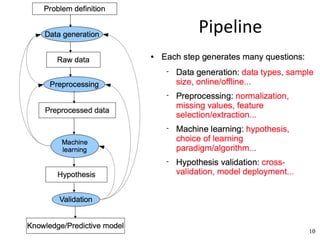

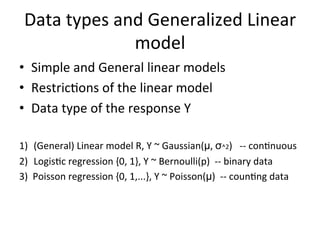

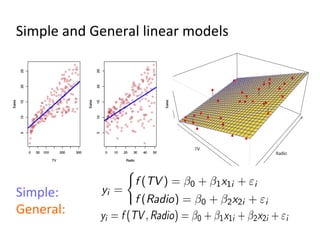

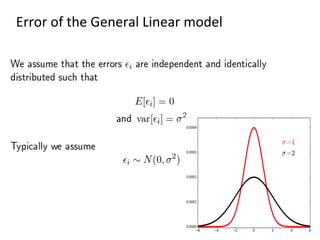

The document discusses supervised learning algorithms and their importance in the field of machine learning, highlighting key concepts like data collection methods, model evaluation, and dimensionality reduction. It covers generalized linear models for different types of data and addresses the challenges faced when selecting features due to high dimensionality. Additionally, it provides a summary of methodological insights and references for further reading.

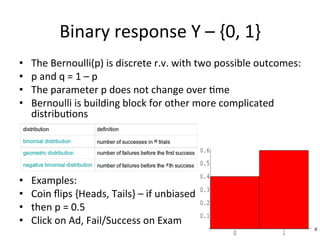

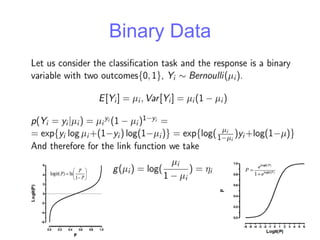

![RestricCons of Linear models

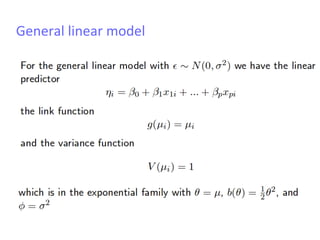

Although the General linear model is a useful

framework, it is not appropriate in the following cases:

• The range of Y is restricted (e.g. binary, count,

posiCve/negaCve)

• Var[Y] depends on the mean E[Y] (for the Gaussian

they are independent)

Name Mean Variance

Bernoulli(p) p p(1 - p)

Binomial(p, n) np np(1 - p)

Poisson(p) p p](https://image.slidesharecdn.com/supervised-learning-algorithms-analysis-of-different-approaches2-180731084937/85/Pipeline-of-Supervised-learning-algorithms-34-320.jpg)
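The mean-variance coupling in the table can be checked numerically. The following is a minimal sketch, not part of the original slides: the function names (`bernoulli_moments`, `binomial_moments`, `poisson_moments`, `moments_from_pmf`) are hypothetical helpers introduced for illustration, and the Poisson sum is truncated at a finite support, which is an approximation.

```python
import math


def bernoulli_moments(p):
    """Closed-form mean and variance of Bernoulli(p), as in the table."""
    return p, p * (1 - p)


def binomial_moments(p, n):
    """Closed-form mean and variance of Binomial(p, n), as in the table."""
    return n * p, n * p * (1 - p)


def poisson_moments(p):
    """Closed-form mean and variance of Poisson(p): both equal p."""
    return p, p


def moments_from_pmf(support, pmf):
    """Compute mean and variance directly from a probability mass function."""
    mean = sum(k * pmf(k) for k in support)
    var = sum((k - mean) ** 2 * pmf(k) for k in support)
    return mean, var


def binomial_pmf(p, n):
    """Return the pmf of Binomial(p, n) as a function of k."""
    return lambda k: math.comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(p):
    """Return the pmf of Poisson(p) as a function of k."""
    return lambda k: math.exp(-p) * p**k / math.factorial(k)


# The variance changes whenever the mean changes, unlike the Gaussian case,
# where the variance is a separate parameter.
for p in (0.1, 0.5, 0.9):
    mean, var = bernoulli_moments(p)
    print(f"Bernoulli({p}): mean={mean:.2f}, variance={var:.2f}")

# Cross-check the closed forms against sums over the pmf.
print(moments_from_pmf(range(11), binomial_pmf(0.3, 10)), binomial_moments(0.3, 10))
# Assumption: truncating the Poisson support at 60 is accurate enough for p = 4.
print(moments_from_pmf(range(60), poisson_pmf(4.0)), poisson_moments(4.0))
```

Because the variance of each of these distributions is a function of its mean, a model that assumes constant variance around the fitted mean does not match such responses, which is the second restriction listed above.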