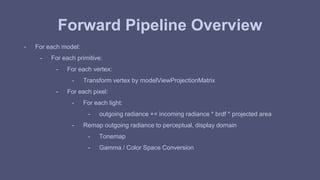

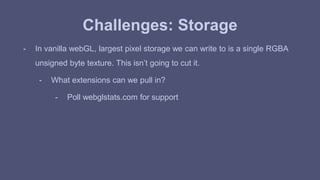

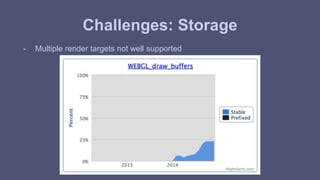

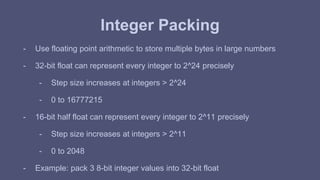

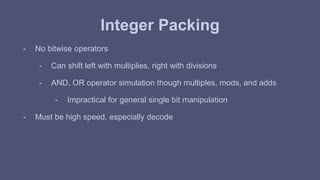

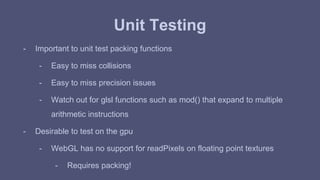

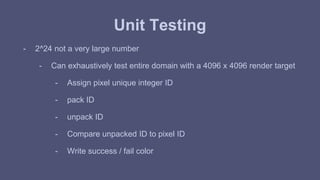

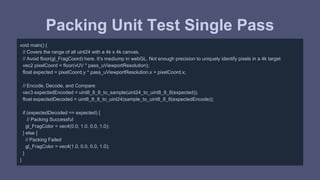

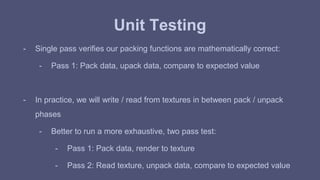

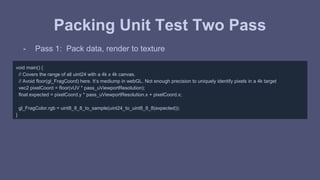

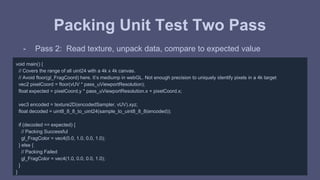

The document discusses challenges and approaches for real-time 3D architectural visualization and virtual reality using WebGL. Key challenges include the clean aesthetic the genre requires, complex lighting, and accurate material representation. The approaches covered are physically based shading, deferred rendering with a g-buffer that stores per-pixel scene information, and integer packing to fit the g-buffer data into a single texture within WebGL's limitations. Unit testing of the packing functions is also emphasized.

![Approach

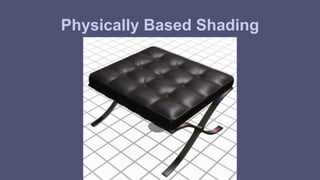

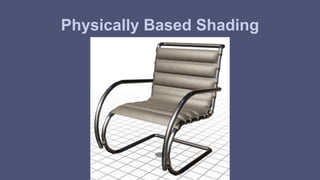

- Physically Based Shading

- Deferred Rendering

- Temporal Amortization [Yang 09][Herzog 10][Wronski 14][Karis 14]](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-11-320.jpg)
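
Temporal amortization spreads expensive per-pixel work, such as supersampling, across frames by blending each new frame into a history buffer [Yang 09][Karis 14]. The sketch below is written in C rather than GLSL so it can run on the CPU. It assumes a static camera, so no reprojection or history clamping is shown. With a blend weight of 1/(n+1) on frame n, the history becomes the running mean of all jittered samples. Real temporal AA typically uses a fixed weight together with reprojection. The function names are hypothetical.

```c
#include <stddef.h>

/* Blend one new sample into the history value (exponential moving average). */
float temporal_blend(float history, float current, float weight) {
    return history + weight * (current - history);
}

/* Progressive accumulation for a static view (assumption: no camera motion).
   Using weight = 1/(n+1) on frame n makes the history equal to the
   arithmetic mean of every sample accumulated so far. */
float accumulate_static(const float *samples, size_t count) {
    float history = 0.0f;
    for (size_t n = 0; n < count; ++n) {
        float weight = 1.0f / (float)(n + 1);
        history = temporal_blend(history, samples[n], weight);
    }
    return history;
}
```

A fixed weight such as 0.1 instead yields an exponential moving average. That form keeps responding to change when the view moves, at the cost of never fully converging.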

![Microfacet BRDF](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-23-320.jpg)

- Microfacet Specular:
  - D: Normal Distribution Function: GGX [Walter 07]
  - G: Geometric Shadowing-Masking Function: Height-Correlated Smith [Heitz 14]
  - F: Fresnel: Schlick's Approximation [Schlick 94], evaluated with a Spherical Gaussian approximation [Lagarde 12]
- Microfacet Diffuse:
  - Qualitative Oren-Nayar [Oren 94]

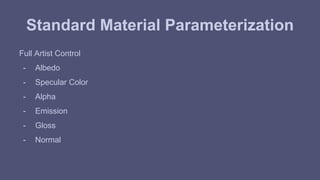

![Standard Material Parameterization](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-24-320.jpg)

Time to shamelessly steal from Real-Time Rendering [Möller 08]...

![Standard Material Parameterization](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-25-320.jpg)

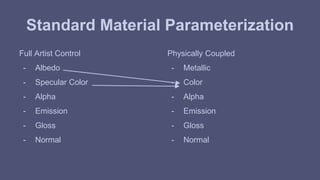

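![Standard Material Parameterization](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-26-320.jpg)

Give the color parameter a conditional meaning [Burley 12], [Karis 13]:

    if (!metallic) {
        // Dielectric: color is the diffuse albedo, and the specular
        // reflectance at normal incidence is fixed at 0.04.
        albedo = color;
        specularColor = vec3(0.04);
    } else {
        // Metal: no diffuse response; color tints the specular reflection.
        albedo = vec3(0.0);
        specularColor = color;
    }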

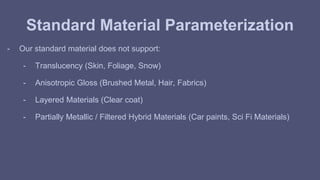

![Standard Material Parameterization](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-27-320.jpg)

- Can throw out a whole vec3 parameter
  - Fewer knobs help enforce physically plausible materials
  - Significantly lighter g-buffer storage
  - Fewer textures, better download times
- What control did we lose?
  - Video of non-metallic materials sweeping through the physically plausible range of specular colors: 0.02 to 0.05 [Hoffman 10][Lagarde 11]

![Normal Compression](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-70-320.jpg)

- Normal data encoded in octahedral space [Cigolle 14]
  - Transform normal to 2D basis
  - Reasonably uniform discretization across the sphere
  - Uses full 0 to 1 domain
  - Cheap encode / decode

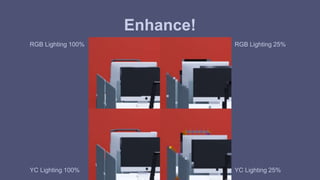

![Color Compression](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-72-320.jpg)

- Transform to a perceptual basis: YUV, YCrCb, YCoCg
  - The human visual system is sensitive to luminance shifts
  - The human visual system is fairly insensitive to chroma shifts
- Color swatches / textures can be pre-transformed
  - Already a practice for higher quality DXT compression [Waveren 07]
- Store chroma components at a lower frequency
  - Write 2 components of the signal, alternating between chroma bases
  - Color data encoded in checkerboarded YCoCg space [Mavridis 12]
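
The checkerboarded YCoCg scheme can be sketched in C. The RGB/YCoCg transform pair is exact. Each pixel then keeps luma plus a single chroma component chosen by pixel parity. Some assumptions are made here and labeled in the comments:

- Chroma is stored with a +0.5 offset so it fits an unsigned normalized channel.
- The missing chroma is simply copied from one neighbor. Mavridis and Papaioannou reconstruct it with a filter guided by neighboring luminance instead.
- The names are hypothetical.

```c
typedef struct { float r, g, b; } rgb_t;
typedef struct { float y, co, cg; } ycocg_t;

/* RGB -> YCoCg. For RGB in [0, 1], Co and Cg lie in [-0.5, 0.5]. */
ycocg_t rgb_to_ycocg(rgb_t c) {
    ycocg_t o = {  0.25f * c.r + 0.5f * c.g + 0.25f * c.b,
                   0.5f  * c.r              - 0.5f  * c.b,
                  -0.25f * c.r + 0.5f * c.g - 0.25f * c.b };
    return o;
}

/* YCoCg -> RGB, the exact inverse of the transform above. */
rgb_t ycocg_to_rgb(ycocg_t c) {
    rgb_t o = { c.y + c.co - c.cg, c.y + c.cg, c.y - c.co - c.cg };
    return o;
}

/* Each pixel writes luma plus ONE chroma component: Co on even (x + y),
   Cg on odd. The +0.5 offset (assumption) keeps chroma in [0, 1]. */
void encode_checkerboard(rgb_t c, int x, int y, float out[2]) {
    ycocg_t v = rgb_to_ycocg(c);
    out[0] = v.y;
    out[1] = (((x + y) & 1) == 0 ? v.co : v.cg) + 0.5f;
}

/* Rebuild RGB from this pixel's two channels plus the other chroma
   component, copied from a neighbor (a simplification of the paper's filter). */
rgb_t decode_checkerboard(const float self[2], const float neighbor[2], int x, int y) {
    float own = self[1] - 0.5f;
    float other = neighbor[1] - 0.5f;
    ycocg_t v = { self[0], 0.0f, 0.0f };
    if (((x + y) & 1) == 0) { v.co = own;   v.cg = other; }
    else                    { v.co = other; v.cg = own;   }
    return ycocg_to_rgb(v);
}
```

White maps to (Y, Co, Cg) = (1, 0, 0), so neutral colors carry no chroma at all.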

![Light Pre-pass](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-98-320.jpg)

- Many resources:
  - [Geldreich 04][Shishkovtsov 05][Lobanchikov 09][Mittring 09][Hoffman 09][Sousa 13][Pranckevičius 13]
- Accumulate lighting, unmodulated by albedo or specular color
- Modulate by albedo and specular color in the resolve pass
- Pulls Fresnel out of the lighting integral with an nDotV approximation
  - Bad for a microfacet model: we want Fresnel evaluated per light with vDotH
- Could light pre-pass all non-metallic pixels, since their specular color is the constant 0.04
  - Keeps Fresnel inside the integral for vDotH evaluation
  - Requires running through all lights twice

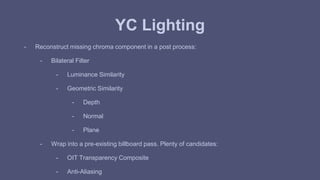

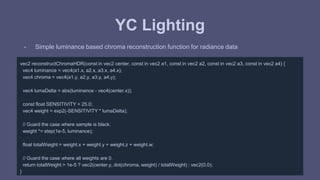

![YC Lighting](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-115-320.jpg)

RGB Schlick's approximation of Fresnel [Schlick 94]:

    // F = F0 + (1 - F0) * (1 - vDotH)^5, evaluated per RGB channel.
    vec3 fresnelSchlick(const in float vDotH, const in vec3 reflectionCoefficient) {
        // Clamp: pow() is undefined for a negative base, and vDotH can
        // exceed 1.0 slightly due to precision.
        float power = pow(max(1.0 - vDotH, 0.0), 5.0);
        return (1.0 - reflectionCoefficient) * power + reflectionCoefficient;
    }
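
A short derivation shows why the RGB formula carries over to luma/chroma. Schlick's approximation is affine in the reflection coefficient F0. The RGB-to-YCoCg transform M is linear, and it maps white, **1** = (1, 1, 1), to pure luma. Below, p = (1 - v·h)^5, and C0 stands for whichever chroma component (Co or Cg) a pixel stores:

$$
\begin{aligned}
\mathbf{F} &= (\mathbf{1} - \mathbf{F}_0)\,p + \mathbf{F}_0 = p\,\mathbf{1} + (1 - p)\,\mathbf{F}_0 \\
M\mathbf{F} &= p\,M\mathbf{1} + (1 - p)\,M\mathbf{F}_0, \qquad M\mathbf{1} = (1, 0, 0)^\top \\
F_Y &= p + (1 - p)\,Y_0 = (1 - Y_0)\,p + Y_0 \\
F_C &= (1 - p)\,C_0 = C_0\,(-p) + C_0
\end{aligned}
$$

The last two lines match the two components returned by `fresnelSchlickSphericalGaussianYC` on the following slide, with p replaced by its spherical Gaussian fit.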

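![YC Lighting](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-117-320.jpg)

Works fine with the spherical Gaussian approximation [Lagarde 12] too:

    // reflectionCoefficientYC.x: luma (Y) of F0.
    // reflectionCoefficientYC.y: the pixel's chroma component (Co or Cg).
    vec2 fresnelSchlickSphericalGaussianYC(const in float vDotH, const in vec2 reflectionCoefficientYC) {
        // Spherical Gaussian fit to pow(1.0 - vDotH, 5.0).
        float power = exp2((-5.55473 * vDotH - 6.98316) * vDotH);
        return vec2(
            // Luma: same form as RGB Schlick.
            (1.0 - reflectionCoefficientYC.x) * power + reflectionCoefficientYC.x,
            // Chroma: white carries no chroma, so the "+ power" term drops out.
            reflectionCoefficientYC.y * -power + reflectionCoefficientYC.y
        );
    }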

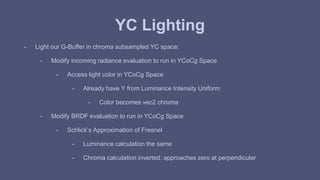

![YC Lighting](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-119-320.jpg)

- Write YC to the RG components of the render target
- Could write to an RGBA target and light 2 pixels at once: YCYC
  - Write bandwidth savings, which is where typical scenes are bottlenecked!
  - Only applicable for billboard rasterization
    - Can't conservatively depth / stencil test light proxies
  - Interesting for tiled deferred [Olsson 11] / clustered [Billeter 12] approaches
    - Future work
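
The YCYC layout amounts to a simple packing, sketched here in C. The slides do not say how pixels are paired, so this assumes the two pixels are horizontal neighbors: one RGBA texel holds (Y, C) for the left pixel in RG and for the right pixel in BA. The struct and function names are hypothetical.

```c
typedef struct { float r, g, b, a; } rgba_t;

/* Pack the lit YC results of two horizontally adjacent pixels into one
   RGBA texel: (Y_left, C_left, Y_right, C_right). */
rgba_t pack_ycyc(const float left[2], const float right[2]) {
    rgba_t t = { left[0], left[1], right[0], right[1] };
    return t;
}

/* Unpack one pixel: which = 0 selects the left pixel, 1 the right pixel. */
void unpack_ycyc(rgba_t t, int which, float out[2]) {
    out[0] = which == 0 ? t.r : t.b;
    out[1] = which == 0 ? t.g : t.a;
}
```

Because one fragment now covers two pixels with potentially different depths, a per-fragment depth / stencil test against a light proxy cannot be conservative. This is why the slide limits the trick to billboard rasterization.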

![Resources](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-126-320.jpg)

- [WebGLStats] WebGL Stats, 2014. http://webglstats.com
- [Möller 08] Real-Time Rendering. Tomas Akenine-Möller, Eric Haines, Naty Hoffman, 2008.
- [Hoffman 10] Physically-Based Shading Models in Film and Game Production. Naty Hoffman, SIGGRAPH, 2010. http://renderwonk.com/publications/s2010-shading-course/hoffman/s2010_physically_based_shading_hoffman_a_notes.pdf
- [Lagarde 11] Feeding a Physically-Based Shading Model. Sébastien Lagarde, 2011. http://seblagarde.wordpress.com/2011/08/17/feeding-a-physical-based-lighting-mode/
- [Burley 12] Physically-Based Shading at Disney. Brent Burley, 2012. http://disney-animation.s3.amazonaws.com/library/s2012_pbs_disney_brdf_notes_v2.pdf
- [Karis 13] Real Shading in Unreal Engine 4. Brian Karis, 2013. http://blog.selfshadow.com/publications/s2013-shading-course/karis/s2013_pbs_epic_notes_v2.pdf

![Resources](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-127-320.jpg)

- [Pranckevičius 09] Encoding Floats to RGBA - The final? Aras Pranckevičius, 2009. http://aras-p.info/blog/2009/07/30/encoding-floats-to-rgba-the-final
- [Cigolle 14] A Survey of Efficient Representations for Independent Unit Vectors. Cigolle, Donow, Evangelakos, Mara, McGuire, Meyer, 2014. http://jcgt.org/published/0003/02/01/
- [Mavridis 12] The Compact YCoCg Frame Buffer. Mavridis and Papaioannou, Journal of Computer Graphics Techniques, 2012. http://jcgt.org/published/0001/01/02/
- [Waveren 07] Real-Time YCoCg-DXT Compression. J.M.P. van Waveren, Ignacio Castaño, 2007. http://developer.download.nvidia.com/whitepapers/2007/Real-Time-YCoCg-DXT-Compression/Real-Time%20YCoCg-DXT%20Compression.pdf
- [Geldreich 04] Deferred Lighting and Shading. Rich Geldreich, Matt Pritchard, John Brooks, 2004. https://sites.google.com/site/richgel99/home
- [Hoffman 09] Deferred Lighting Approaches. Naty Hoffman, 2009. http://www.realtimerendering.com/blog/deferred-lighting-approaches

![Resources](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-128-320.jpg)

- [Shishkovtsov 05] Deferred Shading in S.T.A.L.K.E.R. Oles Shishkovtsov, GPU Gems 2, 2005. http://http.developer.nvidia.com/GPUGems2/gpugems2_chapter09.html
- [Lobanchikov 09] GSC Game World's S.T.A.L.K.E.R: Clear Sky - a Showcase for Direct3D 10.0/1. Igor A. Lobanchikov, Holger Gruen, Game Developers Conference, 2009. http://amd-dev.wpengine.netdna-cdn.com/wordpress/media/2012/10/01GDC09AD3DDStalkerClearSky210309.ppt
- [Mittring 09] A Bit More Deferred - CryEngine 3. Martin Mittring, 2009. http://www.crytek.com/cryengine/cryengine3/presentations/a-bit-more-deferred---cryengine3
- [Sousa 13] The Rendering Technologies of Crysis 3. Tiago Sousa, 2013. http://www.crytek.com/cryengine/presentations/the-rendering-technologies-of-crysis-3
- [Pranckevičius 13] Physically Based Shading in Unity. Aras Pranckevičius, Game Developers Conference, 2013. http://aras-p.info/texts/files/201403-GDC_UnityPhysicallyBasedShading_notes.pdf
- [Olsson 11] Tiled Shading. Ola Olsson, Ulf Assarsson, 2011. http://www.cse.chalmers.se/~olaolss/main_frame.php?contents=publication&id=tiled_shading

![Resources](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-129-320.jpg)

- [Billeter 12] Clustered Deferred and Forward Shading. Markus Billeter, Ola Olsson, Ulf Assarsson, 2012. http://www.cse.chalmers.se/~olaolss/main_frame.php?contents=publication&id=clustered_shading
- [Yang 09] Amortized Supersampling. Lei Yang, Diego Nehab, Pedro V. Sander, Pitchaya Sitthi-amorn, Jason Lawrence, Hugues Hoppe, 2009. http://research.microsoft.com/en-us/um/people/hoppe/supersample.pdf
- [Herzog 10] Spatio-Temporal Upsampling on the GPU. Robert Herzog, Elmar Eisemann, Karol Myszkowski, H.-P. Seidel, 2010. https://people.mpi-inf.mpg.de/~rherzog/Papers/spatioTemporalUpsampling_preprintI3D2010.pdf
- [Wronski 14] Temporal Supersampling and Antialiasing. Bart Wronski, 2014. http://bartwronski.com/2014/03/15/temporal-supersampling-and-antialiasing/
- [Karis 14] High Quality Temporal Supersampling. Brian Karis, 2014. https://de45xmedrsdbp.cloudfront.net/Resources/files/TemporalAA_small-71938806.pptx
- [Walter 07] Microfacet Models for Refraction Through Rough Surfaces. Bruce Walter, Stephen R. Marschner, Hongsong Li, Kenneth E. Torrance, 2007. http://www.cs.cornell.edu/~srm/publications/EGSR07-btdf.pdf

![Resources](https://image.slidesharecdn.com/penngraphics-141203184433-conversion-gate02/85/Penn-graphics-130-320.jpg)

- [Heitz 14] Understanding the Masking-Shadowing Function in Microfacet-Based BRDFs. Eric Heitz, Journal of Computer Graphics Techniques, 2014. http://jcgt.org/published/0003/02/03/paper.pdf
- [Schlick 94] An Inexpensive BRDF Model for Physically-based Rendering. Christophe Schlick, 1994. http://www.cs.virginia.edu/~jdl/bib/appearance/analytic%20models/schlick94b.pdf
- [Lagarde 12] Spherical Gaussian Approximation for Blinn-Phong, Phong, and Fresnel. Sébastien Lagarde, 2012. http://seblagarde.wordpress.com/2012/06/03/spherical-gaussien-approximation-for-blinn-phong-phong-and-fresnel/
- [Oren 94] Generalization of Lambert's Reflectance Model. Michael Oren, Shree K. Nayar, 1994. http://www1.cs.columbia.edu/CAVE/publications/pdfs/Oren_SIGGRAPH94.pdf