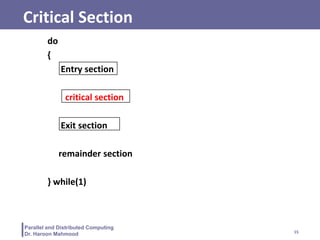

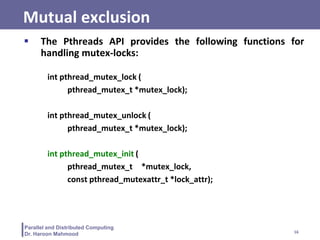

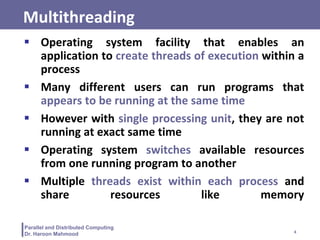

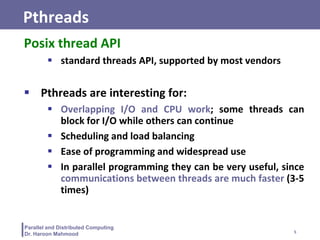

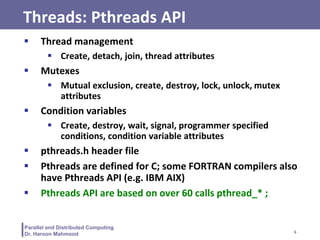

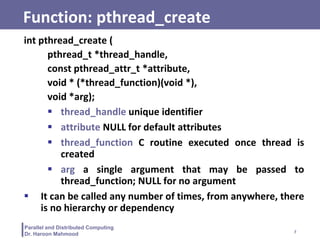

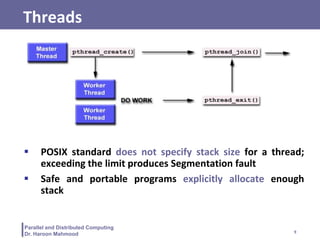

This document discusses parallel and distributed computing using POSIX threads (Pthreads). It defines a thread as an independent stream of instructions that the operating system can schedule. Pthreads provides a standard API for thread management through functions such as pthread_create() and pthread_join(). The document explains how multithreading lets multiple users run programs at the same time by time-sharing resources. It also covers synchronization issues and how mutual exclusion locks (mutexes) let threads access shared resources safely by guarding critical sections.

![12

Parallel and Distributed Computing

Dr. Haroon Mahmood

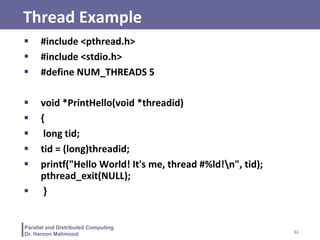

Thread Example

int main (int argc, char *argv[])

{

pthread_t threads[NUM_THREADS];

int rc; long t;

for(t=0; t<NUM_THREADS; t++)

{

rc = pthread_create(&threads[t], NULL, PrintHello, (void *)t);

if (rc)

{

printf("ERROR; return code from pthread_create() is %dn", rc);

exit(-1);

}

}

}](https://image.slidesharecdn.com/lecture-07-6e-6f-spring-2023-230511171639-36452a99/85/PDCCLECTUREE-pptx-12-320.jpg)