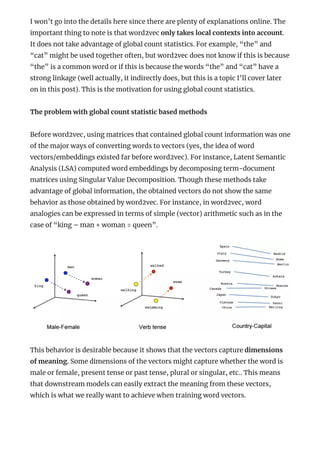

This document summarizes and explains the GloVe model for generating word embeddings. GloVe aims to capture word meaning in vector space while taking advantage of global word co-occurrence counts. Unlike word2vec, which learns from local context windows as it streams through sentences, GloVe learns embeddings from a co-occurrence matrix aggregated over the whole corpus. It trains the vectors so that differences between them predict ratios of co-occurrence probabilities. The document outlines the key steps in building GloVe: preparing the data, defining the prediction task, and deriving the GloVe equation. It closes with a comparison to word2vec.
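To make the co-occurrence idea concrete, here is a minimal sketch of the counting step and of a per-pair weighted squared-error loss in plain Python. The function names, the toy corpus, and the window size are illustrative assumptions, not taken from this document. The `1/distance` weighting and the defaults `x_max=100`, `alpha=0.75` follow the settings reported in the original GloVe paper and may differ from what the summarized document uses.

```python
from collections import defaultdict
import math


def cooccurrence_counts(sentences, window=2):
    """Count symmetric co-occurrences, weighting each pair by 1/distance."""
    counts = defaultdict(float)
    for tokens in sentences:
        for i, word in enumerate(tokens):
            # Look back at most `window` tokens; add the pair in both directions.
            for j in range(max(0, i - window), i):
                distance = i - j
                counts[(word, tokens[j])] += 1.0 / distance
                counts[(tokens[j], word)] += 1.0 / distance
    return counts


def glove_weight(x, x_max=100.0, alpha=0.75):
    """Weighting f(x): grows with the count, then is capped at 1 for frequent pairs."""
    return (x / x_max) ** alpha if x < x_max else 1.0


def glove_pair_loss(w_i, w_j, b_i, b_j, x_ij):
    """Weighted squared error for one nonzero co-occurrence entry x_ij."""
    dot = sum(a * b for a, b in zip(w_i, w_j))
    return glove_weight(x_ij) * (dot + b_i + b_j - math.log(x_ij)) ** 2


# Hypothetical toy corpus, already tokenized.
toy_corpus = [["ice", "is", "cold"], ["steam", "is", "hot"]]
counts = cooccurrence_counts(toy_corpus, window=2)
```

In a full training loop, the loss would be summed over all nonzero entries of `counts` and minimized with respect to the word vectors and biases; that optimization step is omitted here.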