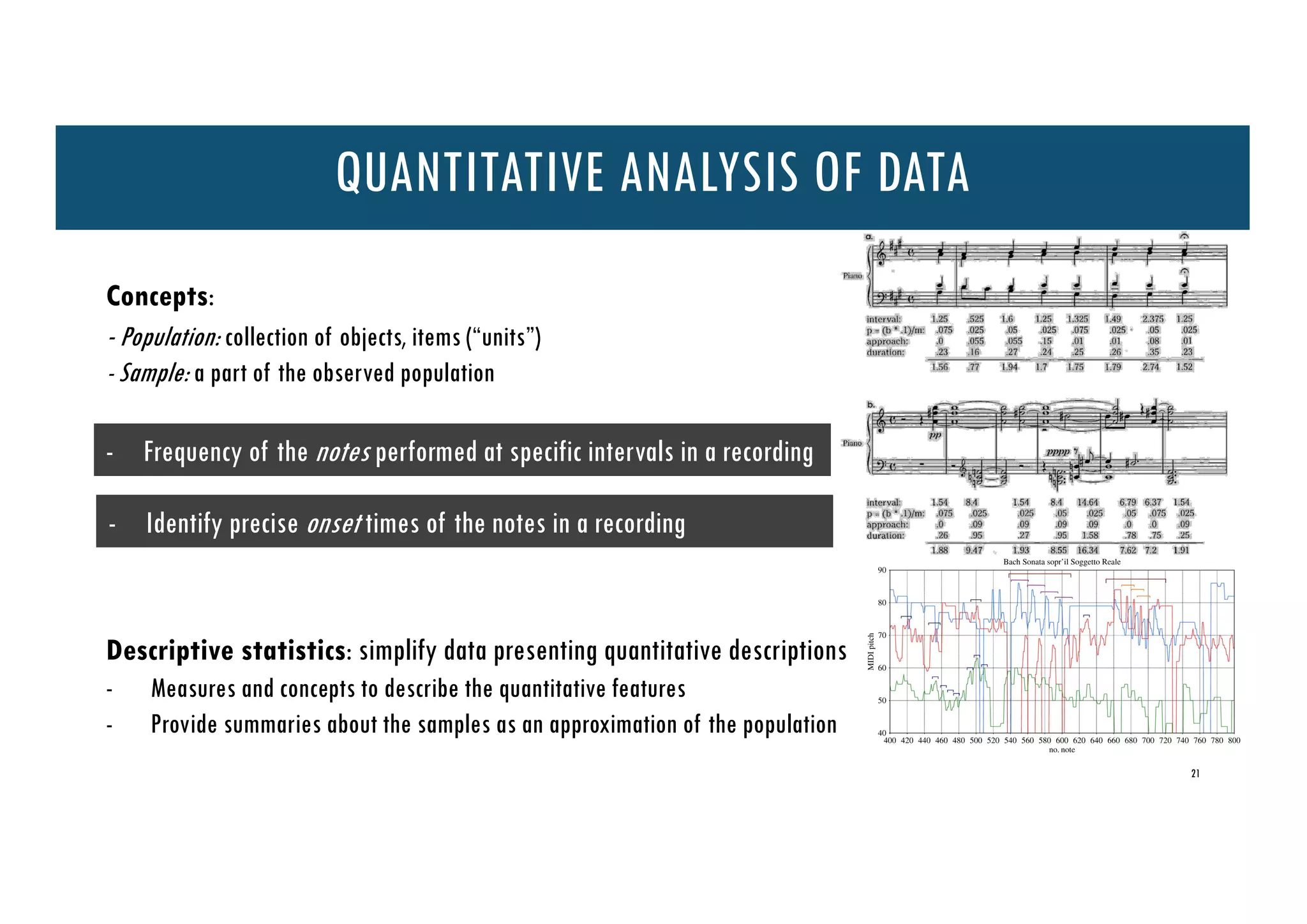

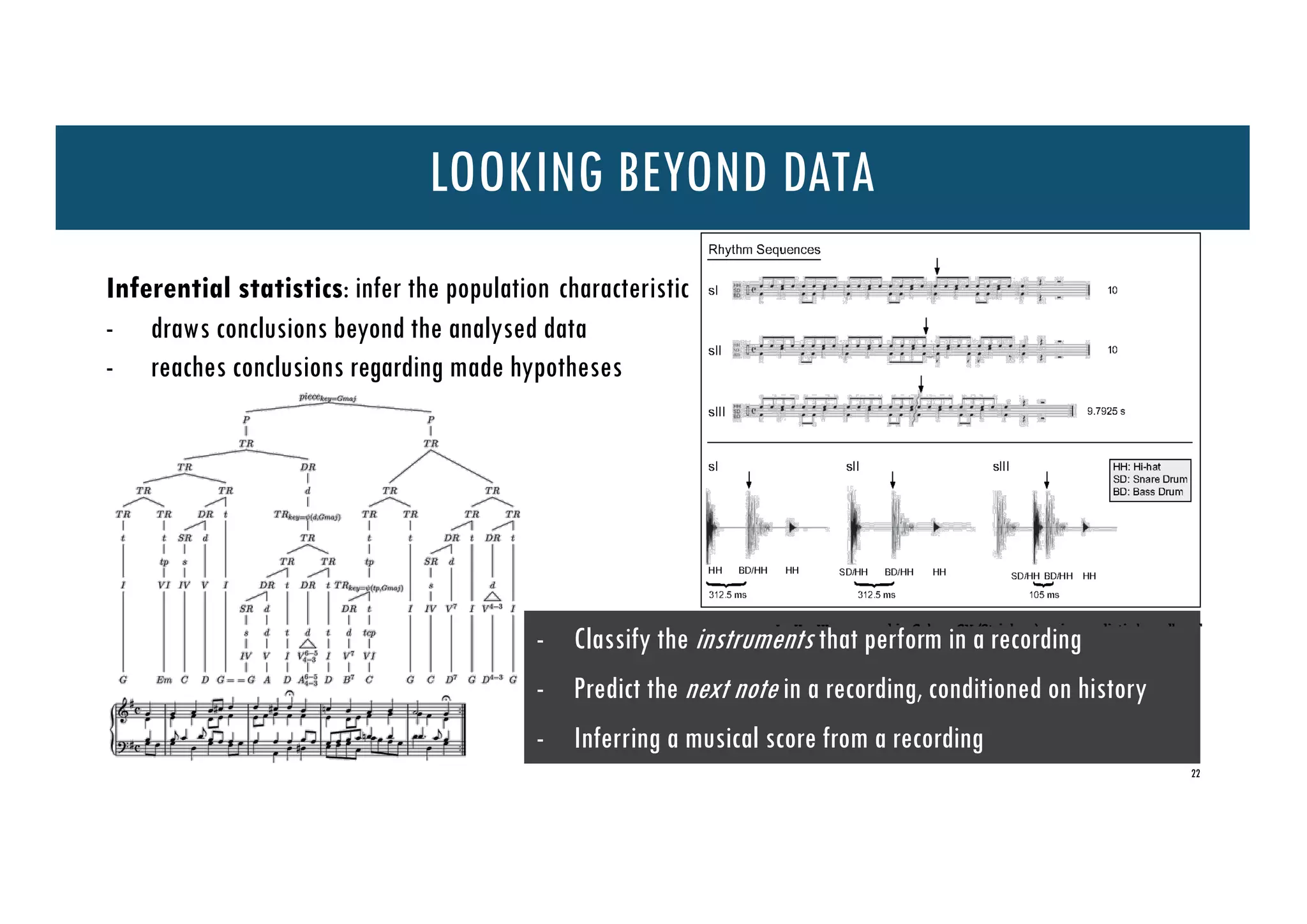

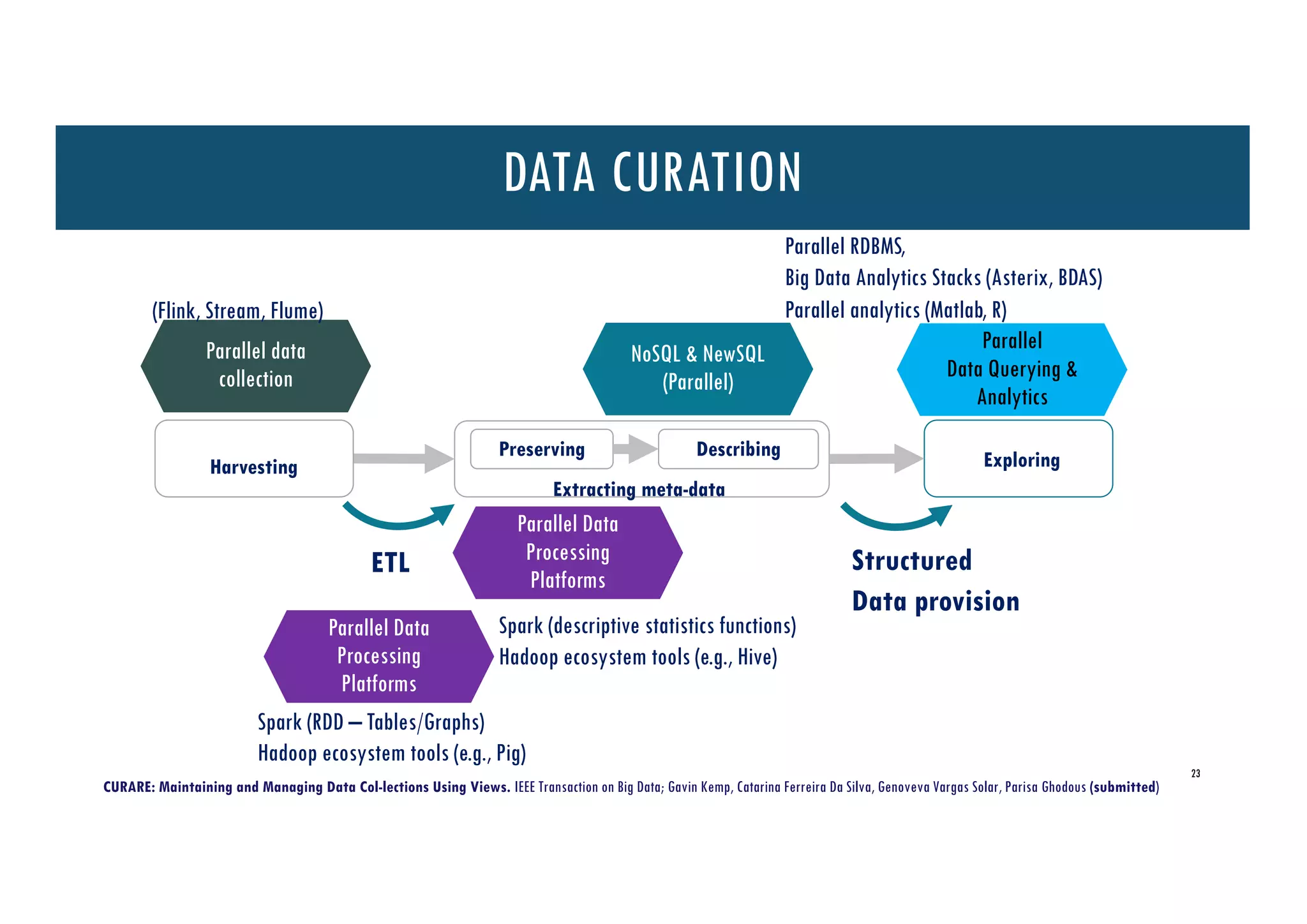

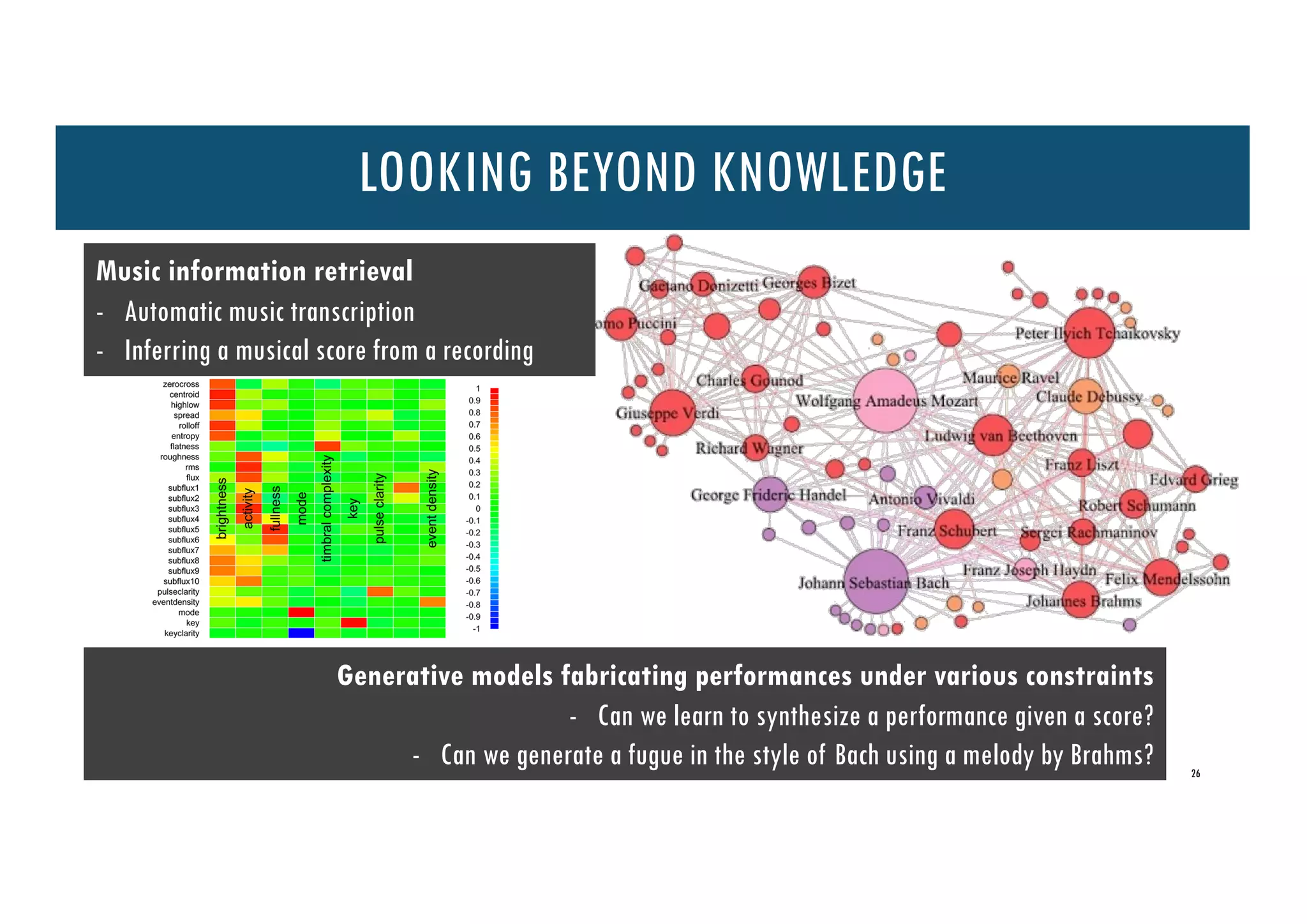

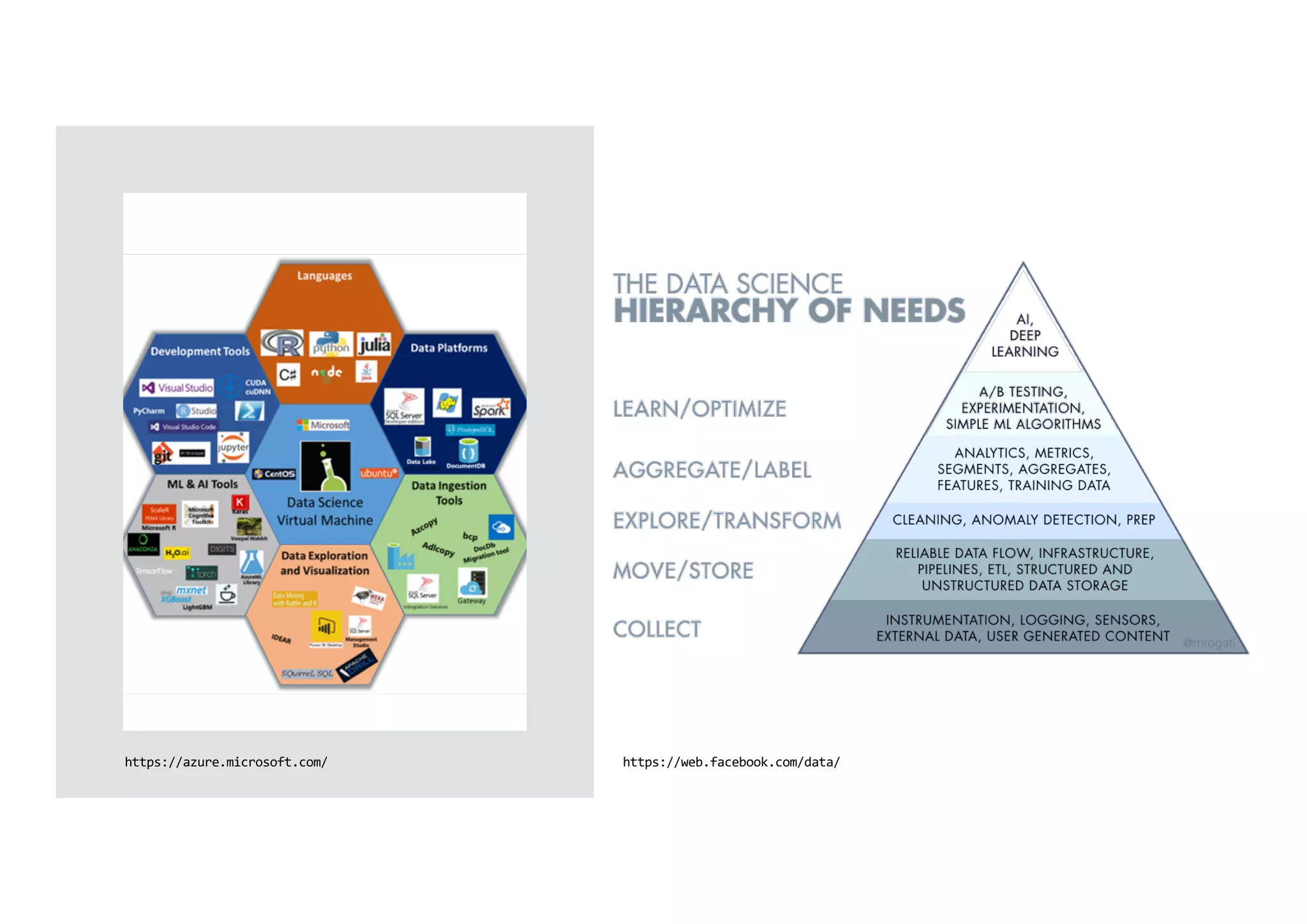

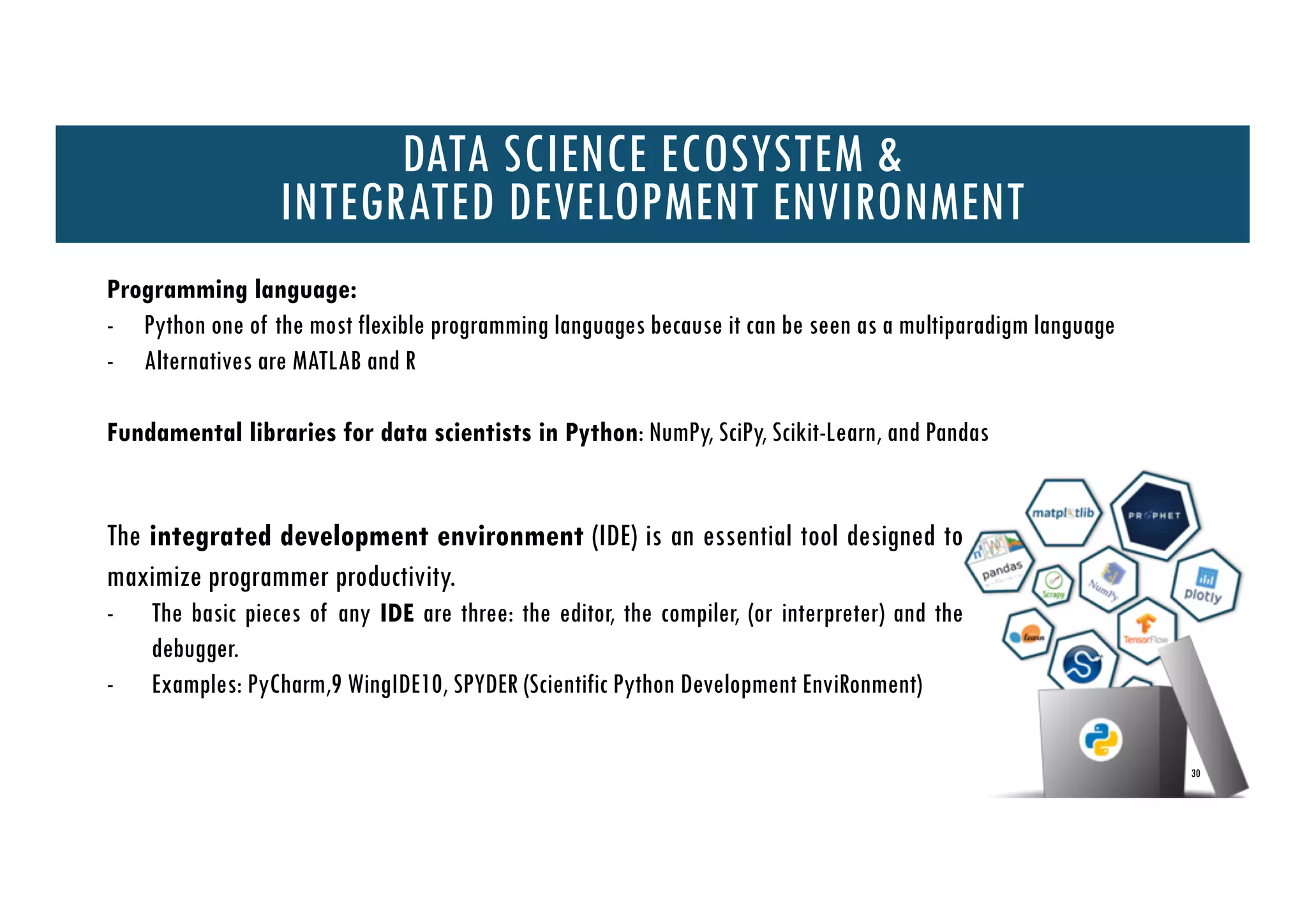

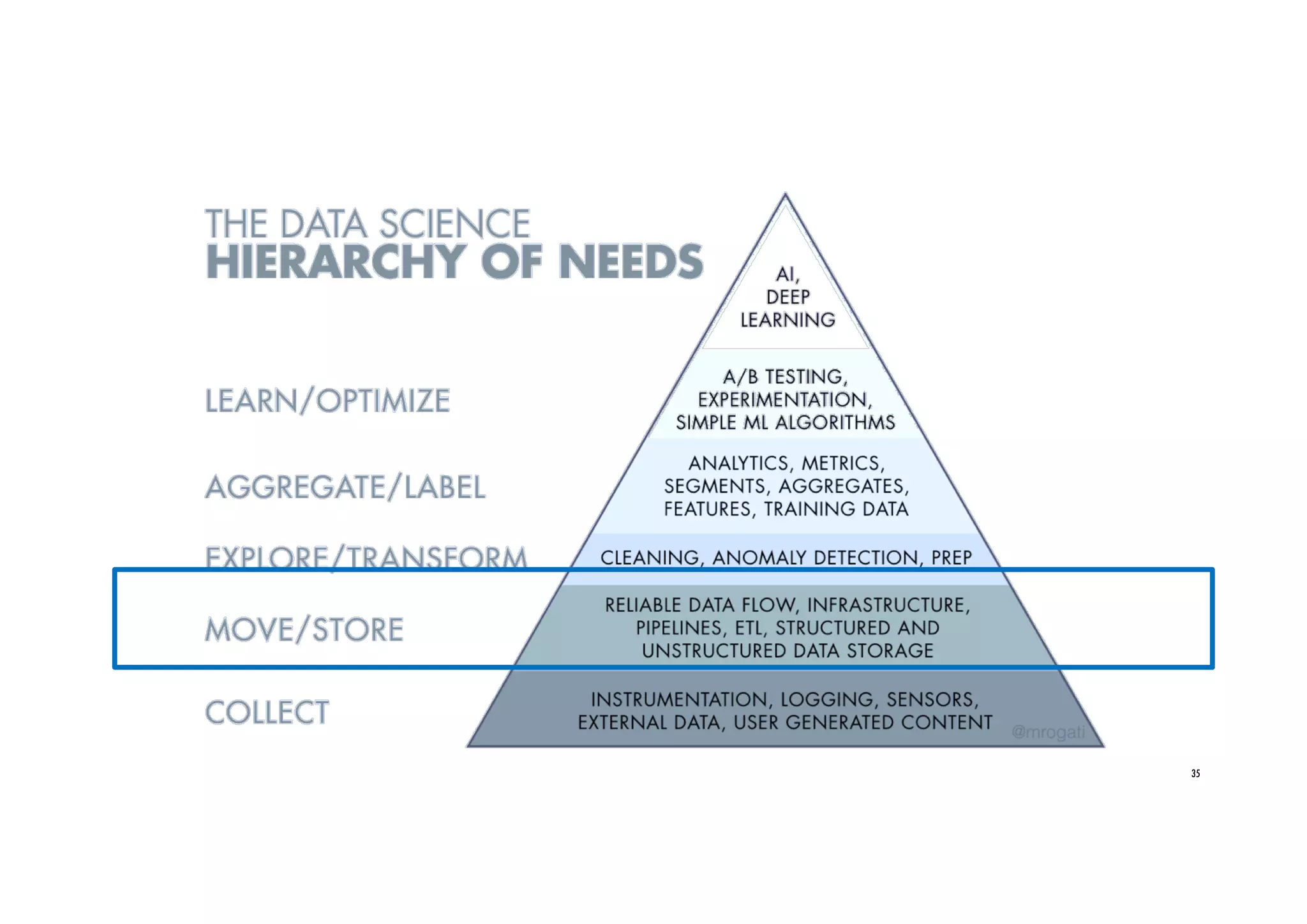

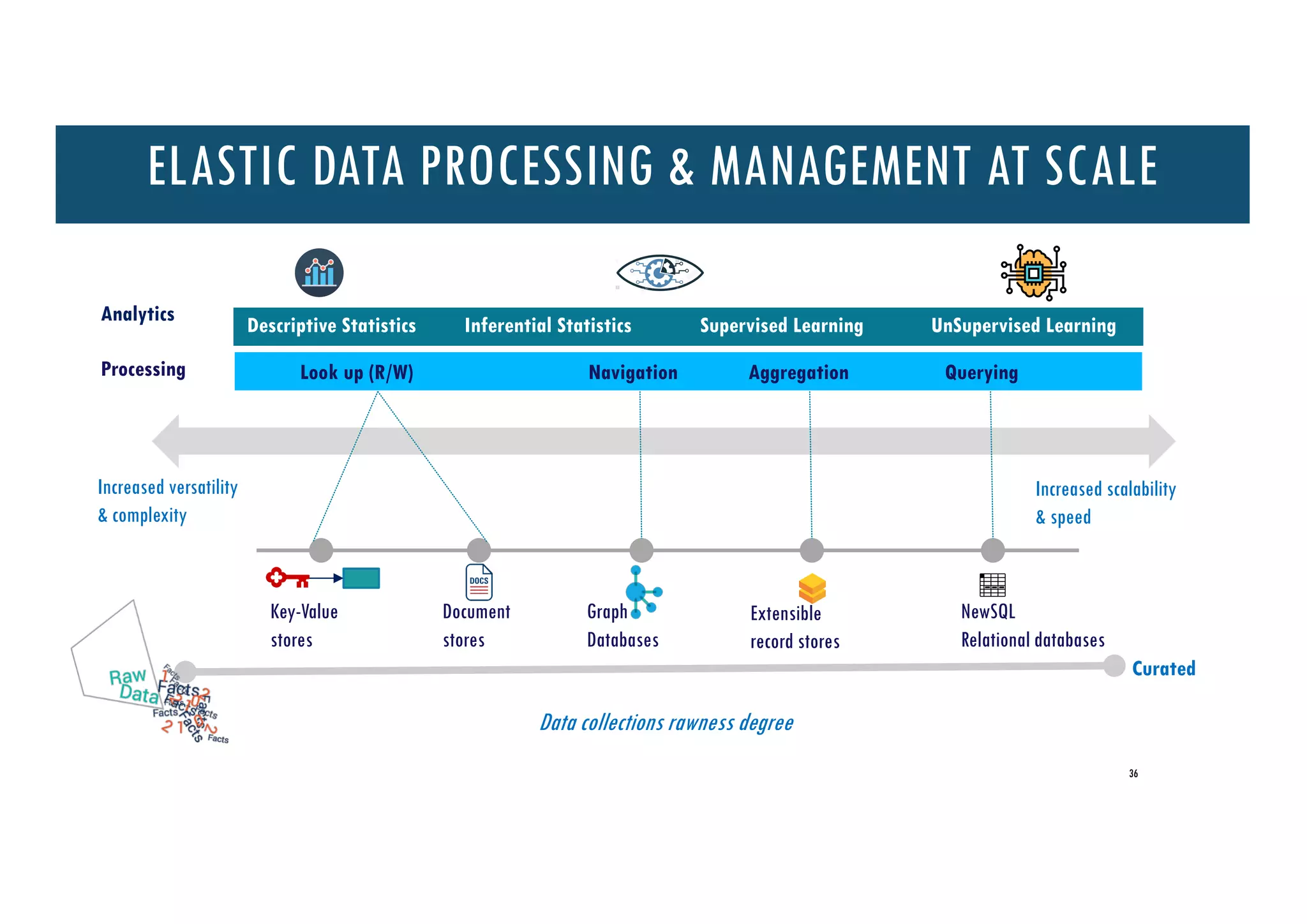

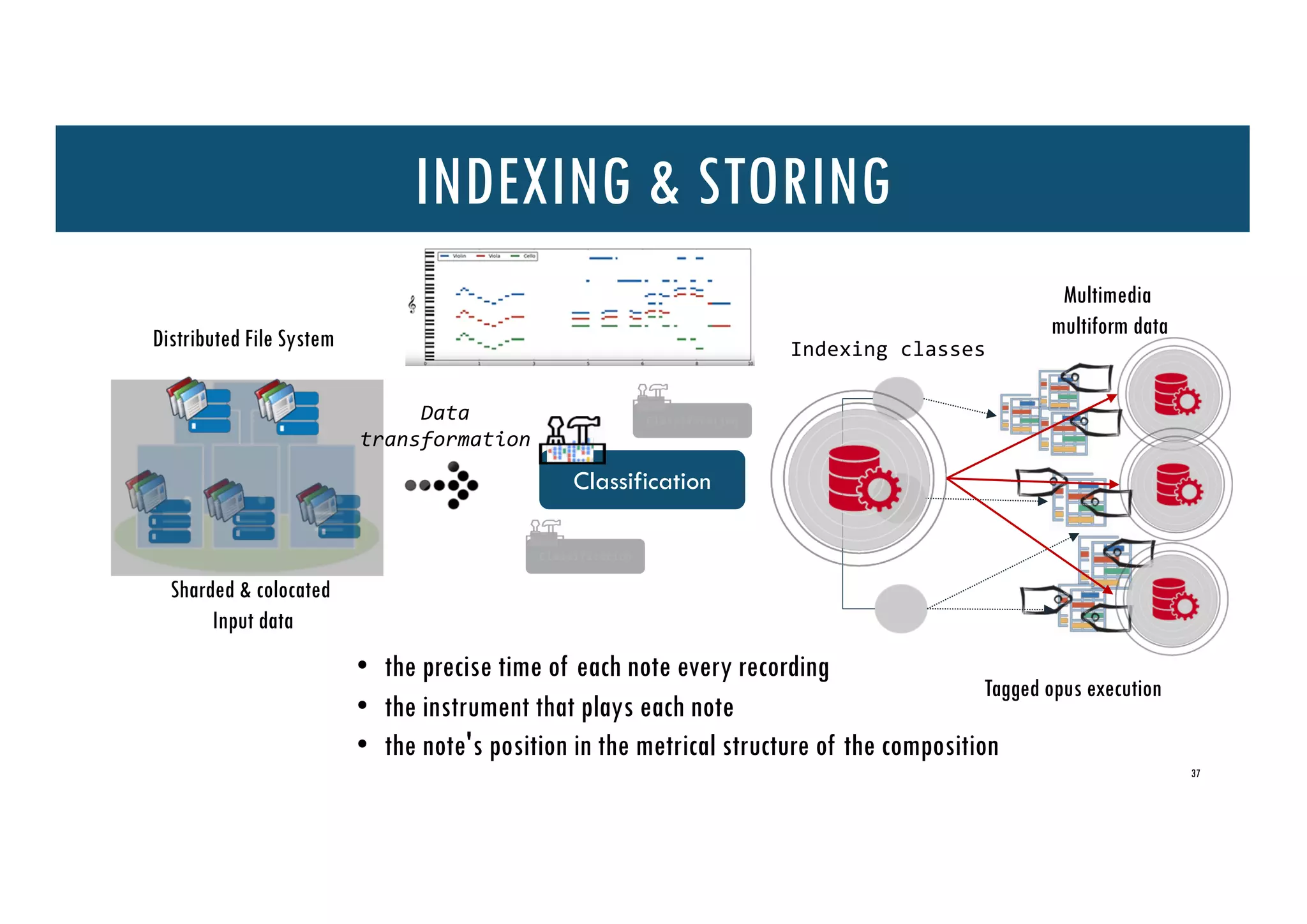

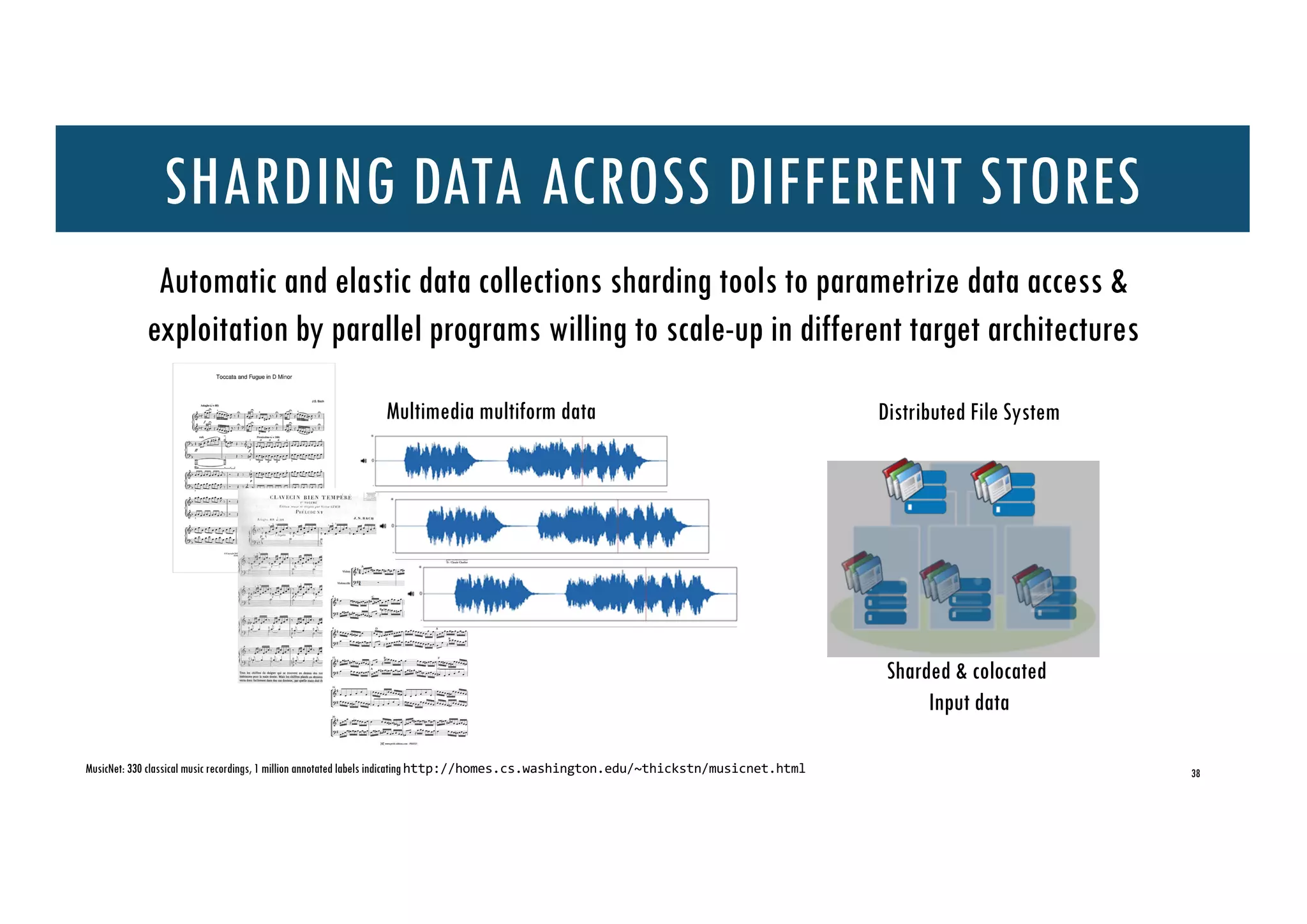

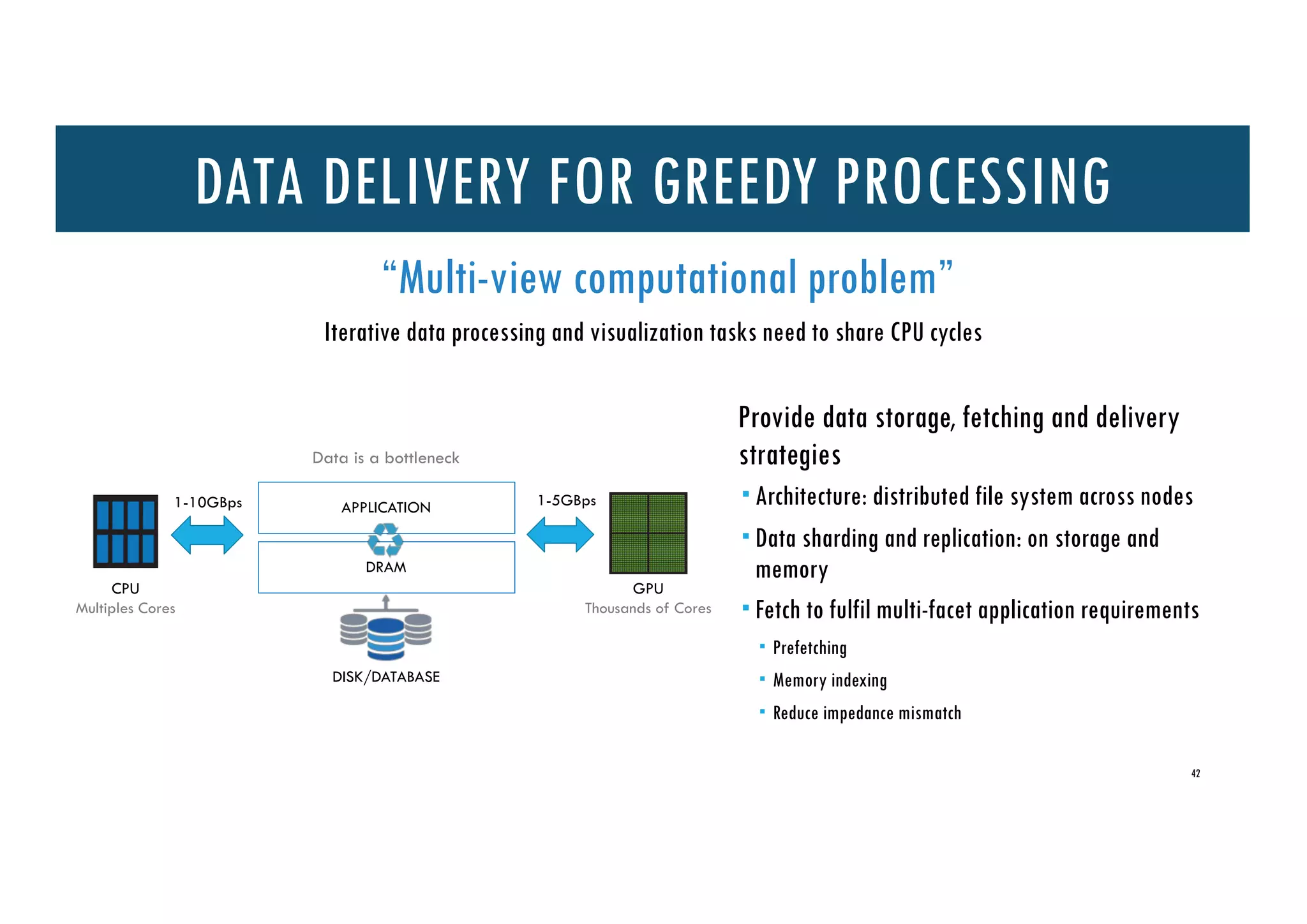

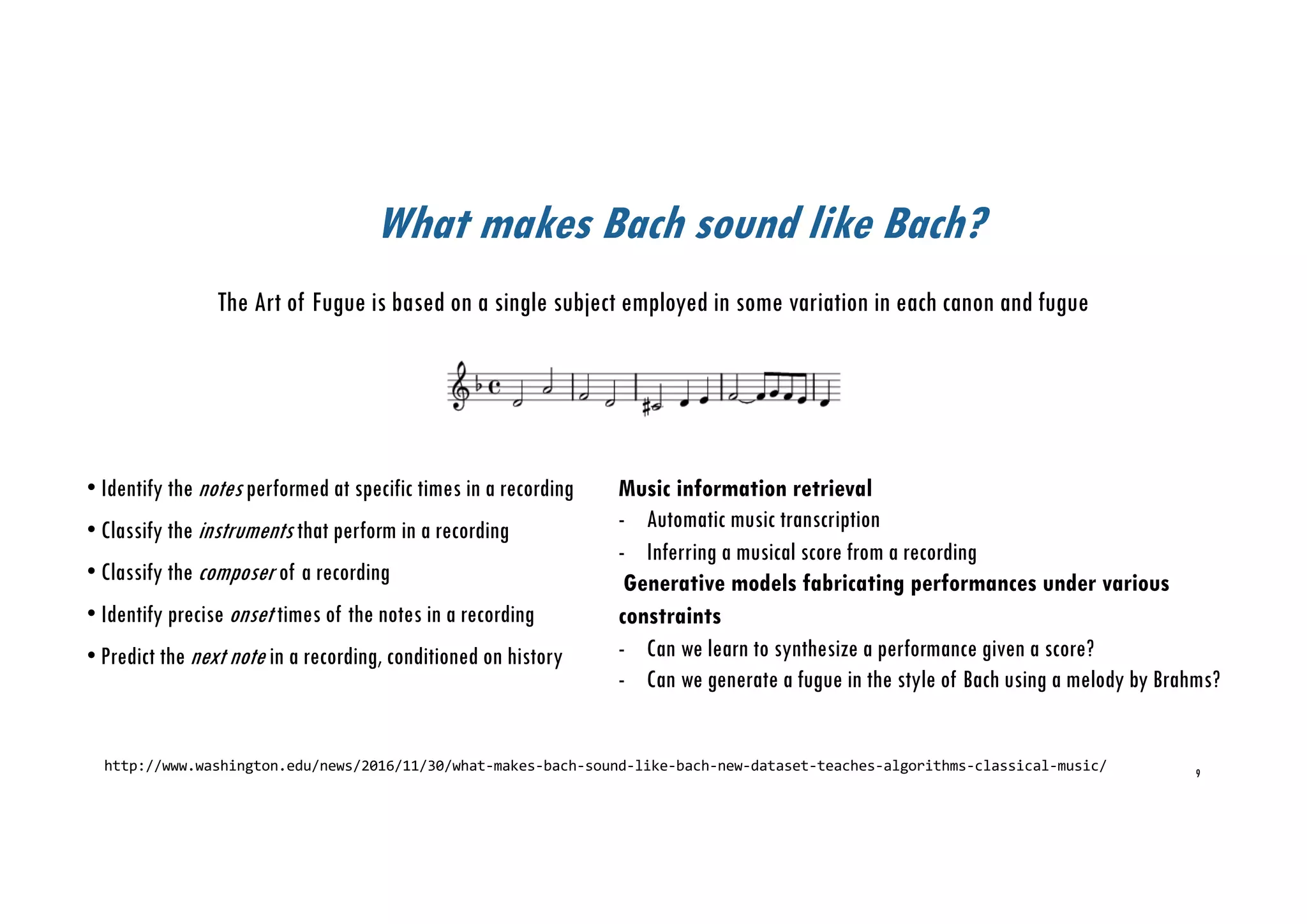

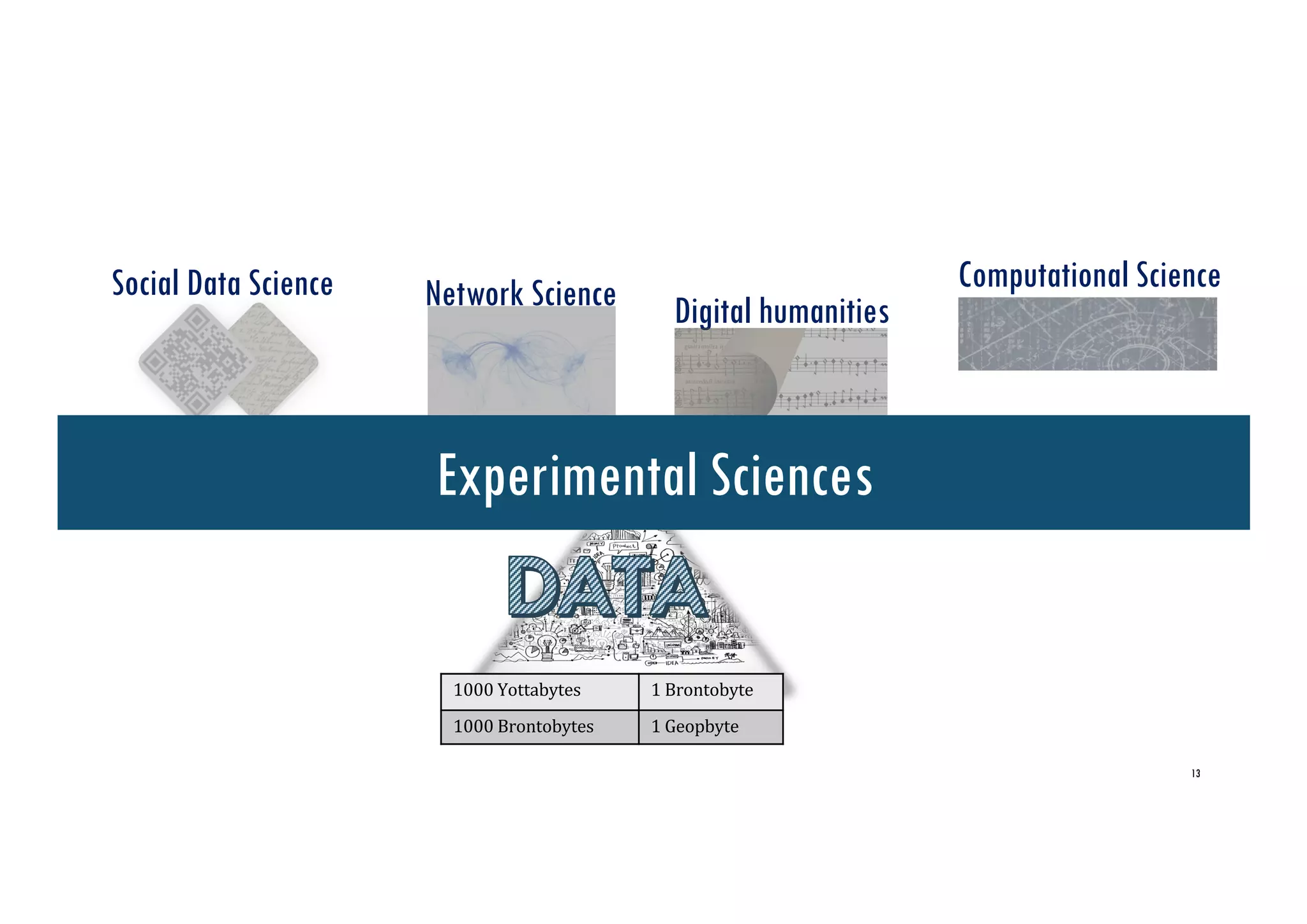

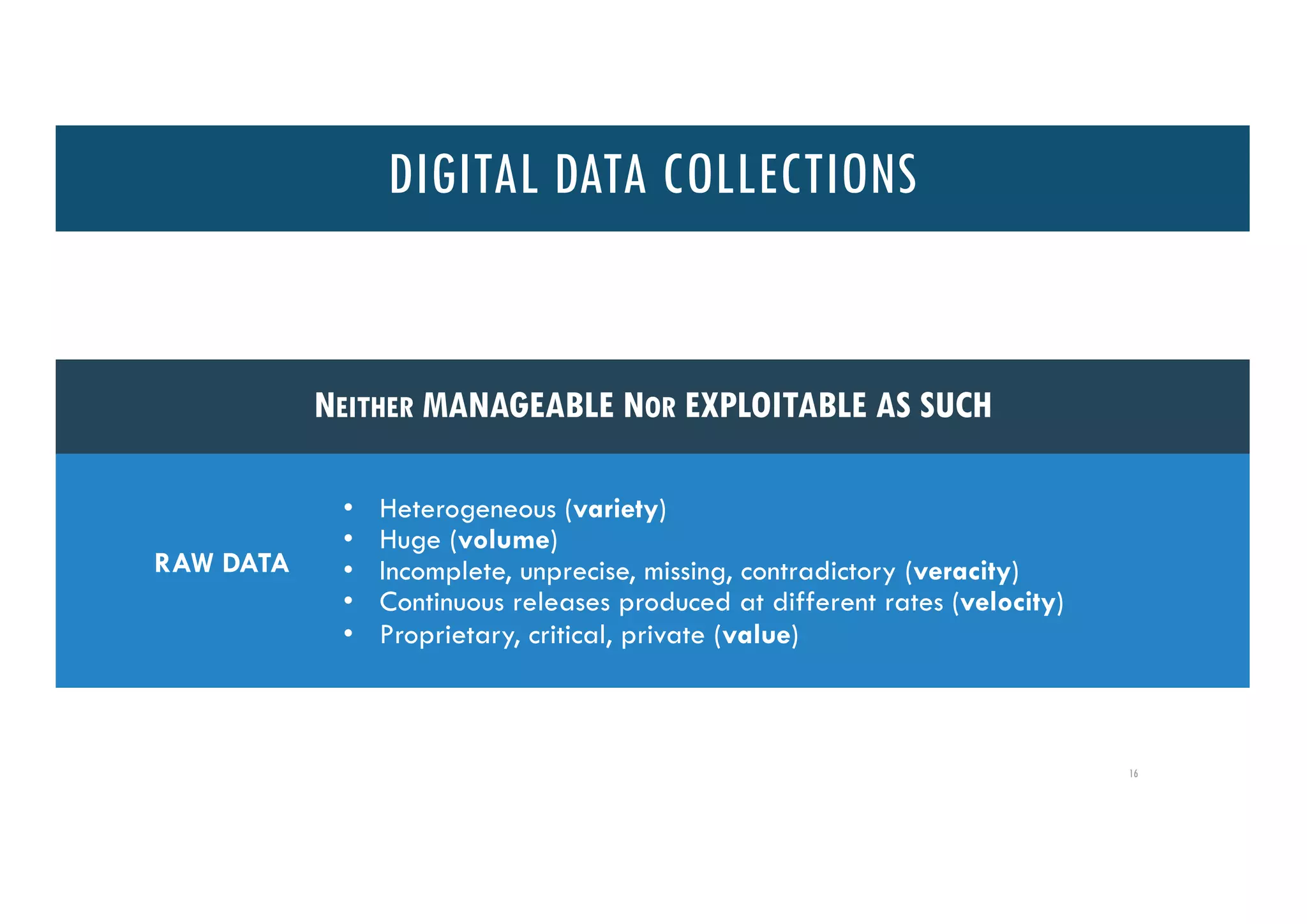

The document discusses the interplay between data-centric sciences, artificial intelligence, and high-performance computing (HPC), emphasizing the role of big data in transforming reality and enhancing decision-making. It covers methodologies for data analysis, the importance of computational frameworks, and the exploration of music information retrieval through various techniques like automatic music transcription. Furthermore, it highlights the need for efficient data management and processing to address complex scientific problems and support evidence-based conclusions.

![20

✓ Helping to select the right tool for preprocessing or analysis

✓ Making use of humans’ abilities to recognize patterns

Not always sure what we are looking for (until we find it)

Query expression [guidance | automatic generation]³,²

• Multi-scale query processing for gradual exploration

• Query morphing to adjust for proximity results

• Queries as answers: query alternatives to cope with lack of providence

Results filtering, analysis, visualization²

• Result-set post processing for conveying meaningful data

Data exploration systems & environments¹

• Data system kernels tailored for data exploration: no preparation, easy to use, fast; database cracking

• Auto-tuning database kernels: incremental, adaptive, partial indexing

1. Xi, S. L., Babarinsa, O., Wasay, A., Wei, X., Dayan, N., & Idreos, S. (2017, May). Data canopy: Accelerating exploratory statistical analysis. In Proceedings of the 2017 ACM International Conference on Management of Data (pp. 557-572). ACM.

2. Athanassoulis, M., & Idreos, S. (2015, May). Beyond the wall: Near-data processing for databases. In Proceedings of the 11th International Workshop on Data Management on New Hardware (p. 2). ACM.

3. Idreos, S., Dayan, M. A. N., Guo, D., Kester, M. S., Maas, L., & Zoumpatianos, K. Past and Future Steps for Adaptive Storage Data Systems: From Shallow to Deep Adaptivity.

KEY MOTIVATIONS

EXPLORING DATA COLLECTIONS](https://image.slidesharecdn.com/vargas-solar-final-181116144114/75/Moving-forward-data-centric-sciences-weaving-AI-Big-Data-HPC-18-2048.jpg)
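The slide names database cracking and "incremental, adaptive, partial indexing" without showing what they look like in practice. The following is a minimal Python sketch of the general idea, not the implementation of any system cited on the slide: each range query partitions only the piece of the column it touches, so the column becomes progressively more ordered as a side effect of querying. The class name `CrackedColumn` and its methods are hypothetical, chosen for illustration.

```python
import bisect


class CrackedColumn:
    """Illustrative cracker column (hypothetical name, not a real library API).

    Invariant: for every recorded pivot p at position k, all values at
    indexes < k are < p and all values at indexes >= k are >= p.
    """

    def __init__(self, values):
        self.values = list(values)  # private copy, reorganized by queries
        self.pivots = []            # sorted pivot values seen so far
        self.positions = []         # positions[i] is the split point for pivots[i]

    def _crack(self, pivot):
        """Partition the single piece containing `pivot`; return its split point."""
        i = bisect.bisect_left(self.pivots, pivot)
        if i < len(self.pivots) and self.pivots[i] == pivot:
            return self.positions[i]  # already cracked on this value

        # Bounds of the only piece that can contain values around `pivot`.
        lo = self.positions[i - 1] if i > 0 else 0
        hi = self.positions[i] if i < len(self.positions) else len(self.values)

        piece = self.values[lo:hi]
        smaller = [v for v in piece if v < pivot]
        larger = [v for v in piece if v >= pivot]
        self.values[lo:hi] = smaller + larger

        split = lo + len(smaller)
        self.pivots.insert(i, pivot)
        self.positions.insert(i, split)
        return split

    def range_query(self, low, high):
        """Return all values v with low <= v < high, cracking as a side effect."""
        start = self._crack(low)
        end = self._crack(high)
        return self.values[start:end]


if __name__ == "__main__":
    column = CrackedColumn([13, 16, 4, 9, 2, 12, 7, 1, 19, 3])
    print(column.range_query(5, 13))  # first query cracks the whole column
    print(column.range_query(7, 10))  # later query only touches one small piece
    print(column.pivots, column.positions)
```

No index is built up front, which corresponds to the "no preparation" point on the slide: the cost of organizing the data is spread across the queries that actually need it, and regions that are never queried are never reorganized. A production design would partition in place rather than building temporary lists; the list comprehensions here are a simplification for readability.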