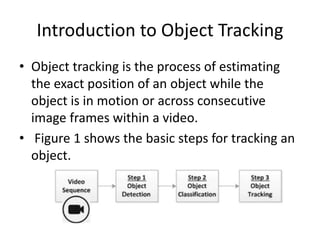

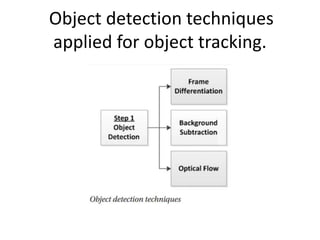

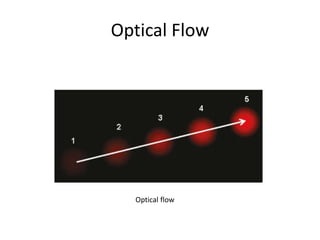

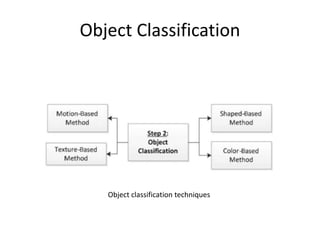

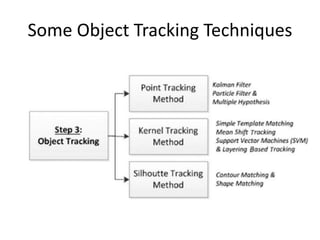

This document covers techniques for motion analysis and object tracking in video. Topics include frame differencing, background subtraction, and optical flow, along with object tracking methods: point tracking with Kalman filtering, particle filtering, and multiple hypothesis tracking, as well as kernel-based tracking. It also covers object classification based on shape, motion, color, and texture. The goal of object tracking is to estimate an object's position in each video frame as it moves.
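To make the goal of tracking concrete, here is a minimal sketch of one of the techniques listed above, background subtraction, used to estimate an object's position in each frame. It is an illustration under simplifying assumptions, not the method from the slides: frames are small grayscale grids stored as nested lists, the background is static and known in advance, and the names `foreground_mask`, `centroid`, and `make_frame`, as well as the threshold of 30, are hypothetical choices made for this example.

```python
def foreground_mask(frame, background, threshold=30):
    """Mark pixels whose intensity differs from the background by more than threshold."""
    return [
        [abs(p - b) > threshold for p, b in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]


def centroid(mask):
    """Return the (row, col) centroid of the True pixels, or None if the mask is empty."""
    points = [(r, c) for r, row in enumerate(mask) for c, on in enumerate(row) if on]
    if not points:
        return None
    n = len(points)
    return (sum(r for r, _ in points) / n, sum(c for _, c in points) / n)


def make_frame(height, width, top, left, size=2, value=200):
    """Hypothetical helper: a dark frame containing one bright square object."""
    frame = [[0] * width for _ in range(height)]
    for r in range(top, top + size):
        for c in range(left, left + size):
            frame[r][c] = value
    return frame


# Assumed static background: an all-dark 6x8 image.
background = [[0] * 8 for _ in range(6)]

# Synthetic sequence: the square moves one pixel to the right per frame.
frames = [make_frame(6, 8, top=2, left=x) for x in range(4)]

# One position estimate per frame: the centroid of the foreground pixels.
track = [centroid(foreground_mask(f, background)) for f in frames]

for t, position in enumerate(track):
    print(f"frame {t}: estimated position {position}")
```

In real footage the foreground mask is noisy and the background changes over time, which is where the filtering methods named above (for example Kalman or particle filtering) are typically used to smooth the per-frame estimates.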