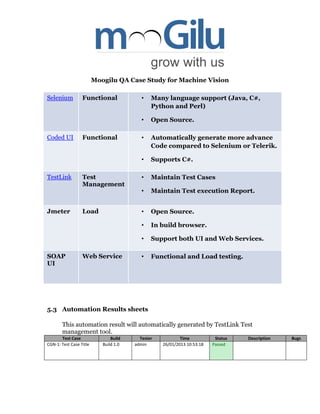

The Moogilu QA case study describes a successful engagement to implement quality assurance for machine vision products, with the goals of raising shipping standards and improving customer satisfaction. The key objectives were to identify the product's modules and to develop a comprehensive testing strategy covering functional, automation, and regression testing. The resulting automation framework shortened testing cycles and improved product quality. This led to better operational performance, and no field issues were reported after shipment.