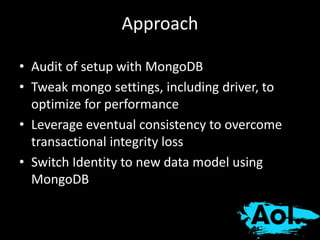

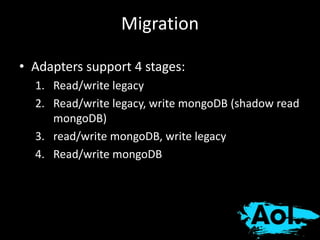

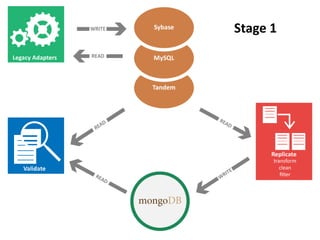

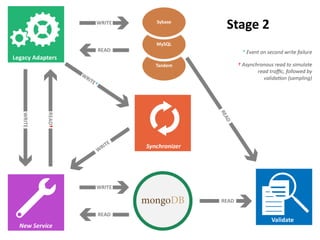

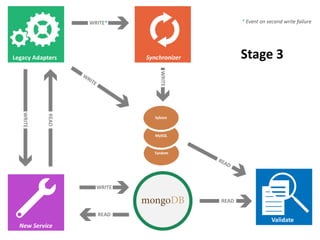

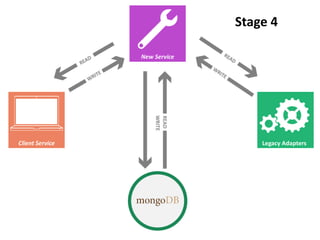

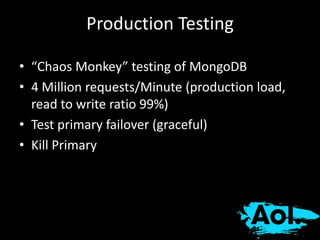

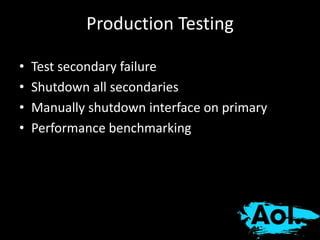

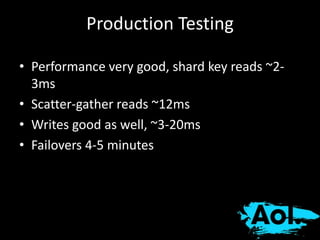

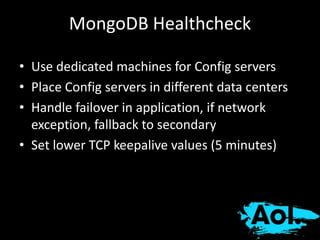

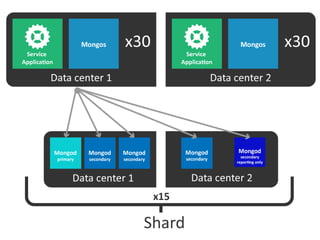

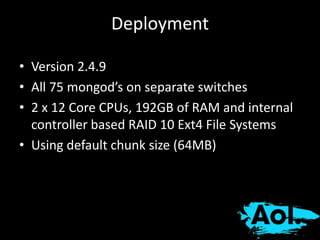

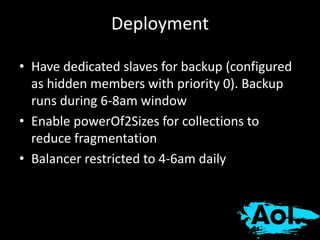

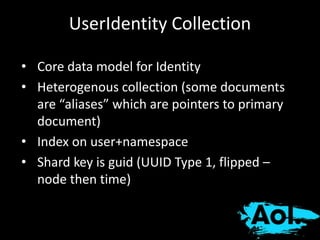

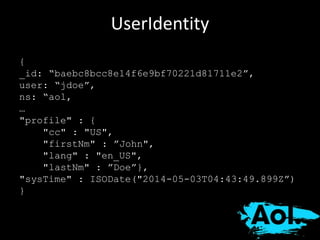

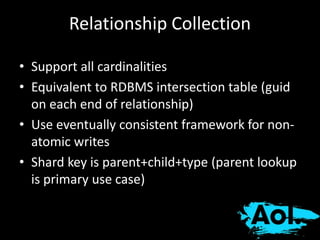

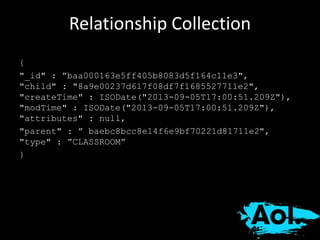

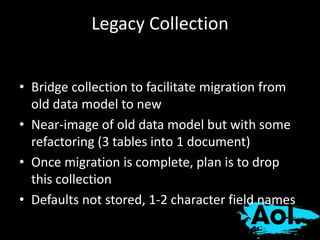

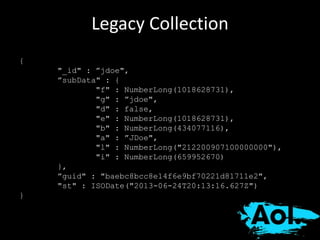

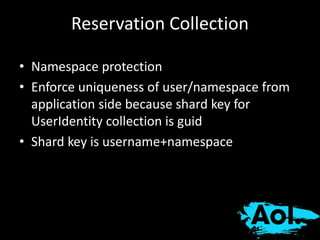

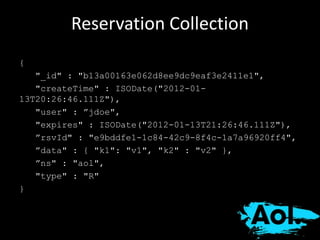

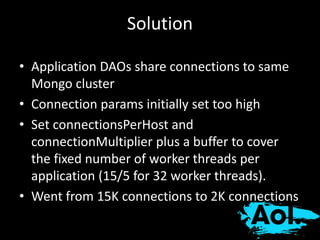

The document details how AOL adopted MongoDB to improve data management and to overcome challenges such as functional ambiguity and scaling. It covers the migration strategy, performance testing, deployment details, and the main collections, including user identities and relationships. The key lessons learned are to optimize connections, minimize read delays, and handle failovers efficiently.
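
The lessons about connections, read delays, and failovers correspond to settings that a MongoDB client can express in its connection string. The following is a minimal sketch, not AOL's actual configuration: the host names, database name, replica set name, and every numeric value are hypothetical, and the helper `build_mongo_uri` is introduced here purely for illustration. Only the option names (`replicaSet`, `maxPoolSize`, `readPreference`, `serverSelectionTimeoutMS`) are standard MongoDB connection string options.

```python
from urllib.parse import urlencode


def build_mongo_uri(hosts, database, replica_set, *,
                    max_pool_size=50,
                    read_preference="nearest",
                    server_selection_timeout_ms=5000):
    """Build a MongoDB connection string (hypothetical helper).

    All defaults are illustrative assumptions, not values from the source.
    """
    if not hosts:
        raise ValueError("at least one host is required")

    options = {
        # Naming the replica set lets the driver discover the members
        # and follow a newly elected primary after a failover.
        "replicaSet": replica_set,
        # Caps the connection pool so clients reuse connections
        # instead of opening a new one per request.
        "maxPoolSize": max_pool_size,
        # Controls which members serve reads; "nearest" favors the
        # lowest-latency member but may return slightly stale data.
        "readPreference": read_preference,
        # Bounds how long an operation waits for a usable server,
        # for example while an election is in progress.
        "serverSelectionTimeoutMS": server_selection_timeout_ms,
    }
    return f"mongodb://{','.join(hosts)}/{database}?{urlencode(options)}"


if __name__ == "__main__":
    uri = build_mongo_uri(
        ["db1.example.net:27017", "db2.example.net:27017"],
        "identity",
        "rs0",
    )
    print(uri)
```

Which read preference and pool size are appropriate depends on the workload and on how much staleness reads can tolerate; the source does not state the values AOL chose.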