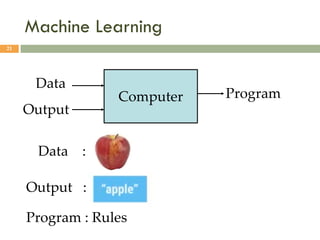

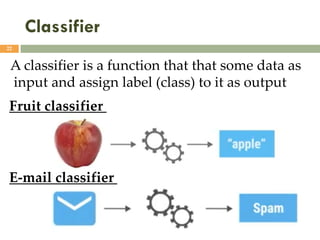

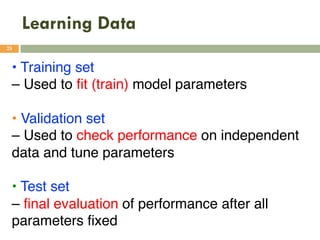

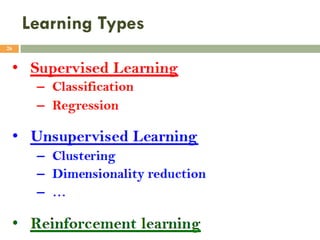

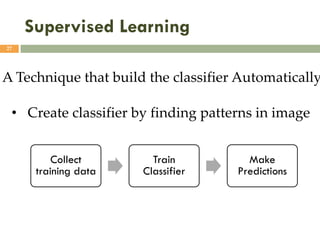

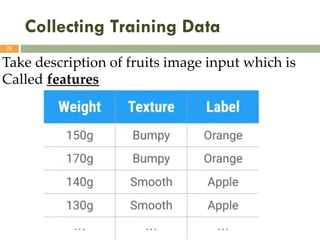

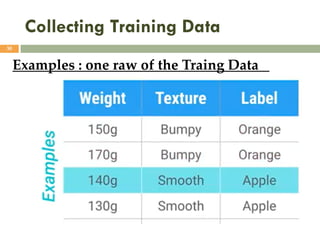

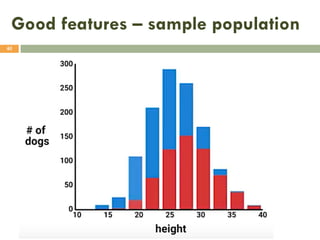

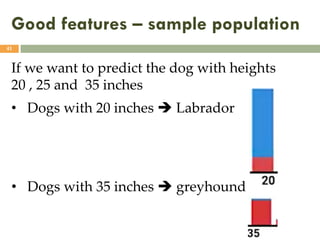

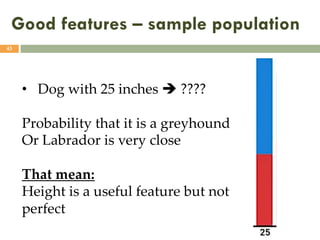

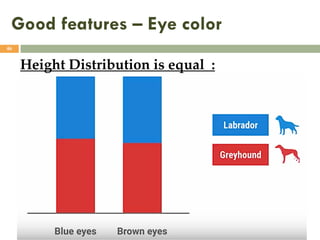

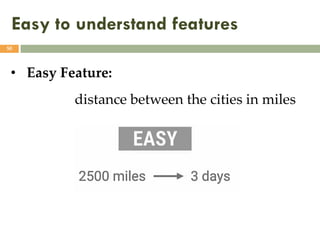

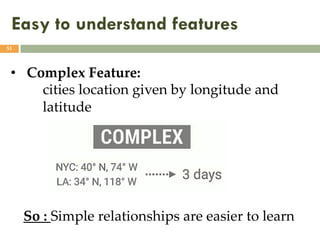

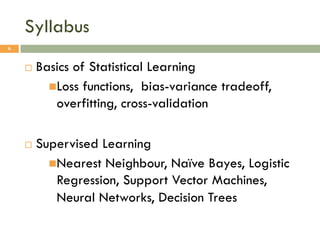

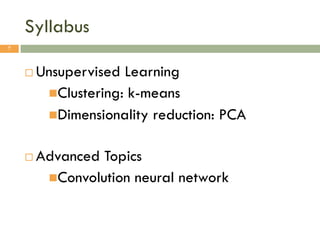

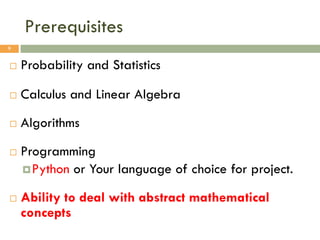

This document introduces and outlines a machine learning course. It gives the instructor's background and contact information, and the syllabus lists the topics to be covered, including supervised and unsupervised learning algorithms. It also states the course prerequisites and recommended textbooks. It concludes with the properties that make features useful for machine learning models: they should be informative, independent, and simple.

![Textbook (slide 10)](https://image.slidesharecdn.com/ml1-230113182312-a3edcf3d/85/ML_1-pdf-10-320.jpg)

Textbook

- We will have lecture notes.
- Reference books:
  - [Online] Machine Learning: A Probabilistic Perspective, Kevin Murphy
  - [Free PDF from author's webpage] Bayesian Reasoning and Machine Learning, David Barber: http://web4.cs.ucl.ac.uk/staff/D.Barber/pmwiki/pmwiki.php?n=Brml.HomePage
  - Fundamentals of Neural Networks, Laurene Fausett