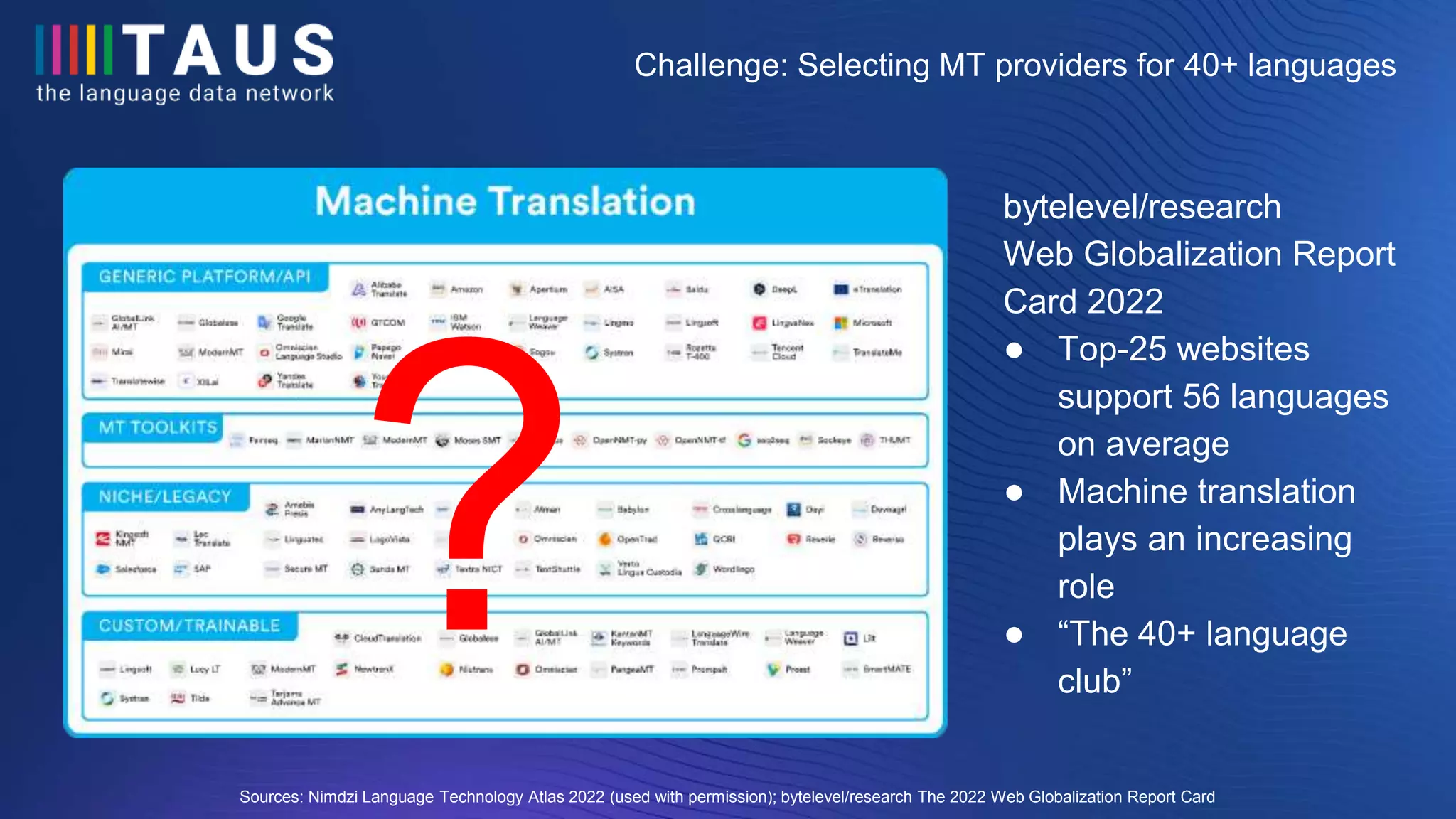

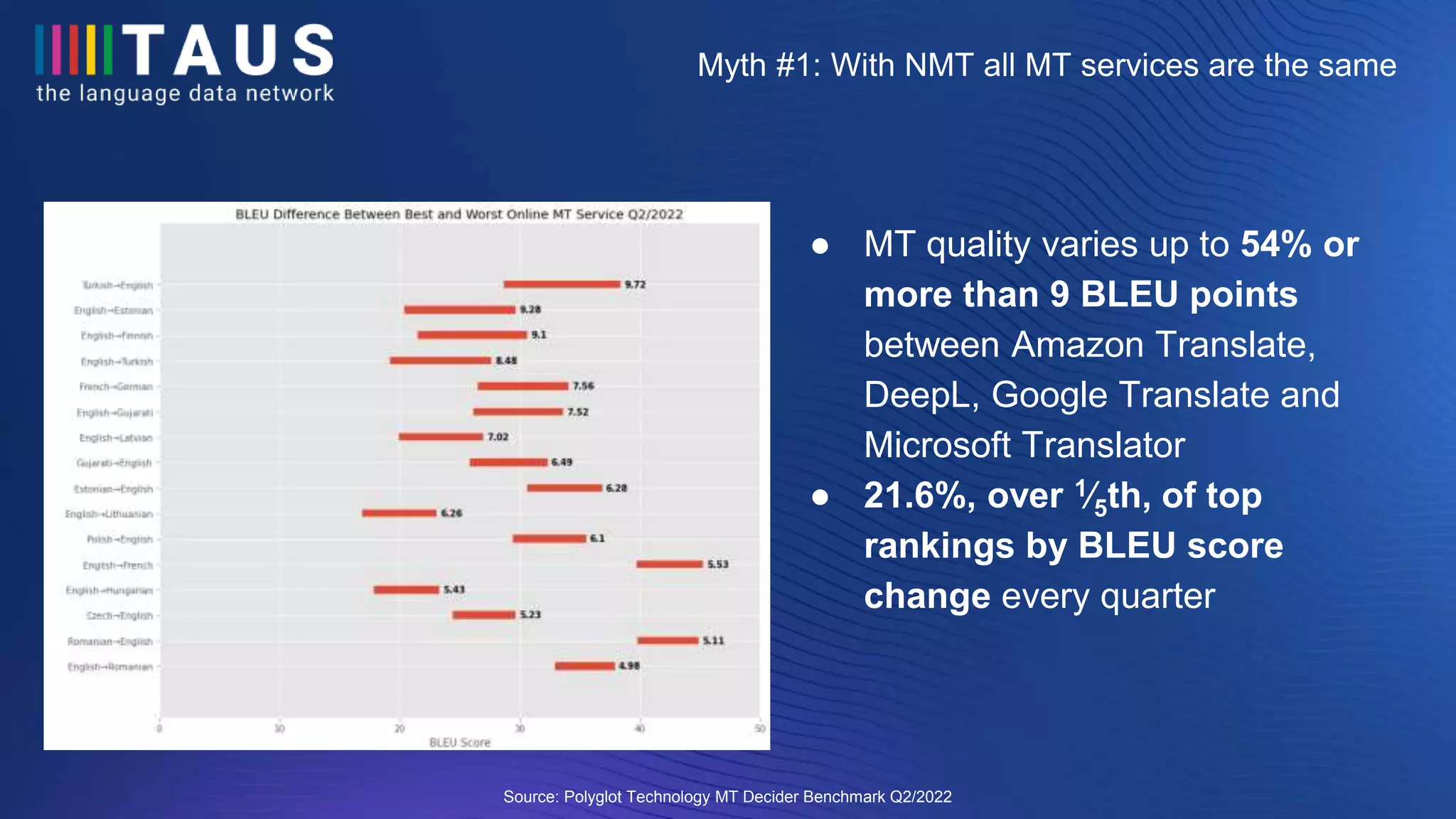

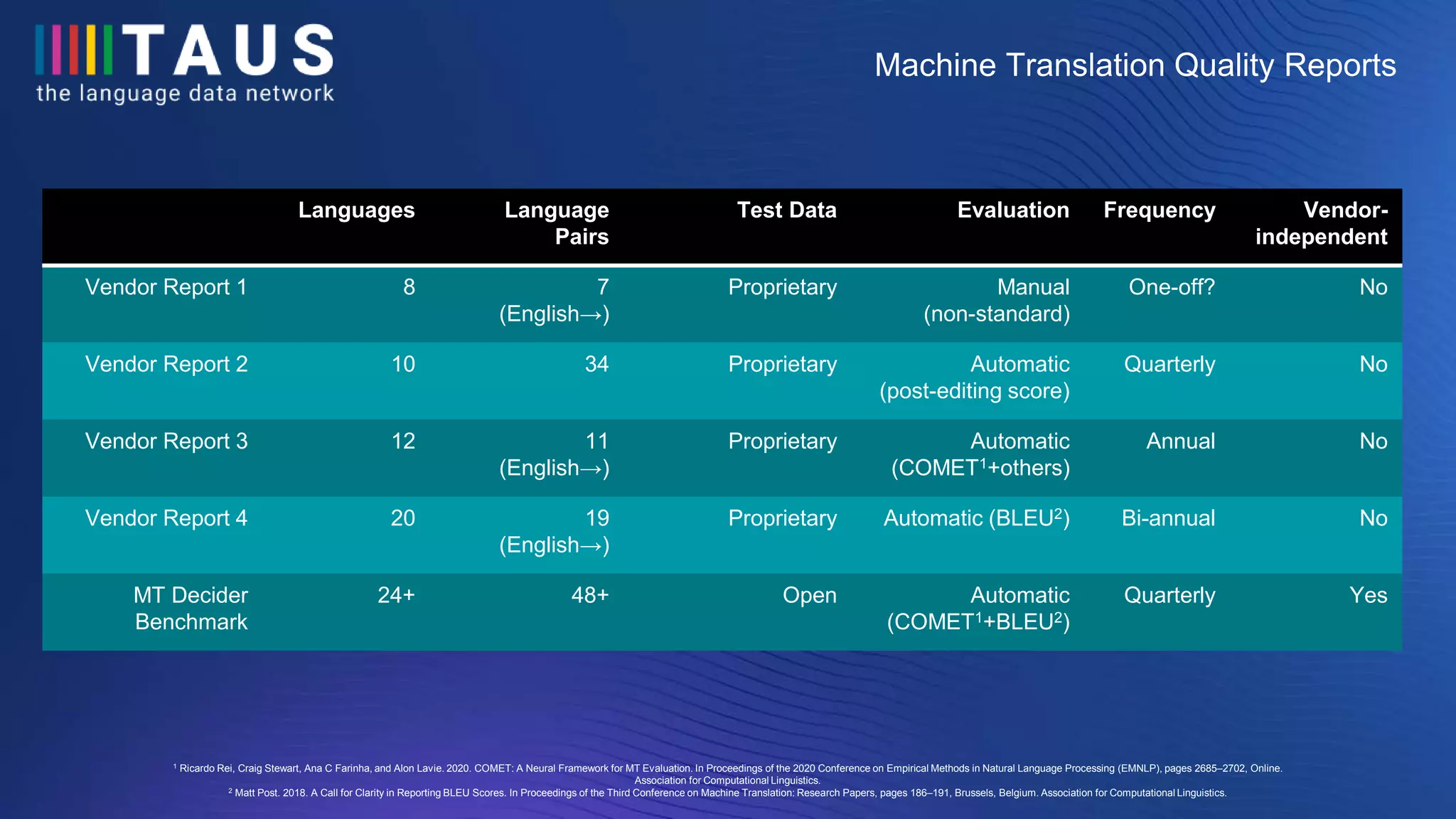

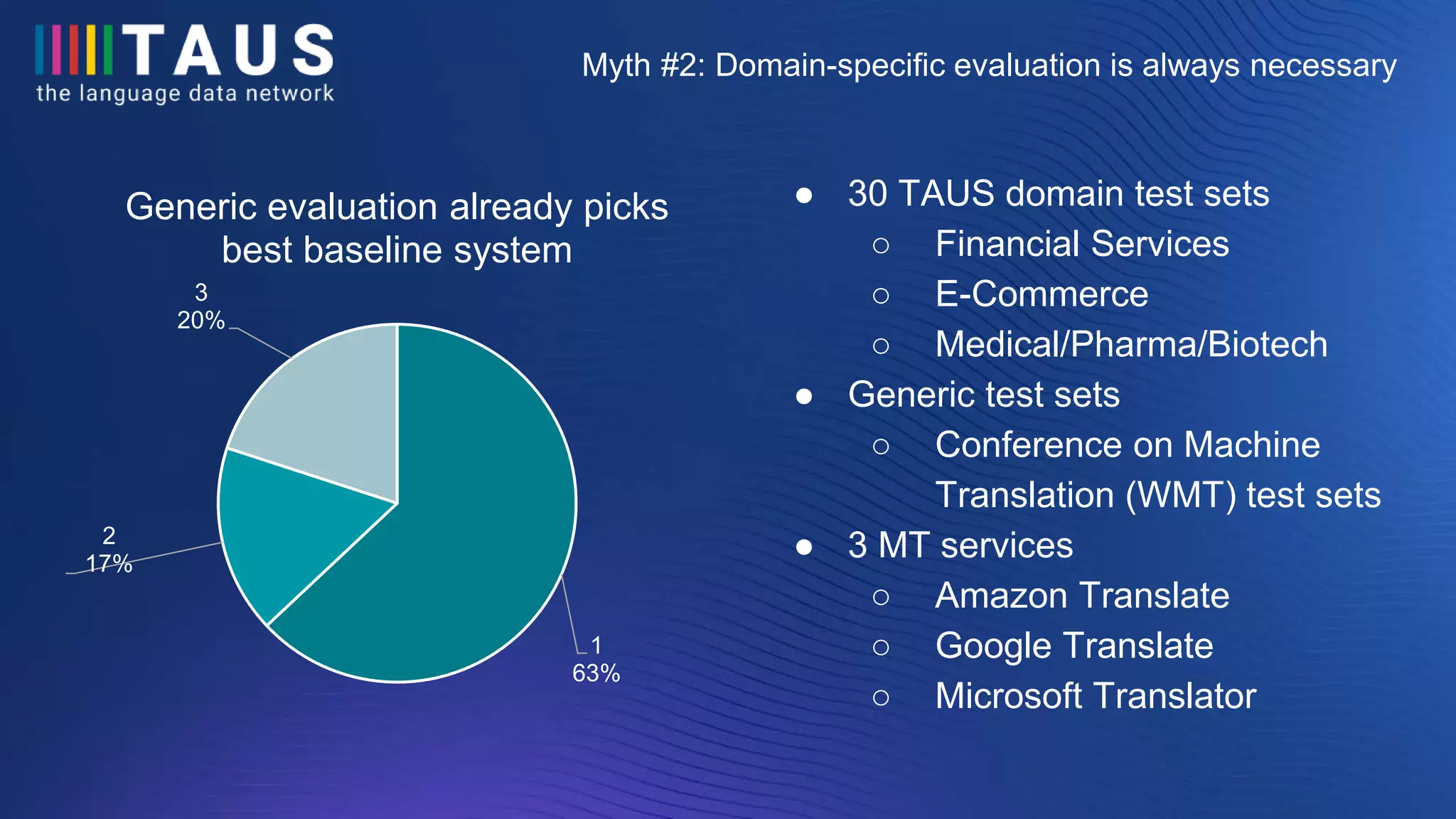

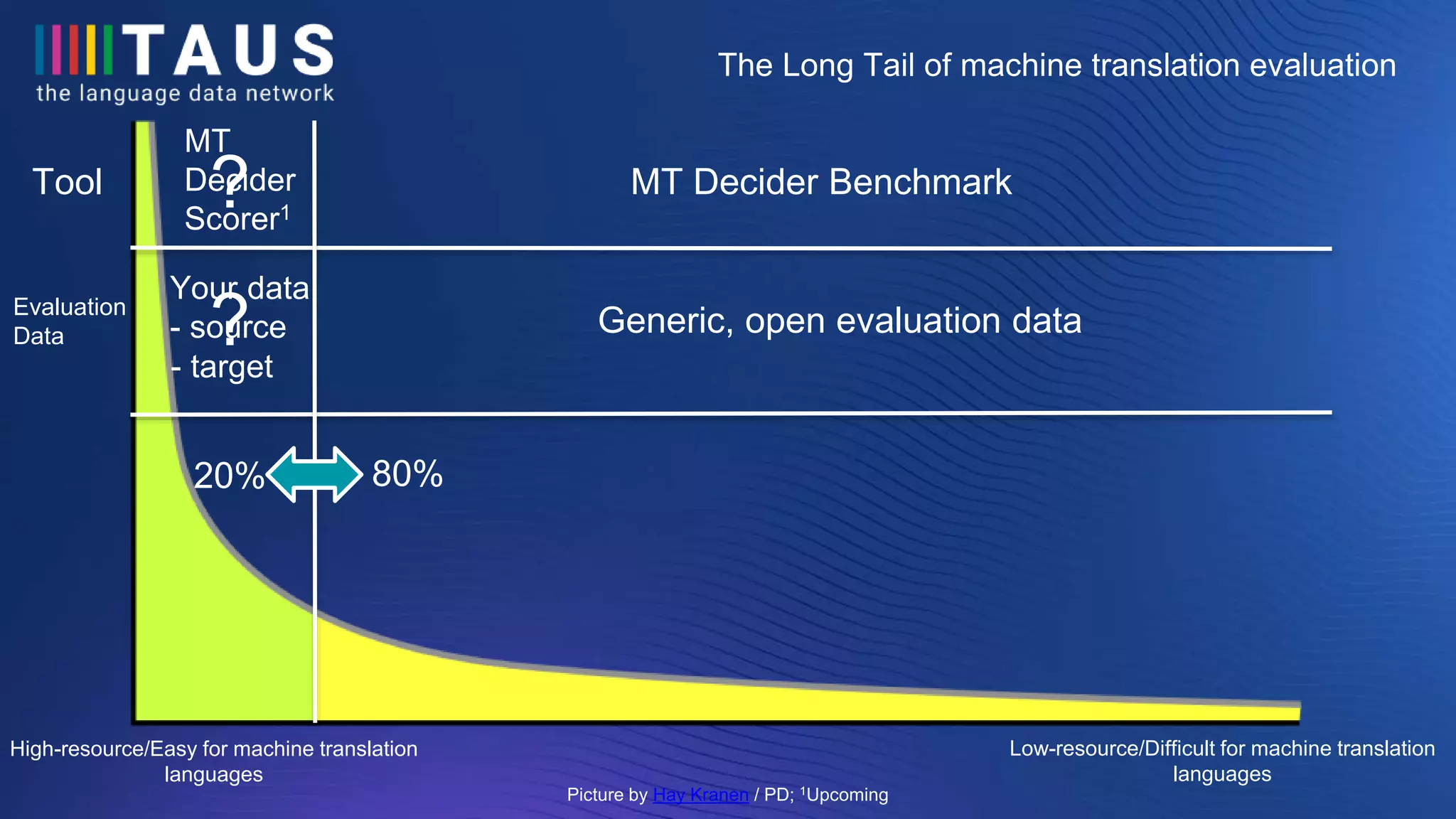

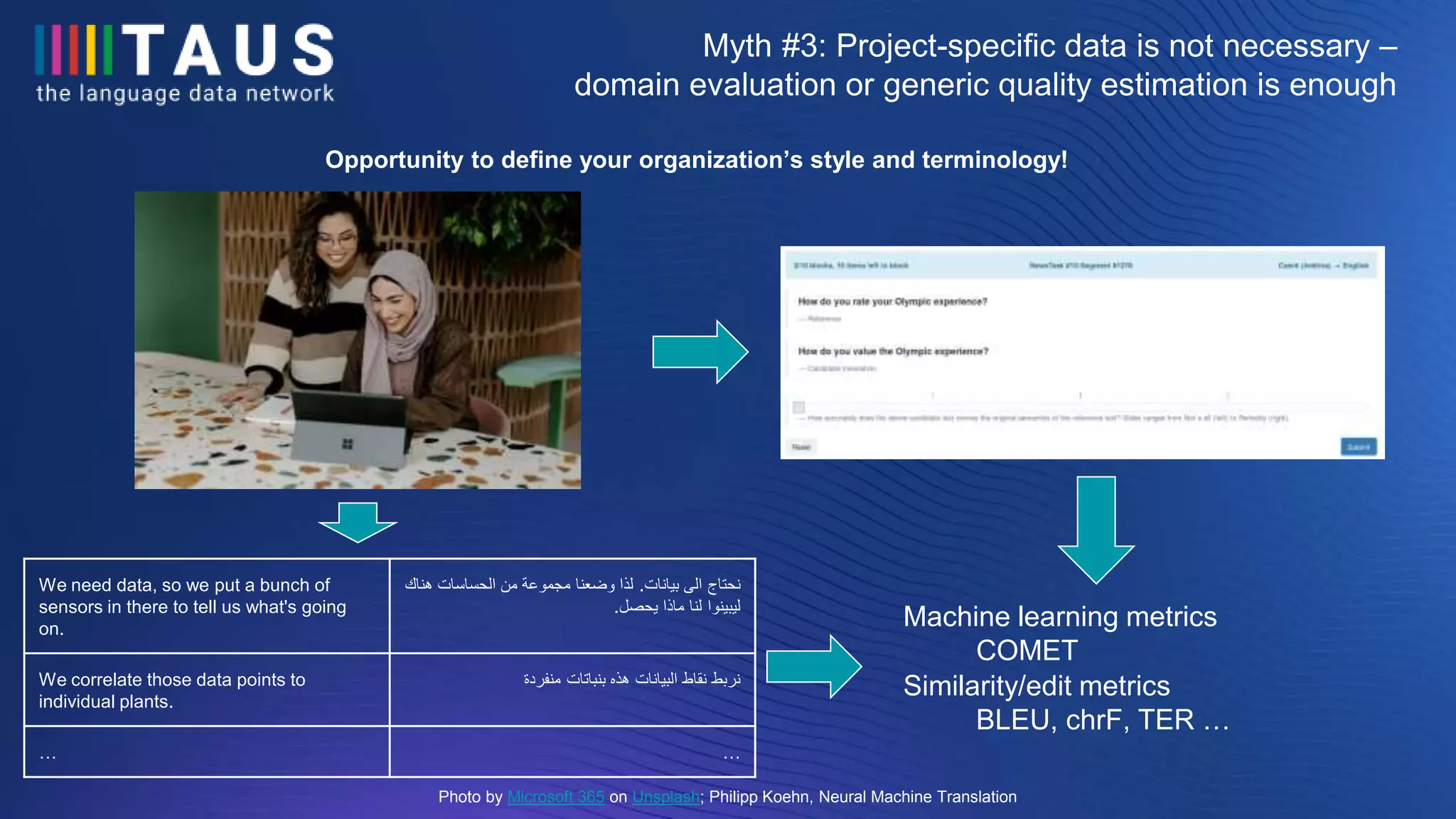

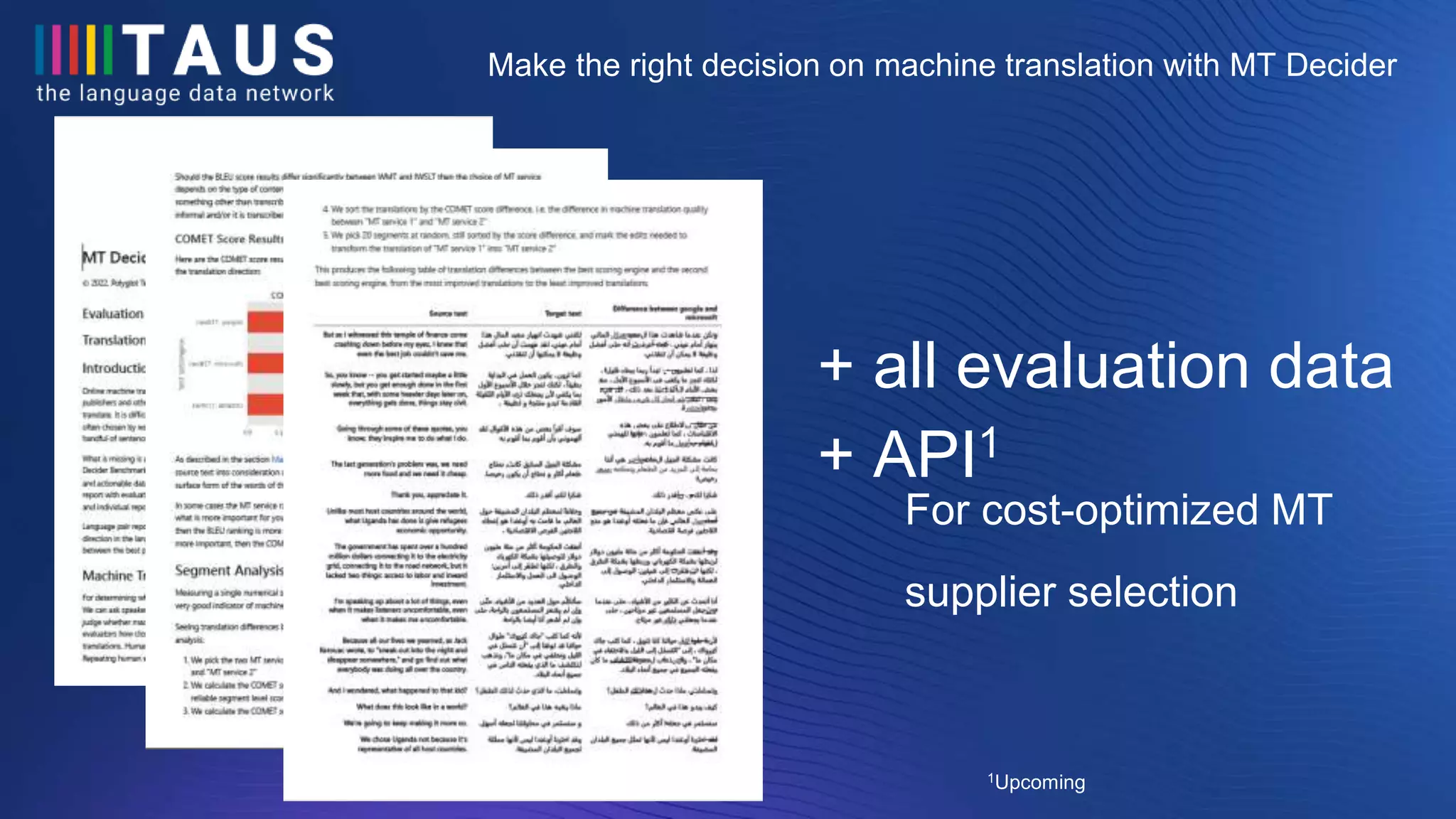

The document summarizes findings presented at a conference on machine translation (MT). Its central point is that quality varies substantially among MT services, with performance differences of up to 54%. Drawing on results from several MT evaluations, it challenges common myths about the need for domain-specific evaluation and about the supposed uniformity of neural machine translation (NMT) services. It also stresses that data tracking and quality estimation are essential for choosing an MT supplier effectively.