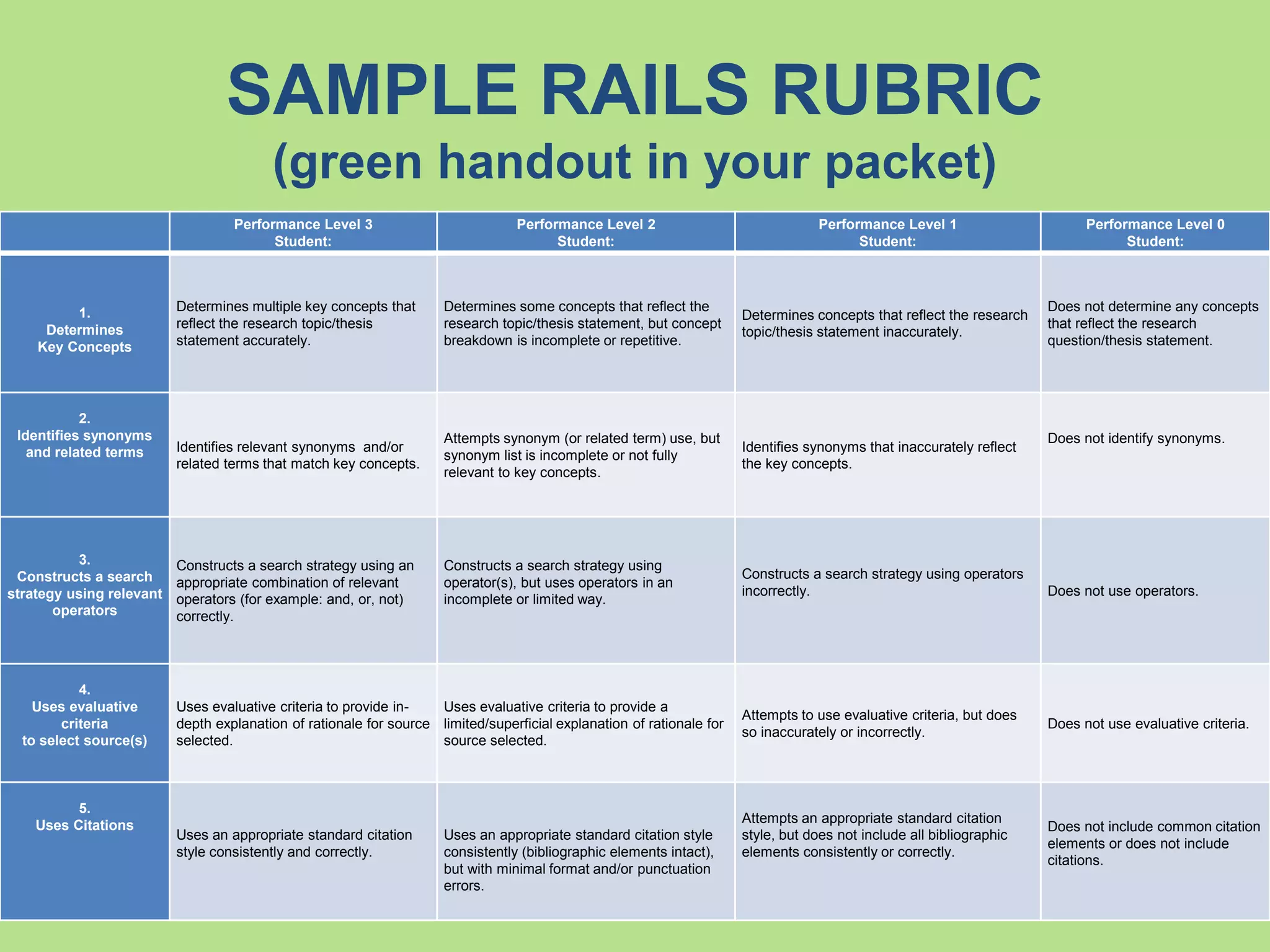

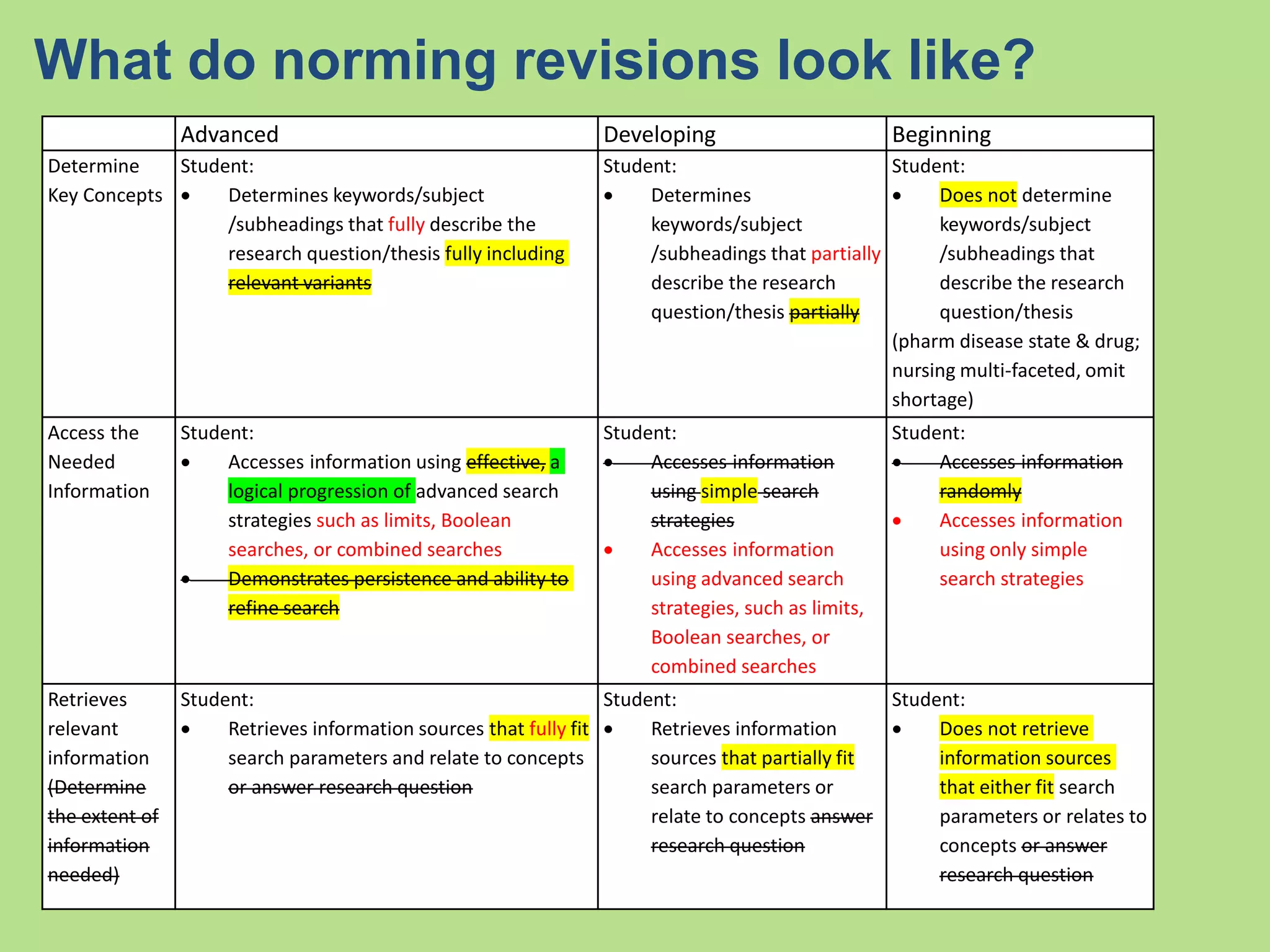

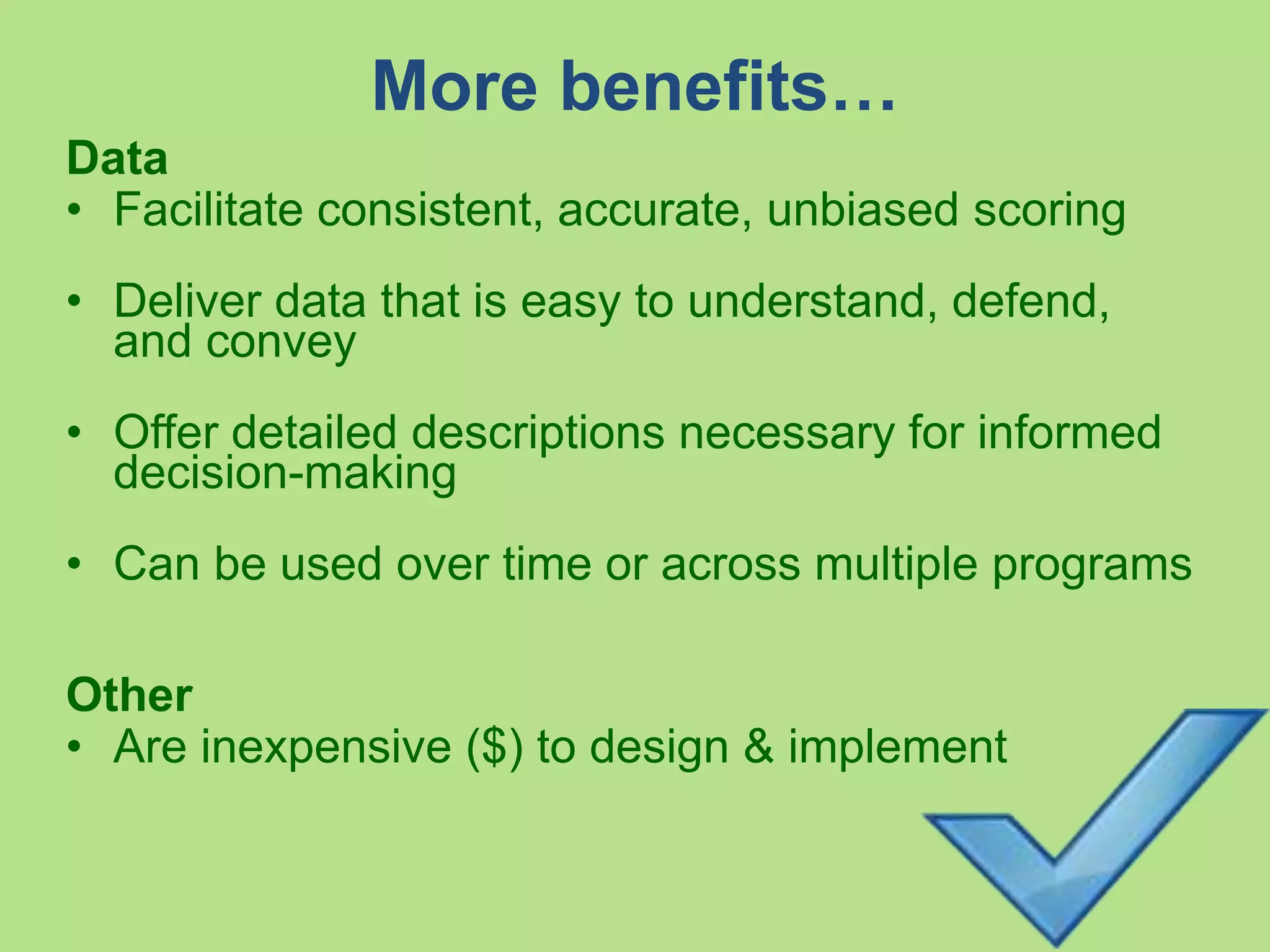

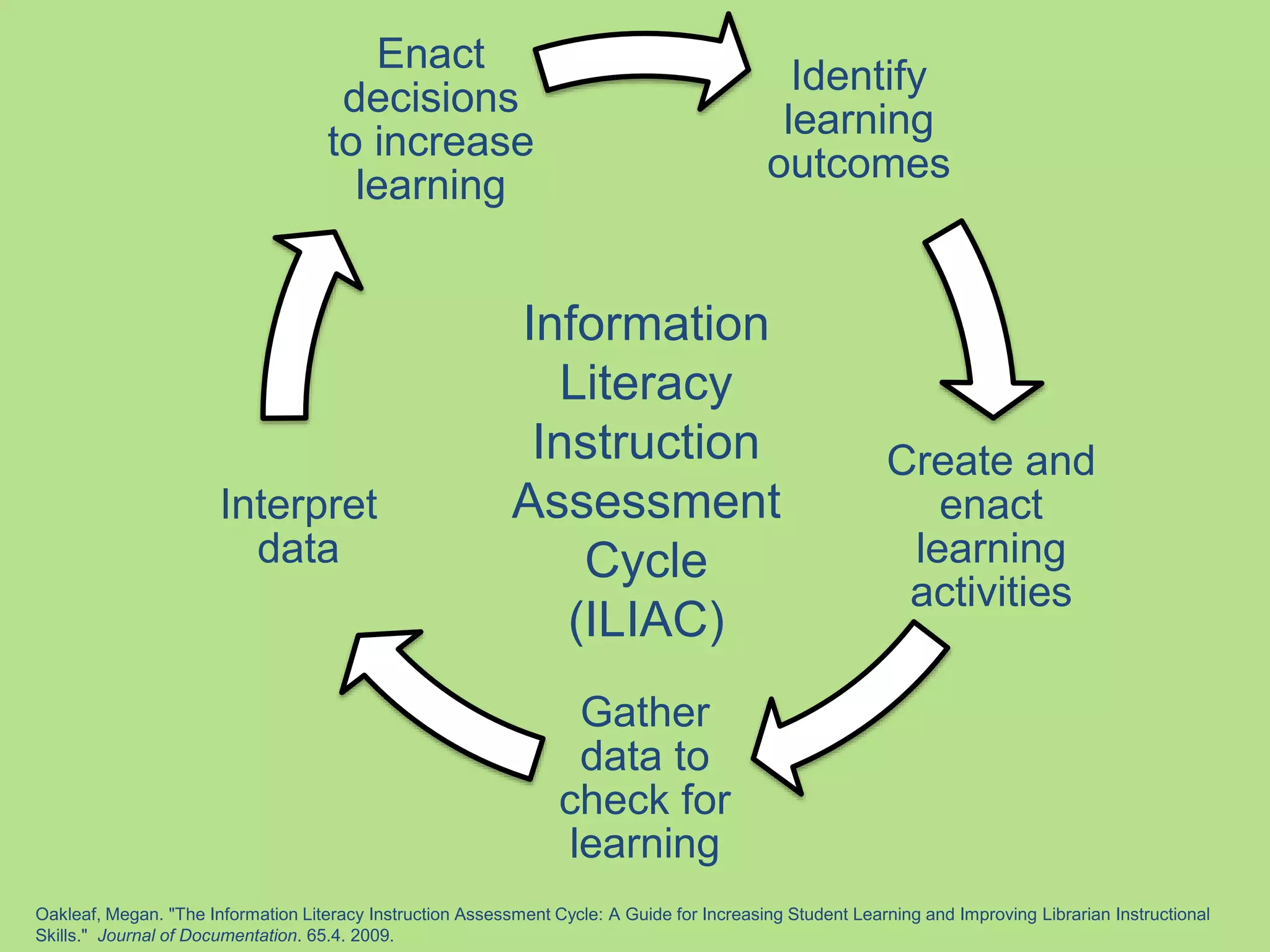

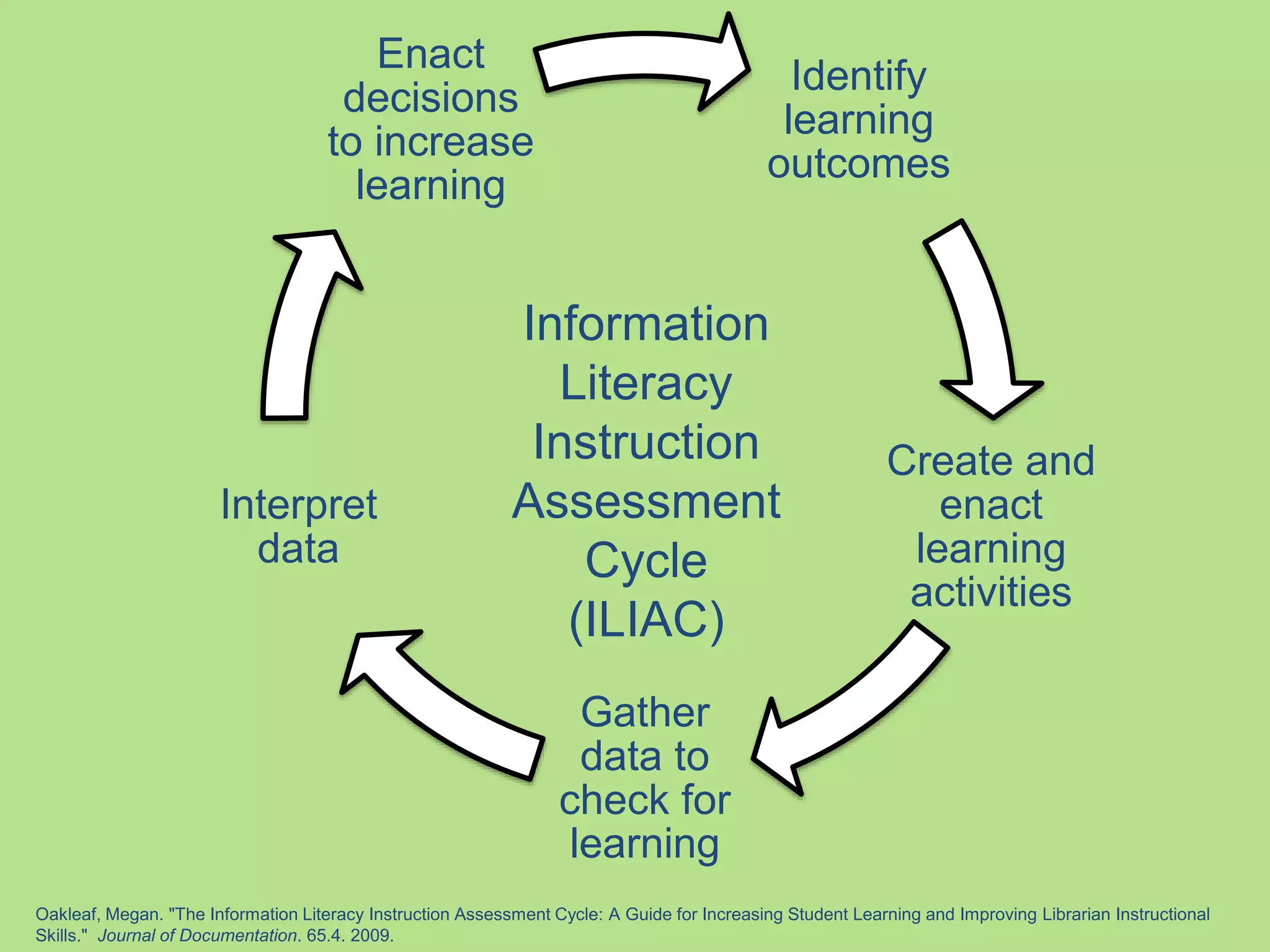

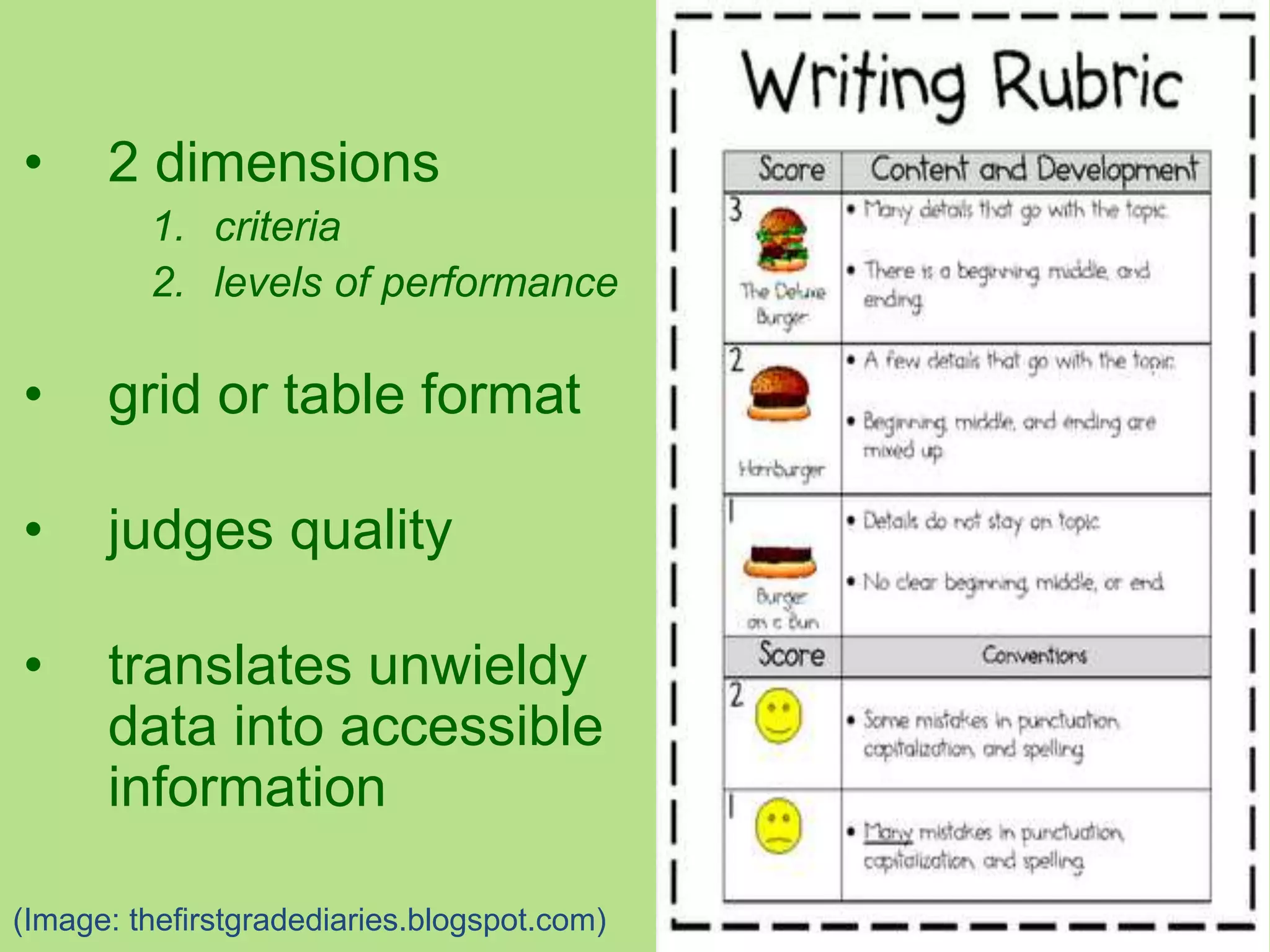

This presentation provided an overview of rubric assessment and of the RAILS (Rubric Assessment of Information Literacy Skills) project. It discussed the benefits of rubrics for learning, assessment, and data collection. It covered how to create rubrics from learning outcomes and evidence of student work, and how to norm rubrics so that raters achieve inter-rater reliability. Participants practiced norming and rating student work samples using an information literacy skills rubric. The presentation emphasized that explicitly described performance levels in a rubric are important for reliable scoring.

![Criteria](https://image.slidesharecdn.com/milexassessnorm2014-140320102309-phpapp02/75/MILEXAssessmentRubrics2014-17-2048.jpg)

Criteria:

1. “the conditions a [student] must meet to be successful” (Wiggins)
2. “the set of indicators, markers, guides, or a list of measures or qualities that will help [a scorer] know when a [student] has met an outcome” (Bresciani, Zelna & Anderson)
3. what to look for in [student] performance “to determine progress…or determine when mastery has occurred” (Arter)