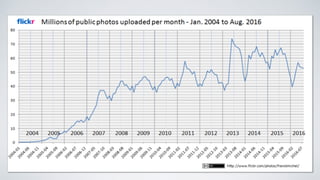

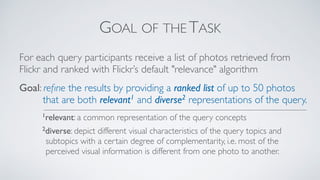

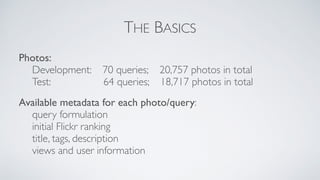

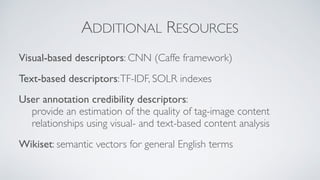

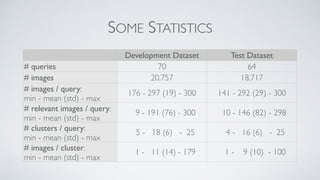

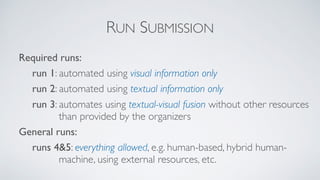

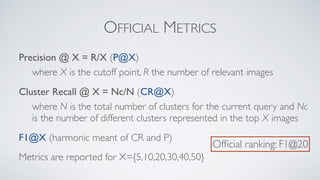

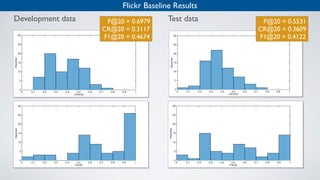

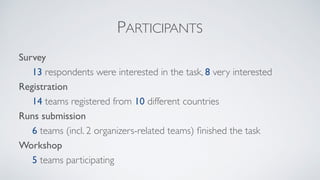

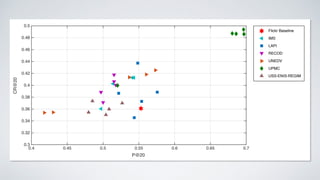

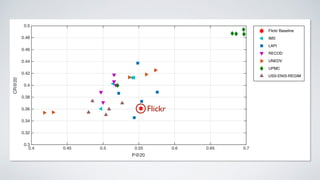

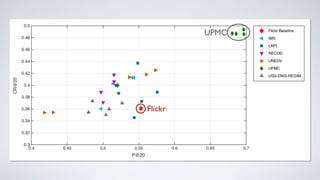

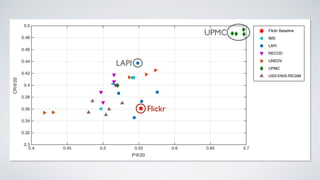

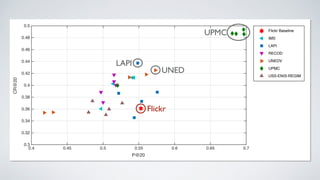

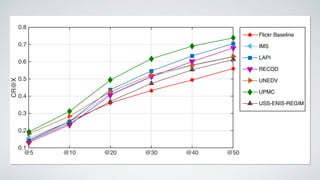

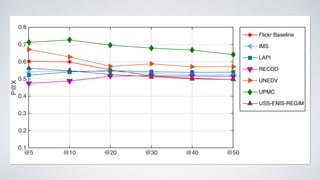

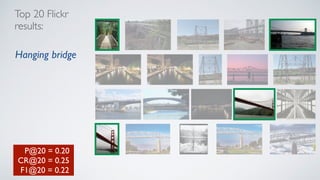

The document outlines a task aimed at improving the diversity of image search results, using photos from Flickr and involving multiple participants and queries. The primary goal is to refine a ranked list of images for general-purpose queries so that the results are both relevant and visually diverse. Evaluation uses several metrics, including precision and recall, and participating teams must meet specific submission requirements.
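
To make the evaluation metrics concrete, here is a minimal sketch of precision and recall computed at a rank cutoff for a single query. It uses the standard textbook definitions. The function names, the cutoff parameter `k`, and the example image identifiers are illustrative assumptions, not taken from the task's official evaluation tooling, which may define its metrics differently.

```python
from typing import Sequence, Set


def precision_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of the top-k positions that hold a relevant image."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for image_id in ranked[:k] if image_id in relevant)
    return hits / k


def recall_at_k(ranked: Sequence[str], relevant: Set[str], k: int) -> float:
    """Fraction of all relevant images that appear in the top-k positions."""
    if k <= 0:
        raise ValueError("k must be positive")
    if not relevant:
        return 0.0  # no relevant images exist, so nothing can be recalled
    hits = len(set(ranked[:k]) & relevant)
    return hits / len(relevant)


# Hypothetical ranked list and ground truth for one query.
ranked_images = ["img_a", "img_b", "img_c", "img_d"]
relevant_images = {"img_a", "img_c", "img_e"}

p2 = precision_at_k(ranked_images, relevant_images, k=2)
r4 = recall_at_k(ranked_images, relevant_images, k=4)
```

With this data, one of the top two images is relevant, and two of the three relevant images appear in the top four. Neither measure captures visual diversity on its own, so a diversity-oriented evaluation would need an additional or adapted metric.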