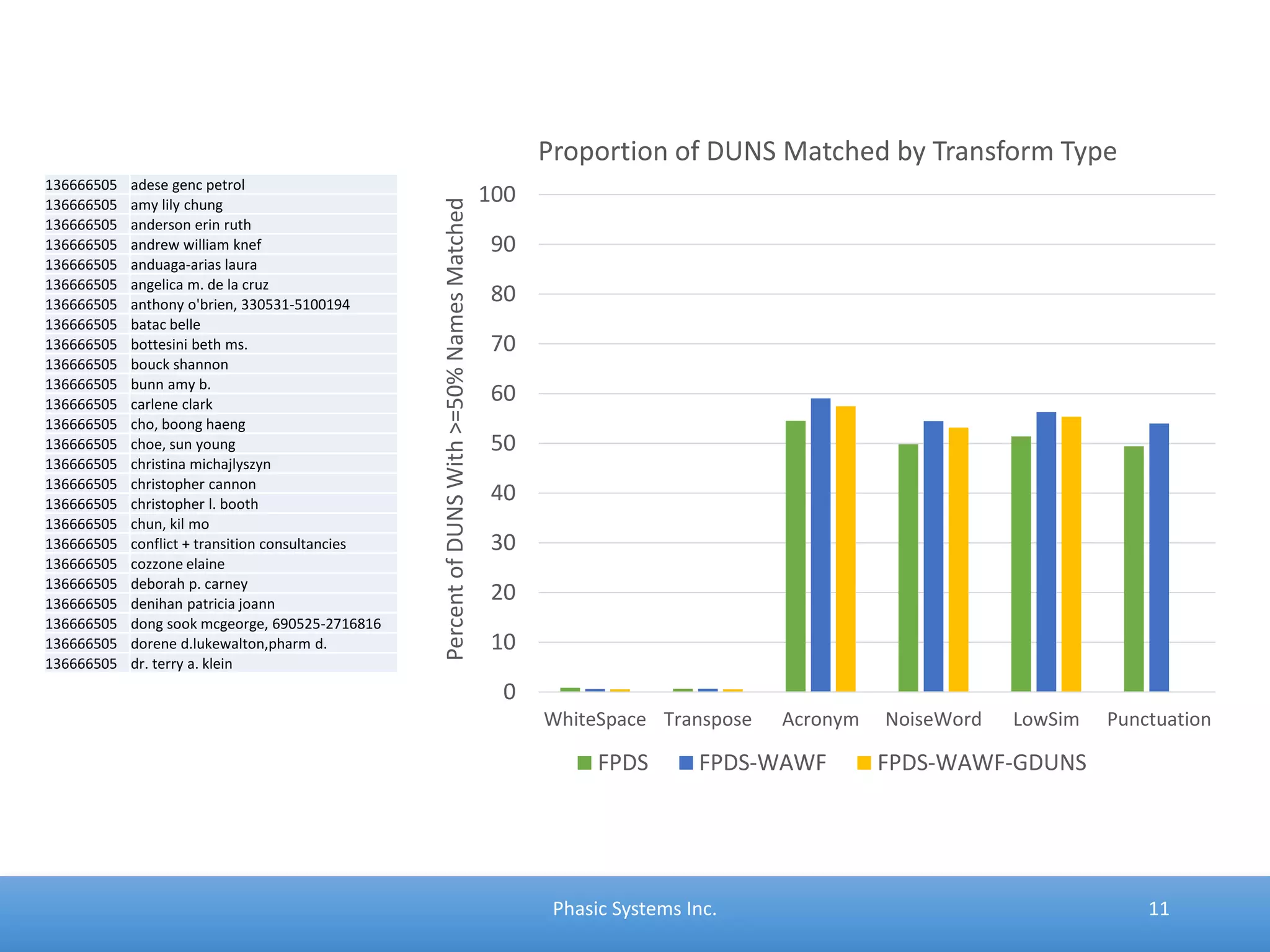

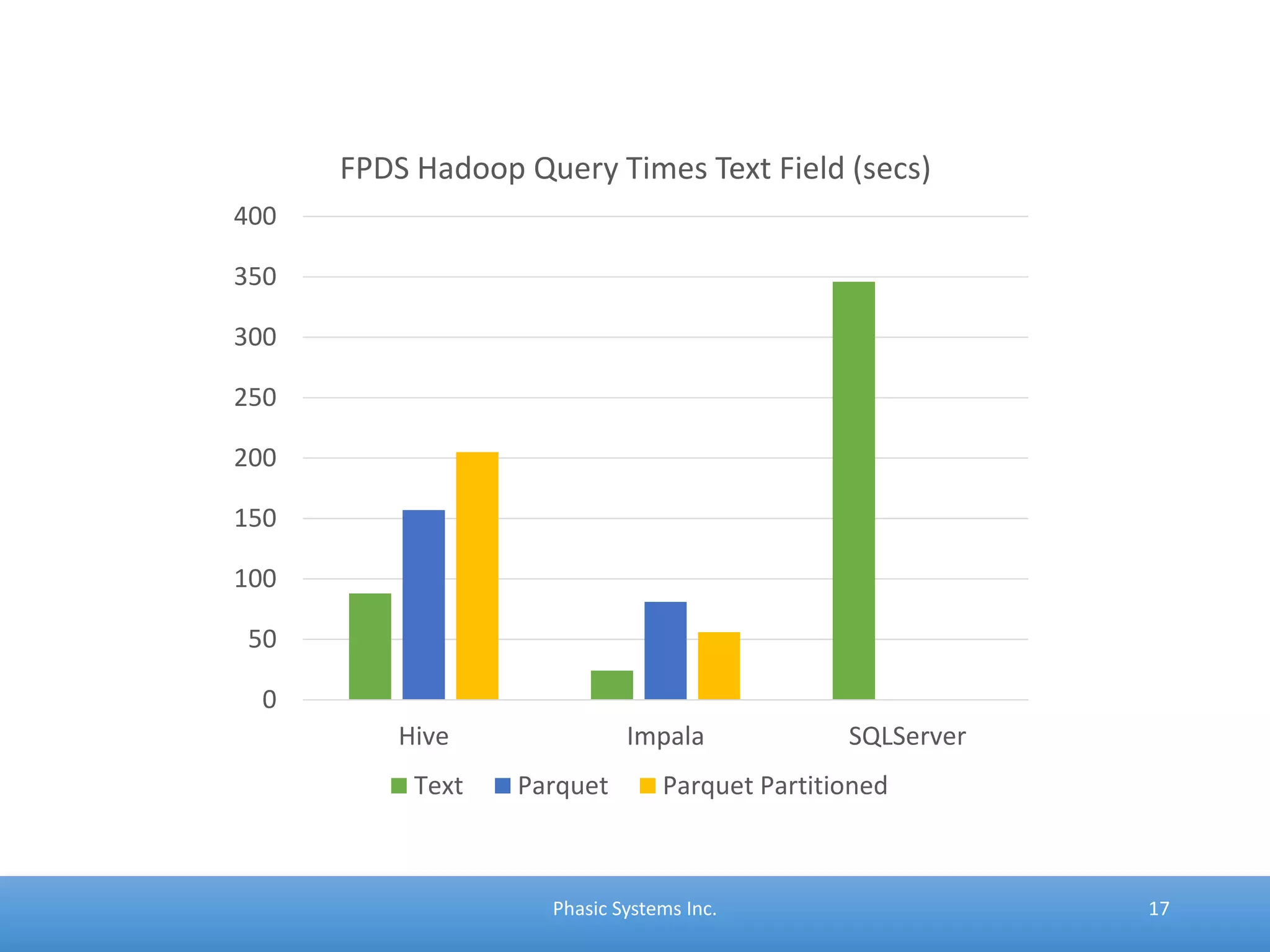

This document discusses how Hadoop can help with the challenges of "small data": the structured corporate data that is critical to a company's main business activities. Because small data typically comes from multiple sources with differing definitions, it is prone to inconsistencies. The document proposes using Hadoop to detect errors and inconsistencies iteratively so that the data can be normalized. Normalization combines subject knowledge with rules to make small data more consistent and more meaningful for analysis. The document argues that Hadoop provides a flexible, fast environment for data analytics that supports understanding, preparing, and maintaining this small but critical corporate data.
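To make the detect-then-normalize loop more concrete, here is a minimal sketch in plain Python. It is not Hadoop code and is not taken from the document: the record layout, the source names (`crm`, `billing`), the `COUNTRY_RULES` table, and both helper functions are hypothetical, chosen only to illustrate how rules derived from subject knowledge might reduce inconsistencies between sources, and how the remaining mismatches feed the next iteration.

```python
from collections import defaultdict

# Hypothetical records describing the same customers in two source systems.
RECORDS = [
    {"source": "crm", "customer_id": "C001", "country": "USA"},
    {"source": "billing", "customer_id": "C001", "country": "United States"},
    {"source": "crm", "customer_id": "C002", "country": "DE"},
    {"source": "billing", "customer_id": "C002", "country": "Germany"},
    {"source": "crm", "customer_id": "C003", "country": "France"},
    {"source": "billing", "customer_id": "C003", "country": "Frnace"},
]

# Assumed rule table: subject knowledge encoded as a mapping from
# lower-cased source spellings to one canonical code.
COUNTRY_RULES = {
    "usa": "US",
    "united states": "US",
    "de": "DE",
    "germany": "DE",
    "france": "FR",
}


def normalize(record, rules):
    """Return a copy of the record with its country mapped to the canonical code.

    Values that no rule covers are left unchanged so they stay visible
    in the next detection pass.
    """
    key = record["country"].strip().lower()
    return {**record, "country": rules.get(key, record["country"])}


def find_inconsistencies(records):
    """Return customers whose country value differs between records."""
    values = defaultdict(set)
    for record in records:
        values[record["customer_id"]].add(record["country"])
    return {cid: sorted(vals) for cid, vals in values.items() if len(vals) > 1}


# Pass 1: detect inconsistencies in the raw data.
before = find_inconsistencies(RECORDS)

# Pass 2: apply the current rules, then detect again.
normalized = [normalize(record, COUNTRY_RULES) for record in RECORDS]
after = find_inconsistencies(normalized)

print("inconsistent before:", sorted(before))
print("inconsistent after:", after)
```

In this sketch the misspelled value for `C003` survives the second pass because no rule covers it yet. A subject-matter expert would then add a rule or correct the source, and the loop would run again. On a Hadoop cluster the same grouping by key would more plausibly be expressed as a map and reduce step over much larger inputs.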