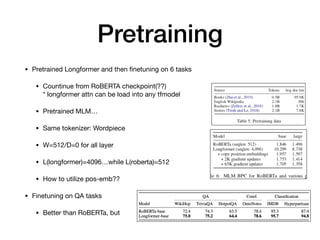

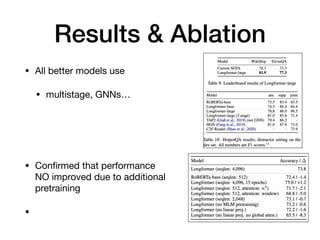

The Longformer model introduces an efficient attention mechanism that allows transformer models to process longer sequences than previous approaches. Longformer uses sliding window attention and dilated attention to attend to a subset of context positions while still enabling attention to positions far from the query. Experiments show Longformer outperforms RoBERTa on character-level language modeling tasks and achieves better results on downstream question answering tasks when finetuned from a Longformer pretraining compared to a RoBERTa pretraining.