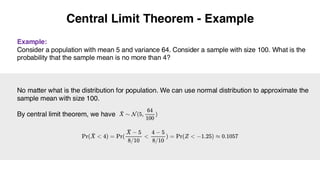

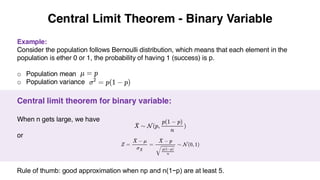

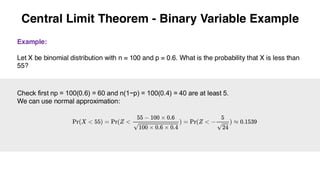

The document discusses statistical concepts essential for business decisions, focusing on the Central Limit Theorem and the Law of Large Numbers. It explains why sampling matters, describes the properties of the sample mean, and shows how to estimate population parameters from samples. It also covers how sample size affects the variance of the sample mean and the shape of its distribution, concluding that for large samples the distribution of the sample mean is approximately normal.
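
The effect of sample size on the sample mean can be illustrated with a small simulation. The sketch below is not taken from the document: the exponential population (mean 1, variance 1), the sample sizes, the number of trials, and the helper name `simulate_sample_means` are all assumptions chosen for illustration. It draws repeated samples and records the average and the variance of the resulting sample means, which theory says should be close to the population mean and to the population variance divided by the sample size.

```python
import random
import statistics


def simulate_sample_means(n, trials, rng):
    """Return `trials` sample means, each computed from `n` draws.

    Assumption for illustration: the population is exponential with
    rate 1.0, so its mean is 1 and its variance is 1. It is skewed,
    which makes the behaviour of the sample mean easier to notice.
    """
    return [
        statistics.fmean(rng.expovariate(1.0) for _ in range(n))
        for _ in range(trials)
    ]


rng = random.Random(0)  # fixed seed so the run is repeatable
results = {}

for n in (4, 16, 64):  # hypothetical sample sizes
    means = simulate_sample_means(n, trials=2000, rng=rng)
    avg_of_means = statistics.fmean(means)     # should be near 1
    var_of_means = statistics.variance(means)  # should be near 1 / n
    results[n] = (avg_of_means, var_of_means)
    print(f"n={n:3d}  mean of means={avg_of_means:.3f}  "
          f"variance of means={var_of_means:.4f}  theory={1 / n:.4f}")
```

The average of the sample means staying close to the population mean reflects the Law of Large Numbers, and the variance shrinking roughly in proportion to 1/n is the sample-size effect the summary refers to. Plotting a histogram of `means` for each `n` would show the distribution becoming more symmetric and bell-shaped as `n` grows, which is the Central Limit Theorem at work.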