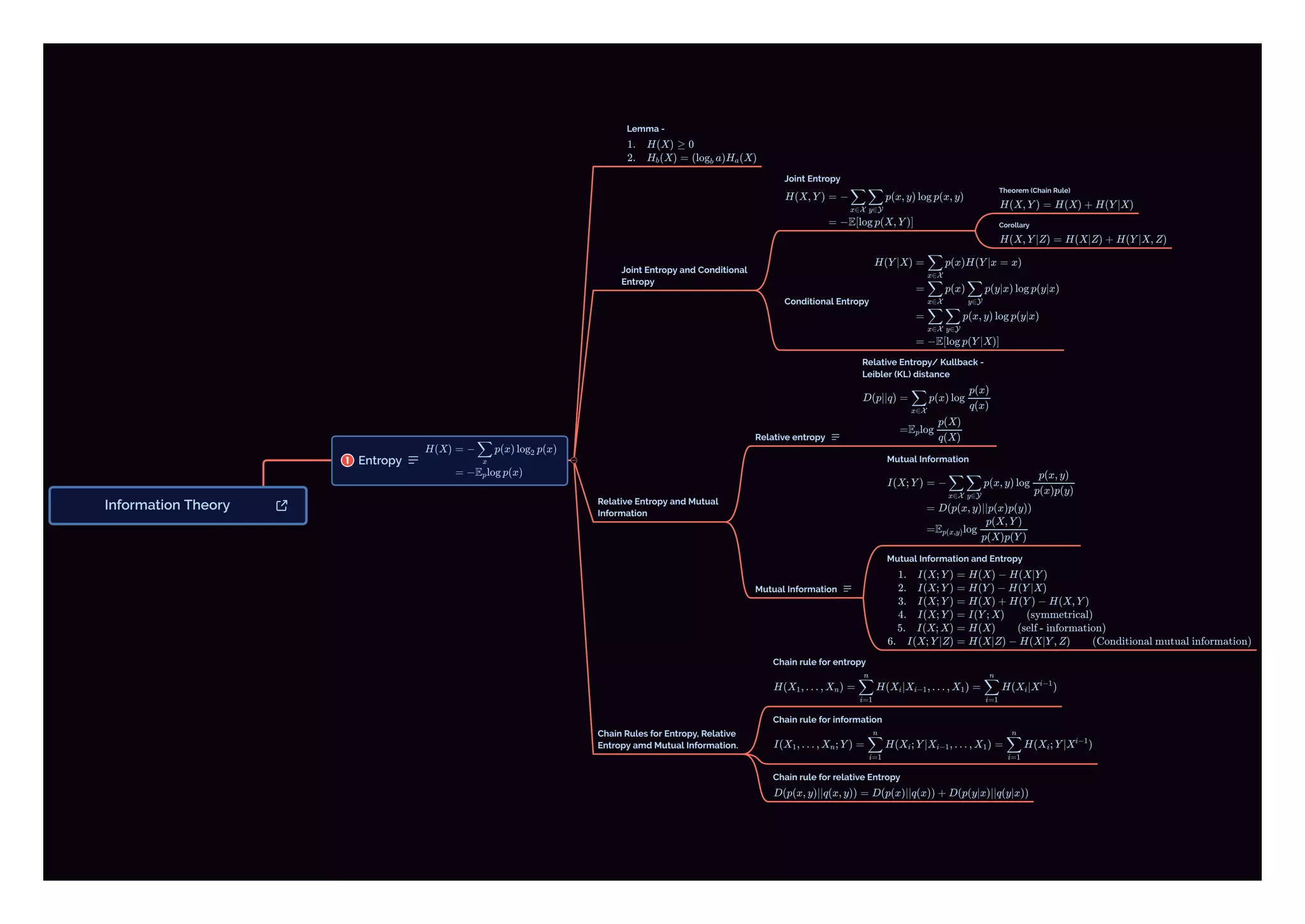

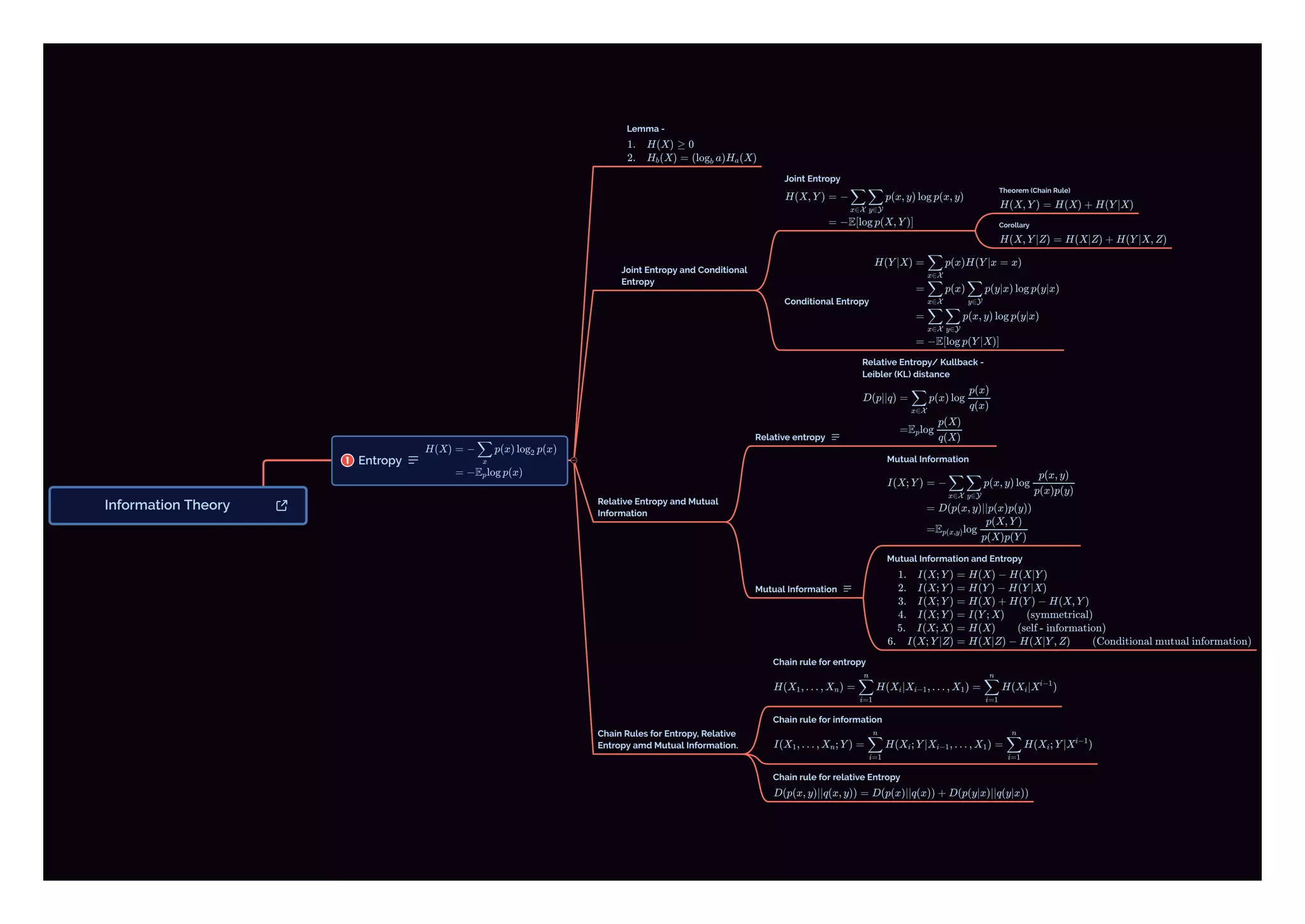

The presentation covers key concepts in information theory: entropy, joint and conditional entropy, and the chain rules that relate them. It then discusses relative entropy, also known as the Kullback-Leibler distance (or divergence), and mutual information, stating the theorems and corollaries associated with each. Overall, it gives a foundational overview of how these quantities relate to one another.
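To make these relationships concrete, here is a minimal sketch. It is not taken from the presentation. It computes each quantity in bits for a small joint distribution of two binary variables. The helper names `entropy` and `relative_entropy`, and the distribution itself, are illustrative assumptions. The sketch checks two standard identities. The first is the chain rule $H(X,Y) = H(X) + H(Y \mid X)$. The second expresses mutual information as a relative entropy: $I(X;Y) = D\big(p(x,y) \,\|\, p(x)p(y)\big) = H(Y) - H(Y \mid X)$.

```python
import math

def entropy(p):
    """Shannon entropy in bits of a distribution given as a list of probabilities."""
    # Terms with probability 0 contribute 0 by convention (0 log 0 = 0).
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def relative_entropy(p, q):
    """Relative entropy D(p || q) in bits; assumes q[i] > 0 wherever p[i] > 0."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical joint distribution p(x, y): rows index X, columns index Y.
joint = [[0.25, 0.25],
         [0.50, 0.00]]

px = [sum(row) for row in joint]            # marginal p(x)
py = [sum(col) for col in zip(*joint)]      # marginal p(y)
flat = [pxy for row in joint for pxy in row]

h_xy = entropy(flat)                        # joint entropy H(X, Y)
h_x = entropy(px)                           # H(X)
h_y = entropy(py)                           # H(Y)

# Conditional entropy H(Y | X) = sum over x of p(x) * H(Y | X = x)
h_y_given_x = sum(
    px[i] * entropy([pxy / px[i] for pxy in joint[i]])
    for i in range(len(px)) if px[i] > 0
)

# Mutual information as relative entropy between p(x, y) and p(x) p(y)
product = [px[i] * py[j] for i in range(len(px)) for j in range(len(py))]
mi = relative_entropy(flat, product)

print(f"H(X,Y) = {h_xy:.3f}")               # 1.500
print(f"H(X) + H(Y|X) = {h_x + h_y_given_x:.3f}")  # 1.500 (chain rule)
print(f"I(X;Y) = {mi:.3f}")                 # 0.311
print(f"H(Y) - H(Y|X) = {h_y - h_y_given_x:.3f}")  # 0.311
```

Computing mutual information through `relative_entropy` rather than from entropy differences means the two printed values of $I(X;Y)$ come from independent routes. Their agreement is a small numerical check of the identity. It is also nonnegative, as the information inequality requires.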