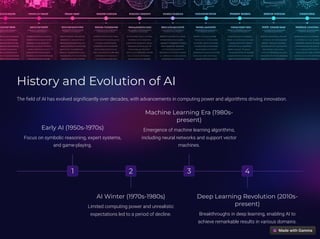

Artificial intelligence (AI) refers to the simulation of human intelligence processes by computers, enabling them to perform tasks such as learning, problem-solving, and decision-making. The document traces the history of AI from early work on symbolic reasoning to recent advances in machine learning and deep learning, which have transformed the field. Fundamental AI techniques include supervised learning, unsupervised learning, reinforcement learning, and knowledge representation.