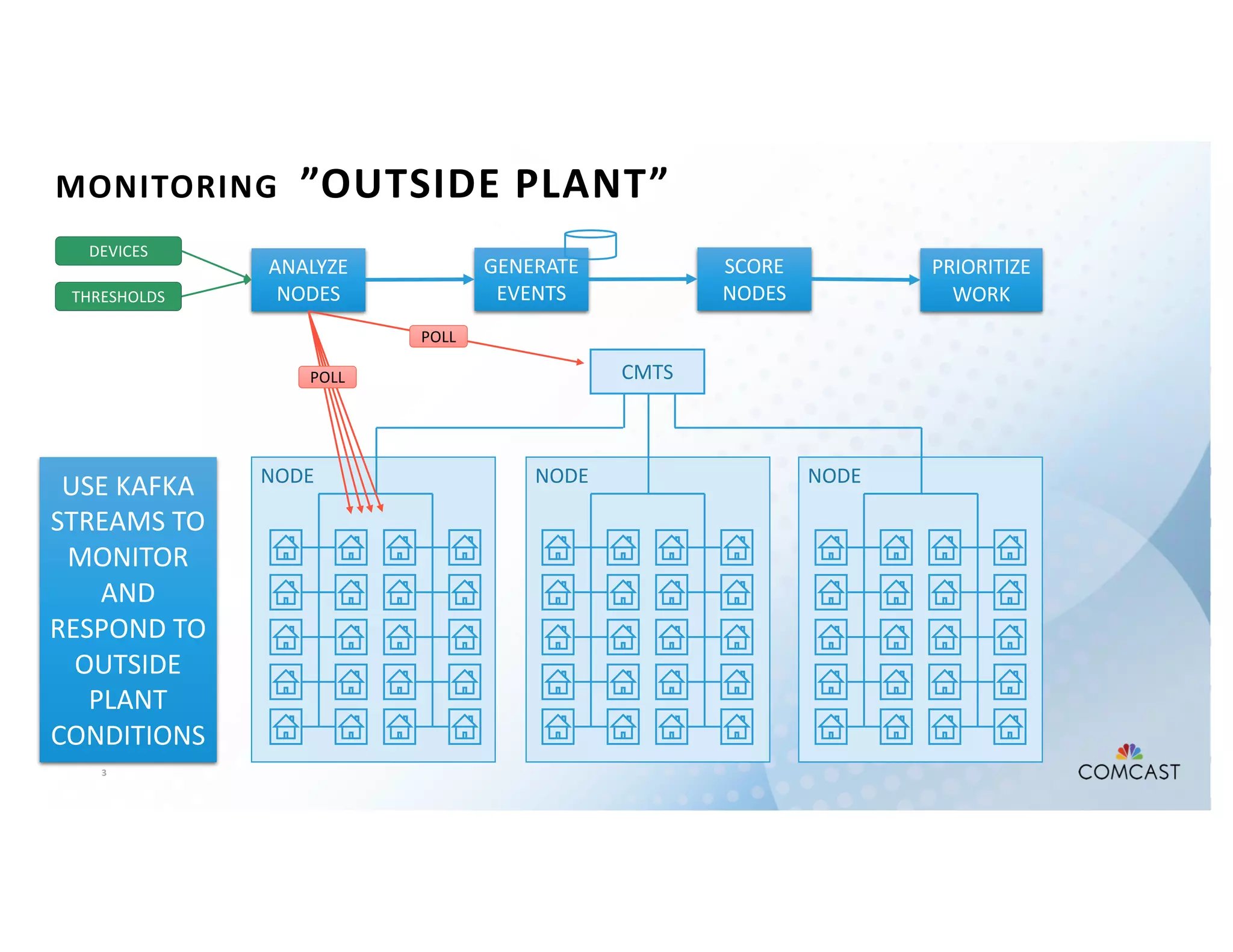

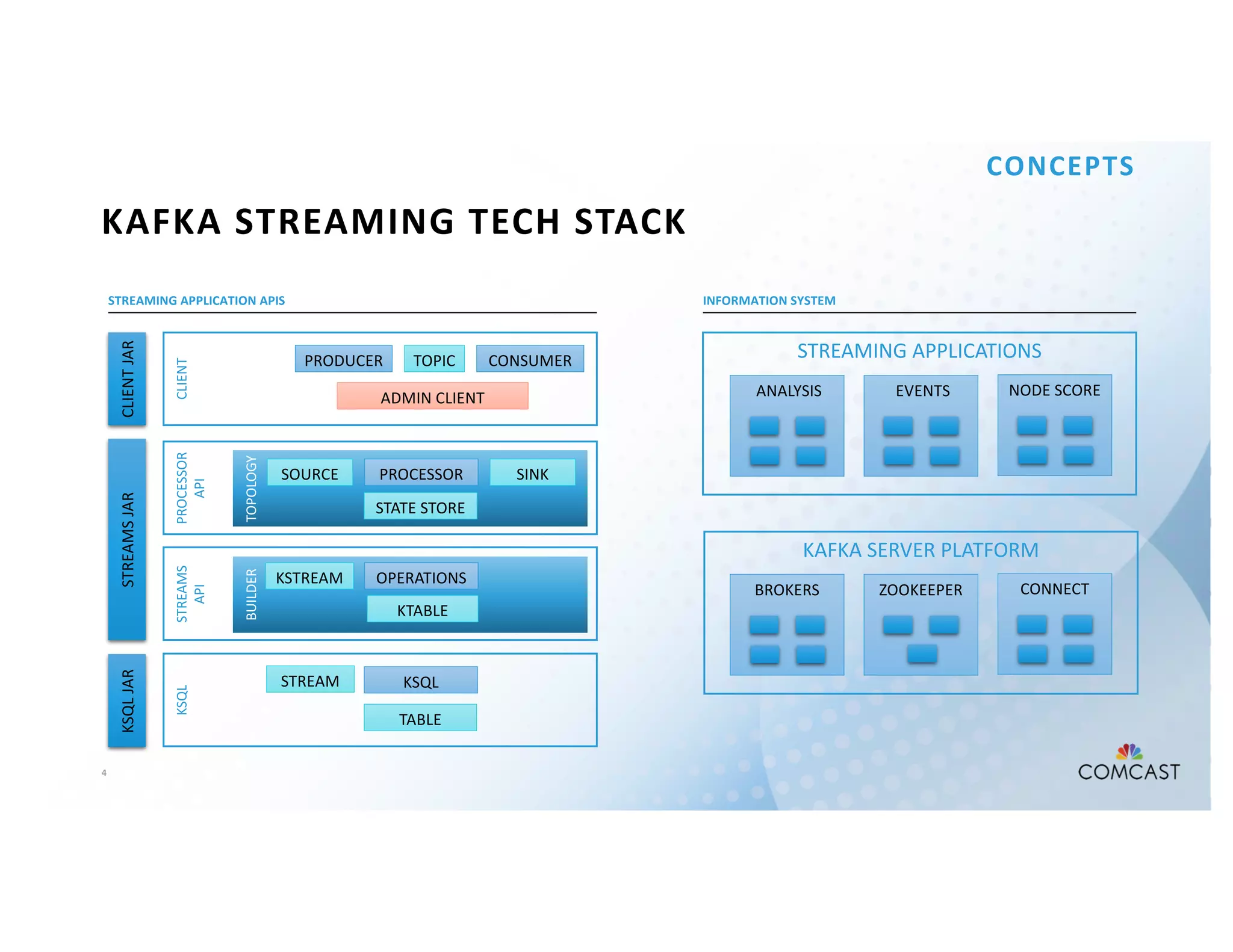

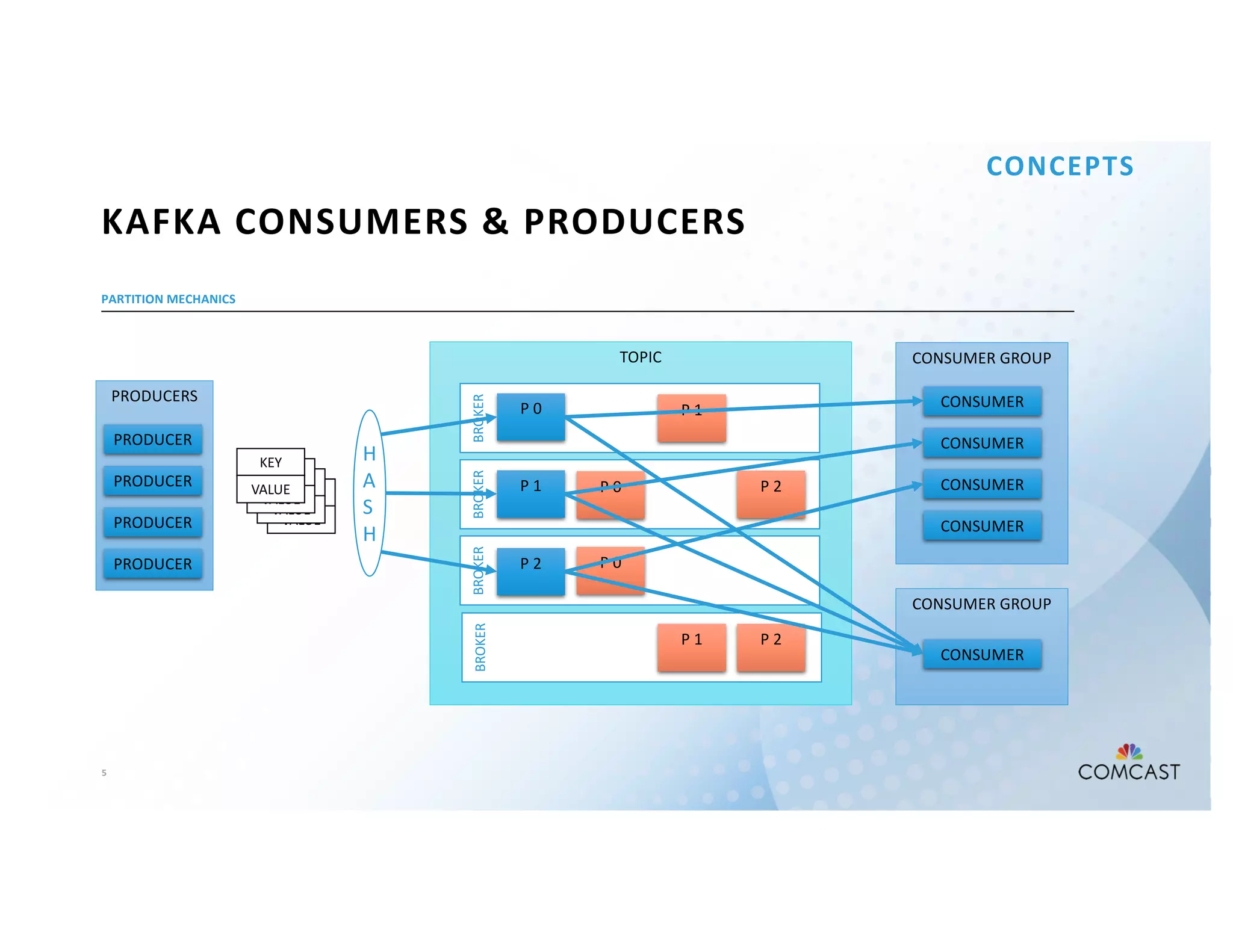

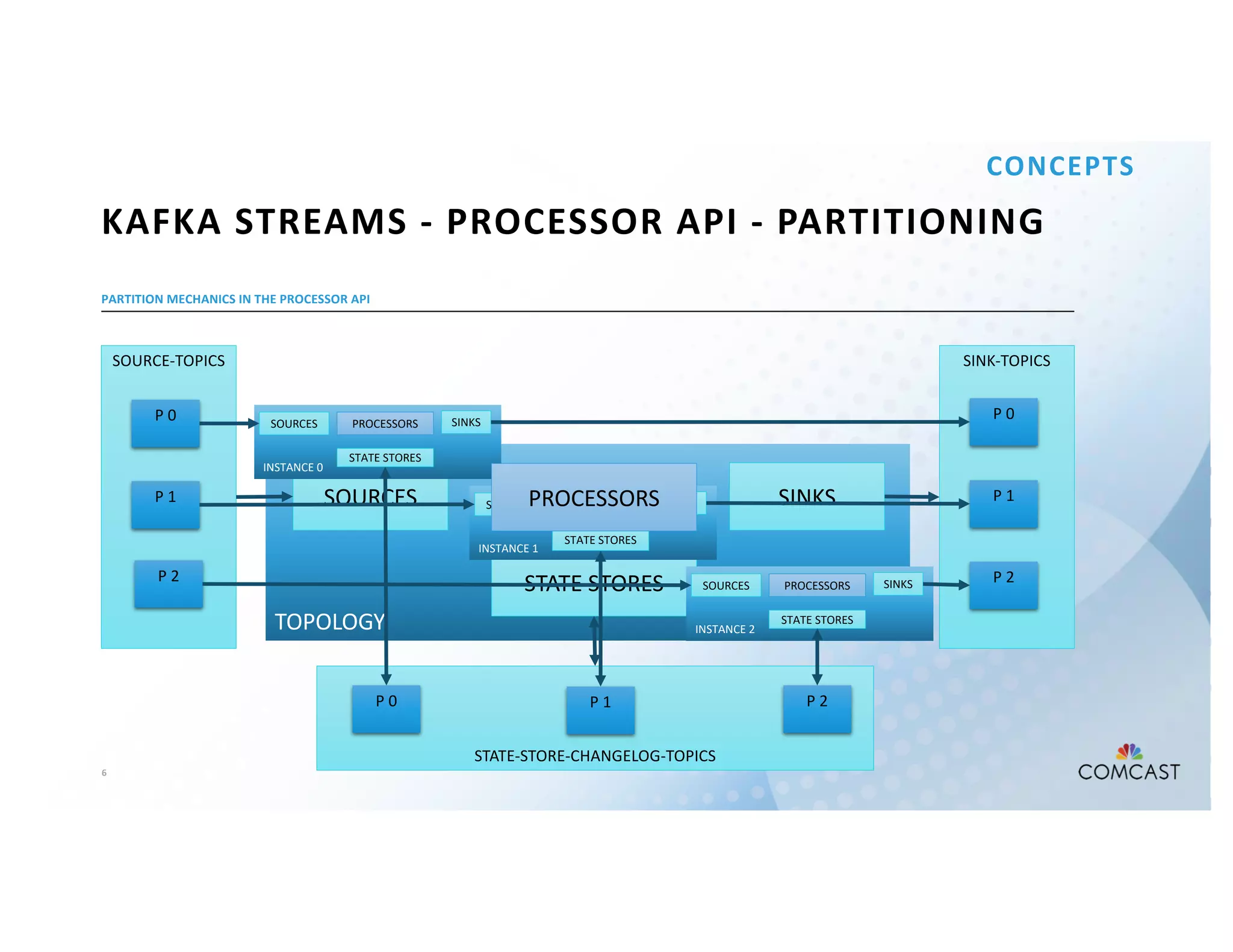

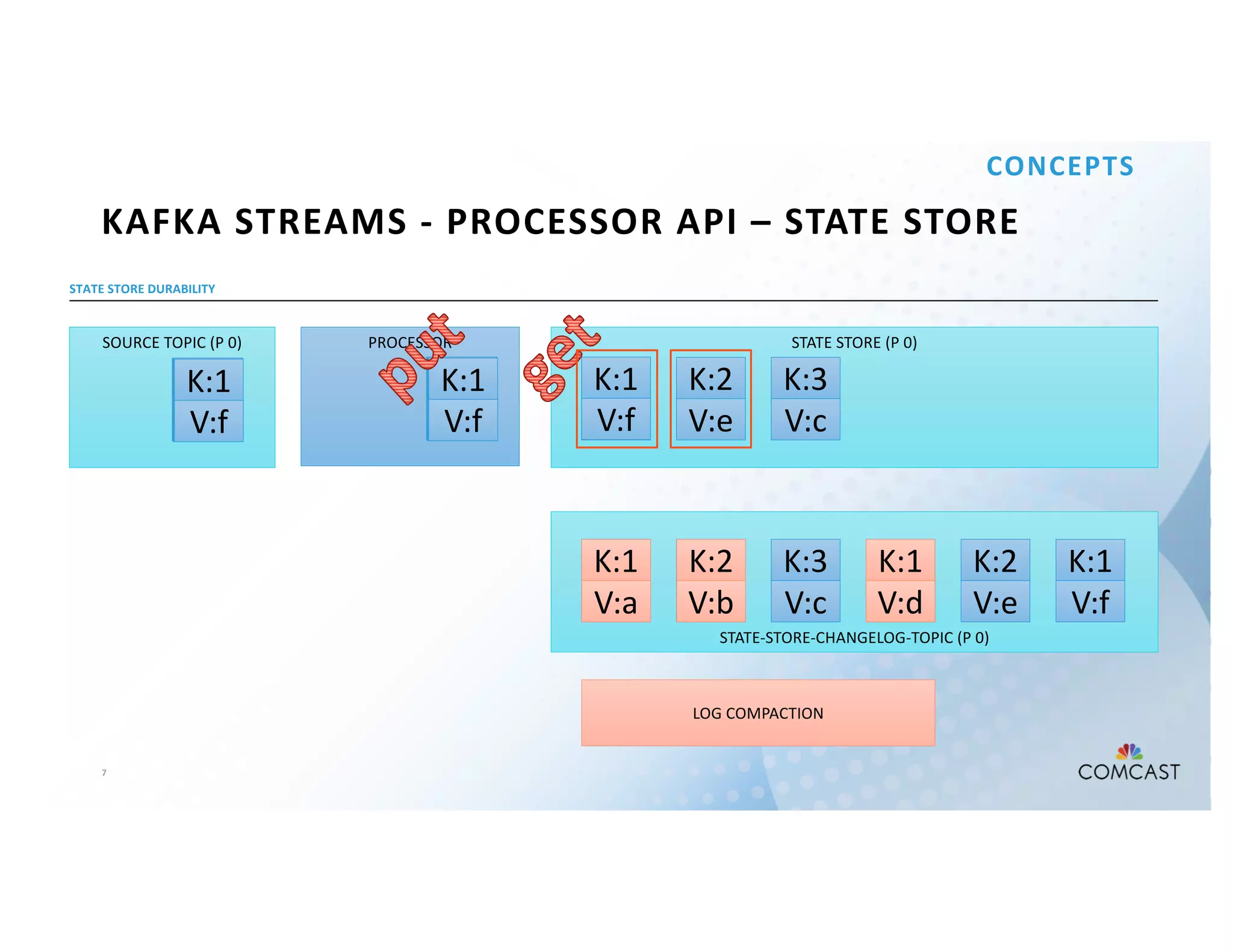

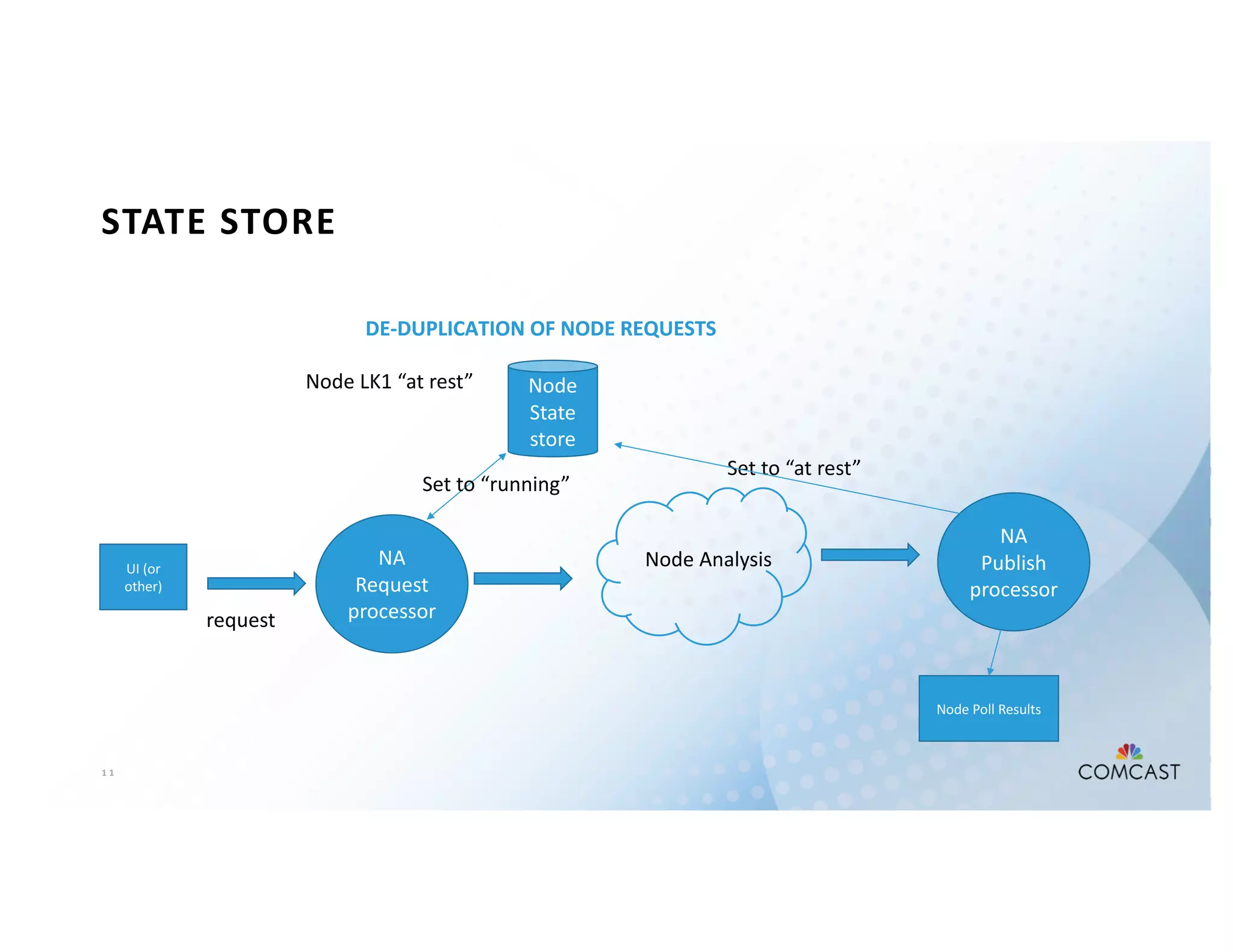

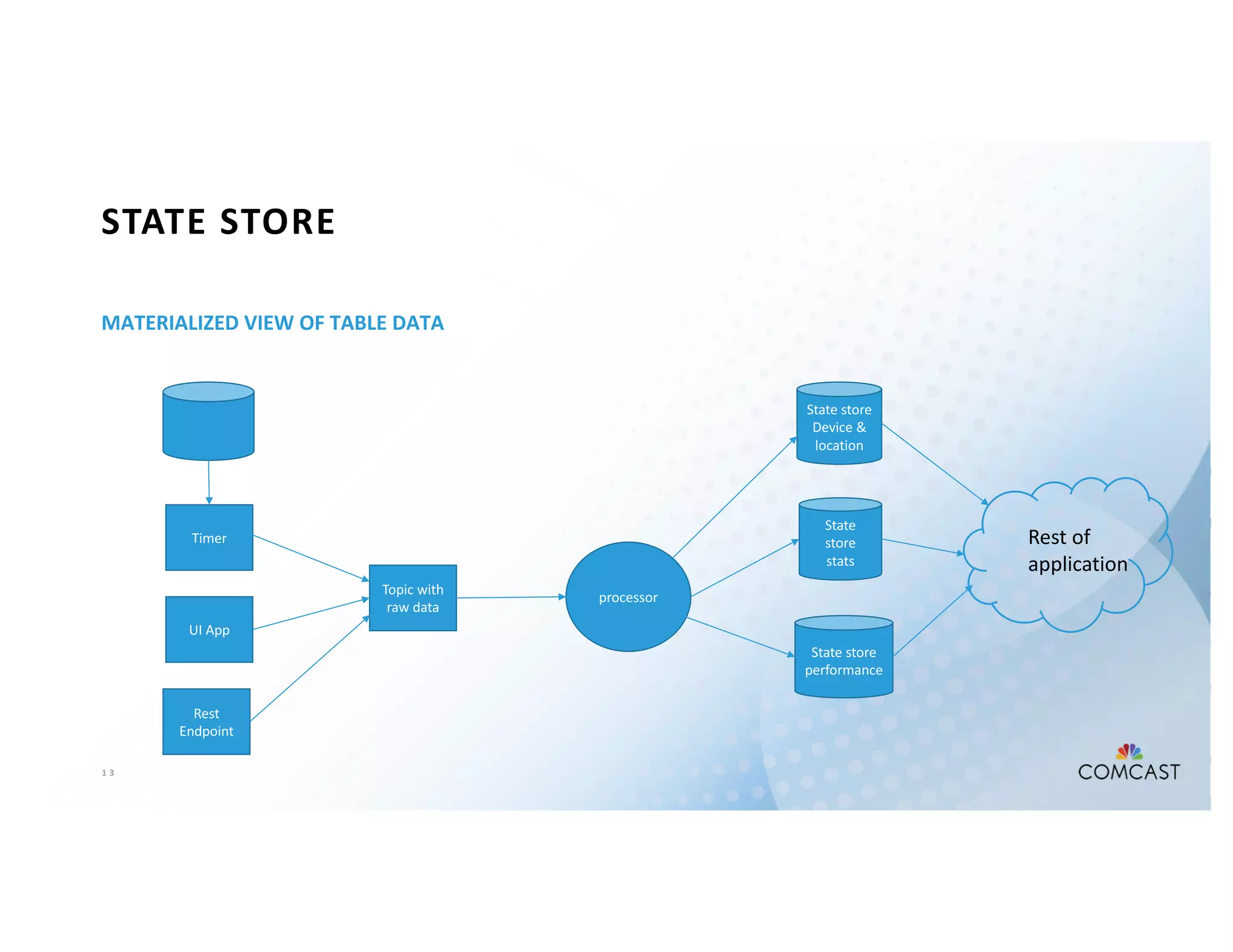

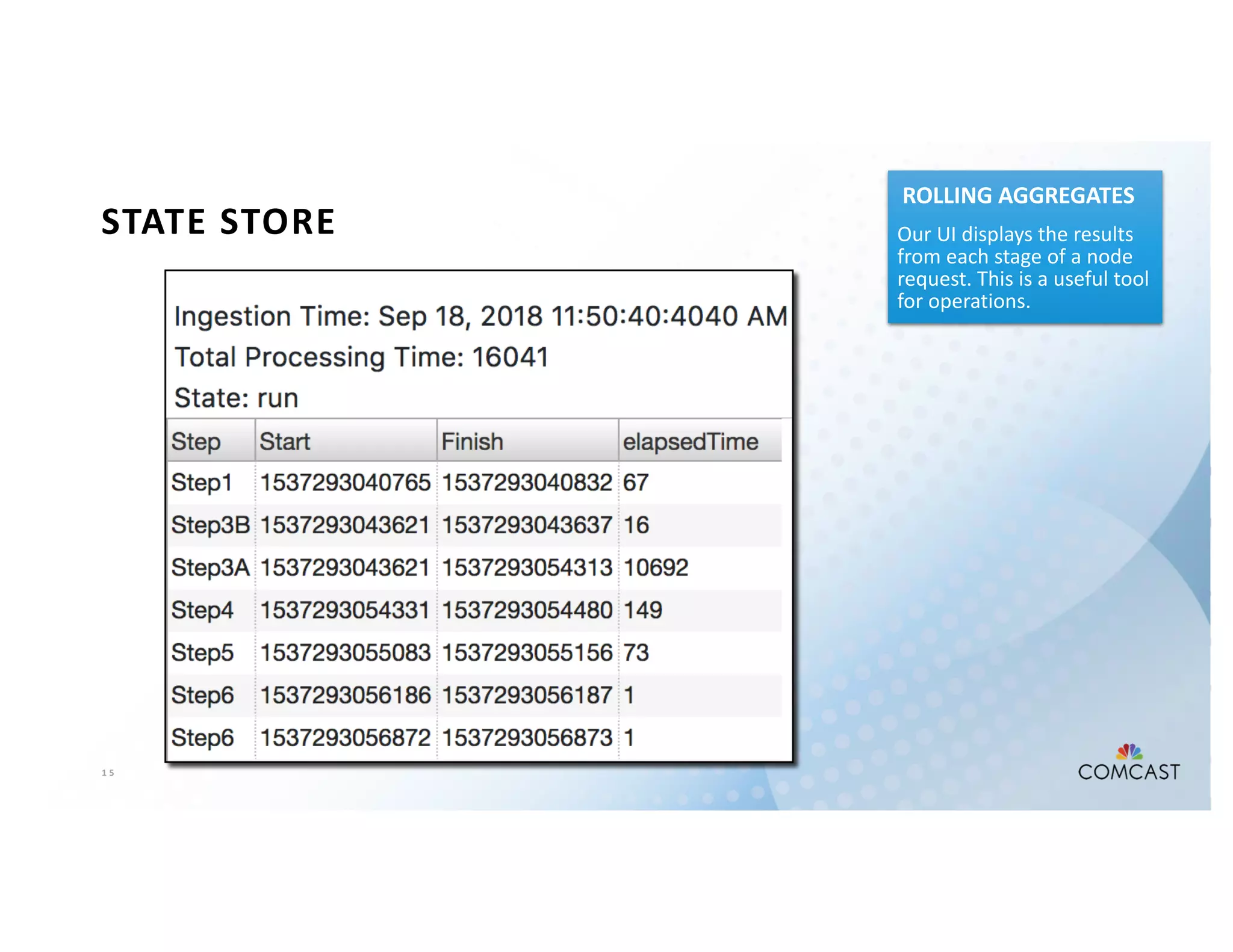

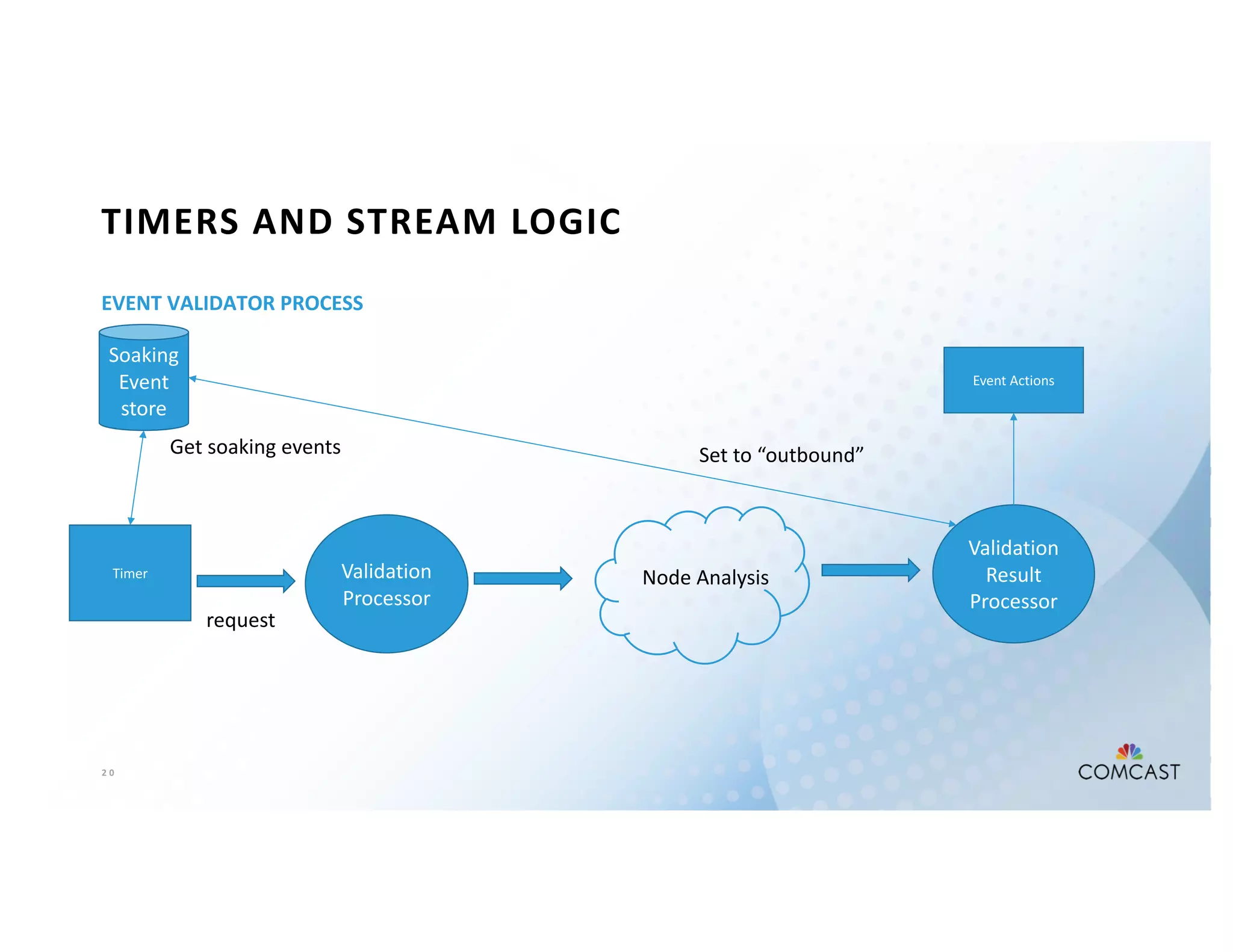

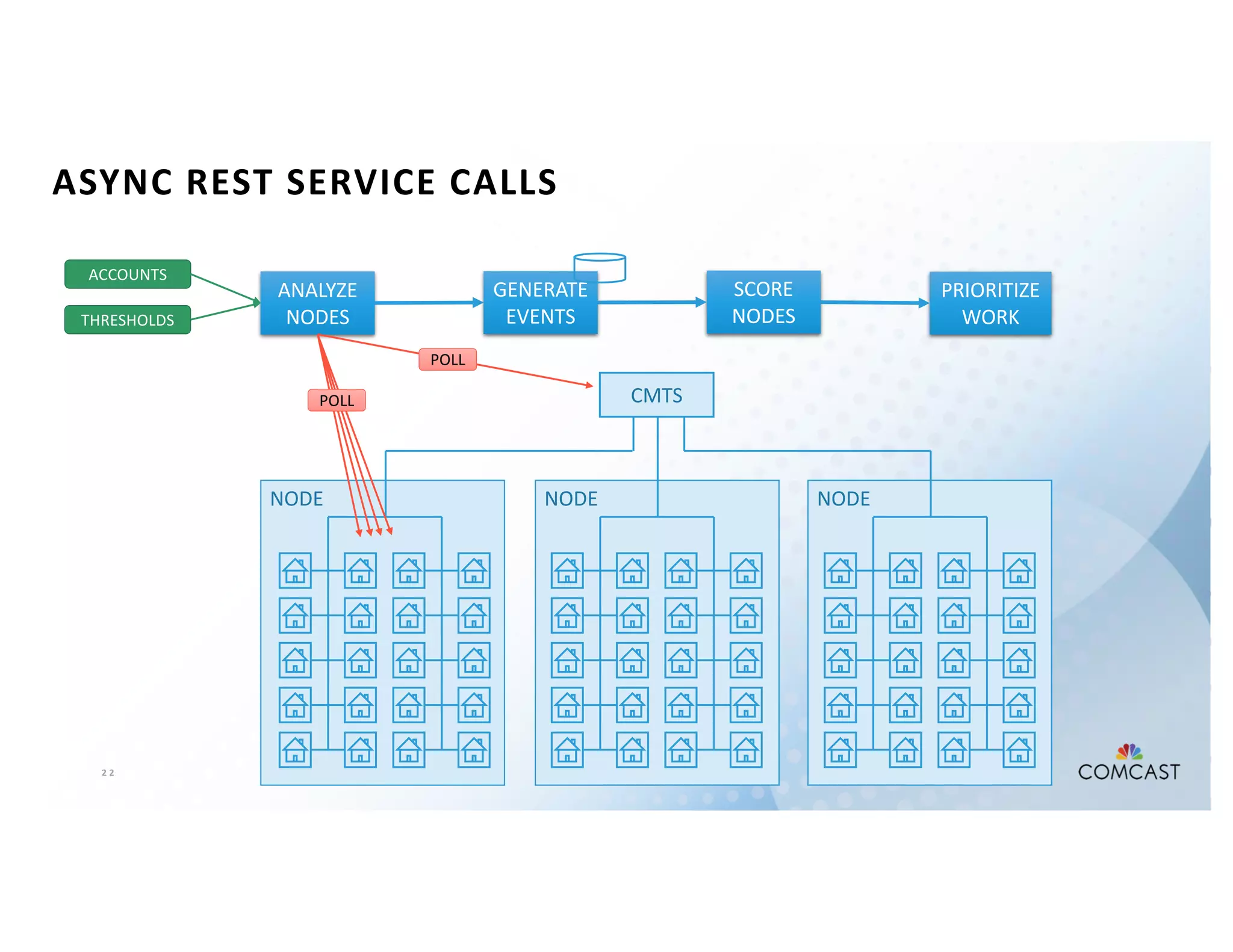

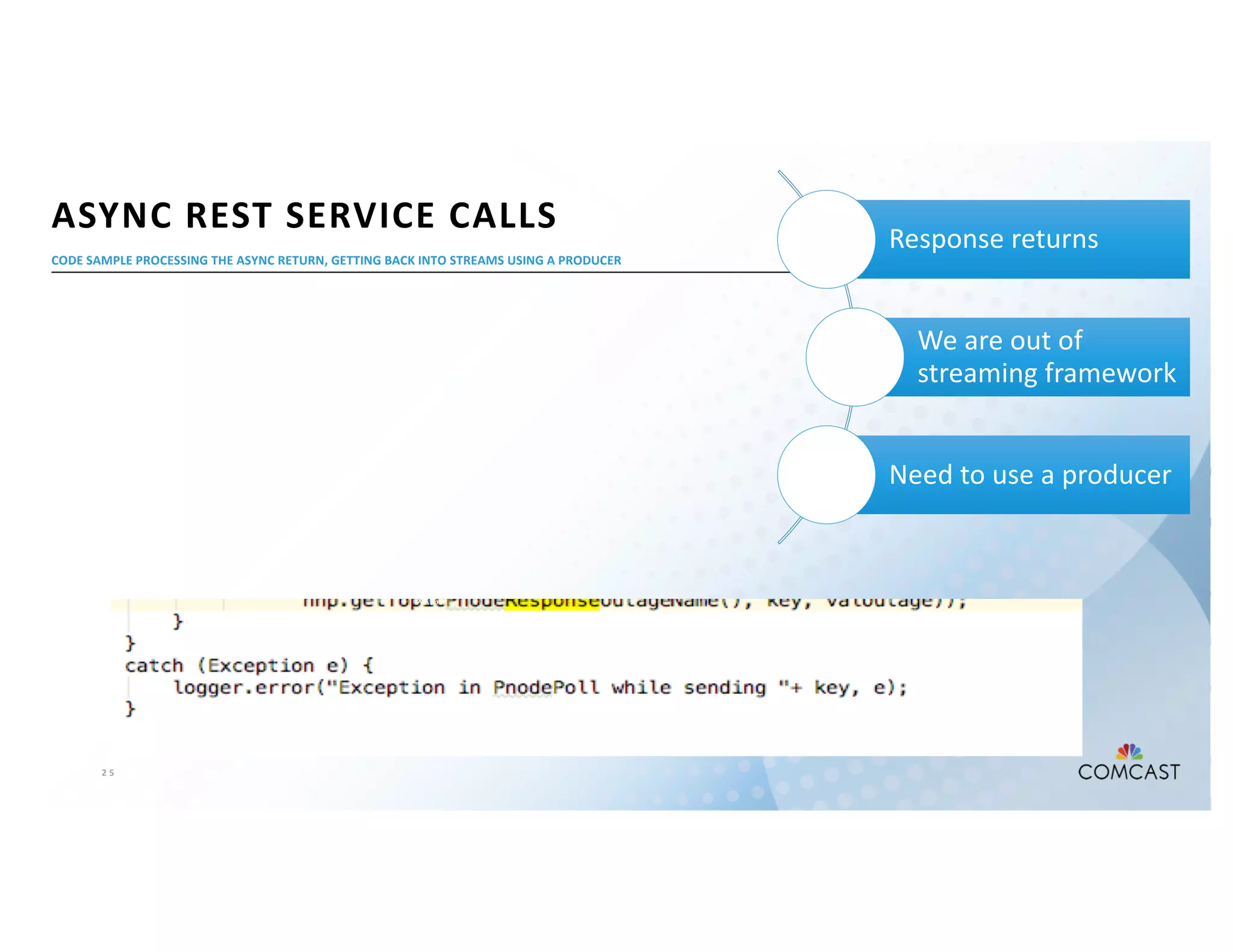

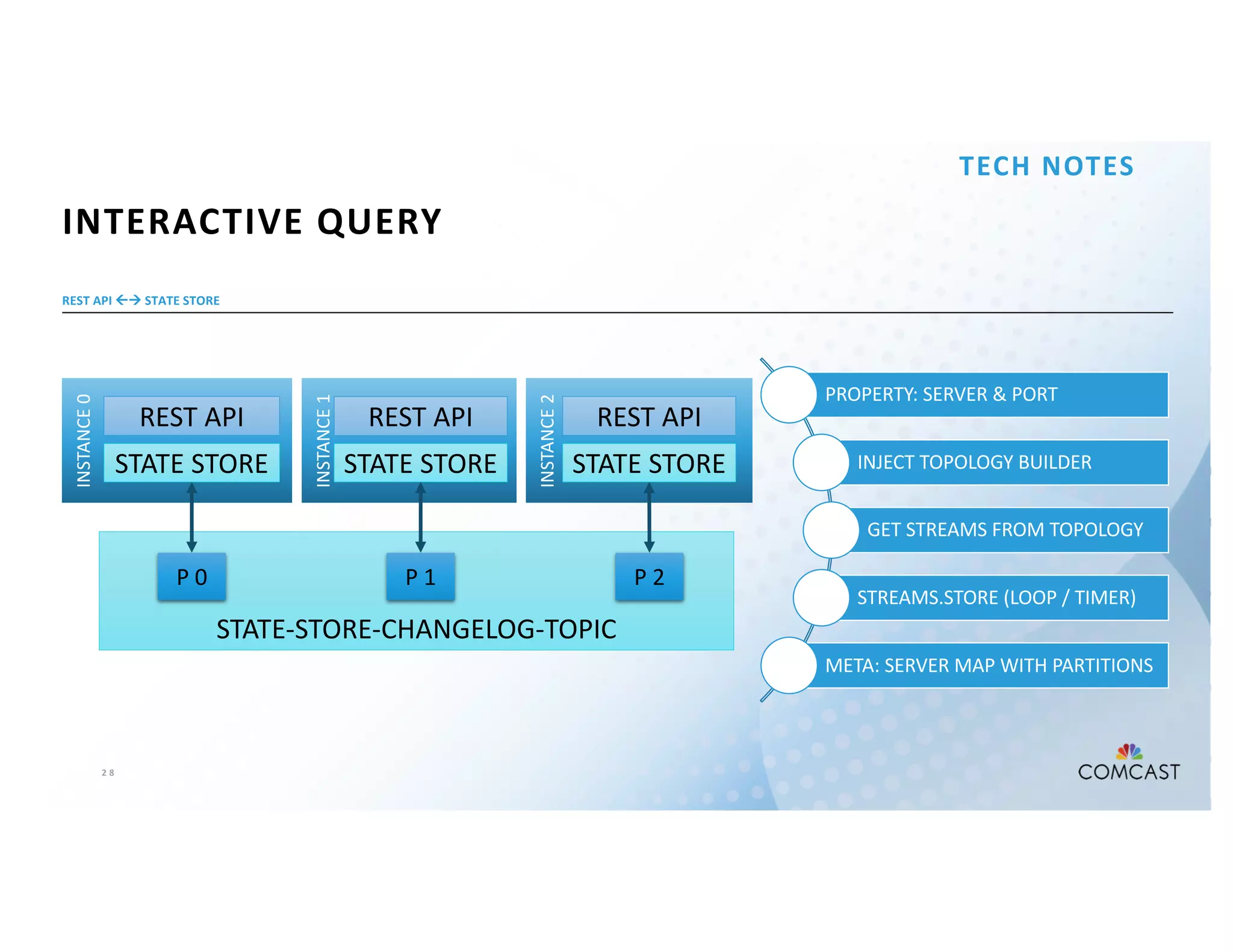

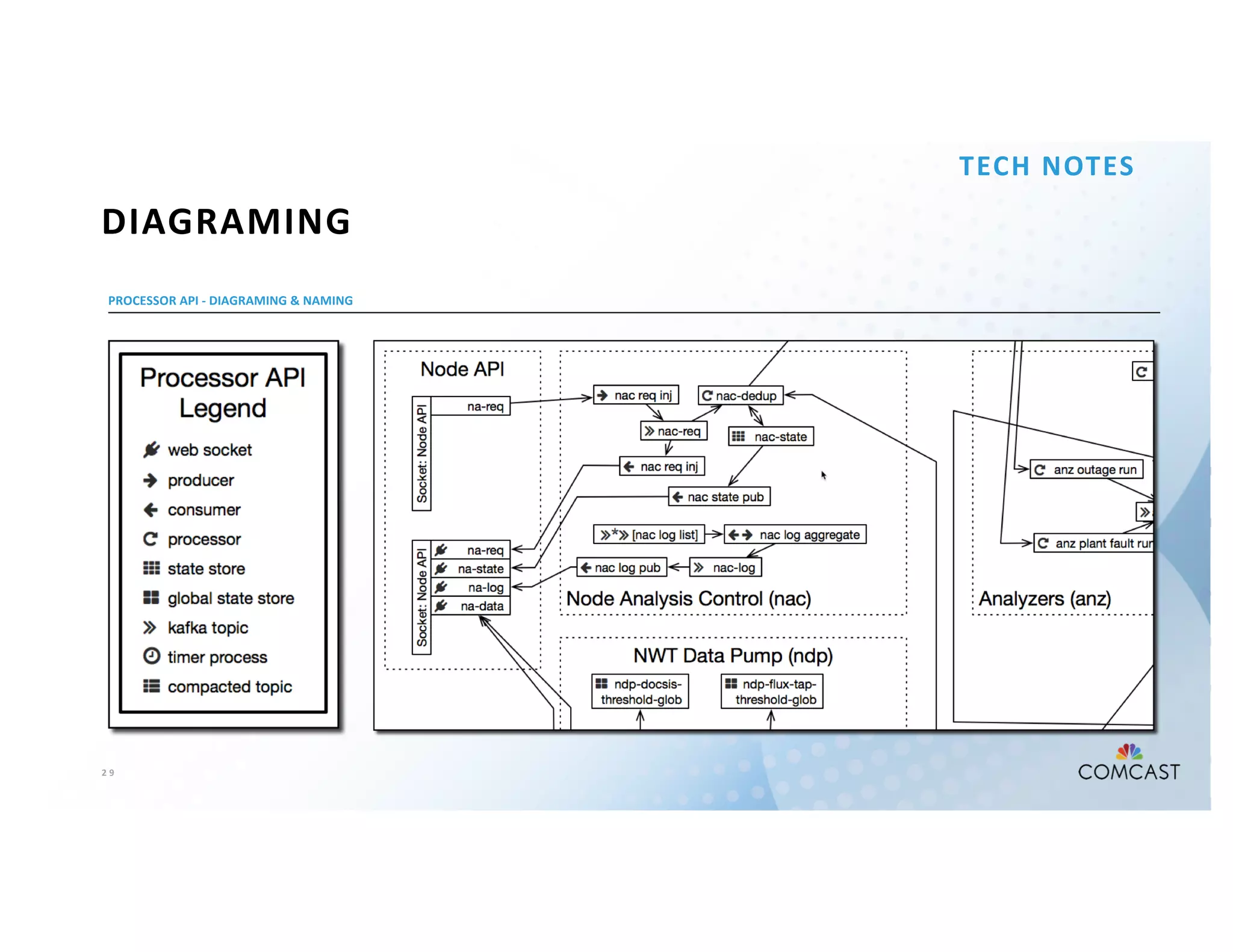

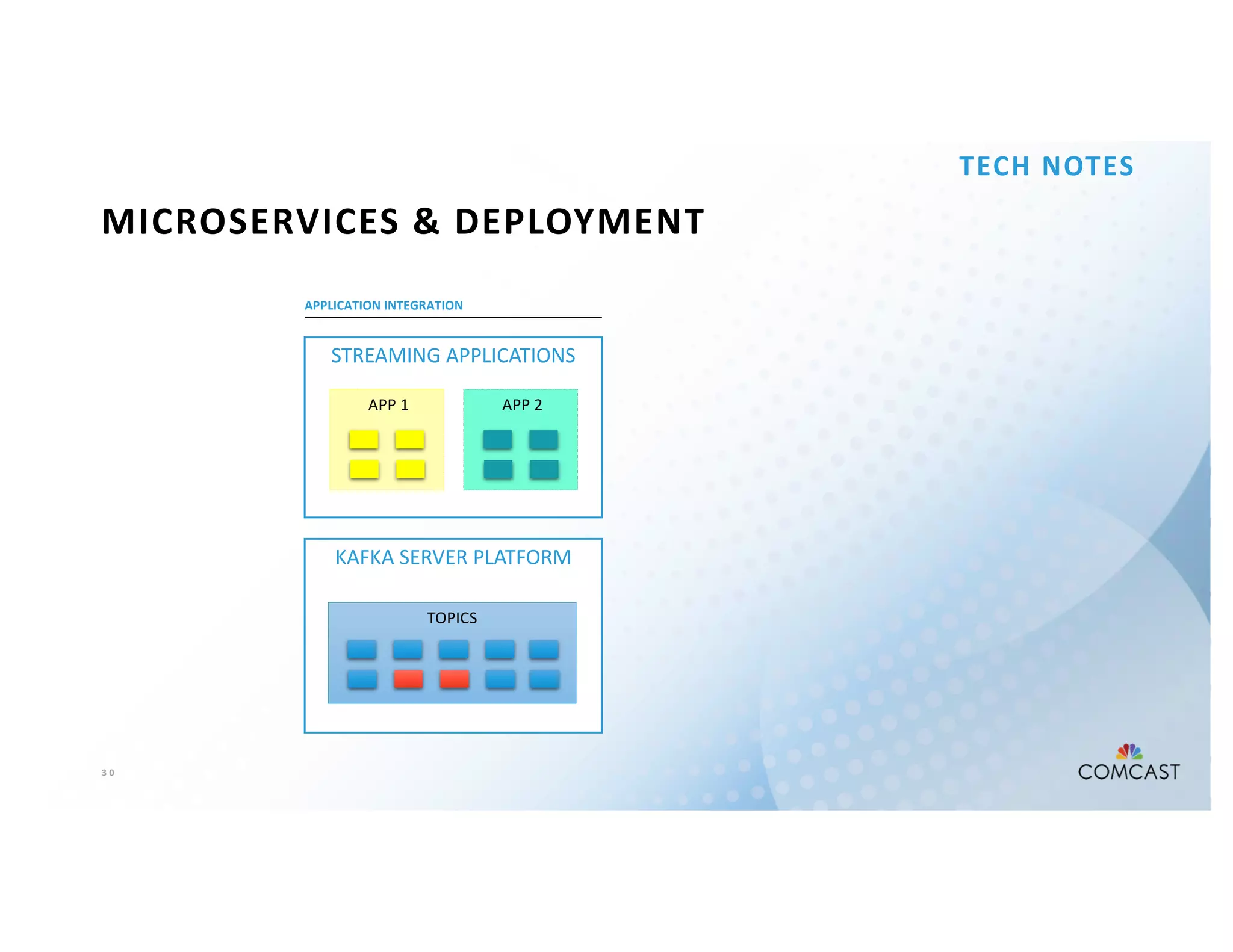

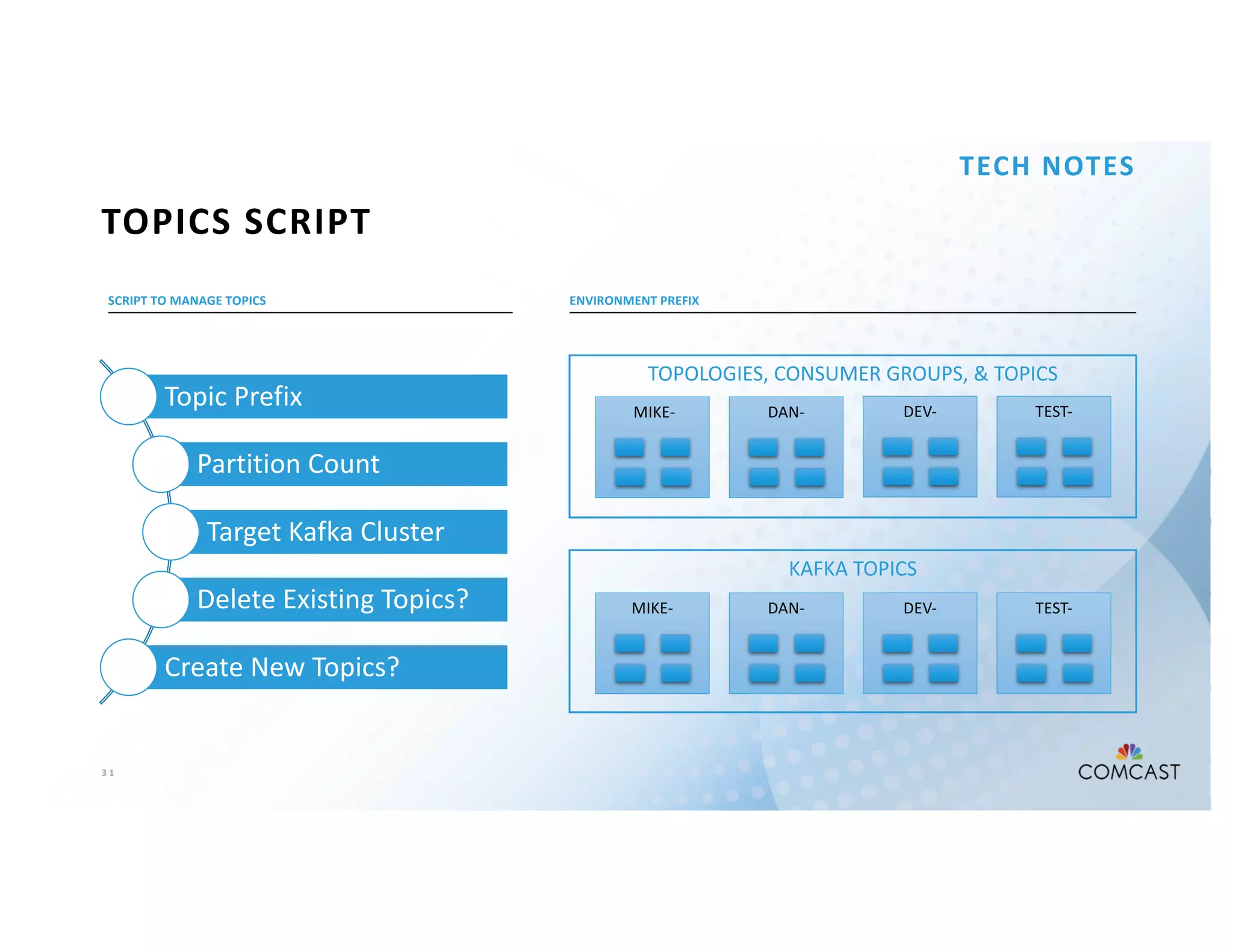

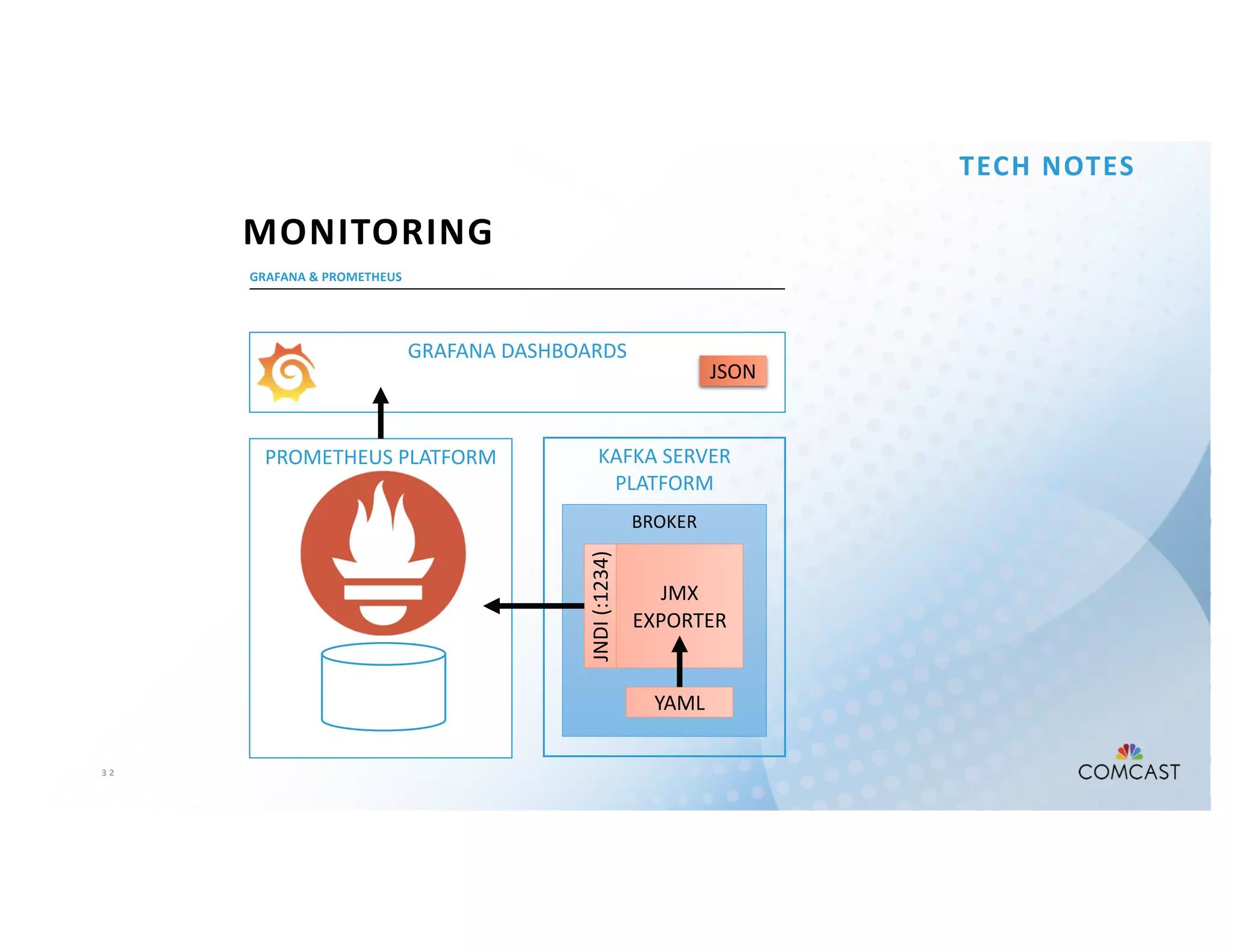

The document outlines how Comcast uses Kafka Streams to monitor external factors that affect its outside plant, such as weather and power. It details the architecture for node analysis, event scoring, and the use of state stores for deduplication and rolling aggregates. It also highlights the advantages of the streaming approach over traditional database-based methods and discusses integration with microservices and monitoring tools.
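The summary mentions state stores used for deduplication and rolling aggregates. The sketch below illustrates those two ideas in plain Python rather than with the Kafka Streams Java API. In Kafka Streams they would be backed by fault-tolerant state stores; here ordinary dicts stand in for them. Every name (`NodeEvent`, `DedupStore`, `RollingScoreStore`, `process`) and every event field is a hypothetical illustration, not Comcast's implementation. The sketch also assumes that events arrive in timestamp order.

```python
from collections import deque
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeEvent:
    """Hypothetical event shape: one scored observation for a plant node."""
    event_id: str
    node_id: str
    timestamp_ms: int
    score: float


class DedupStore:
    """Remembers event IDs for a retention period (stand-in for a state store)."""

    def __init__(self, retention_ms):
        self.retention_ms = retention_ms
        self._seen = {}  # event_id -> timestamp_ms of first sighting

    def is_duplicate(self, event):
        # Evict IDs older than the retention period, relative to this event.
        cutoff = event.timestamp_ms - self.retention_ms
        for eid in [e for e, ts in self._seen.items() if ts < cutoff]:
            del self._seen[eid]
        if event.event_id in self._seen:
            return True
        self._seen[event.event_id] = event.timestamp_ms
        return False


class RollingScoreStore:
    """Per-node sum of scores over the window (t - window_ms, t]."""

    def __init__(self, window_ms):
        self.window_ms = window_ms
        self._events = {}  # node_id -> deque of (timestamp_ms, score)
        self._sums = {}    # node_id -> current rolling sum

    def add(self, event):
        q = self._events.setdefault(event.node_id, deque())
        q.append((event.timestamp_ms, event.score))
        self._sums[event.node_id] = self._sums.get(event.node_id, 0.0) + event.score
        # Assumes in-order arrival: drop scores that slid out of the window.
        cutoff = event.timestamp_ms - self.window_ms
        while q and q[0][0] <= cutoff:
            _, old_score = q.popleft()
            self._sums[event.node_id] -= old_score
        return self._sums[event.node_id]


def process(events, dedup, rolling):
    """Skip duplicate events, then emit (node_id, rolling_score) for each new one."""
    out = []
    for event in events:
        if dedup.is_duplicate(event):
            continue
        out.append((event.node_id, rolling.add(event)))
    return out


if __name__ == "__main__":
    events = [
        NodeEvent("e1", "n1", 0, 1.0),
        NodeEvent("e2", "n1", 1000, 2.0),
        NodeEvent("e2", "n1", 1000, 2.0),  # redelivered duplicate, dropped
        NodeEvent("e3", "n2", 1500, 5.0),
        NodeEvent("e4", "n1", 6000, 4.0),
    ]
    print(process(events, DedupStore(retention_ms=10_000),
                  RollingScoreStore(window_ms=5_000)))
```

Keeping the deduplication store separate from the aggregate store mirrors the split the summary describes. Each store can have its own retention, so deduplication can remember IDs longer than the scoring window lasts.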