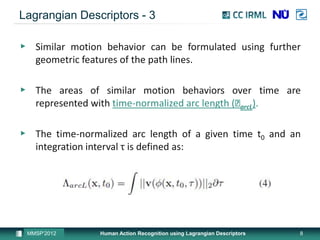

This document presents a method for human action recognition using Lagrangian descriptors. Optical flow fields are treated as unsteady vector fields so that Lagrangian methods can be applied to them. Two Lagrangian descriptors, the finite-time Lyapunov exponent (FTLE) and the time-normalized arc length, are extracted from video frames to capture motion boundaries and areas of coherent flow. A histogram of oriented gradients (HoG) is computed for each descriptor, and the descriptors are fused before training an SVM classifier. The method is evaluated on the Weizmann and KTH datasets, demonstrating its ability to recognize basic actions while maintaining privacy by working with optical flow fields rather than raw images. Future work will focus on local representations and recognizing more complex actions.
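As a rough illustration of the FTLE descriptor named above, the sketch below uses the standard textbook definition: the FTLE at a point is (1/|τ|) · ln √λmax(C), where C = JᵀJ and J is the Jacobian of the flow map. It is not taken from the authors' implementation. The function names, the finite-difference step `h`, and the closed-form saddle field standing in for a flow map integrated from optical flow are all assumptions made for this example.

```python
import math


def flow_map_saddle(x, y, tau):
    """Closed-form flow map of the steady saddle field v(x, y) = (x, -y).

    Hypothetical stand-in for a flow map obtained by integrating optical flow.
    """
    return x * math.exp(tau), y * math.exp(-tau)


def ftle(flow_map, x, y, tau, h=1e-4):
    """FTLE at (x, y): (1 / |tau|) * ln(sqrt(lambda_max(C))), with C = J^T J."""
    # Central differences approximate the Jacobian J of the flow map.
    xr, yr = flow_map(x + h, y, tau)
    xl, yl = flow_map(x - h, y, tau)
    xu, yu = flow_map(x, y + h, tau)
    xd, yd = flow_map(x, y - h, tau)
    j11, j12 = (xr - xl) / (2 * h), (xu - xd) / (2 * h)
    j21, j22 = (yr - yl) / (2 * h), (yu - yd) / (2 * h)
    # Right Cauchy-Green tensor C = J^T J (symmetric 2x2).
    c11 = j11 * j11 + j21 * j21
    c12 = j11 * j12 + j21 * j22
    c22 = j12 * j12 + j22 * j22
    # Largest eigenvalue of a symmetric 2x2 matrix in closed form.
    mean = 0.5 * (c11 + c22)
    diff = 0.5 * (c11 - c22)
    lam_max = mean + math.sqrt(diff * diff + c12 * c12)
    return math.log(math.sqrt(lam_max)) / abs(tau)


# Neighbouring points in the saddle field separate at rate e^tau.
value = ftle(flow_map_saddle, 0.3, -0.2, tau=2.0)
print(value)
```

Evaluating `ftle` on every pixel of a frame would give a scalar field whose ridges mark strongly separating motion, which is the kind of image a HoG could then be computed on.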

![Lagrangian Descriptors - 1 (MMSP’2012: Human Action Recognition using Lagrangian Descriptors, slide 6)](https://image.slidesharecdn.com/mmsp2012-121011092522-phpapp02/85/Human-Action-Recognition-using-Lagrangian-Descriptors-6-320.jpg)

- The flow map describes the mapping of an initial position to its position after a predefined integration time τ, starting at t0.
- Combining all positions of one specific point within the time interval [t0; t0 + τ] creates a polynomial curve called the path line.
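The flow map and path line described on this slide can be sketched numerically as follows. This is a minimal example under stated assumptions, not the authors' code: `velocity` is a hypothetical analytic field standing in for optical flow sampled at (x, y, t), the fourth-order Runge-Kutta integrator and the step count are choices made for the example, and the time-normalized arc length is assumed here to be the path line length divided by τ.

```python
import math


def velocity(x, y, t):
    """Hypothetical unsteady field: a rotation whose angular speed grows with t.

    In the slide's setting this would be the optical flow sampled at (x, y, t).
    """
    omega = 1.0 + 0.5 * t
    return -omega * y, omega * x


def path_line(x0, y0, t0, tau, steps=200):
    """Positions of one point over [t0, t0 + tau], integrated with RK4."""
    dt = tau / steps
    x, y, t = x0, y0, t0
    points = [(x, y)]
    for _ in range(steps):
        k1x, k1y = velocity(x, y, t)
        k2x, k2y = velocity(x + 0.5 * dt * k1x, y + 0.5 * dt * k1y, t + 0.5 * dt)
        k3x, k3y = velocity(x + 0.5 * dt * k2x, y + 0.5 * dt * k2y, t + 0.5 * dt)
        k4x, k4y = velocity(x + dt * k3x, y + dt * k3y, t + dt)
        x += dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6.0
        y += dt * (k1y + 2 * k2y + 2 * k3y + k4y) / 6.0
        t += dt
        points.append((x, y))
    return points


def flow_map(x0, y0, t0, tau):
    """End point of the path line: the position after integration time tau."""
    return path_line(x0, y0, t0, tau)[-1]


def normalized_arc_length(points, tau):
    """Polyline length of the path line divided by tau (assumed definition)."""
    length = sum(
        math.hypot(bx - ax, by - ay)
        for (ax, ay), (bx, by) in zip(points, points[1:])
    )
    return length / abs(tau)


points = path_line(1.0, 0.0, t0=0.0, tau=1.0)
end_x, end_y = flow_map(1.0, 0.0, t0=0.0, tau=1.0)
arc = normalized_arc_length(points, tau=1.0)
print(end_x, end_y, arc)
```

With real data, `velocity` would be replaced by interpolation into the sequence of optical flow fields, since the field changes from frame to frame.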