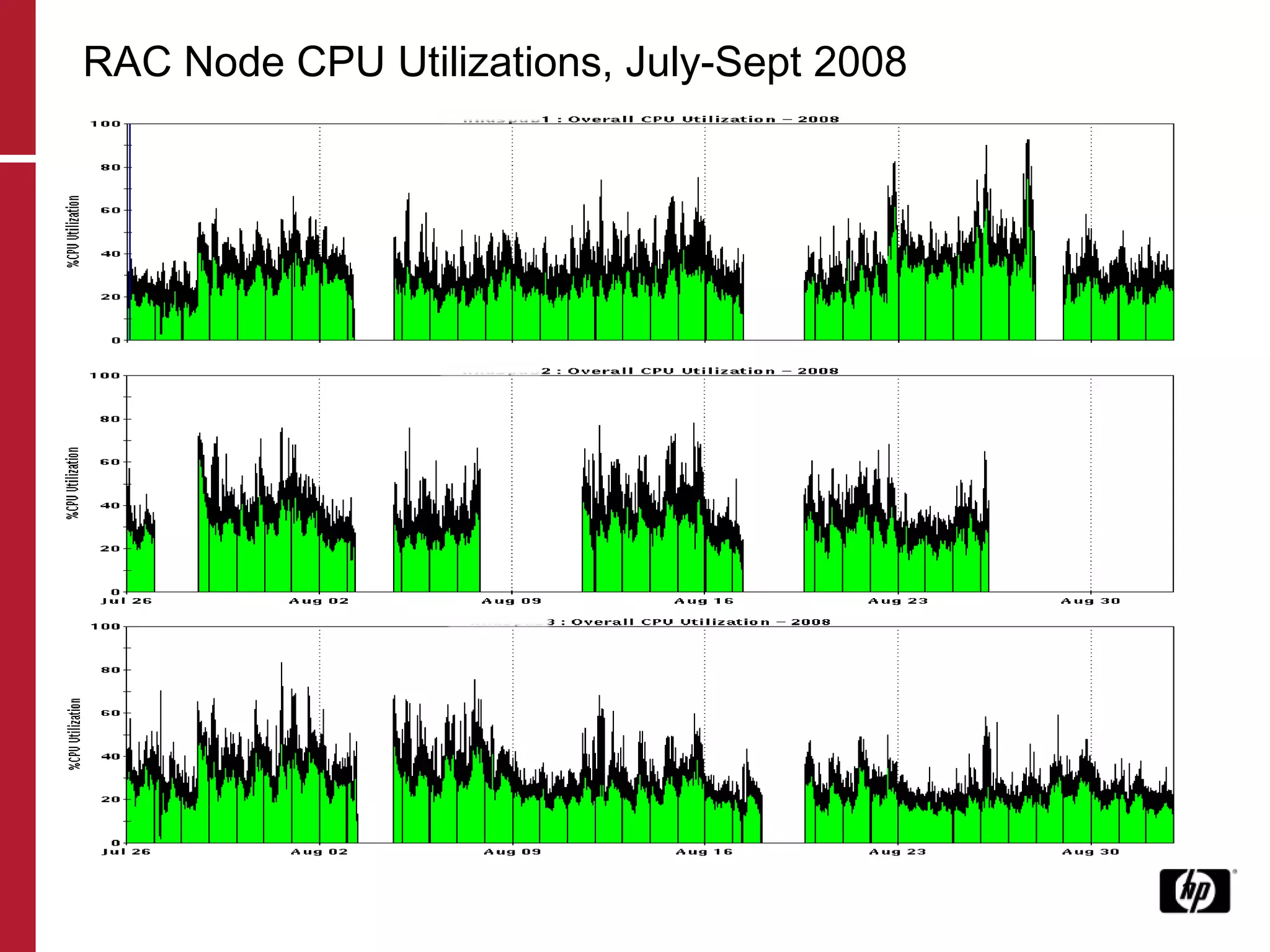

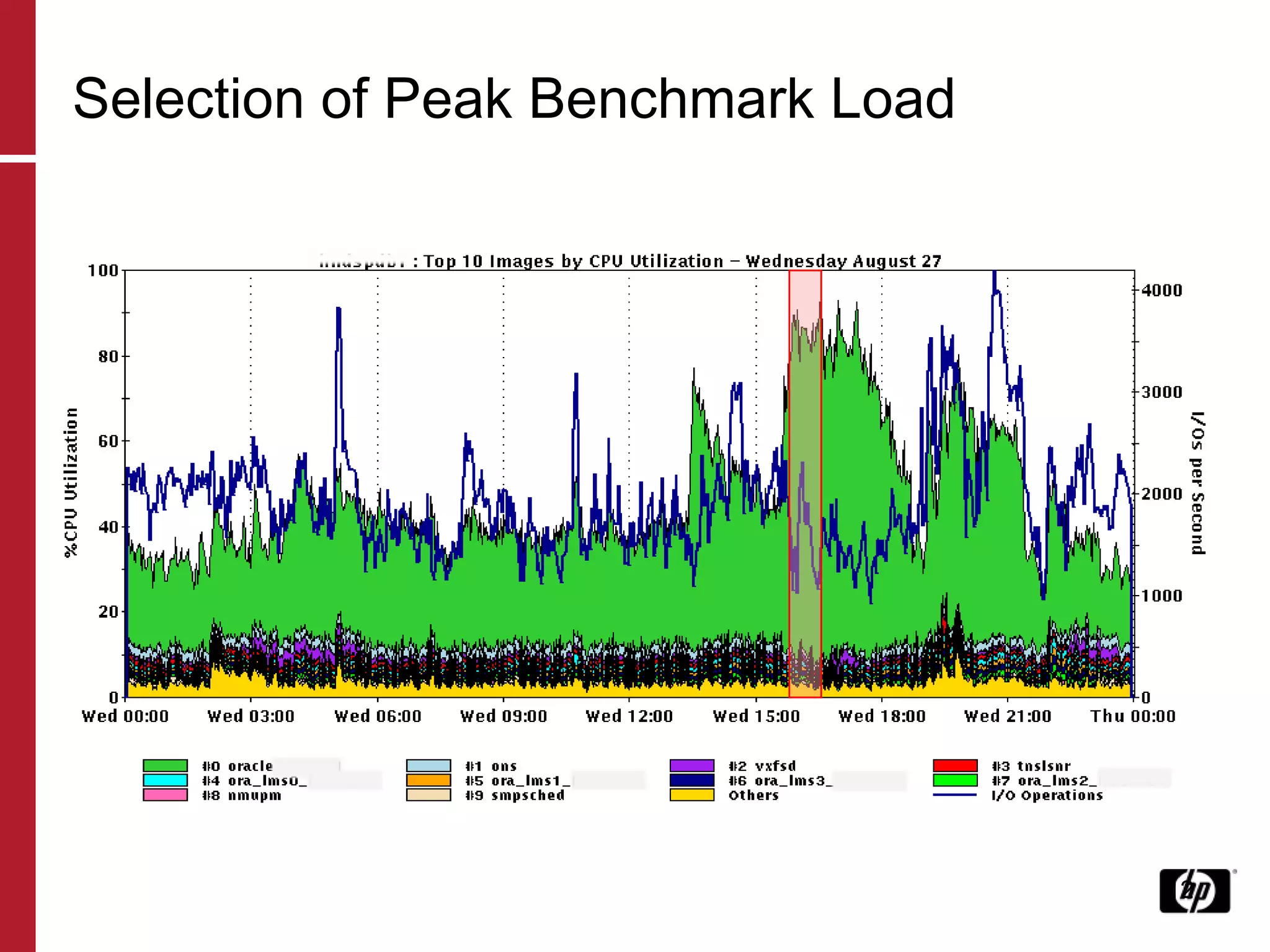

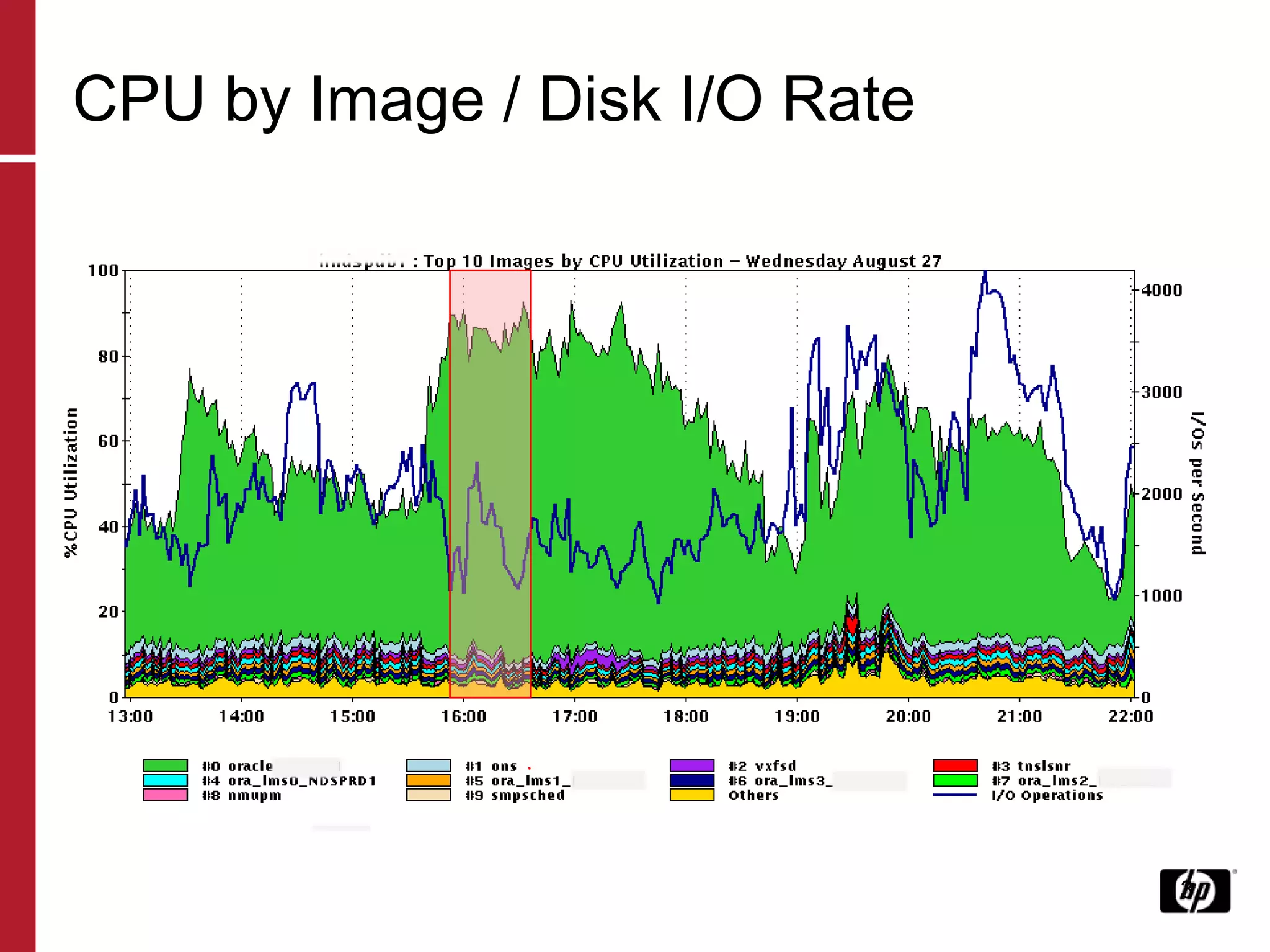

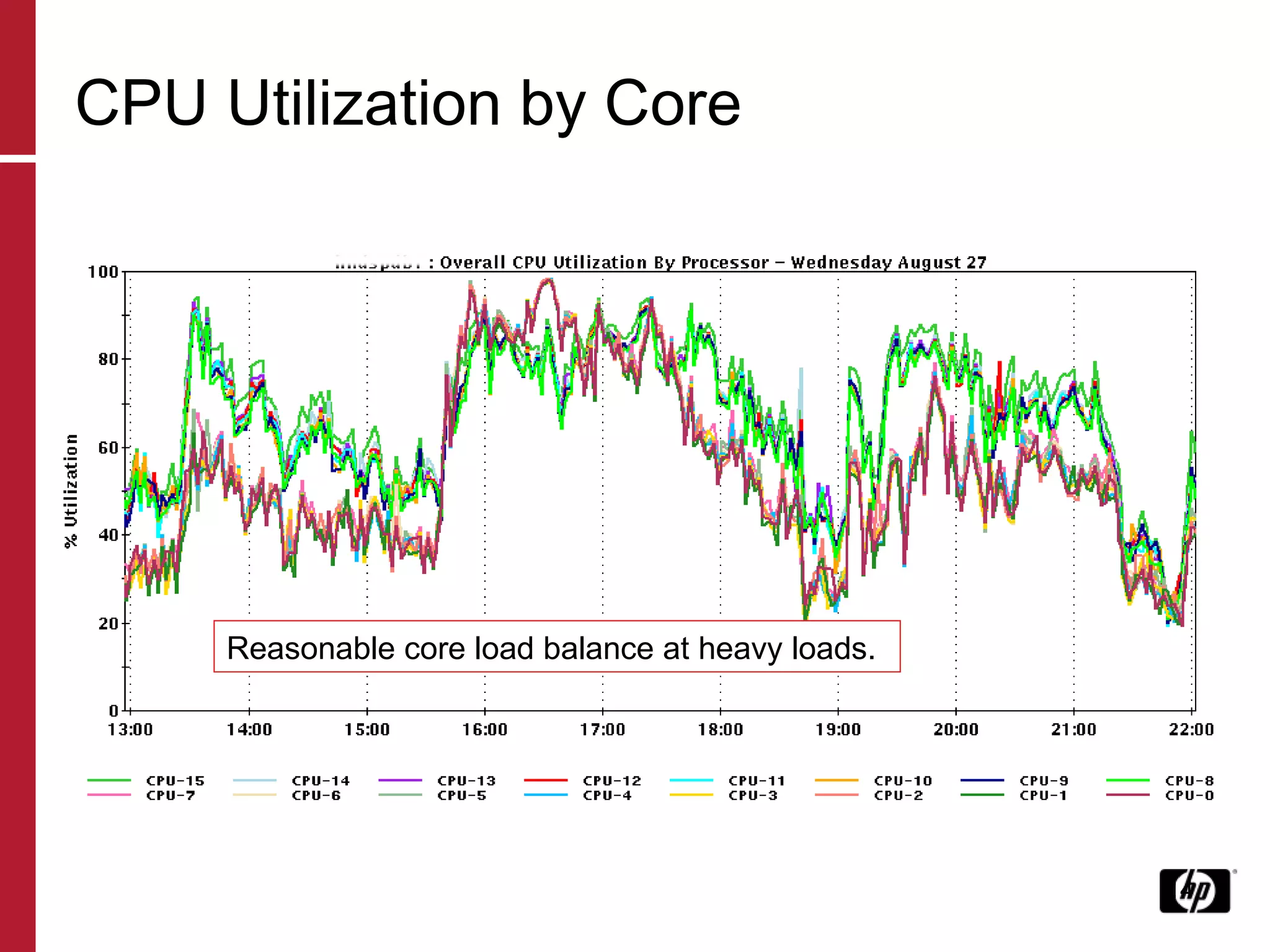

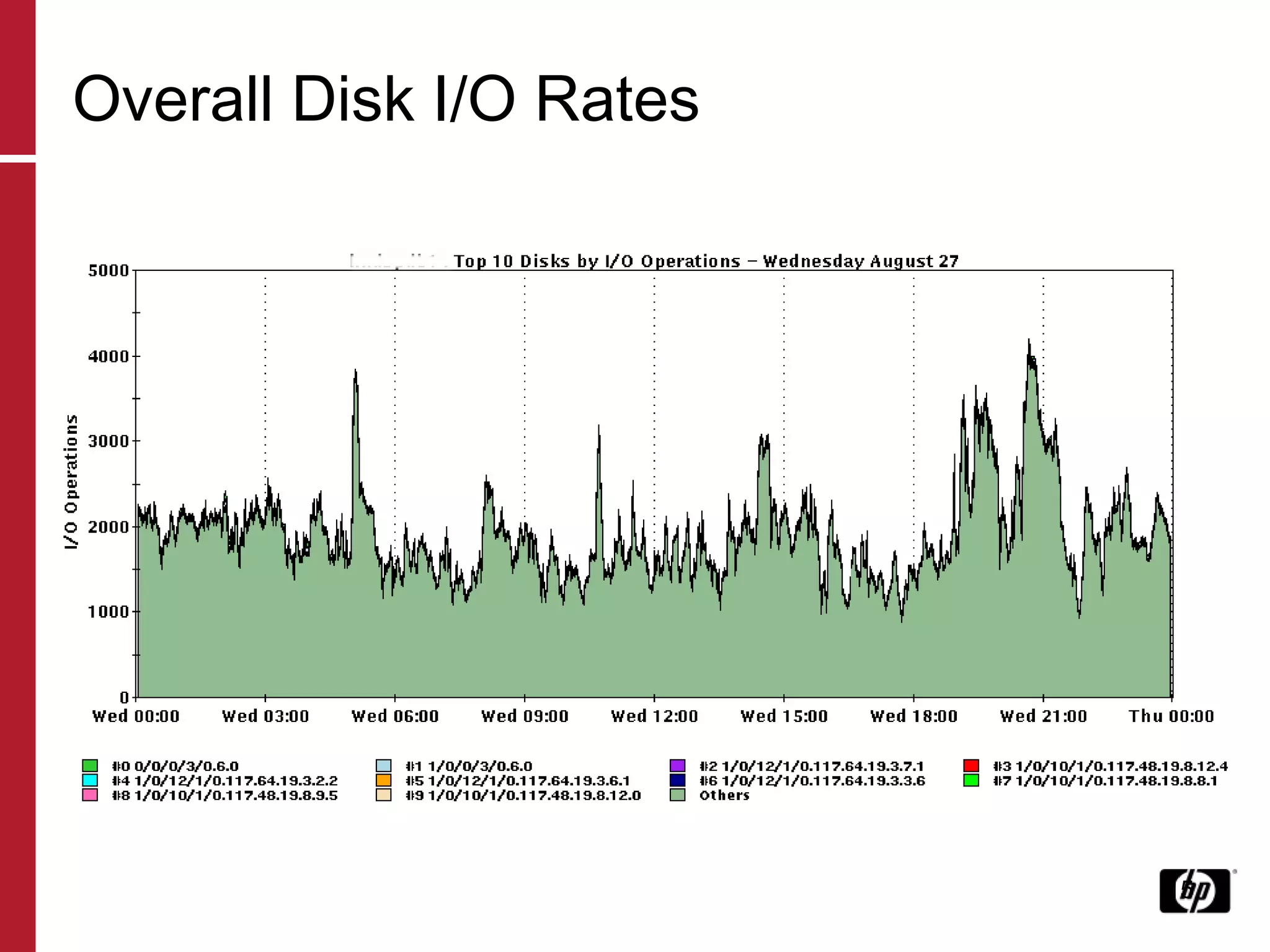

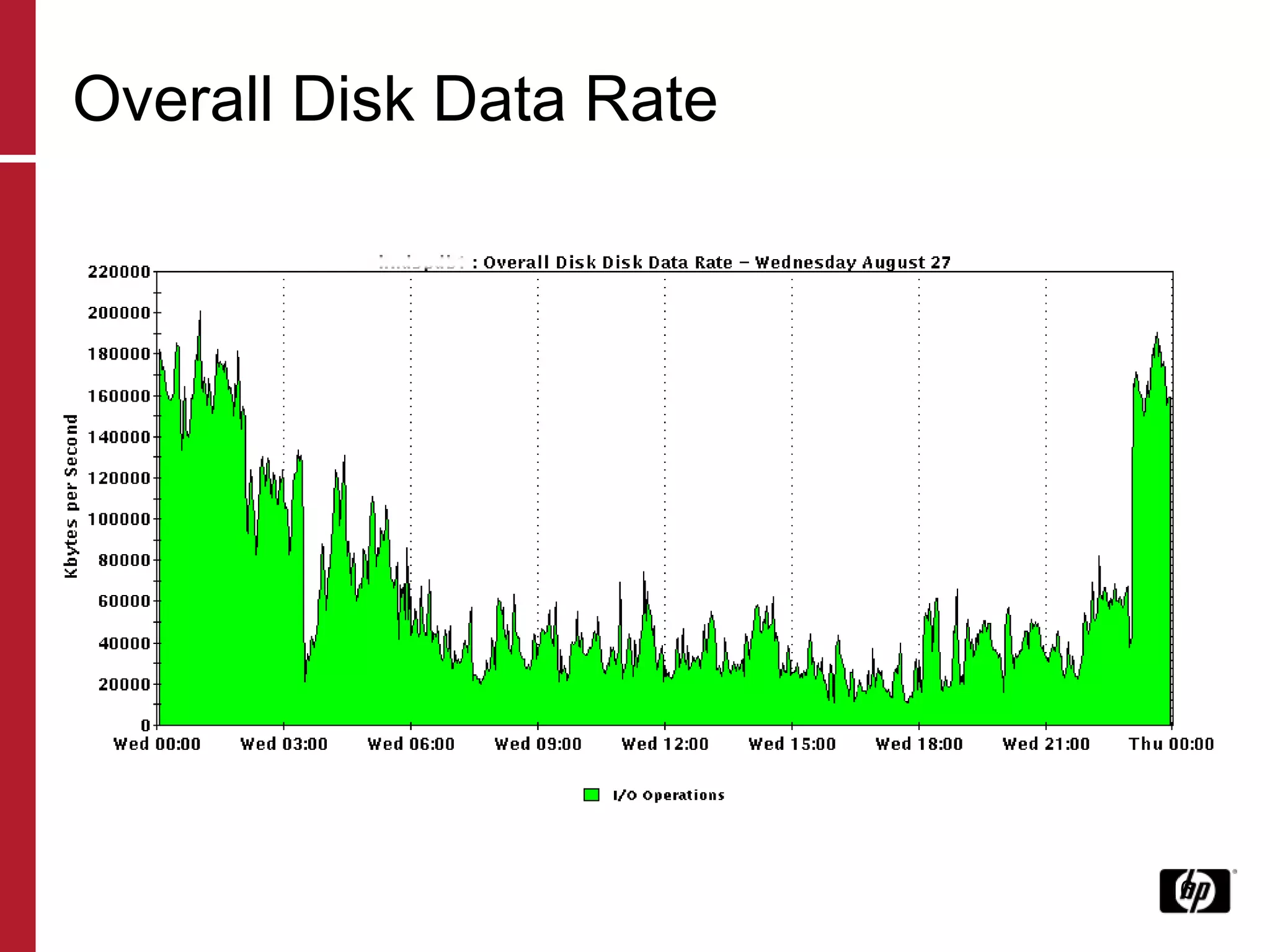

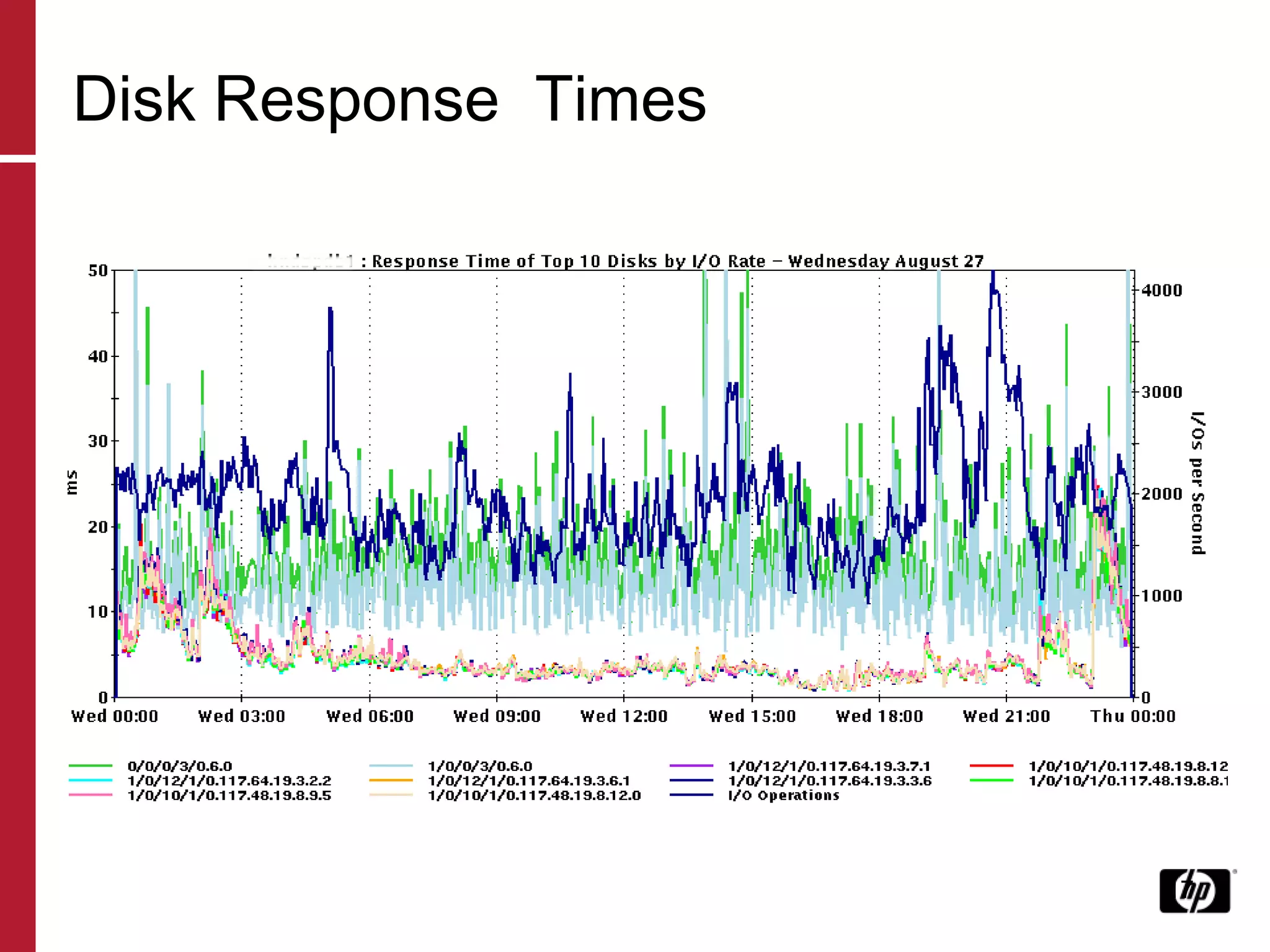

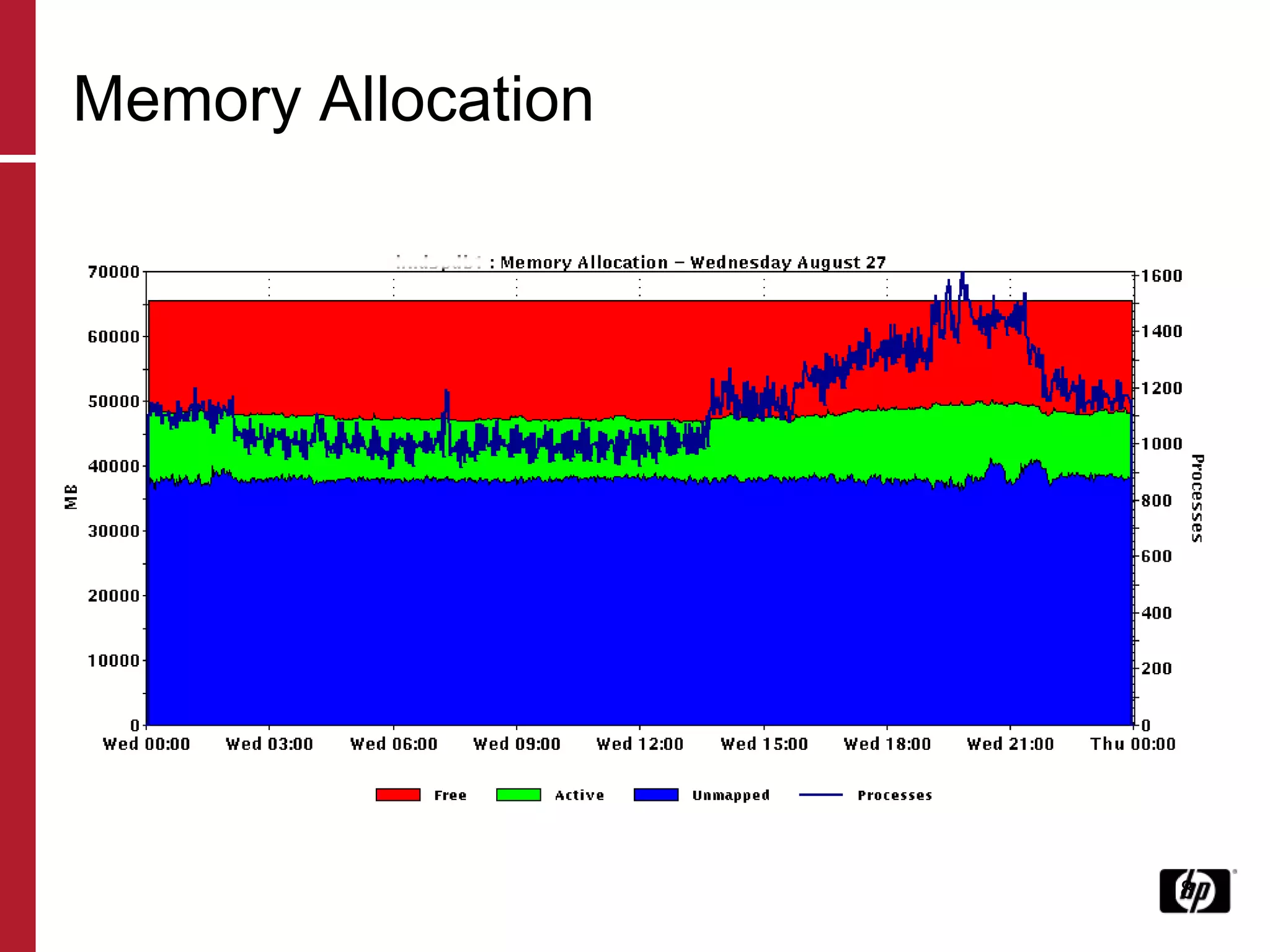

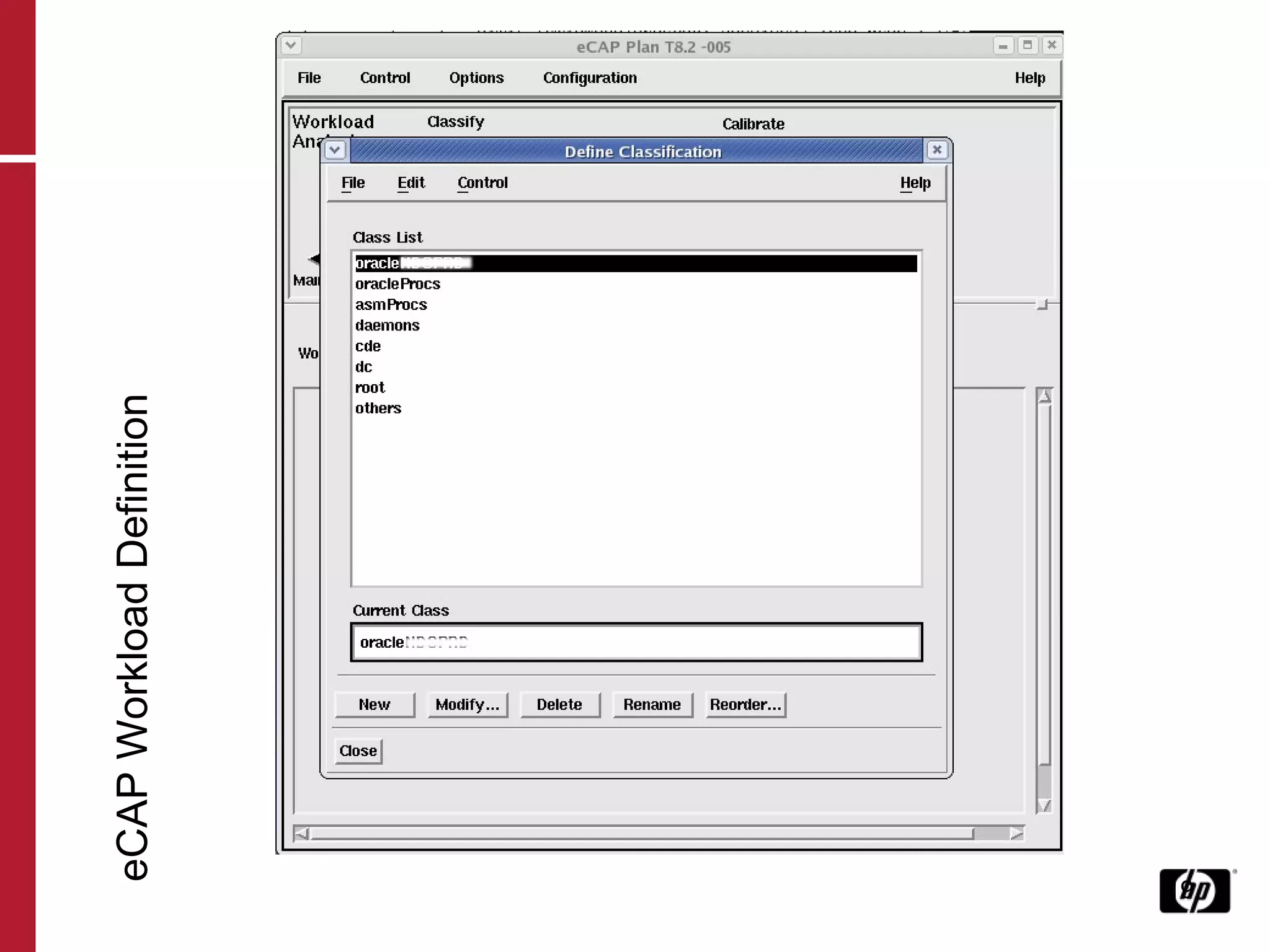

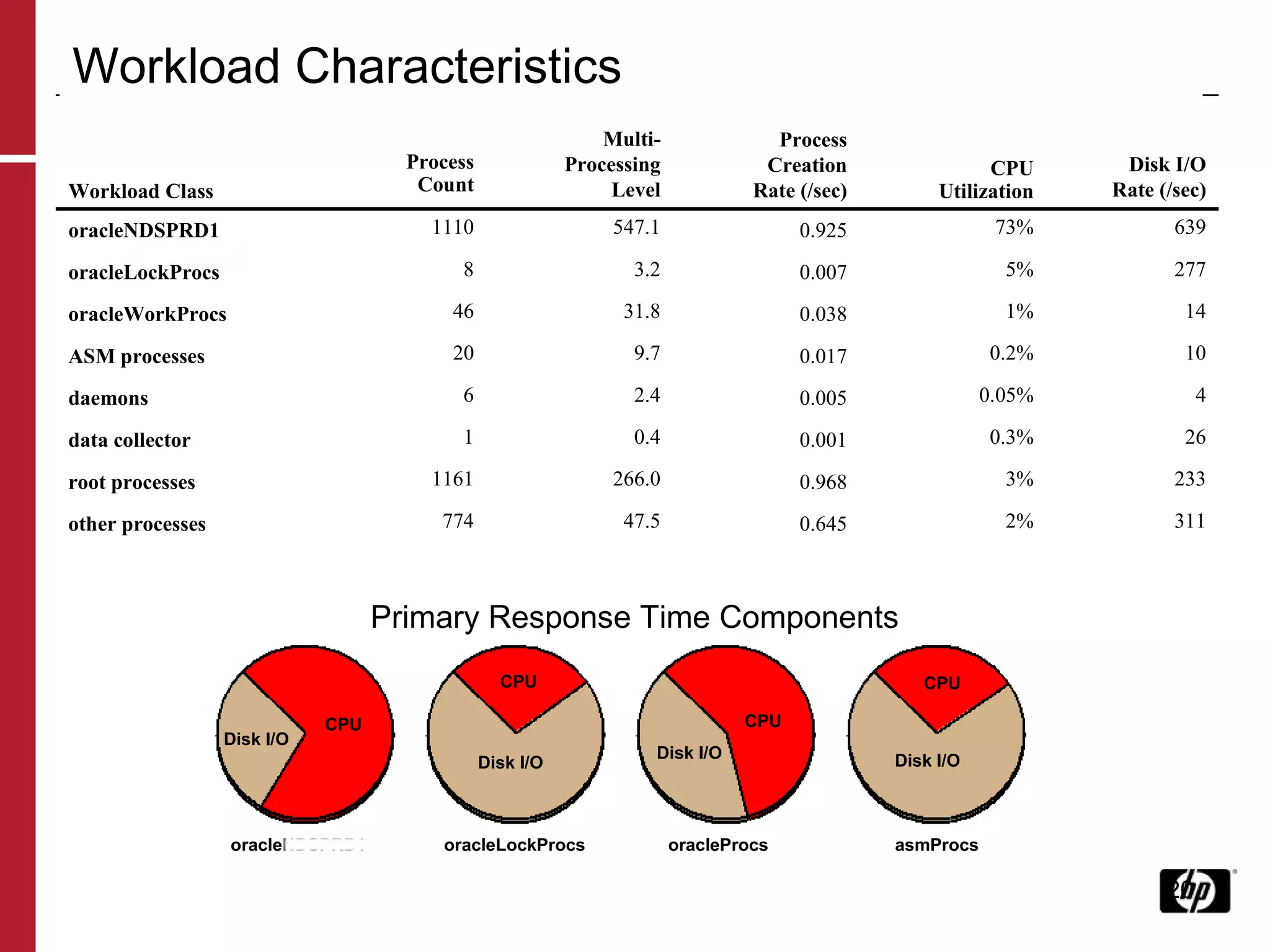

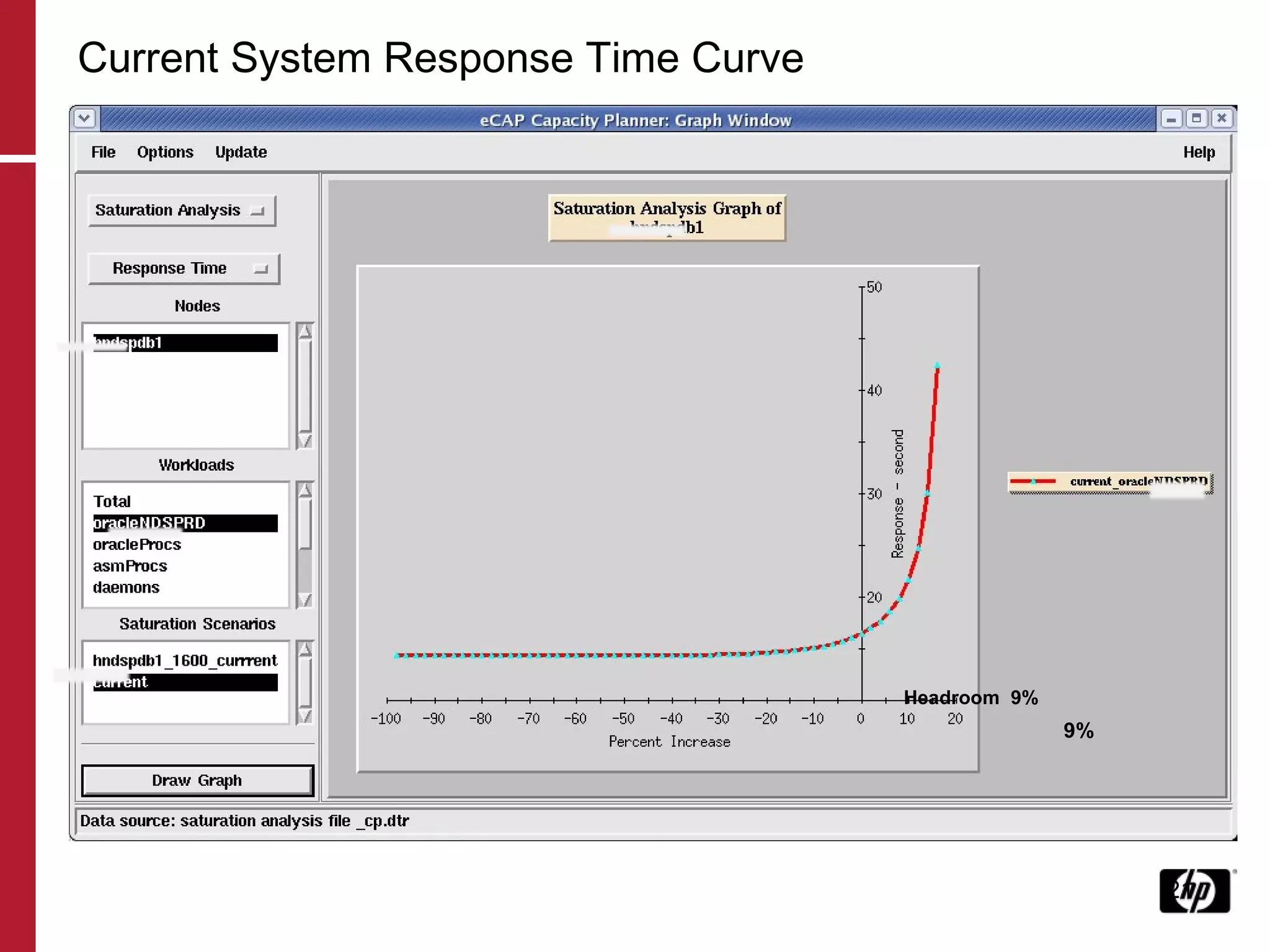

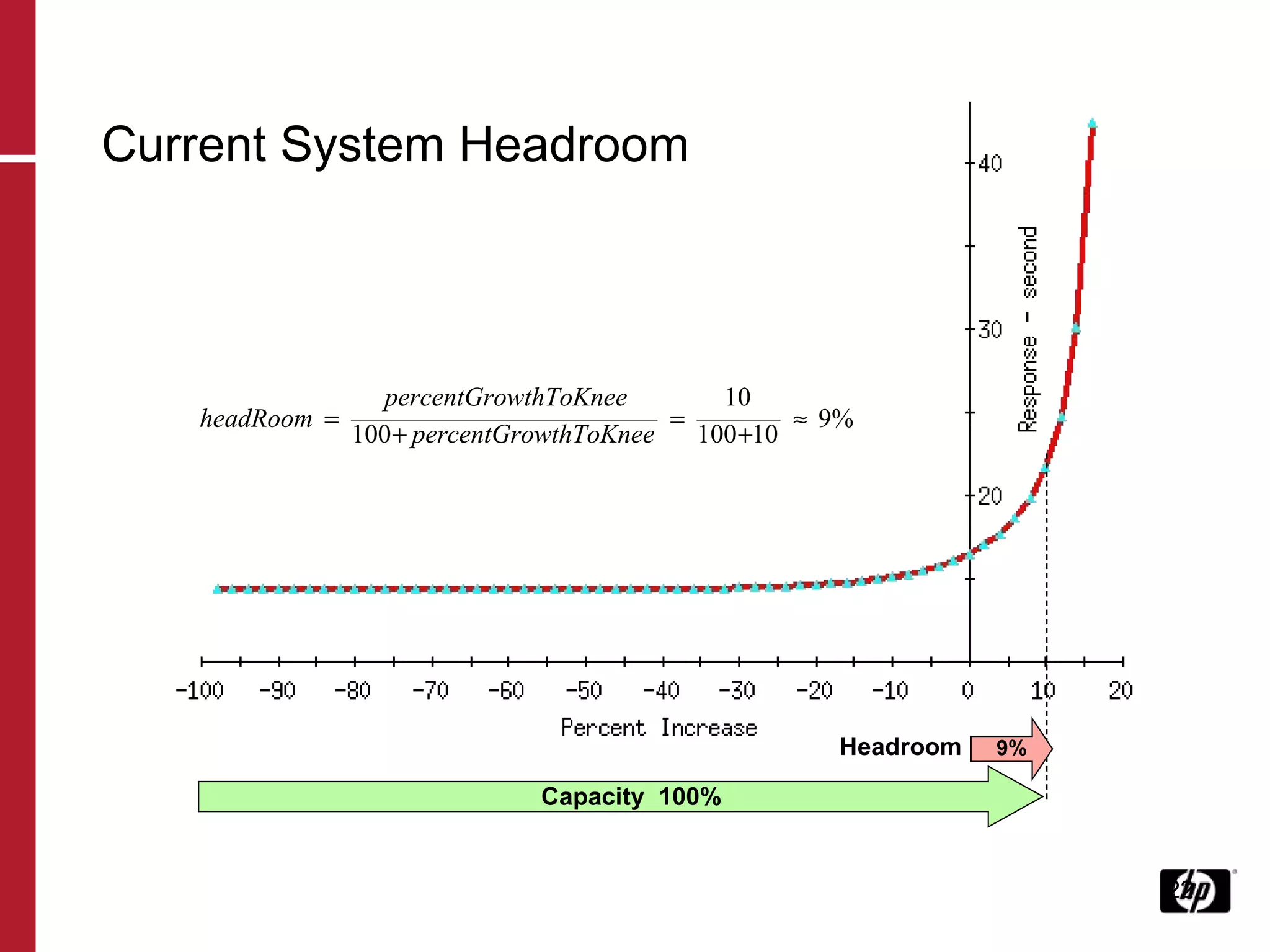

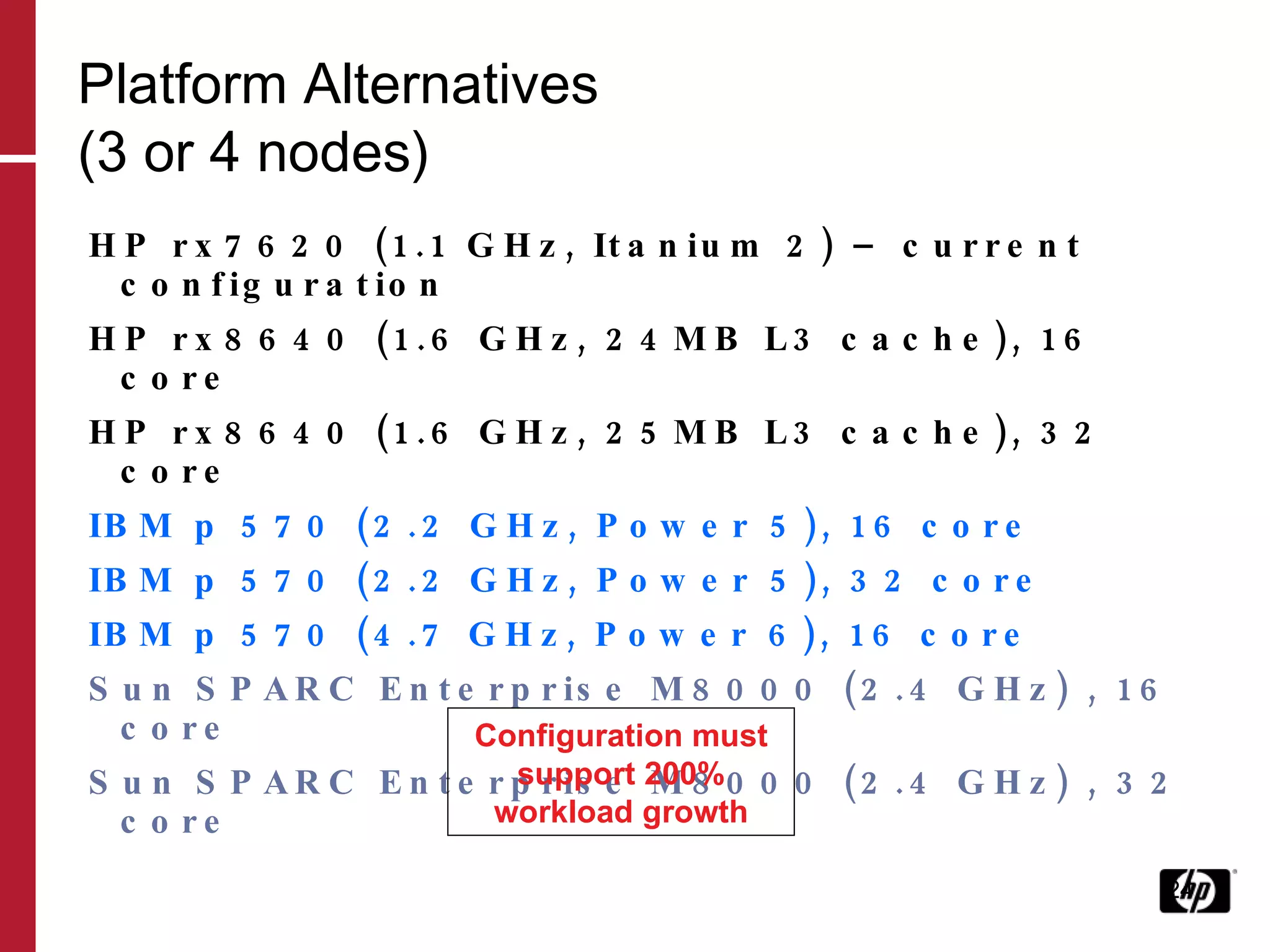

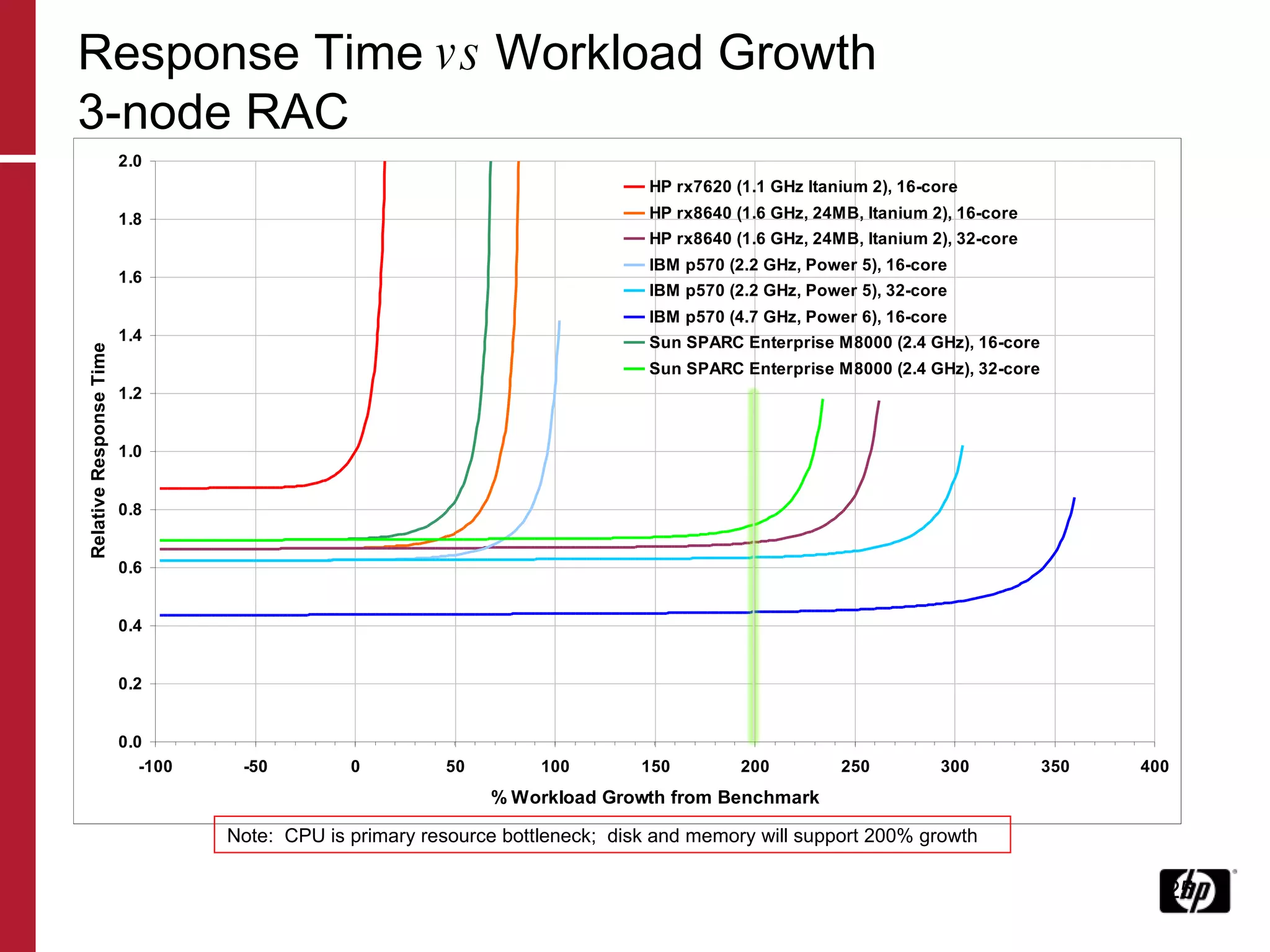

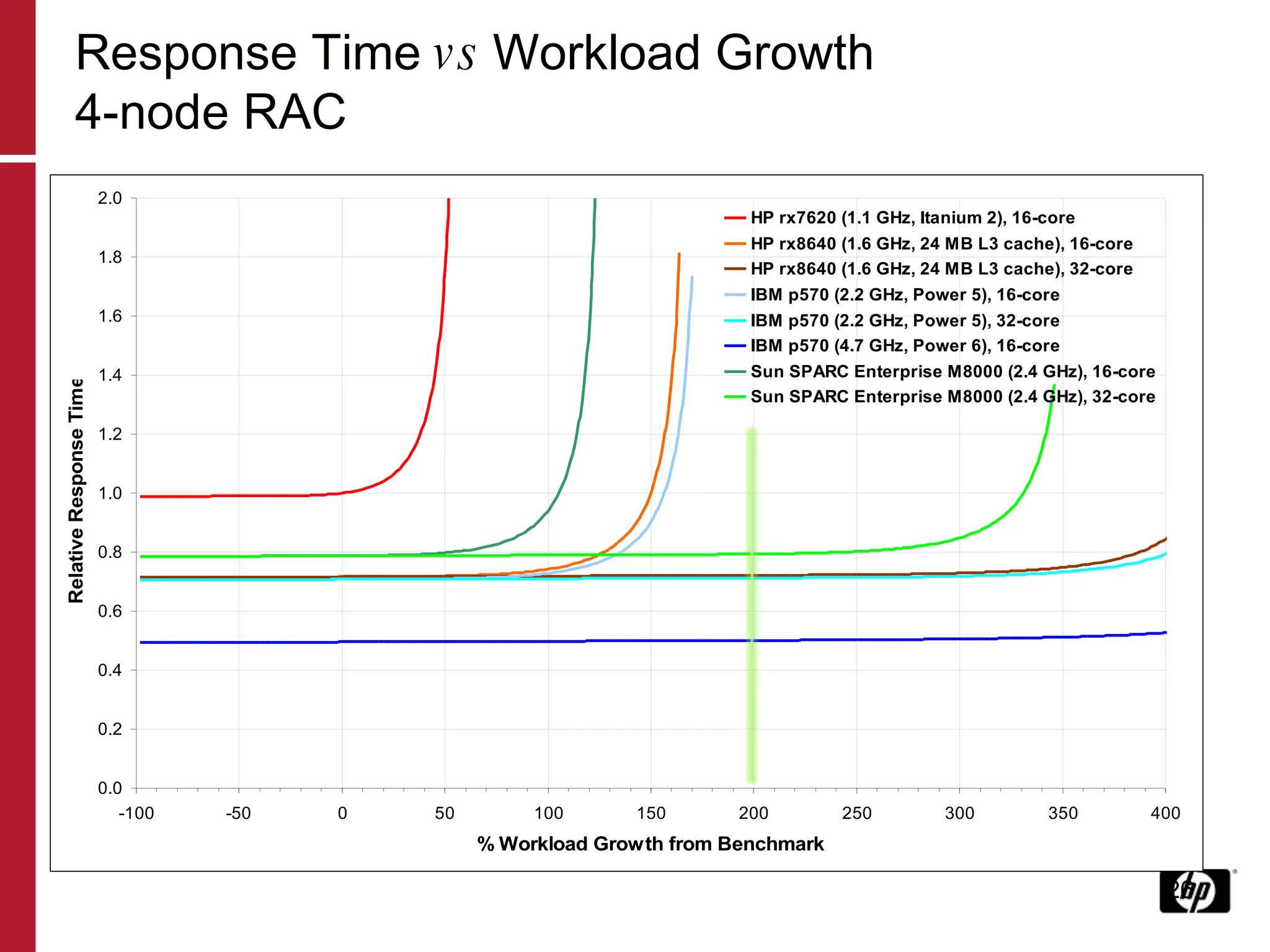

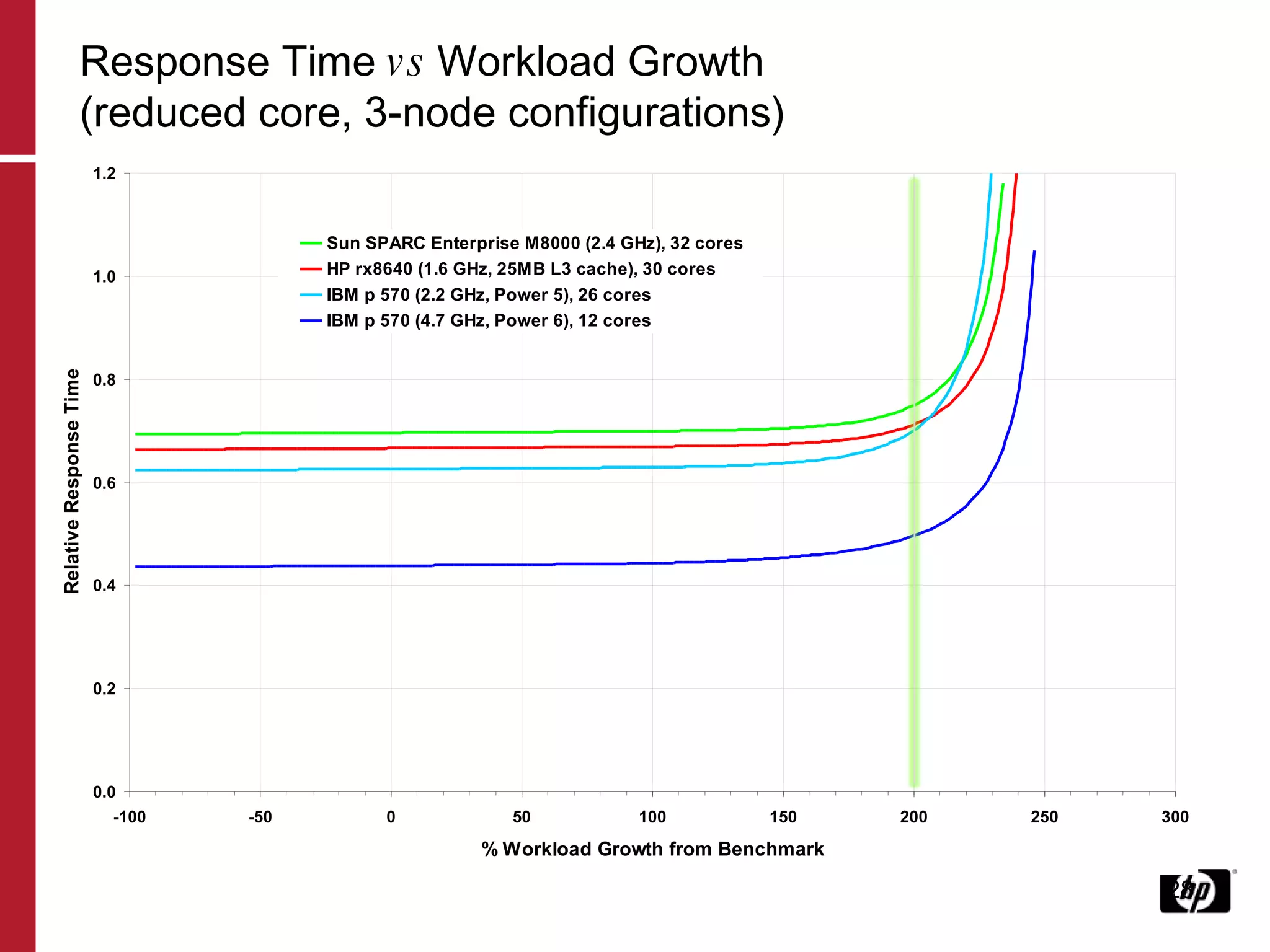

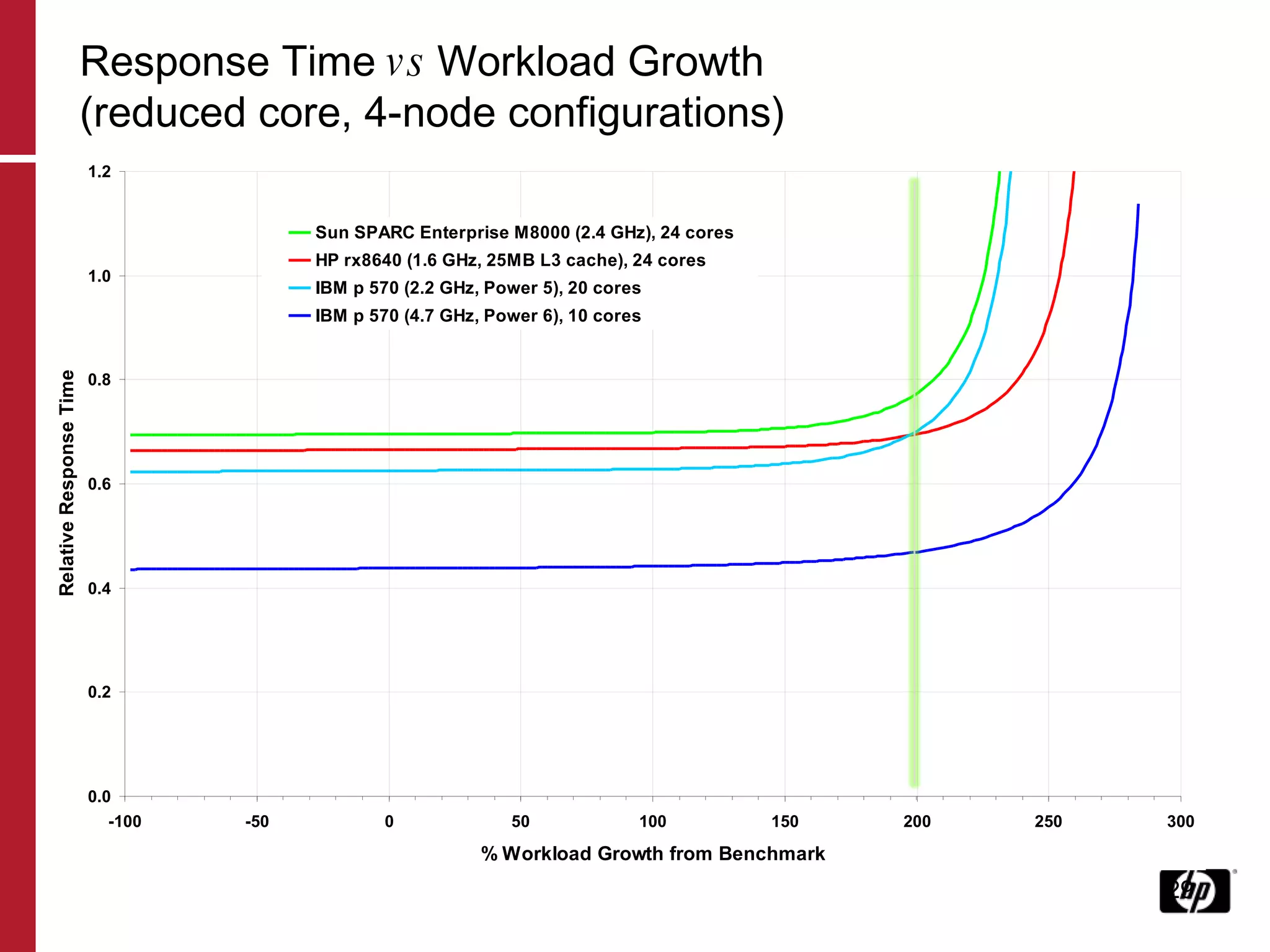

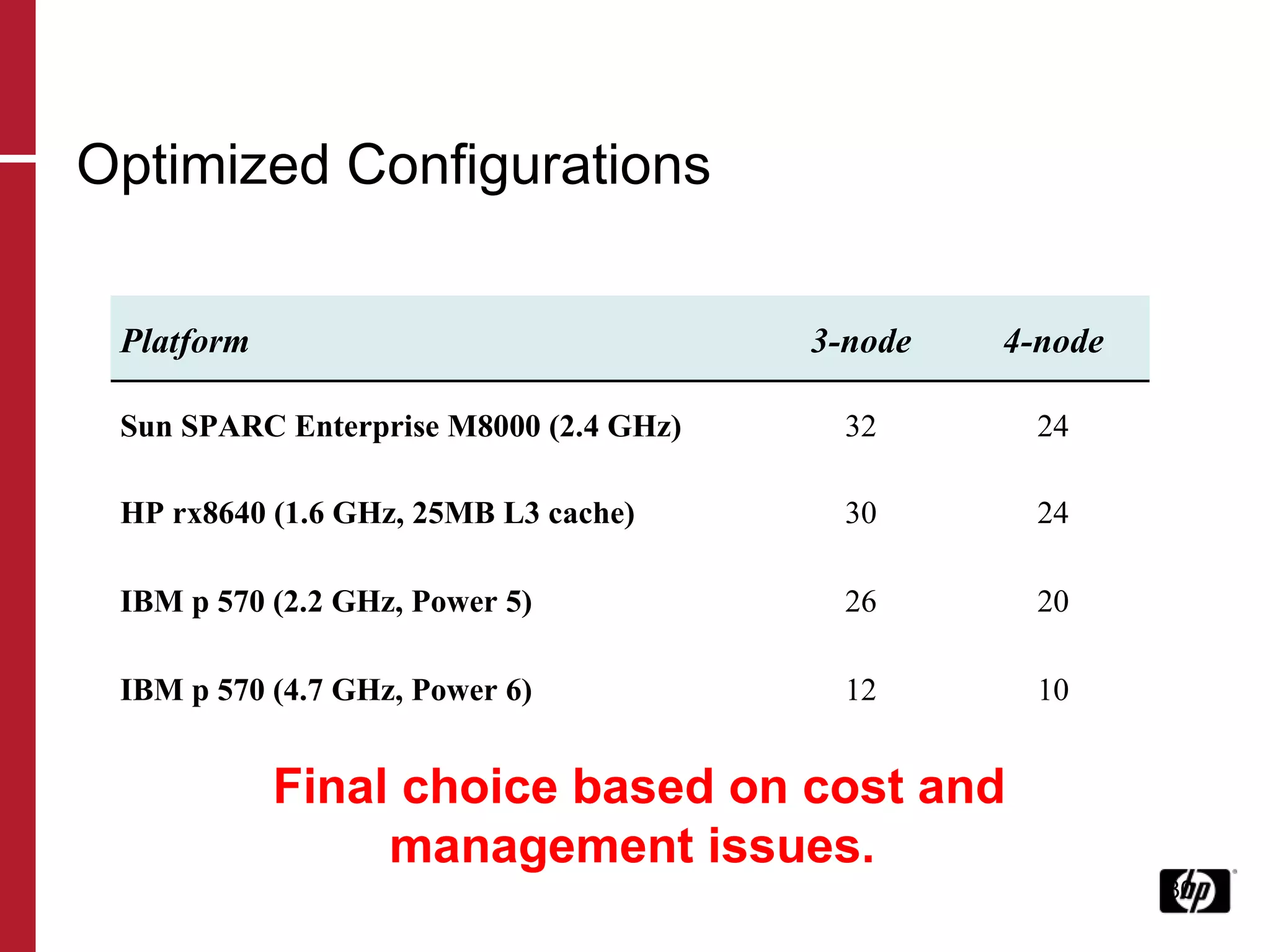

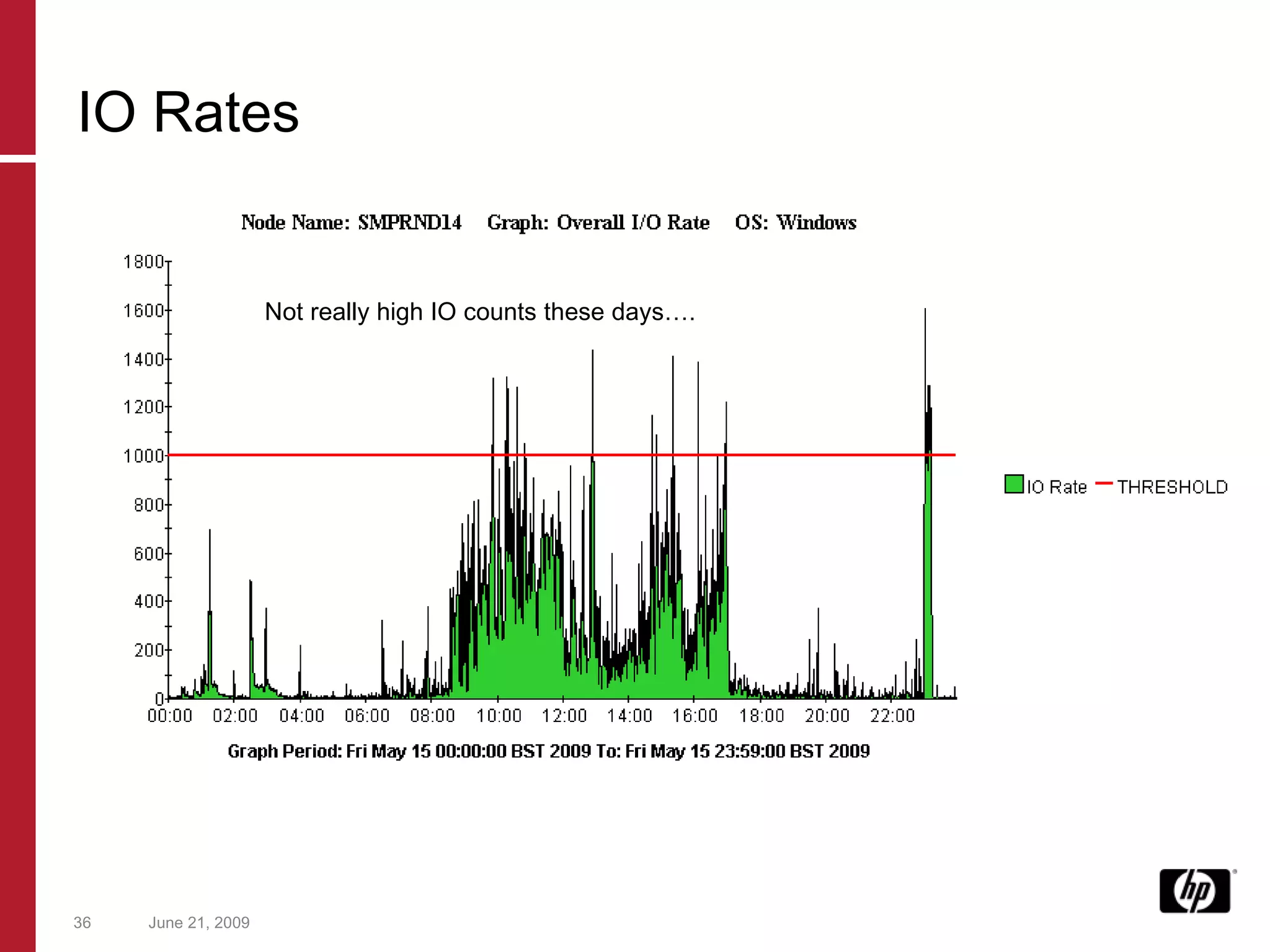

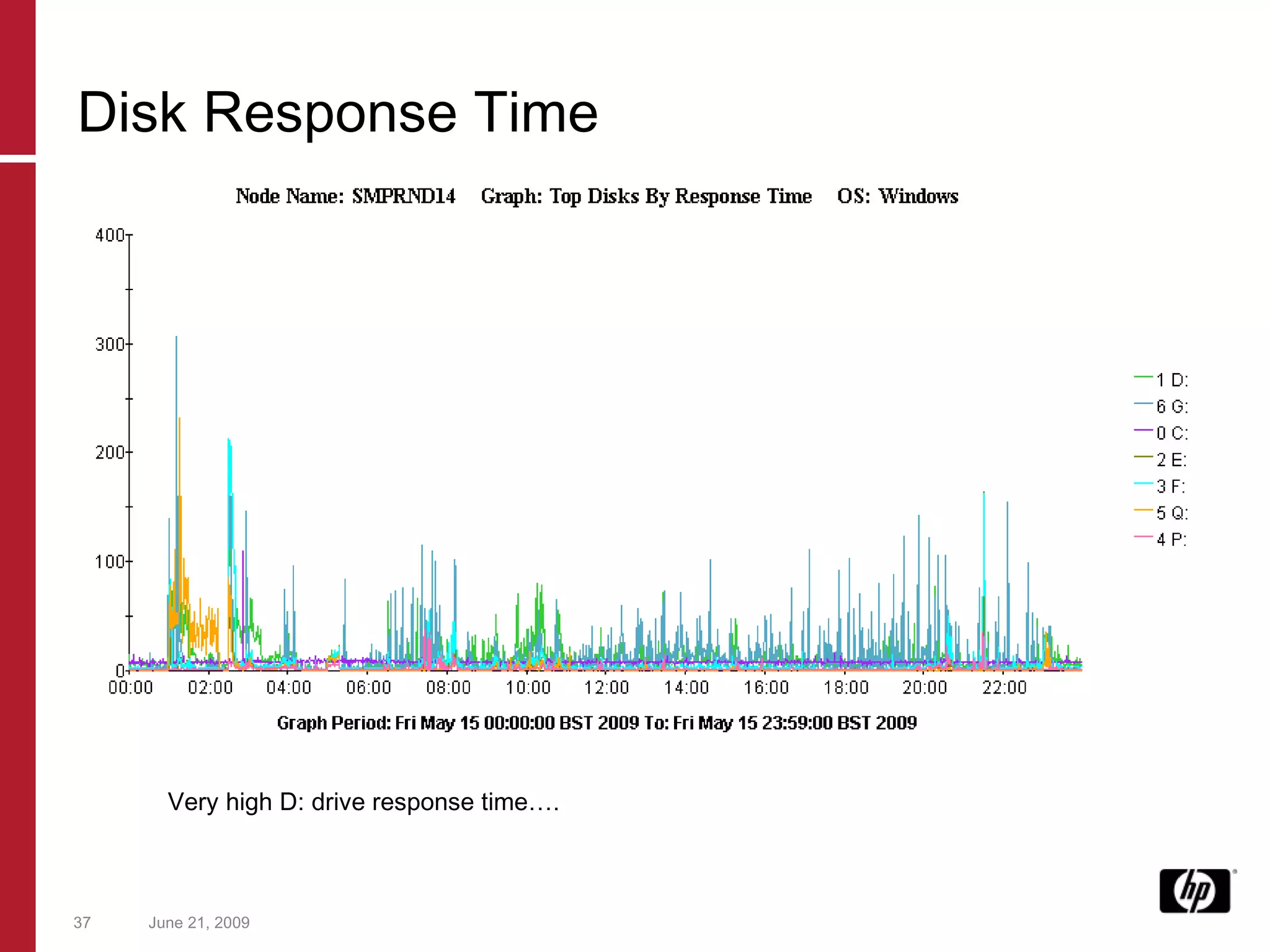

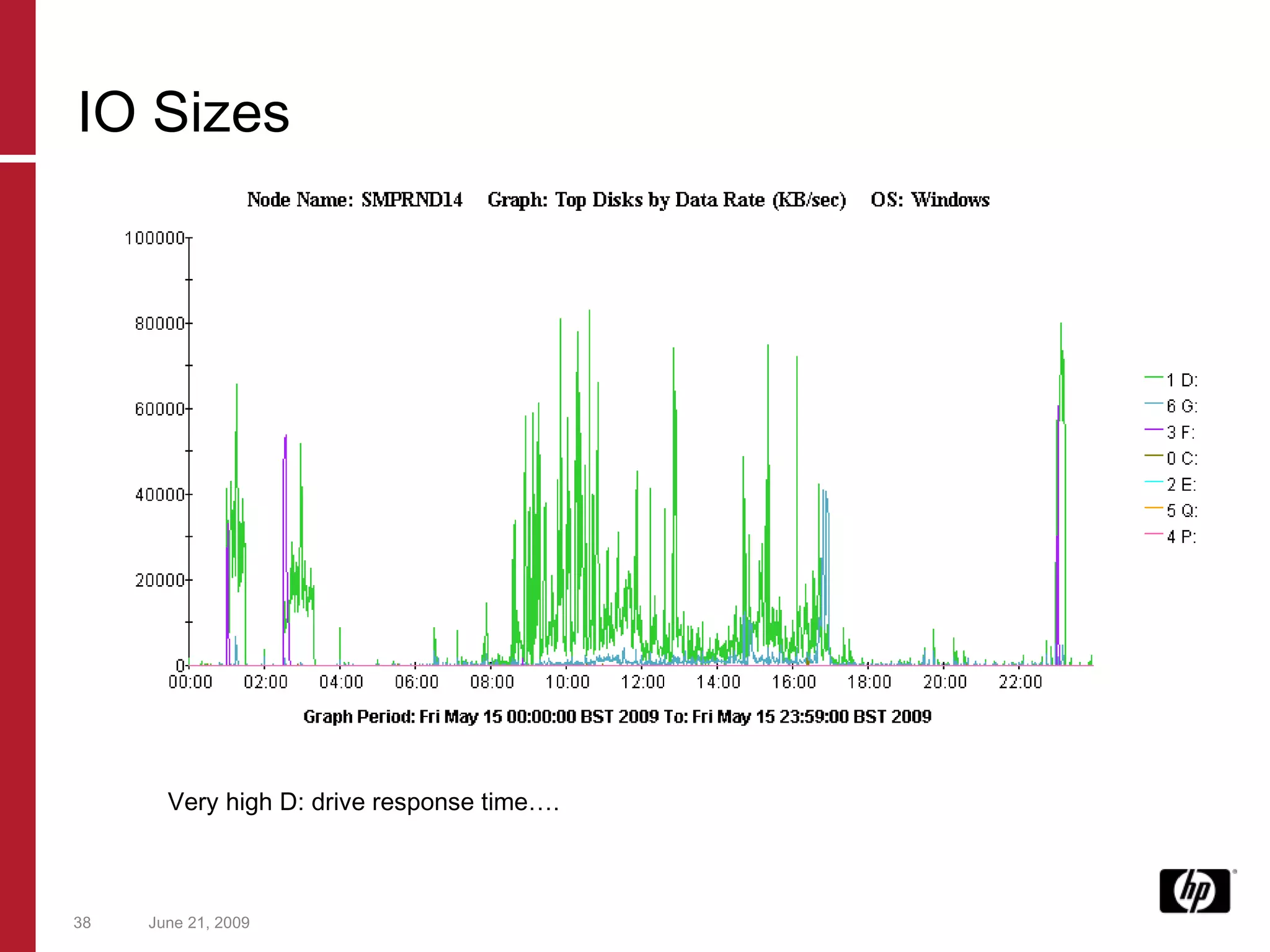

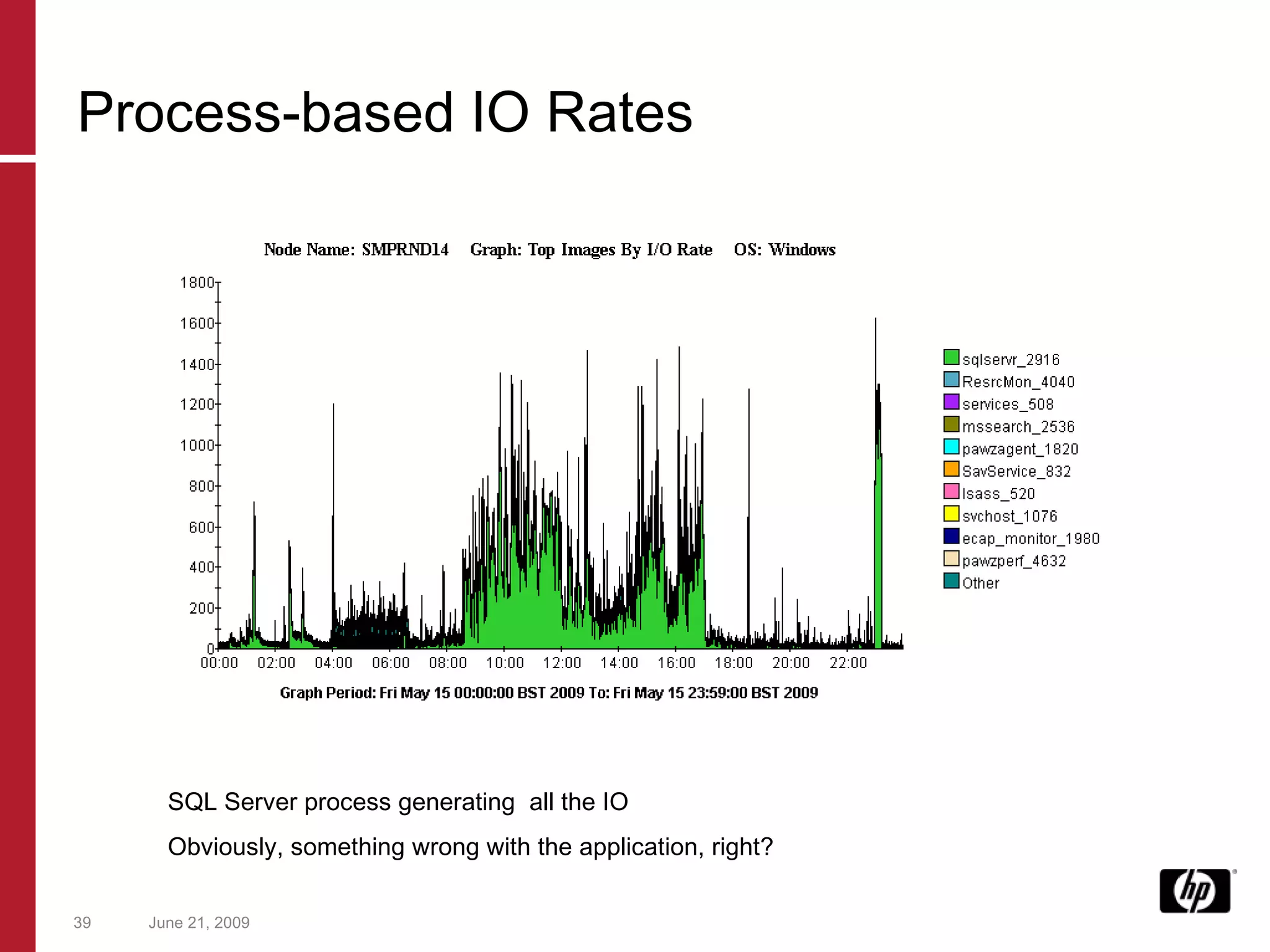

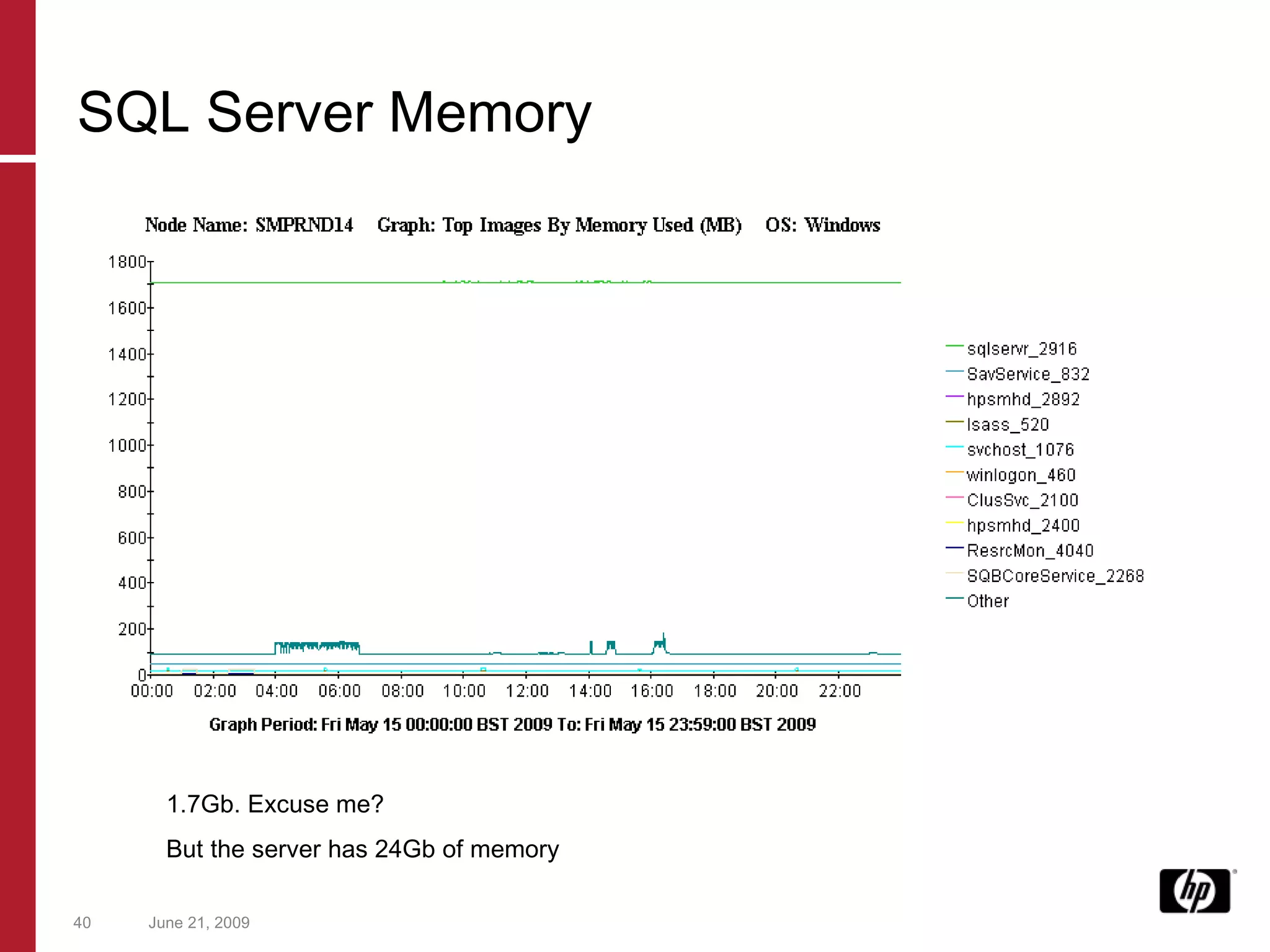

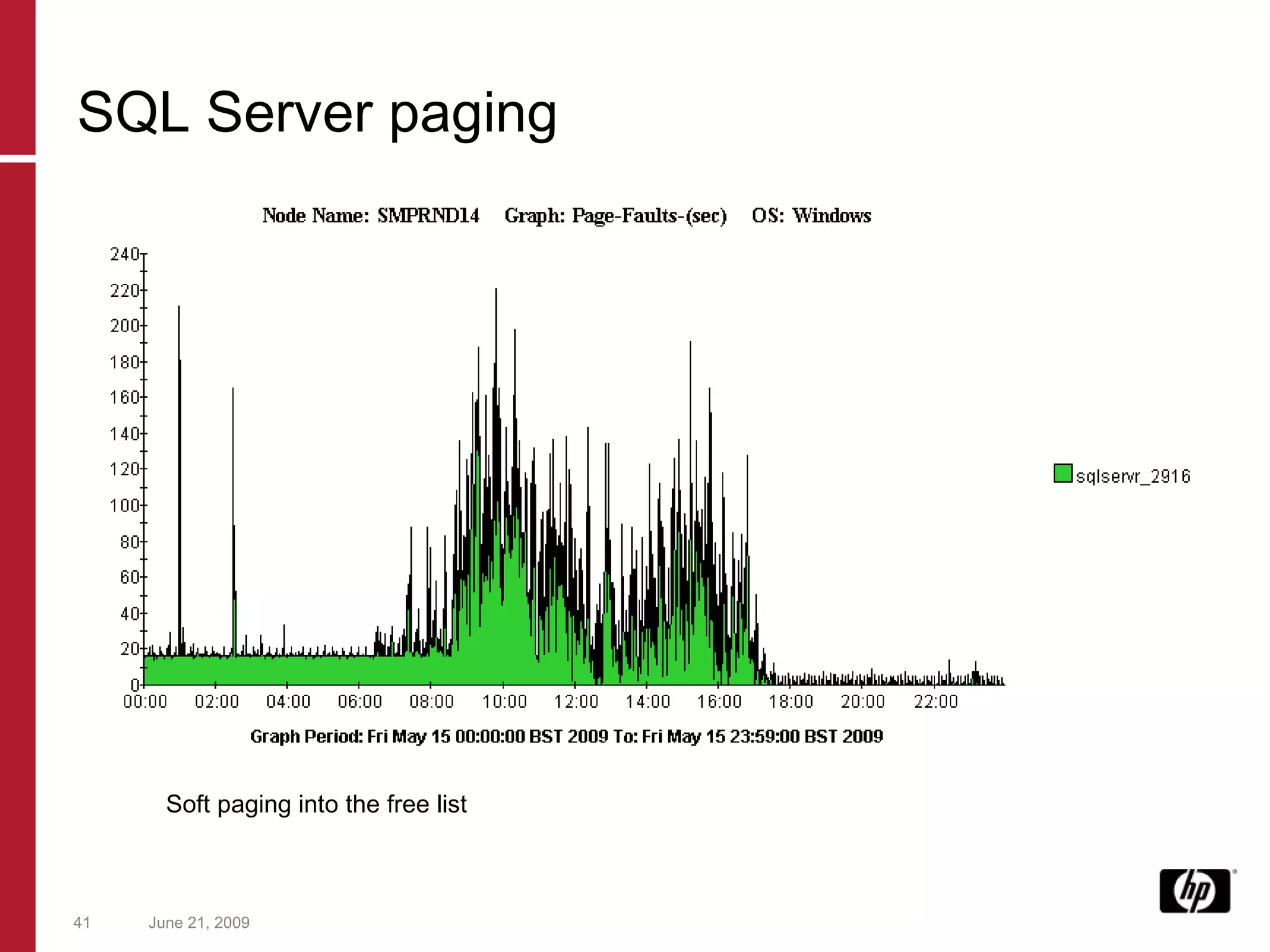

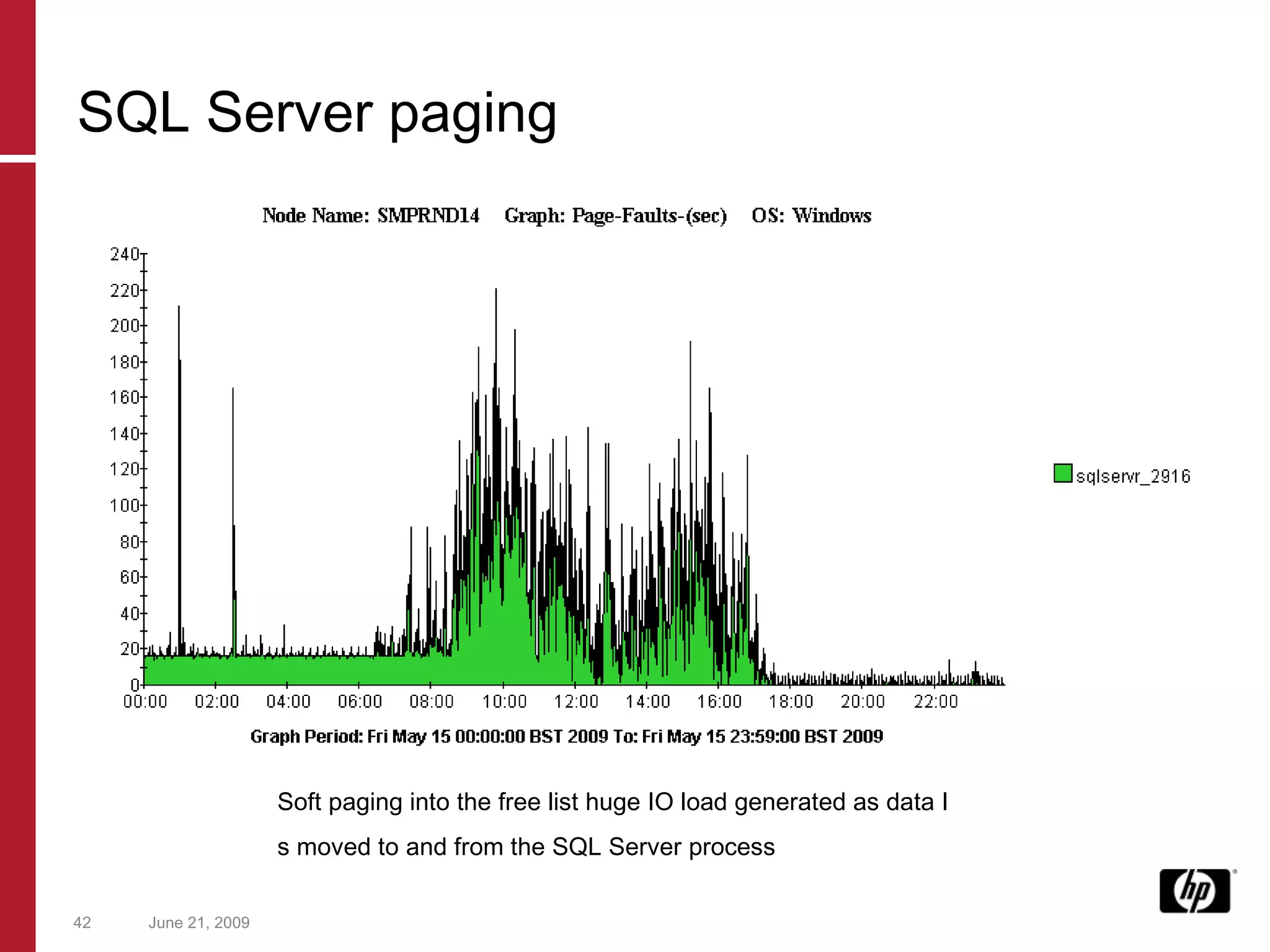

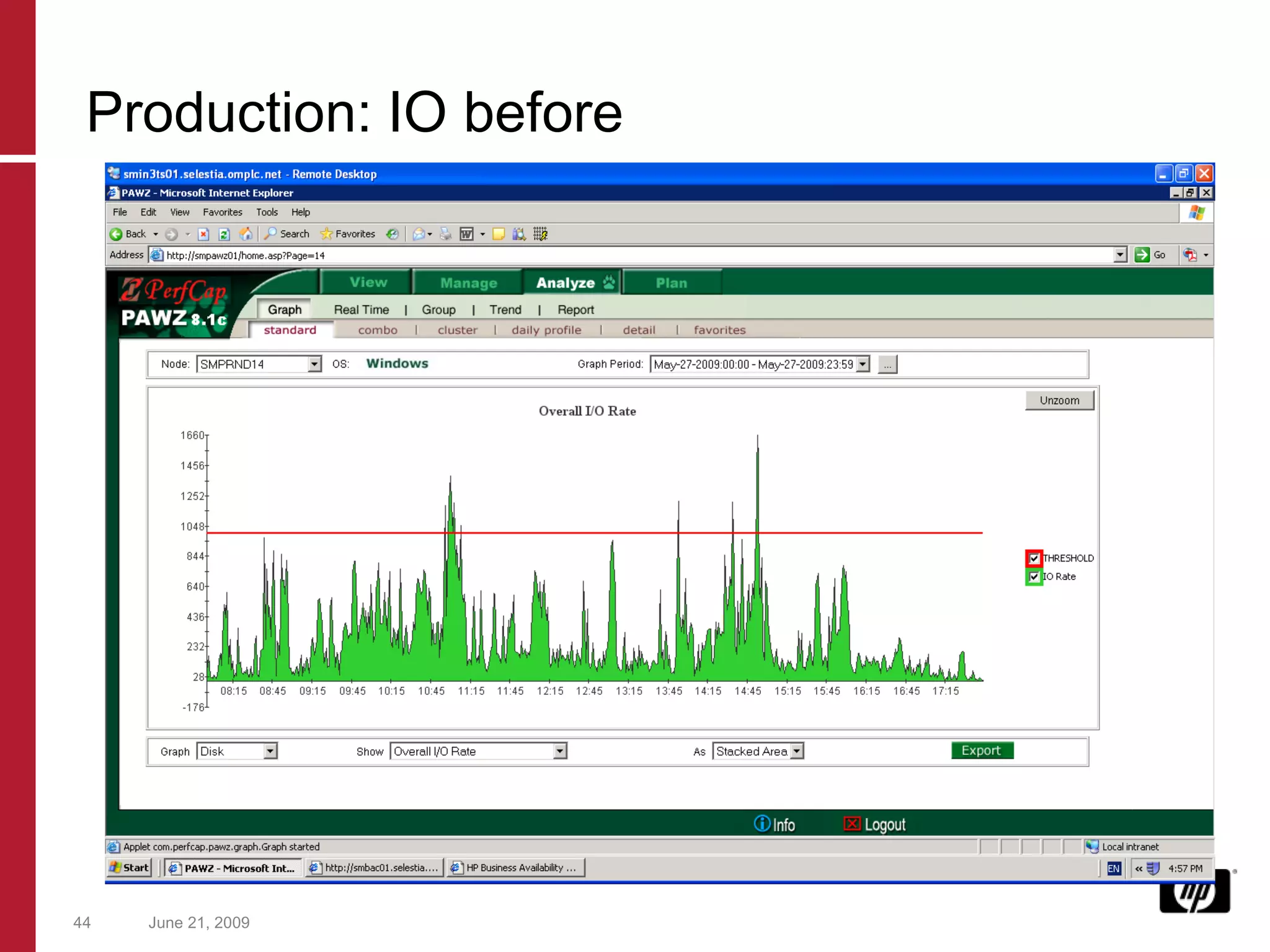

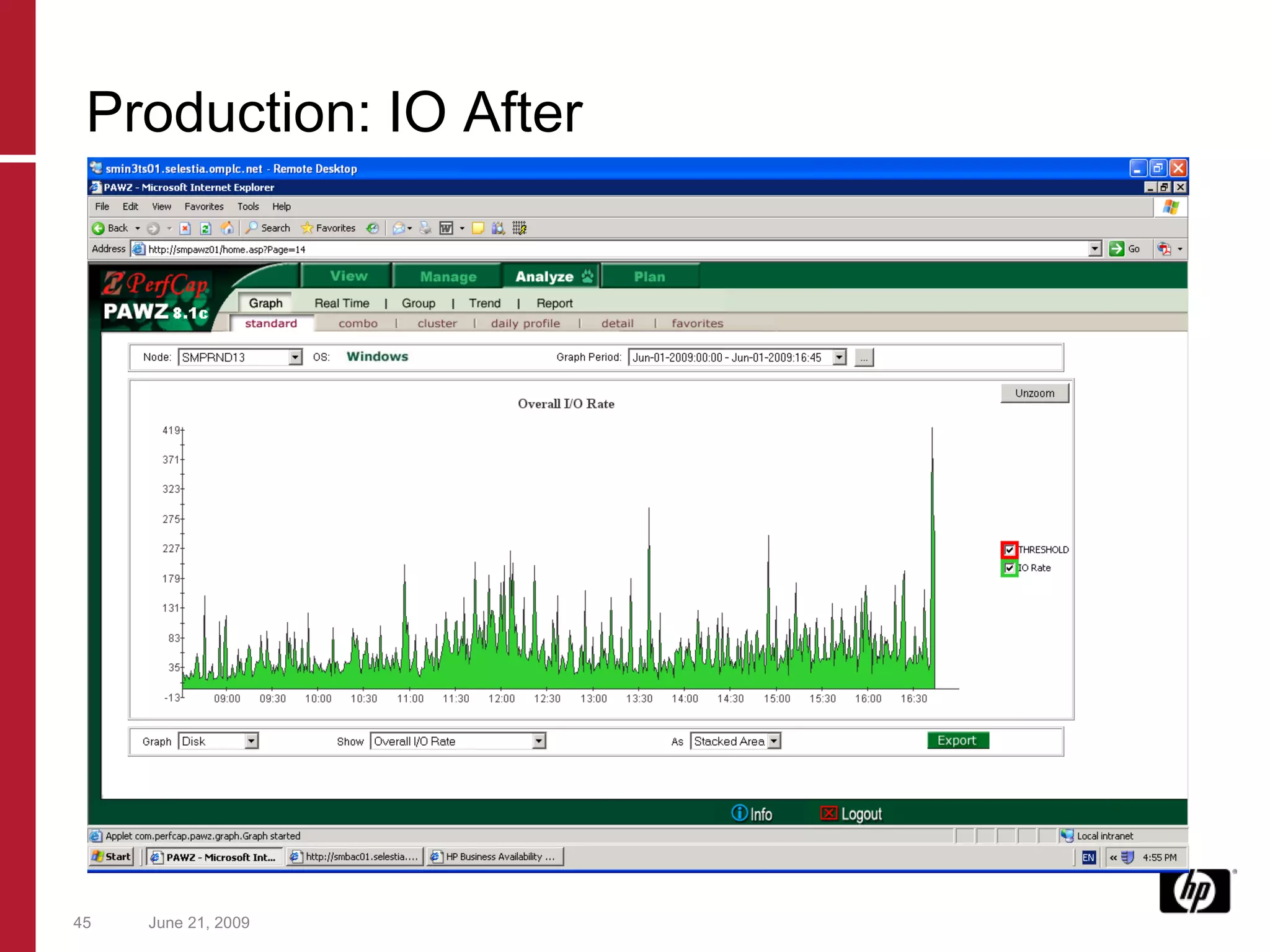

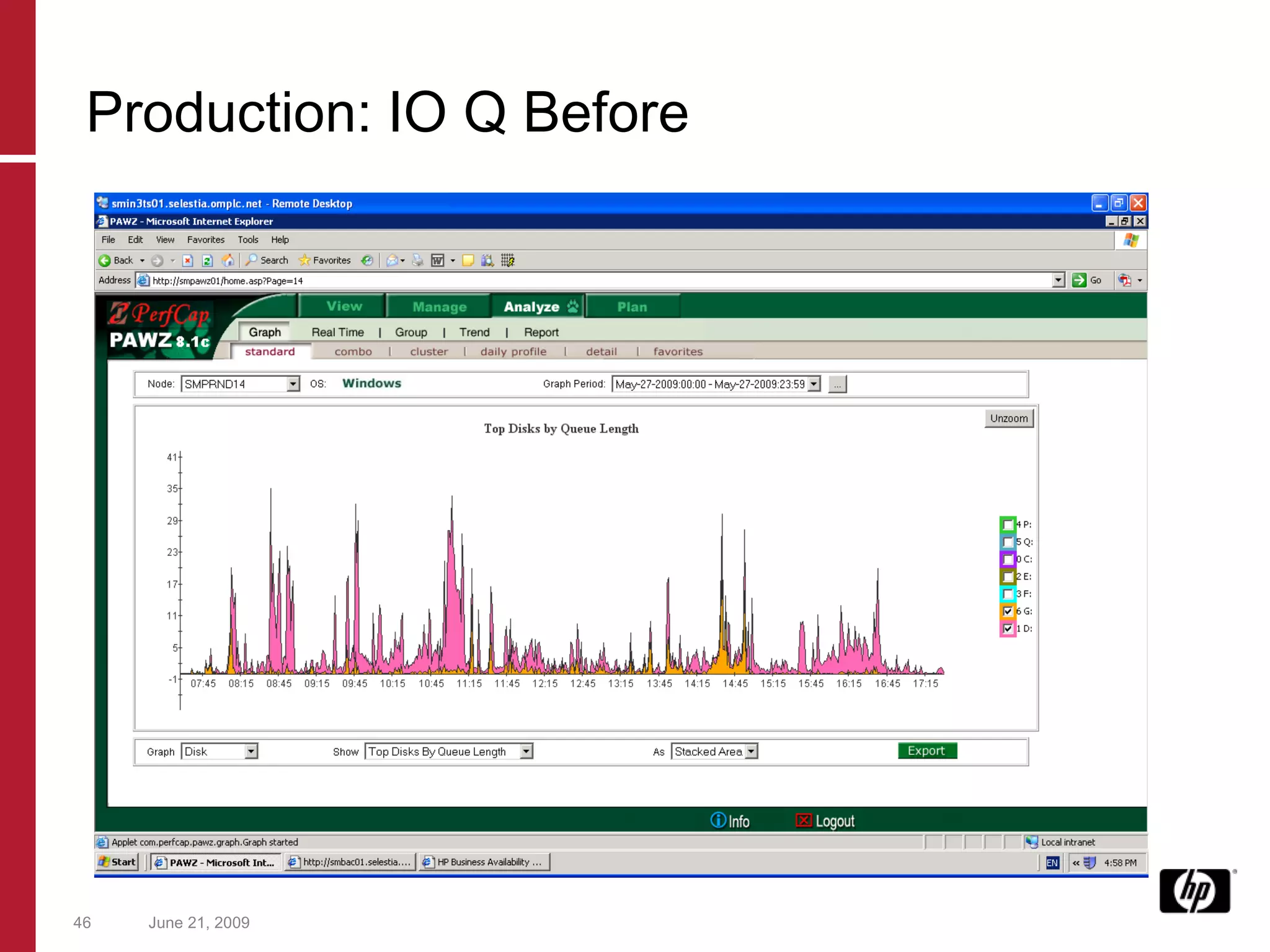

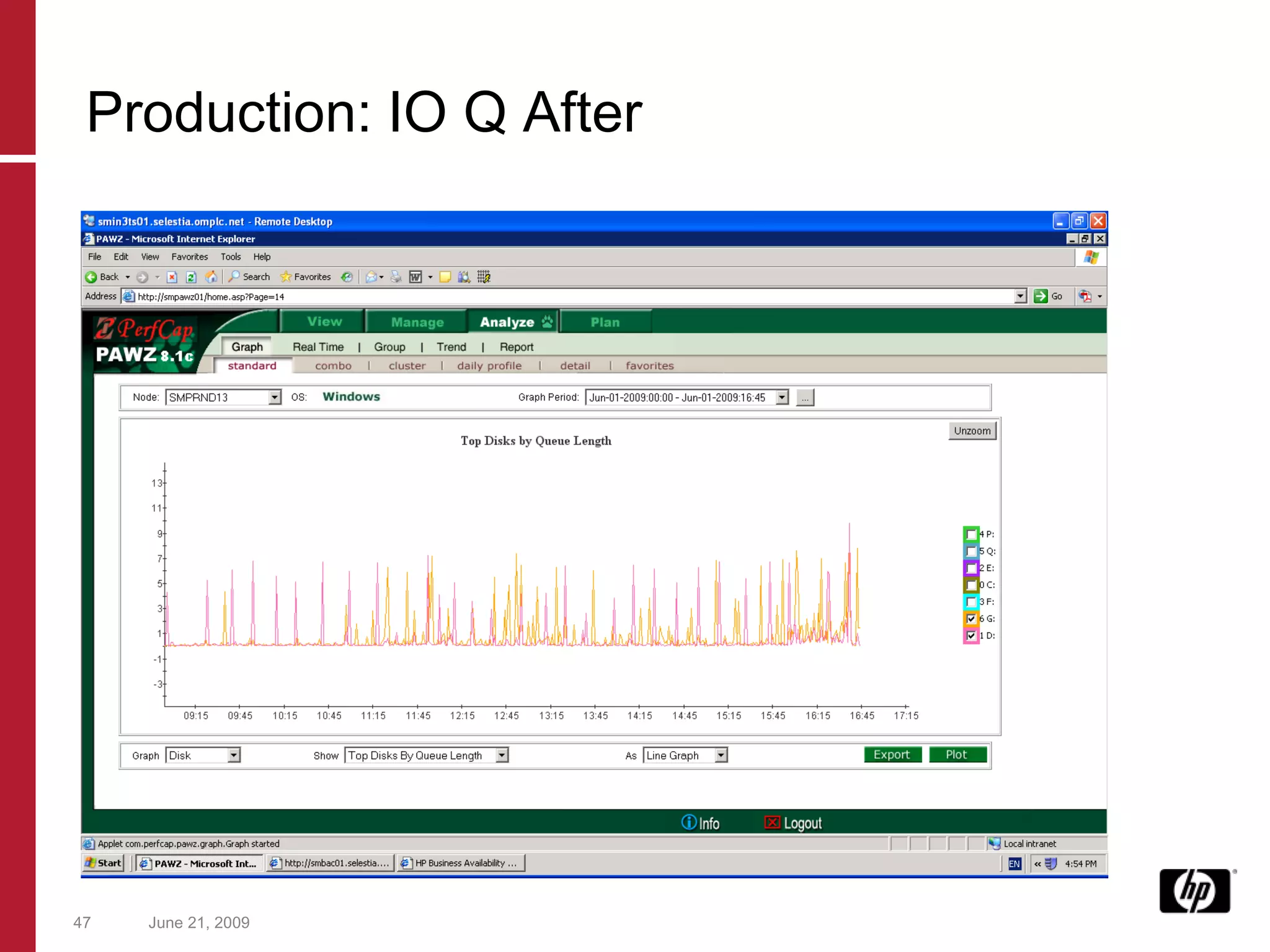

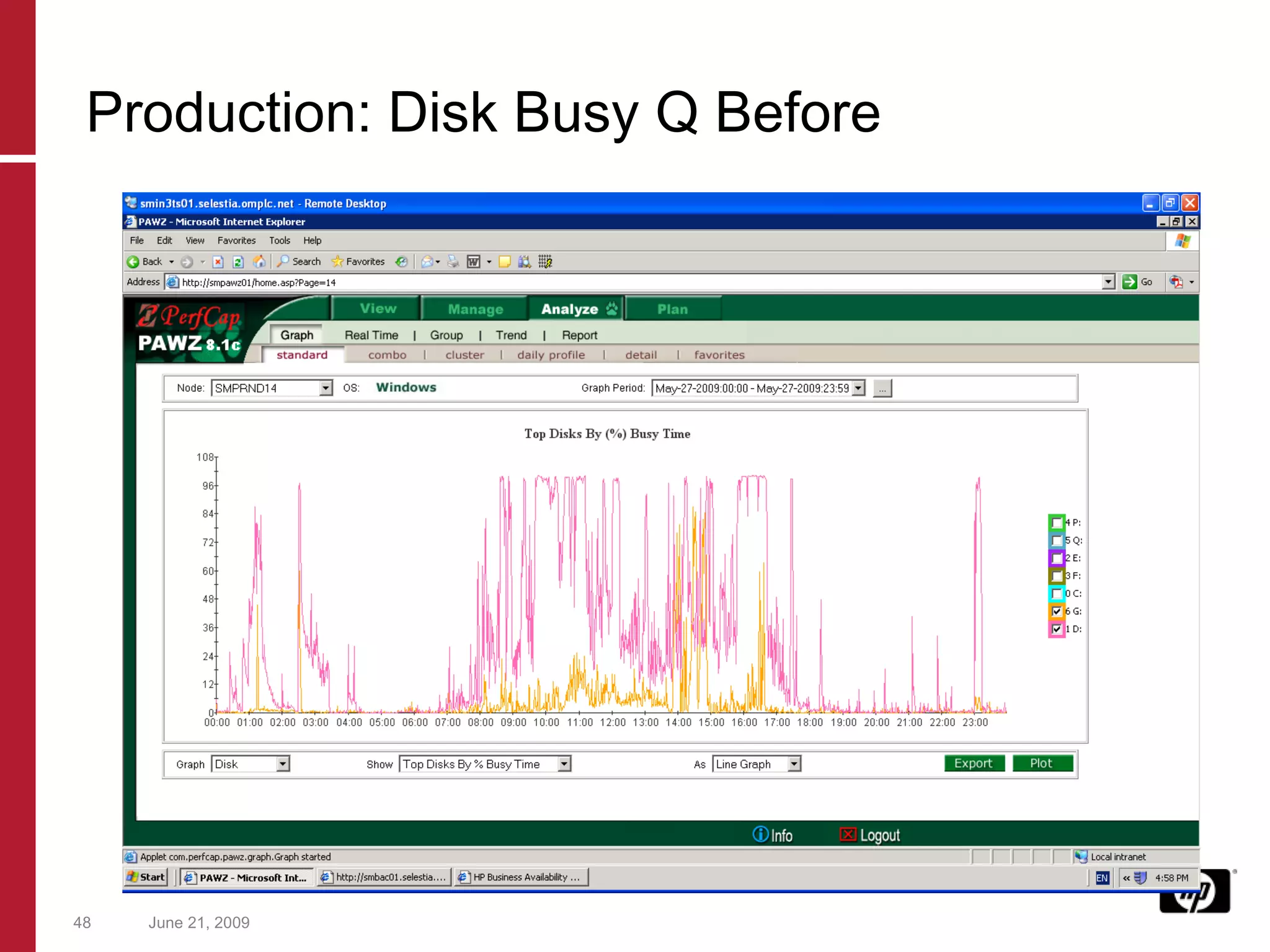

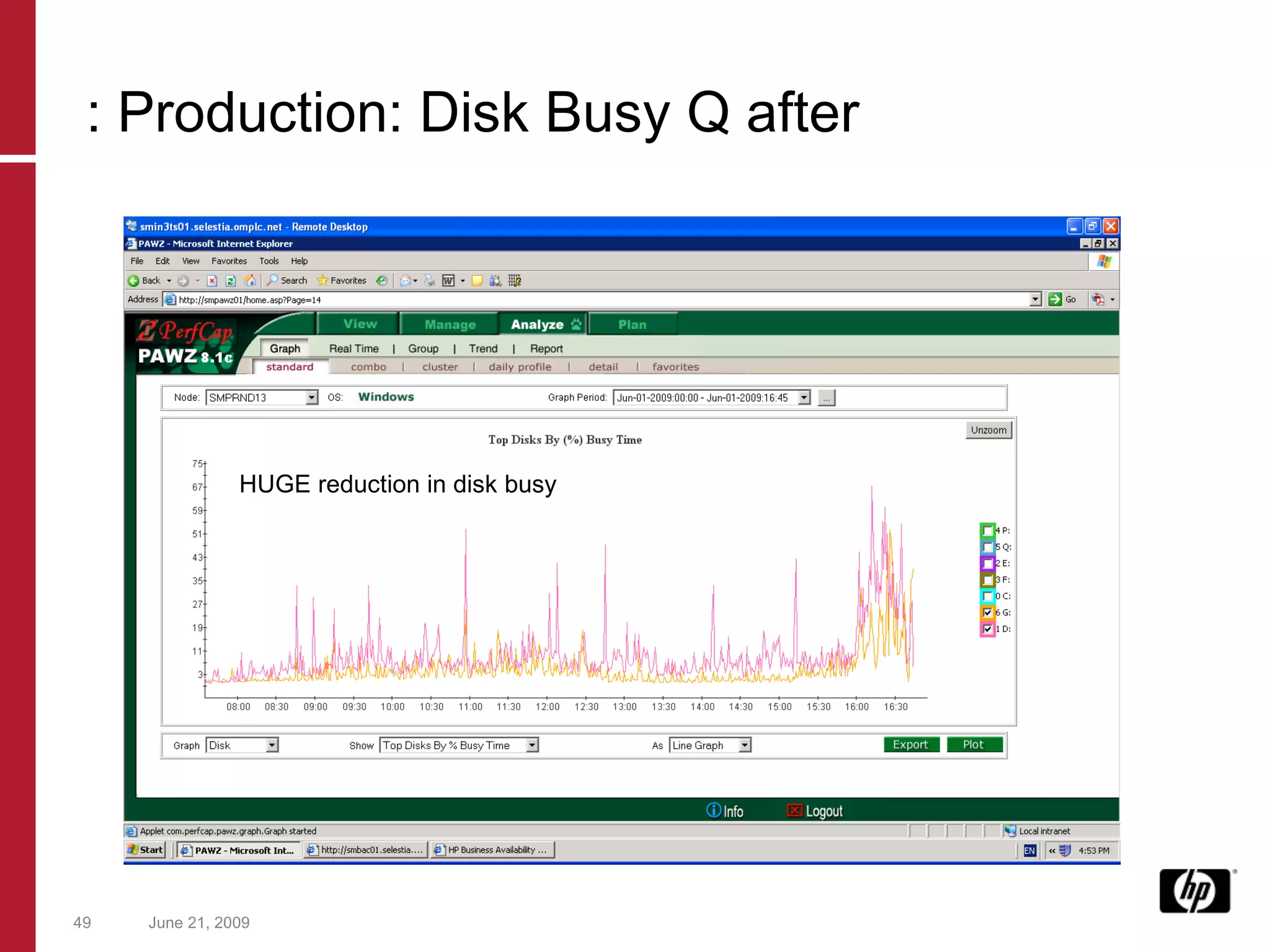

The presentation shows how capacity planning and performance analysis can improve system performance, using two case studies: capacity planning for an Oracle RAC database, and performance analysis of a SQL Server application running on HP blades. In the Oracle case, several platform options were evaluated and optimized configurations were identified. In the SQL Server case, enabling AWE (Address Windowing Extensions) resolved soft paging and reduced response times by improving memory usage. The lessons learned highlight the challenges of using performance tools and the need for better fault detection and data presentation.

![Want to know more? Booth Number 631, http://www.perfcap.com](https://image.slidesharecdn.com/hptf2240final-124562326937-phpapp02/75/Hptf-2240-Final-53-2048.jpg)